“Perceptual error optimization for Monte Carlo animation rendering” by Korać, Salaün, Georgiev, Grittmann, Slusallek, et al. …

Title:

- Perceptual error optimization for Monte Carlo animation rendering

Session/Category Title: Technical Papers Fast-Forward

Abstract:

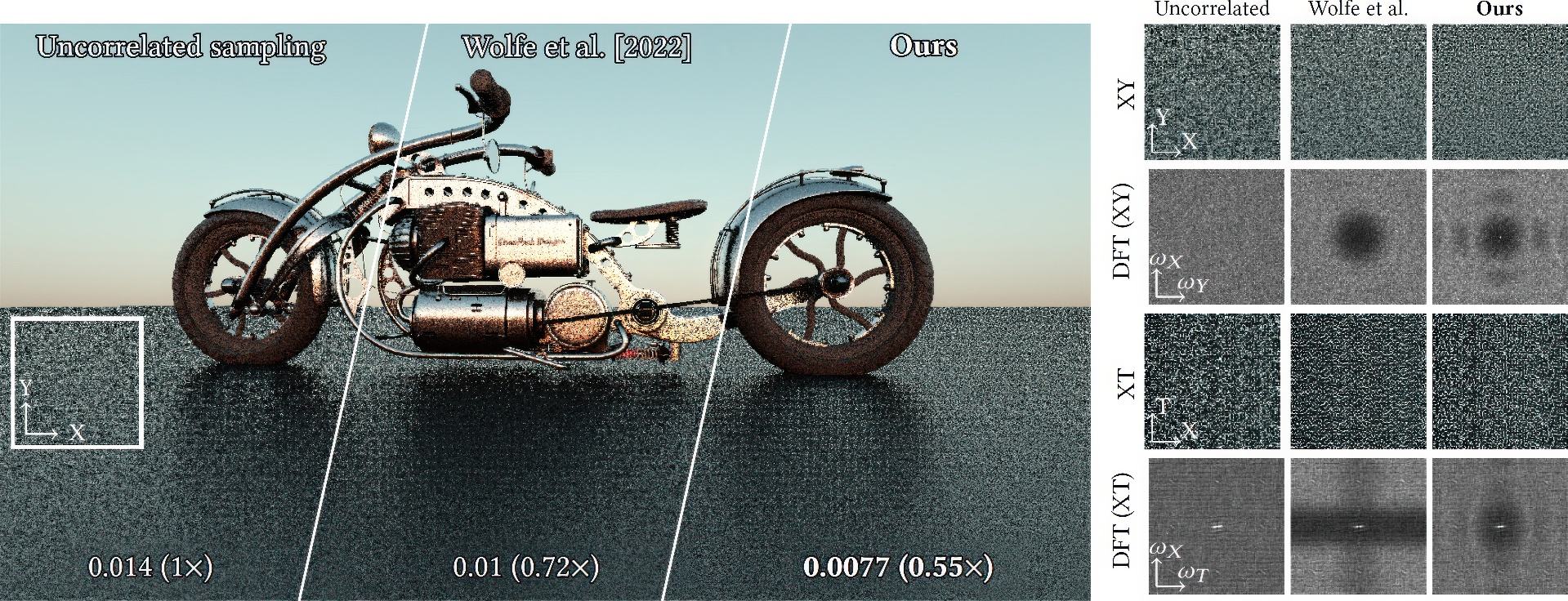

Independently estimating individual pixel values in Monte Carlo rendering results in a perceptually sub-optimal white-noise distribution of error in image space. Recent works have shown that perceptual fidelity can be improved significantly by distributing pixel error as blue noise instead. Most such works have focused on static images, ignoring the temporal perceptual effects of animation display. We extend prior formulations to simultaneously consider the spatial and temporal domains, and perform an analysis to motivate a perceptually better spatio-temporal error distribution. We then propose a practical error optimization algorithm for spatio-temporal rendering and demonstrate its effectiveness in various configurations.
