“Anything to Glyph: Artistic Font Synthesis via Text-to-Image Diffusion Model” by Wang, Wu, Liu, Li, Meng, et al. …

Conference:

Type(s):

Title:

- Anything to Glyph: Artistic Font Synthesis via Text-to-Image Diffusion Model

Session/Category Title: Creative Expression

Presenter(s)/Author(s):

Abstract:

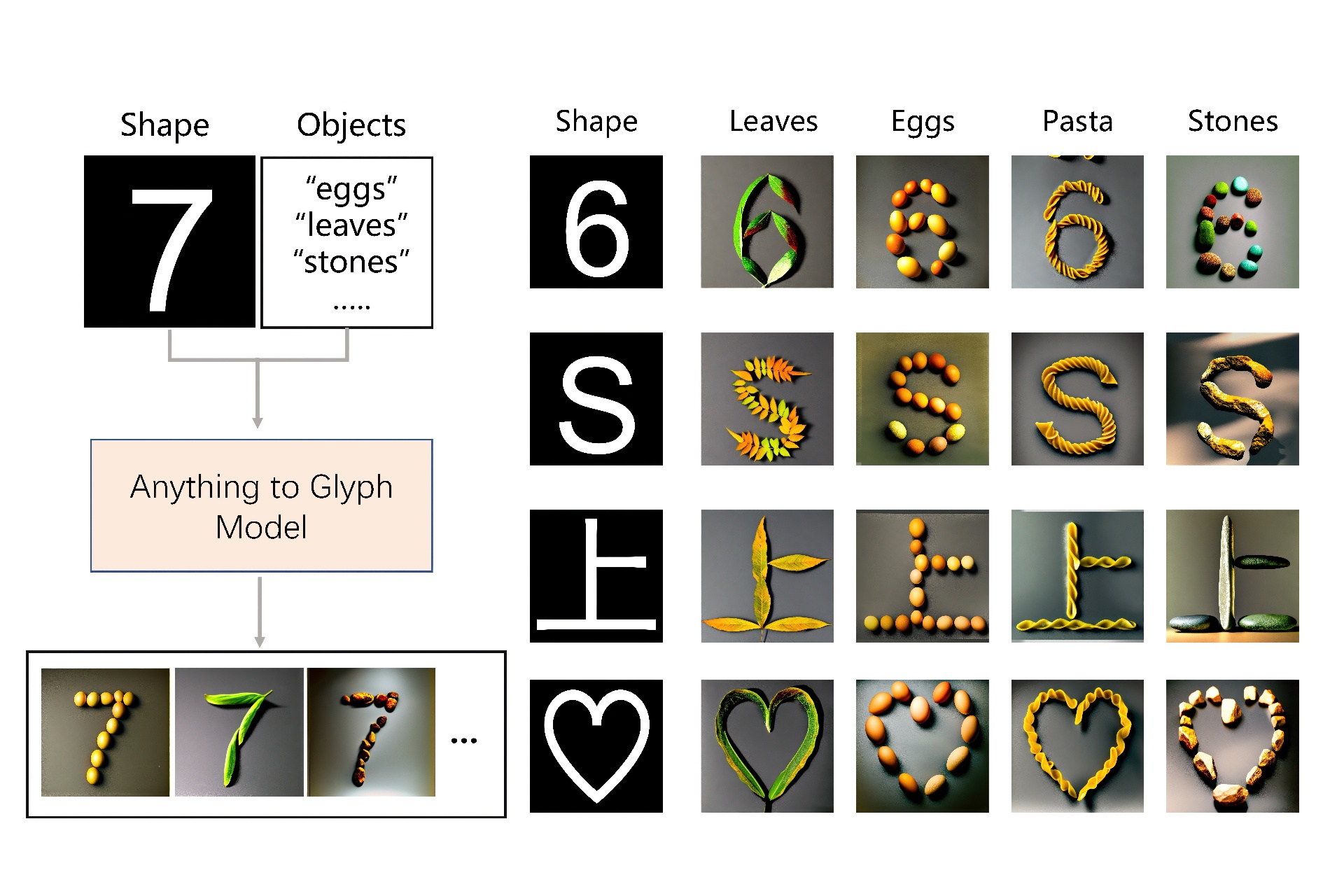

The automatic generation of artistic fonts is a challenging task that attracts many research interests. Previous methods specifically focus on glyph or texture style transfer. However, we often come across creative fonts composed of objects in posters or logos. These fonts have proven to be a challenge for existing methods as they struggle to generate similar designs. This paper proposes a novel method for generating creative artistic fonts using a pre-trained text-to-image diffusion model. Our model takes a shape image and a prompt describing an object as input and generates an artistic glyph image consisting of such objects. Specifically, we introduce a novel heatmap-based weak position constraint method to guide the positioning of objects in the generated image, and we also propose the Latent Space Semantic Augmentation Module that improves other information while constraining object position. Our approach is unique in that it can preserve the object’s original shape while constraining its position. And our training method requires only a small quantity of generated data, making it an efficient unsupervised learning approach. Experimental results demonstrate that our method can generate various glyphs, including Chinese, English, Japanese, and symbols, using different objects. We also conducted qualitative and quantitative comparisons with various position control methods for the diffusion model. The results indicate that our approach outperforms other methods in terms of visual quality, innovation, and user evaluation.

References:

[1]

Omri Avrahami, Dani Lischinski, and Ohad Fried. 2022. Blended diffusion for text-driven editing of natural images. In IEEE Conference on Computer Vision and Pattern Recognition. 18208–18218.

[2]

Kyungjune Baek, Yunjey Choi, Youngjung Uh, Jaejun Yoo, and Hyunjung Shim. 2021. Rethinking the truly unsupervised image-to-image translation. In IEEE/CVF International Conference on Computer Vision. 14154–14163.

[3]

Xu Chen, Lei Wu, 2021. MLFont: Few-Shot Chinese Font Generation via Deep Meta-Learning. In ICMR. 37–45.

[4]

Jooyoung Choi, Sungwon Kim, Yonghyun Jeong, Youngjune Gwon, and Sungroh Yoon. 2021. ILVR: Conditioning Method for Denoising Diffusion Probabilistic Models. In 2021 IEEE/CVF International Conference on Computer Vision. 14347–14356. https://doi.org/10.1109/ICCV48922.2021.01410

[5]

DeepFloydLab. 2023. Deepfloyd if. (2023). https://github.com/deep-floyd/IF

[6]

Prafulla Dhariwal and Alexander Nichol. 2021. Diffusion models beat gans on image synthesis. Conference and Workshop on Neural Information Processing Systems 34 (2021).

[7]

Patrick Esser, Robin Rombach, and Bjorn Ommer. 2021. Taming Transformers for High-Resolution Image Synthesis. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 12873–12883.

[8]

Yue Gao, Yuan Guo, Zhouhui Lian, Yingmin Tang, and Jianguo Xiao. 2019. Artistic glyph image synthesis via one-stage few-shot learning. ACM Transactions on Graphics (TOG) 38, 6 (2019), 1–12.

[9]

Ian Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville, and Yoshua Bengio. 2020. Generative adversarial networks. Commun. ACM 63, 11 (2020), 139–144.

[10]

Jonathan Ho, Ajay Jain, and Pieter Abbeel. 2020. Denoising diffusion probabilistic models. Advances in Neural Information Processing Systems 33 (2020), 6840–6851.

[11]

Jonathan Ho and Tim Salimans. 2021. Classifier-Free Diffusion Guidance. (2021). https://openreview.net/forum?id=qw8AKxfYbI

[12]

Shir Iluz, Yael Vinker, Amir Hertz, Daniel Berio, Daniel Cohen-Or, and Ariel Shamir. 2023. Word-as-image for semantic typography. arXiv preprint arXiv:2303.01818 (2023).

[13]

Phillip Isola, Jun-Yan Zhu, Tinghui Zhou, and Alexei A Efros. 2017. Image-to-image translation with conditional adversarial networks. In IEEE Conference on Computer Vision and Pattern Recognition. 1125–1134.

[14]

Yue Jiang, Zhouhui Lian, Yingmin Tang, and Jianguo Xiao. 2017. DCFont: an end-to-end deep chinese font generation system. SIGGRAPH Asia 2017 Technical Briefs (2017).

[15]

Tero Karras, Samuli Laine, and Timo Aila. 2019. A style-based generator architecture for generative adversarial networks. In IEEE Conference on Computer Vision and Pattern Recognition. 4401–4410.

[16]

Xiang Li, Lei Wu, Xu Chen, Lei Meng, and Xiangxu Meng. 2022. DSE-Net: Artistic Font Image Synthesis via Disentangled Style Encoding. In ICME. 1–6.

[17]

Xiang Li, Lei Wu, Changshuo Wang, Lei Meng, and Xiangxu Meng. 2023b. Compositional Zero-Shot Artistic Font Synthesis. In Proceedings of the Thirty-Second International Joint Conference on Artificial Intelligence, IJCAI-23, Edith Elkind (Ed.). International Joint Conferences on Artificial Intelligence Organization, 1098–1106. https://doi.org/10.24963/ijcai.2023/122 Main Track.

[18]

Yuheng Li, Haotian Liu, Qingyang Wu, Fangzhou Mu, Jianwei Yang, Jianfeng Gao, Chunyuan Li, and Yong Jae Lee. 2023a. Gligen: Open-set grounded text-to-image generation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 22511–22521.

[19]

Cheng Lu 2022a. DPM-Solver++: Fast Solver for Guided Sampling of Diffusion Probabilistic Models. arXiv preprint arXiv:2211.01095 (2022).

[20]

Cheng Lu, Yuhao Zhou, Fan Bao, Jianfei Chen, Chongxuan LI, and Jun Zhu. 2022b. DPM-Solver: A Fast ODE Solver for Diffusion Probabilistic Model Sampling in Around 10 Steps. 35 (2022), 5775–5787. https://proceedings.neurips.cc/paper_files/paper/2022/file/260a14acce2a89dad36adc8eefe7c59e-Paper-Conference.pdf

[21]

Timo Lüddecke and Alexander S Ecker. 2021. Prompt-based multi-modal image segmentation. arXiv preprint arXiv:2112.10003 (2021).

[22]

Haokai Ma, Xiangxian Li, Lei Meng, and Xiangxu Meng. 2021. Comparative Study of Adversarial Training Methods for Cold-Start Recommendation. In Proceedings of the 1st International Workshop on Adversarial Learning for Multimedia (Virtual Event, China) (ADVM ’21). Association for Computing Machinery, New York, NY, USA, 28–34. https://doi.org/10.1145/3475724.3483600

[23]

Chenlin Meng, Yutong He, Yang Song, Jiaming Song, Jiajun Wu, Jun-Yan Zhu, and Stefano Ermon. 2022. SDEdit: Guided Image Synthesis and Editing with Stochastic Differential Equations. (2022). https://openreview.net/forum?id=aBsCjcPu_tE

[24]

Chong Mou, Xintao Wang, Liangbin Xie, Jian Zhang, Zhongang Qi, Ying Shan, and Xiaohu Qie. 2023. T2i-adapter: Learning adapters to dig out more controllable ability for text-to-image diffusion models. arXiv preprint arXiv:2302.08453 (2023).

[25]

Alexander Quinn Nichol and Prafulla Dhariwal. 2021. Improved denoising diffusion probabilistic models. In International Conference on Machine Learning. PMLR, 8162–8171.

[26]

Taesung Park, Ming-Yu Liu, Ting-Chun Wang, and Jun-Yan Zhu. 2019. Semantic image synthesis with spatially-adaptive normalization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2337–2346.

[27]

Dustin Podell, Zion English, Kyle Lacey, Andreas Blattmann, Tim Dockhorn, Jonas Müller, Joe Penna, and Robin Rombach. 2023. SDXL: Improving Latent Diffusion Models for High-Resolution Image Synthesis. arXiv preprint arXiv:2307.01952 (2023).

[28]

Alec Radford, Jong Wook Kim, Chris Hallacy, Aditya Ramesh, Gabriel Goh, Sandhini Agarwal, Girish Sastry, Amanda Askell, Pamela Mishkin, Jack Clark, 2021. Learning transferable visual models from natural language supervision. In International Conference on Machine Learning. PMLR, 8748–8763.

[29]

Aditya Ramesh, Prafulla Dhariwal, Alex Nichol, Casey Chu, and Mark Chen. 2022. Hierarchical text-conditional image generation with clip latents. arXiv preprint arXiv:2204.06125 (2022).

[30]

Robin Rombach, Andreas Blattmann, Dominik Lorenz, Patrick Esser, and Björn Ommer. 2022. High-resolution image synthesis with latent diffusion models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 10684–10695.

[31]

Olaf Ronneberger, Philipp Fischer, and Thomas Brox. 2015. U-net: Convolutional networks for biomedical image segmentation. In MICCAI. Springer, 234–241.

[32]

Chitwan Saharia, William Chan, Huiwen Chang, Chris Lee, Jonathan Ho, Tim Salimans, David Fleet, and Mohammad Norouzi. 2022. Palette: Image-to-image diffusion models. In ACM SIGGRAPH 2022 Conference Proceedings. 1–10.

[33]

Jiaming Song, Chenlin Meng, and Stefano Ermon. 2021a. Denoising Diffusion Implicit Models. (2021). https://openreview.net/forum?id=St1giarCHLP

[34]

Yang Song, Jascha Sohl-Dickstein, Diederik P Kingma, Abhishek Kumar, Stefano Ermon, and Ben Poole. 2021b. Score-Based Generative Modeling through Stochastic Differential Equations. (2021). https://openreview.net/forum?id=PxTIG12RRHS

[35]

Y. Su, X. Chen, L. Wu, and X. Meng. 2023. Learning Component-Level and Inter-Class Glyph Representation for few-shot Font Generation. In 2023 IEEE International Conference on Multimedia and Expo (ICME). IEEE Computer Society, Los Alamitos, CA, USA, 738–743. https://doi.org/10.1109/ICME55011.2023.00132

[36]

Aaron Van Den Oord, Oriol Vinyals, 2017. Neural discrete representation learning. Conference and Workshop on Neural Information Processing Systems 30 (2017).

[37]

Andrey Voynov, Kfir Aberman, and Daniel Cohen-Or. 2023. Sketch-Guided Text-to-Image Diffusion Models. In ACM SIGGRAPH 2023 Conference Proceedings (Los Angeles, CA, USA) (SIGGRAPH ’23). Association for Computing Machinery, New York, NY, USA, Article 55, 11 pages. https://doi.org/10.1145/3588432.3591560

[38]

Tengfei Wang, Ting Zhang, Bo Zhang, Hao Ouyang, Dong Chen, Qifeng Chen, and Fang Wen. 2022. Pretraining is all you need for image-to-image translation. arXiv preprint arXiv:2205.12952 (2022).

[39]

Ting-Chun Wang, Ming-Yu Liu, Jun-Yan Zhu, Andrew Tao, Jan Kautz, and Bryan Catanzaro. 2018. High-resolution image synthesis and semantic manipulation with conditional gans. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 8798–8807.

[40]

Yangchen Xie, Xinyuan Chen, Li Sun, and Yue Lu. 2021. Dg-font: Deformable generative networks for unsupervised font generation. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. 5130–5140.

[41]

Shuai Yang, Jiaying Liu, Wenjing Wang, and Zongming Guo. 2019a. Tet-gan: Text effects transfer via stylization and destylization. In Proceedings of the AAAI Conference on Artificial Intelligence, Vol. 33. 1238–1245.

[42]

Shuai Yang, Zhangyang Wang, and Jiaying Liu. 2022. Shape-Matching GAN++: Scale Controllable Dynamic Artistic Text Style Transfer. IEEE Transactions on Pattern Analysis and Machine Intelligence 44, 7 (2022), 3807–3820. https://doi.org/10.1109/TPAMI.2021.3055211

[43]

Shuai Yang, Zhangyang Wang, Zhaowen Wang, Ning Xu, Jiaying Liu, and Zongming Guo. 2019b. Controllable artistic text style transfer via shape-matching gan. In Proceedings of the IEEE/CVF International Conference on Computer Vision. 4442–4451.

[44]

Lvmin Zhang and Maneesh Agrawala. 2023. Adding conditional control to text-to-image diffusion models. arXiv preprint arXiv:2302.05543 (2023).

[45]

Jun-Yan Zhu, Taesung Park, Phillip Isola, and Alexei A Efros. 2017. Unpaired image-to-image translation using cycle-consistent adversarial networks. In IEEE/CVF International Conference on Computer Vision. 2223–2232.