“Motion recommendation for online character control” by Cho, Kim, Park, Park and Noh

Title:

- Motion recommendation for online character control

Session/Category Title: Character Stimulation

Abstract:

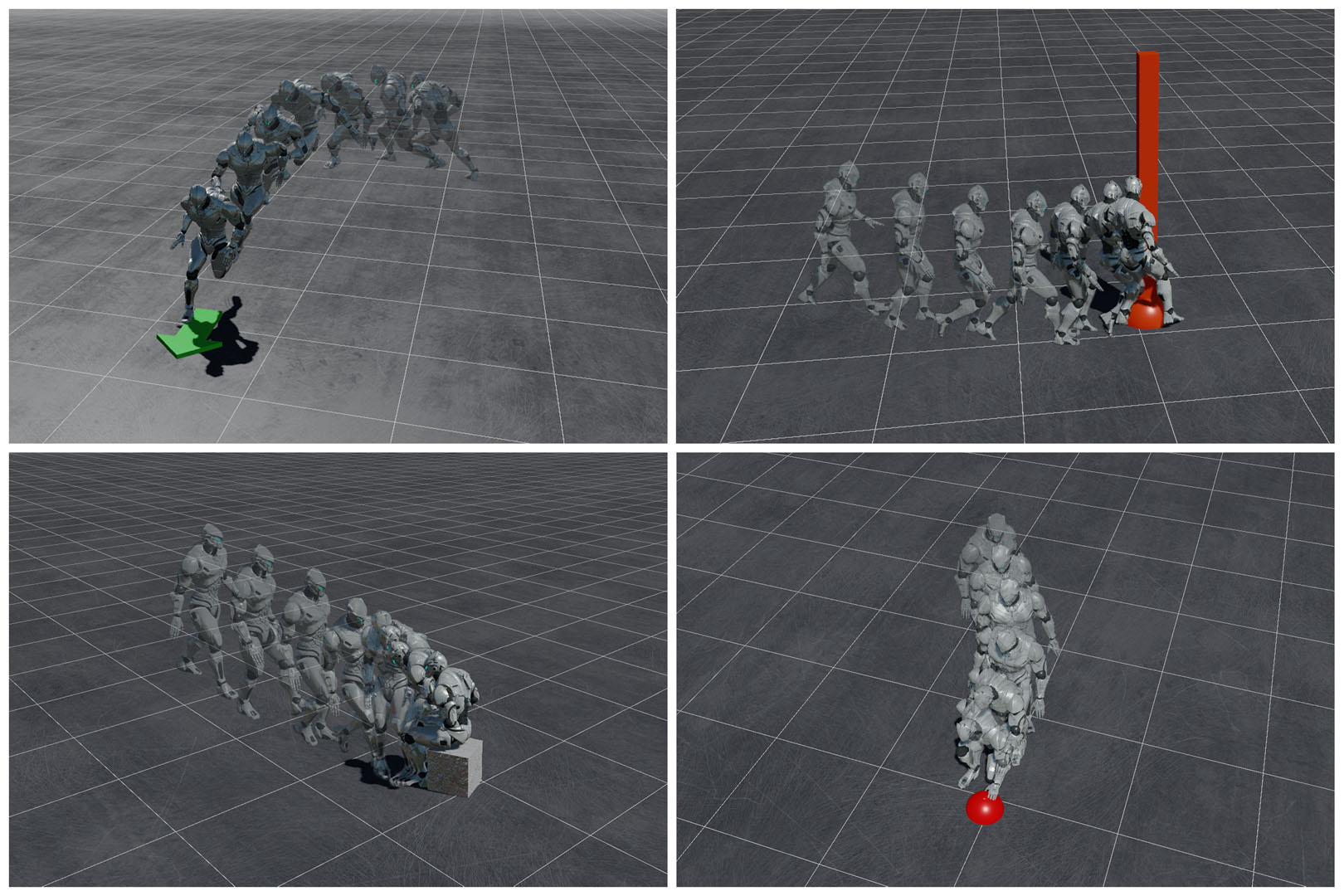

Reinforcement learning (RL) has been proven effective in many scenarios, including environment exploration and motion planning. However, its application in data-driven character control has produced relatively simple motion results compared to recent approaches that have used large complex motion data without RL. In this paper, we provide a real-time motion control method that can generate high-quality and complex motion results from various sets of unstructured data while retaining the advantage of using RL, which is the discovery of optimal behaviors by trial and error. We demonstrate the results for a character achieving different tasks, from simple direction control to complex avoidance of moving obstacles. Our system works equally well on biped/quadruped characters, with motion data ranging from 1 to 48 minutes, without any manual intervention. To achieve this, we exploit a finite set of discrete actions, where each action represents full-body future motion features. We first define a subset of actions that can be selected in each state and store these pieces of information in databases during the preprocessing step. The use of this subset of actions enables the effective learning of control policy even from a large set of motion data. To achieve interactive performance at run-time, we adopt a proposal network and a k-nearest neighbor action sampler.
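The run-time selection step mentioned at the end of the abstract can be illustrated with a minimal sketch. It assumes a design in the spirit of reference 10 (Dulac-Arnold et al. 2015): a proposal network emits a point in motion-feature space, a k-nearest-neighbor search over the actions allowed in the current state returns a few candidates, and a learned value estimate picks among them. The abstract does not give these details, so the function names, the two-dimensional features, the state labels, and the Q-values below are all hypothetical.

```python
from typing import Callable, Dict, List, Sequence

Vector = Sequence[float]


def squared_distance(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance between two equal-length feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def knn_candidates(proposal: Vector, features: Dict[int, Vector],
                   allowed: Sequence[int], k: int) -> List[int]:
    """Return the k allowed action ids whose features lie closest to the proposal."""
    ranked = sorted(allowed, key=lambda a: squared_distance(proposal, features[a]))
    return ranked[:k]


def select_action(proposal: Vector, features: Dict[int, Vector],
                  allowed: Sequence[int], k: int,
                  q_value: Callable[[int], float]) -> int:
    """Pick the highest-valued action among the k nearest allowed candidates."""
    candidates = knn_candidates(proposal, features, allowed, k)
    return max(candidates, key=q_value)


# Hypothetical toy data: four discrete actions with 2-D motion features.
features = {0: (0.0, 0.0), 1: (1.0, 0.0), 2: (0.0, 1.0), 3: (5.0, 5.0)}
# Hypothetical precomputed database: which actions may follow each state.
allowed_by_state = {"walk": [0, 1, 2], "run": [1, 3]}
# Stand-in for a learned value function (assumed, not from the paper).
q_table = {0: 0.7, 1: 0.3, 2: 0.9, 3: 0.1}

proposal = (0.9, 0.2)  # stand-in for the proposal network's output
walk_candidates = knn_candidates(proposal, features, allowed_by_state["walk"], 2)
walk_choice = select_action(proposal, features, allowed_by_state["walk"], 2, q_table.get)
run_choice = select_action(proposal, features, allowed_by_state["run"], 2, q_table.get)
```

Restricting the search to the per-state subset keeps the candidate list short even when the full action set is large, which is the property the abstract credits for making learning from large motion datasets tractable. A production system would likely replace the linear scan with a spatial index.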

References:

1. Eloi Alonso, Maxim Peter, David Goumard, and Joshua Romoff. 2020. Deep Reinforcement Learning for Navigation in AAA Video Games. arXiv preprint arXiv:2011.04764 (2020).

2. Okan Arikan and David A Forsyth. 2002. Interactive motion generation from examples. ACM Transactions on Graphics (TOG) 21, 3 (2002), 483–490.

3. Kai Arulkumaran, Marc Peter Deisenroth, Miles Brundage, and Anil Anthony Bharath. 2017. A brief survey of deep reinforcement learning. arXiv preprint arXiv:1708.05866 (2017).

4. Kevin Bergamin, Simon Clavet, Daniel Holden, and James Richard Forbes. 2019. DReCon: data-driven responsive control of physics-based characters. ACM Transactions on Graphics (TOG) 38, 6 (2019), 1–11.

5. David Bollo. 2016. Inertialization: High-Performance Animation Transitions in ‘Gears of War’. Proc. of GDC 2018 (2016).

6. David Bollo. 2017. High Performance Animation in Gears of War 4. In ACM SIGGRAPH 2017 Talks (Los Angeles, California) (SIGGRAPH ’17). Association for Computing Machinery, New York, NY, USA, Article 22, 2 pages.

7. Greg Brockman, Vicki Cheung, Ludwig Pettersson, Jonas Schneider, John Schulman, Jie Tang, and Wojciech Zaremba. 2016. OpenAI Gym. arXiv preprint arXiv:1606.01540 (2016).

8. Michael Büttner and Simon Clavet. 2015. Motion Matching - The Road to Next Gen Animation. Proc. of Nucl.ai (2015).

9. Nuttapong Chentanez, Matthias Müller, Miles Macklin, Viktor Makoviychuk, and Stefan Jeschke. 2018. Physics-based motion capture imitation with deep reinforcement learning. In Proceedings of the 11th Annual International Conference on Motion, Interaction, and Games. Association for Computing Machinery, New York, NY, USA, Article 1, 10 pages.

10. Gabriel Dulac-Arnold, Richard Evans, Hado van Hasselt, Peter Sunehag, Timothy Lillicrap, Jonathan Hunt, Timothy Mann, Theophane Weber, Thomas Degris, and Ben Coppin. 2015. Deep reinforcement learning in large discrete action spaces. arXiv preprint arXiv:1512.07679 (2015).

11. Félix G. Harvey, Mike Yurick, Derek Nowrouzezahrai, and Christopher Pal. 2020. Robust Motion In-Betweening. ACM Transactions on Graphics (TOG) 39, 4 (2020).

12. Rachel Heck and Michael Gleicher. 2007. Parametric motion graphs. In Proceedings of the 2007 symposium on Interactive 3D graphics and games. Association for Computing Machinery, New York, NY, USA, 129–136.

13. Nicolas Heess, Dhruva TB, Srinivasan Sriram, Jay Lemmon, Josh Merel, Greg Wayne, Yuval Tassa, Tom Erez, Ziyu Wang, SM Eslami, et al. 2017. Emergence of locomotion behaviours in rich environments. arXiv preprint arXiv:1707.02286 (2017).

14. Daniel Holden, Oussama Kanoun, Maksym Perepichka, and Tiberiu Popa. 2020. Learned motion matching. ACM Transactions on Graphics (TOG) 39, 4, Article 53 (2020).

15. Daniel Holden, Taku Komura, and Jun Saito. 2017. Phase-functioned neural networks for character control. ACM Transactions on Graphics (TOG) 36, 4 (2017), 1–13.

16. Daniel Holden, Jun Saito, and Taku Komura. 2016. A deep learning framework for character motion synthesis and editing. ACM Transactions on Graphics (TOG) 35, 4 (2016), 1–11.

17. Daniel Holden, Jun Saito, Taku Komura, and Thomas Joyce. 2015. Learning motion manifolds with convolutional autoencoders. In SIGGRAPH Asia 2015 Technical Briefs. Association for Computing Machinery, New York, NY, USA, 1–4.

18. Yifeng Jiang, Tom Van Wouwe, Friedl De Groote, and C Karen Liu. 2019. Synthesis of biologically realistic human motion using joint torque actuation. ACM Transactions on Graphics (TOG) 38, 4 (2019), 1–12.

19. Lucas Kovar and Michael Gleicher. 2004. Automated extraction and parameterization of motions in large data sets. ACM Transactions on Graphics (TOG) 23, 3 (2004), 559–568.

20. Lucas Kovar, Michael Gleicher, and Frédéric Pighin. 2002. Motion Graphs. ACM Transactions on Graphics (TOG) 21, 3 (2002), 473–482.

21. Jehee Lee, Jinxiang Chai, Paul S. A. Reitsma, Jessica K. Hodgins, and Nancy S. Pollard. 2002. Interactive control of avatars animated with human motion data. ACM Transactions on Graphics (TOG) 21, 3 (2002), 491–500.

22. Jehee Lee and Kang Hoon Lee. 2006. Precomputing avatar behavior from human motion data. Graphical Models 68, 2 (2006), 158–174.

23. Seunghwan Lee, Moonseok Park, Kyoungmin Lee, and Jehee Lee. 2019. Scalable muscle-actuated human simulation and control. ACM Transactions on Graphics (TOG) 38, 4 (2019), 1–13.

24. Yongjoon Lee, Seong Jae Lee, and Zoran Popović. 2009. Compact character controllers. ACM Transactions on Graphics (TOG) 28, 5 (2009), 1–8.

25. Yongjoon Lee, Kevin Wampler, Gilbert Bernstein, Jovan Popović, and Zoran Popović. 2010. Motion fields for interactive character locomotion. In ACM SIGGRAPH Asia 2010 papers. Association for Computing Machinery, New York, NY, USA, 1–8.

26. Sergey Levine, Yongjoon Lee, Vladlen Koltun, and Zoran Popović. 2011. Space-time planning with parameterized locomotion controllers. ACM Transactions on Graphics (TOG) 30, 3 (2011), 1–11.

27. Sergey Levine, Jack M Wang, Alexis Haraux, Zoran Popović, and Vladlen Koltun. 2012. Continuous character control with low-dimensional embeddings. ACM Transactions on Graphics (TOG) 31, 4 (2012), 1–10.

28. Hung Yu Ling, Fabio Zinno, George Cheng, and Michiel Van De Panne. 2020. Character controllers using motion VAEs. ACM Transactions on Graphics (TOG) 39, 4, Article 40 (2020).

29. Libin Liu, Michiel Van De Panne, and KangKang Yin. 2016. Guided learning of control graphs for physics-based characters. ACM Transactions on Graphics (TOG) 35, 3 (2016), 1–14.

30. Libin Liu, KangKang Yin, Michiel van de Panne, Tianjia Shao, and Weiwei Xu. 2010. Sampling-based contact-rich motion control. In ACM SIGGRAPH 2010 papers. 1–10.

31. Ying-Sheng Luo, Jonathan Hans Soeseno, Trista Pei-Chun Chen, and Wei-Chao Chen. 2020. CARL: Controllable Agent with Reinforcement Learning for Quadruped Locomotion. ACM Transactions on Graphics (TOG) 39, 4 (2020), 1–10.

32. James McCann and Nancy Pollard. 2007. Responsive characters from motion fragments. In ACM SIGGRAPH 2007 papers. 6–es.

33. Josh Merel, Arun Ahuja, Vu Pham, Saran Tunyasuvunakool, Siqi Liu, Dhruva Tirumala, Nicolas Heess, and Greg Wayne. 2019a. Hierarchical Visuomotor Control of Humanoids. In International Conference on Learning Representations. https://openreview.net/forum?id=BJfYvo09Y7

34. Josh Merel, Leonard Hasenclever, Alexandre Galashov, Arun Ahuja, Vu Pham, Greg Wayne, Yee Whye Teh, and Nicolas Heess. 2019b. Neural Probabilistic Motor Primitives for Humanoid Control. In International Conference on Learning Representations. https://openreview.net/forum?id=BJl6TjRcY7

35. Jianyuan Min and Jinxiang Chai. 2012. Motion graphs++: a compact generative model for semantic motion analysis and synthesis. ACM Transactions on Graphics (TOG) 31, 6 (2012), 1–12.

36. Jianyuan Min, Yen-Lin Chen, and Jinxiang Chai. 2009. Interactive generation of human animation with deformable motion models. ACM Transactions on Graphics (TOG) 29, 1 (2009), 1–12.

37. Volodymyr Mnih, Koray Kavukcuoglu, David Silver, Alex Graves, Ioannis Antonoglou, Daan Wierstra, and Martin Riedmiller. 2013. Playing Atari with deep reinforcement learning. arXiv preprint arXiv:1312.5602 (2013).

38. Aaron van den Oord, Oriol Vinyals, and Koray Kavukcuoglu. 2017. Neural discrete representation learning. arXiv preprint arXiv:1711.00937 (2017).

39. Soohwan Park, Hoseok Ryu, Seyoung Lee, Sunmin Lee, and Jehee Lee. 2019. Learning predict-and-simulate policies from unorganized human motion data. ACM Transactions on Graphics (TOG) 38, 6 (2019), 1–11.

40. Xue Bin Peng, Pieter Abbeel, Sergey Levine, and Michiel van de Panne. 2018. DeepMimic: Example-guided deep reinforcement learning of physics-based character skills. ACM Transactions on Graphics (TOG) 37, 4 (2018), 1–14.

41. Xue Bin Peng, Glen Berseth, and Michiel Van de Panne. 2016. Terrain-adaptive locomotion skills using deep reinforcement learning. ACM Transactions on Graphics (TOG) 35, 4 (2016), 1–12.

42. Xue Bin Peng, Glen Berseth, KangKang Yin, and Michiel Van De Panne. 2017. DeepLoco: Dynamic locomotion skills using hierarchical deep reinforcement learning. ACM Transactions on Graphics (TOG) 36, 4 (2017), 1–13.

43. Alla Safonova and Jessica K. Hodgins. 2007. Construction and Optimal Search of Interpolated Motion Graphs. ACM Transactions on Graphics (TOG) 26, 3 (2007), 106–es.

44. Sebastian Starke, He Zhang, Taku Komura, and Jun Saito. 2019. Neural state machine for character-scene interactions. ACM Transactions on Graphics (TOG) 38, 6, Article 209 (Nov. 2019), 14 pages.

45. Sebastian Starke, Yiwei Zhao, Taku Komura, and Kazi Zaman. 2020. Local motion phases for learning multi-contact character movements. ACM Transactions on Graphics (TOG) 39, 4, Article 54 (2020).

46. Adrien Treuille, Yongjoon Lee, and Zoran Popović. 2007. Near-optimal character animation with continuous control. In ACM SIGGRAPH 2007 papers. 7–es.

47. Tom Van de Wiele, David Warde-Farley, Andriy Mnih, and Volodymyr Mnih. 2020. Q-Learning in enormous action spaces via amortized approximate maximization. arXiv preprint arXiv:2001.08116 (2020).

48. Hado Van Hasselt, Arthur Guez, and David Silver. 2016. Deep reinforcement learning with double Q-learning. In Proceedings of the AAAI Conference on Artificial Intelligence, Vol. 30.

49. Tingwu Wang, Yunrong Guo, Maria Shugrina, and Sanja Fidler. 2020. UniCon: Universal Neural Controller For Physics-based Character Motion. arXiv preprint arXiv:2011.15119 (2020).

50. Jungdam Won and Jehee Lee. 2019. Learning body shape variation in physics-based characters. ACM Transactions on Graphics (TOG) 38, 6 (2019), 1–12.

51. Jungdam Won, Jongho Park, Kwanyu Kim, and Jehee Lee. 2017. How to train your dragon: example-guided control of flapping flight. ACM Transactions on Graphics (TOG) 36, 6 (2017), 1–13.

52. Jungdam Won, Jungnam Park, and Jehee Lee. 2018. Aerobatics control of flying creatures via self-regulated learning. ACM Transactions on Graphics (TOG) 37, 6 (2018), 1–10.

53. Zhaoming Xie, Hung Yu Ling, Nam Hee Kim, and Michiel van de Panne. 2020. ALL-STEPS: Curriculum-driven Learning of Stepping Stone Skills. In Proc. ACM SIGGRAPH / Eurographics Symposium on Computer Animation.

54. Wenhao Yu, Greg Turk, and C Karen Liu. 2018. Learning symmetric and low-energy locomotion. ACM Transactions on Graphics (TOG) 37, 4 (2018), 1–12.

55. He Zhang, Sebastian Starke, Taku Komura, and Jun Saito. 2018. Mode-adaptive neural networks for quadruped motion control. ACM Transactions on Graphics (TOG) 37, 4 (2018), 1–11.

56. Liming Zhao and Alla Safonova. 2009. Achieving good connectivity in motion graphs. Graphical Models 71, 4 (2009), 139–152.