“PhysCap: physically plausible monocular 3D motion capture in real time” by Shimada, Golyanik, Xu and Theobalt

Title:

- PhysCap: physically plausible monocular 3D motion capture in real time

Session/Category Title: Learning to Move and Synthesize

Abstract:

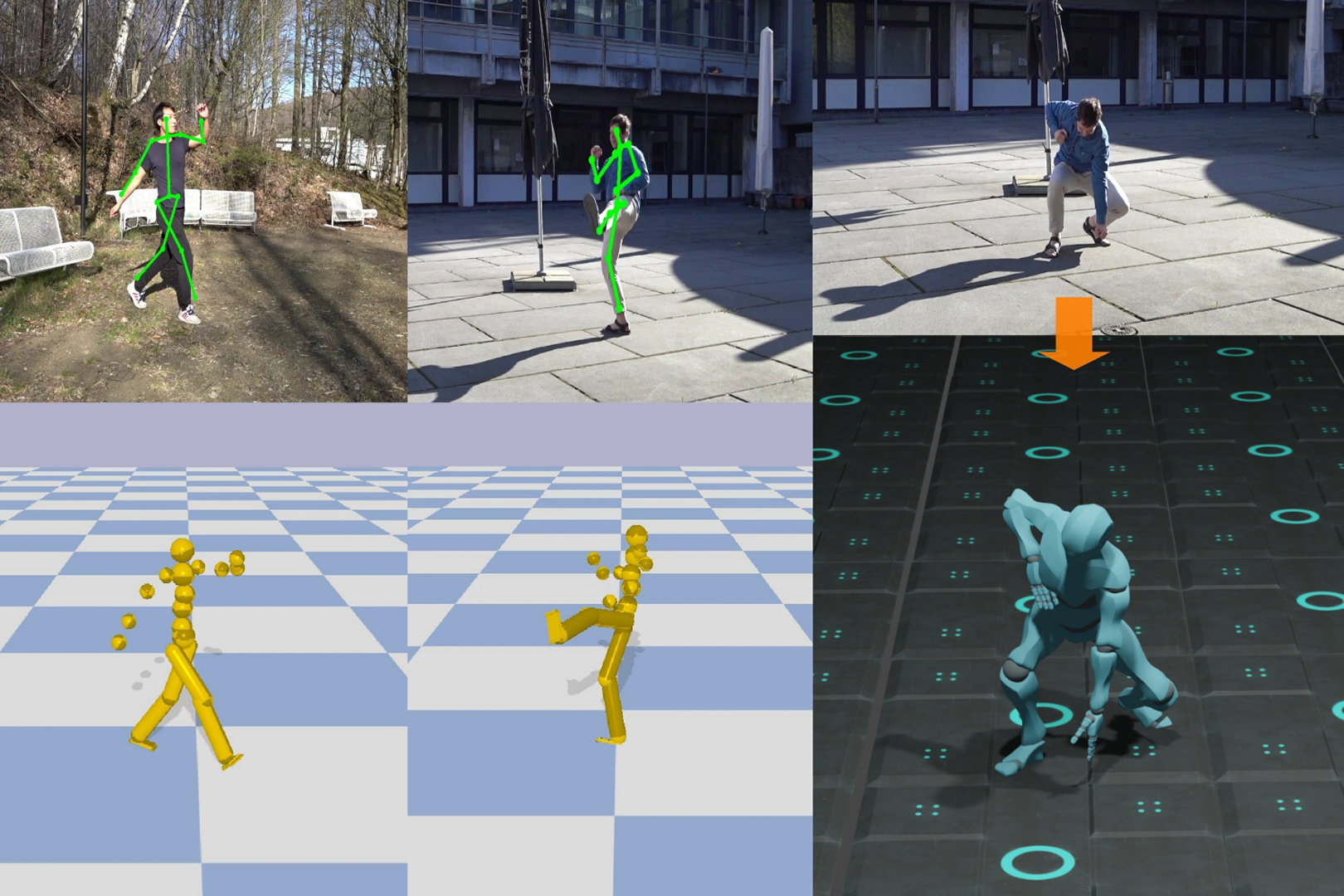

Marker-less 3D human motion capture from a single colour camera has seen significant progress. However, it is a very challenging and severely ill-posed problem. In consequence, even the most accurate state-of-the-art approaches have significant limitations. Purely kinematic formulations on the basis of individual joints or skeletons, and the frequent frame-wise reconstruction in state-of-the-art methods, greatly limit 3D accuracy and temporal stability compared to multi-view or marker-based motion capture. Further, captured 3D poses are often physically incorrect and biomechanically implausible, or exhibit implausible environment interactions (floor penetration, foot skating, unnatural body leaning and strong shifting in depth), which is problematic for any use case in computer graphics. We, therefore, present PhysCap, the first algorithm for physically plausible, real-time and marker-less human 3D motion capture with a single colour camera at 25 fps. Our algorithm first captures 3D human poses purely kinematically. To this end, a CNN infers 2D and 3D joint positions, and subsequently, an inverse kinematics step finds space-time coherent joint angles and global 3D pose. Next, these kinematic reconstructions are used as constraints in a real-time physics-based pose optimiser that accounts for environment constraints (e.g., collision handling and floor placement), gravity, and biophysical plausibility of human postures. Our approach employs a combination of ground reaction force and residual force for plausible root control, and uses a trained neural network to detect foot contact events in images. Our method captures physically plausible and temporally stable global 3D human motion, without physically implausible postures, floor penetrations or foot skating, from video in real time and in general scenes.
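To make the idea of a physics stage that tracks kinematic estimates more concrete, the following is a deliberately simplified one-dimensional sketch. It is a toy under stated assumptions, not the PhysCap optimiser: a single point mass stands in for the body, a PD law (an assumed choice) turns the kinematic height into a desired acceleration, a unilateral ground reaction force is available only in frames flagged as foot contact, and a small bounded residual force is available otherwise. The names `RootState`, `physics_step` and `correct_trajectory`, the mass and all gains are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Sequence

GRAVITY = 9.81  # m/s^2


@dataclass
class RootState:
    height: float    # vertical position of the point mass in metres; floor at 0
    velocity: float  # vertical velocity in m/s


def physics_step(state: RootState, target: float, in_contact: bool,
                 dt: float = 1.0 / 25.0, mass: float = 70.0,
                 kp: float = 100.0, kd: float = 20.0,
                 max_residual: float = 50.0) -> RootState:
    """Advance one frame (25 fps by default); all parameters are hypothetical."""
    # PD law: acceleration that would pull the body toward the kinematic target.
    a_des = kp * (target - state.height) - kd * state.velocity
    # External force (besides gravity) needed to realise that acceleration.
    f_req = mass * (a_des + GRAVITY)
    if in_contact:
        # Unilateral contact: the floor can push the body up but never pull it down.
        f_grf, f_res = max(f_req, 0.0), 0.0
    else:
        # Airborne: no ground reaction force, only a small bounded residual force.
        f_grf = 0.0
        f_res = min(max(f_req, -max_residual), max_residual)
    accel = (f_grf + f_res) / mass - GRAVITY
    # Semi-implicit Euler integration.
    velocity = state.velocity + accel * dt
    height = state.height + velocity * dt
    # Floor constraint: remove any penetration and downward velocity.
    if height < 0.0:
        height, velocity = 0.0, max(velocity, 0.0)
    return RootState(height, velocity)


def correct_trajectory(targets: Sequence[float], contacts: Sequence[bool],
                       start_height: float) -> List[float]:
    """Run the toy physics step over per-frame kinematic heights and contact flags."""
    state = RootState(start_height, 0.0)
    heights = []
    for target, contact in zip(targets, contacts):
        state = physics_step(state, target, contact)
        heights.append(state.height)
    return heights
```

Because the residual force in this sketch is capped well below body weight, an airborne body cannot hover at a kinematically estimated but physically unsupported height, and the final projection keeps the point on or above the floor even when the kinematic targets penetrate it.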
PhysCap achieves state-of-the-art accuracy on established pose benchmarks, and we propose new metrics to demonstrate the improved physical plausibility and temporal stability.
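The abstract does not define the proposed plausibility and stability metrics, so the sketch below uses illustrative definitions chosen for this example (assumptions, not necessarily the paper's): the fraction of frames with floor penetration, the mean horizontal foot drift between consecutive contact frames as a foot-skating measure, and the mean joint acceleration magnitude as a jitter measure. The function names, the y-up convention with the floor at height 0, and the input layouts are hypothetical.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def penetration_rate(foot_heights: Sequence[float], tol: float = 0.0) -> float:
    """Fraction of frames whose lowest foot point lies below the floor (height < -tol)."""
    if not foot_heights:
        return 0.0
    return sum(h < -tol for h in foot_heights) / len(foot_heights)


def foot_skate(foot_xz: Sequence[Tuple[float, float]], contacts: Sequence[bool]) -> float:
    """Mean horizontal foot displacement between consecutive frames both labelled as contact."""
    steps = [math.dist(foot_xz[t], foot_xz[t + 1])
             for t in range(len(foot_xz) - 1)
             if contacts[t] and contacts[t + 1]]
    return sum(steps) / len(steps) if steps else 0.0


def jitter(joints: Sequence[Sequence[Vec3]], fps: float = 25.0) -> float:
    """Mean joint acceleration magnitude (units/s^2) from second finite differences."""
    mags = []
    for t in range(1, len(joints) - 1):
        # Pair up the same joint across three consecutive frames.
        for prev, cur, nxt in zip(joints[t - 1], joints[t], joints[t + 1]):
            acc = [(n - 2.0 * c + p) * fps ** 2 for p, c, n in zip(prev, cur, nxt)]
            mags.append(math.sqrt(sum(a * a for a in acc)))
    return sum(mags) / len(mags) if mags else 0.0
```

Lower values are better for all three quantities; a purely kinematic, frame-wise reconstruction would typically score worse on jitter than a temporally coherent one, even when per-frame joint errors are similar.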
