“The relightables: volumetric performance capture of humans with realistic relighting” by Guo, Lincoln, Davidson, Busch, Yu, et al. …

Title:

- The relightables: volumetric performance capture of humans with realistic relighting

Session/Category Title:

- Light Hardware

Presenter(s)/Author(s):

- Kaiwen Guo

- Peter Lincoln

- Philip L. Davidson

- Jay Busch

- Xueming Yu

- Matt Whalen

- Geoff Harvey

- Sergio Orts-Escolano

- Rohit Pandey

- Jason Dourgarian

- Danhang Tang

- Anastasia Tkach

- Adarsh Kowdle

- Emily A. Cooper

- Mingsong Dou

- Sean Ryan Fanello

- Graham Fyffe

- Christoph Rhemann

- Jonathan Taylor

- Paul E. Debevec

- Shahram Izadi

Abstract:

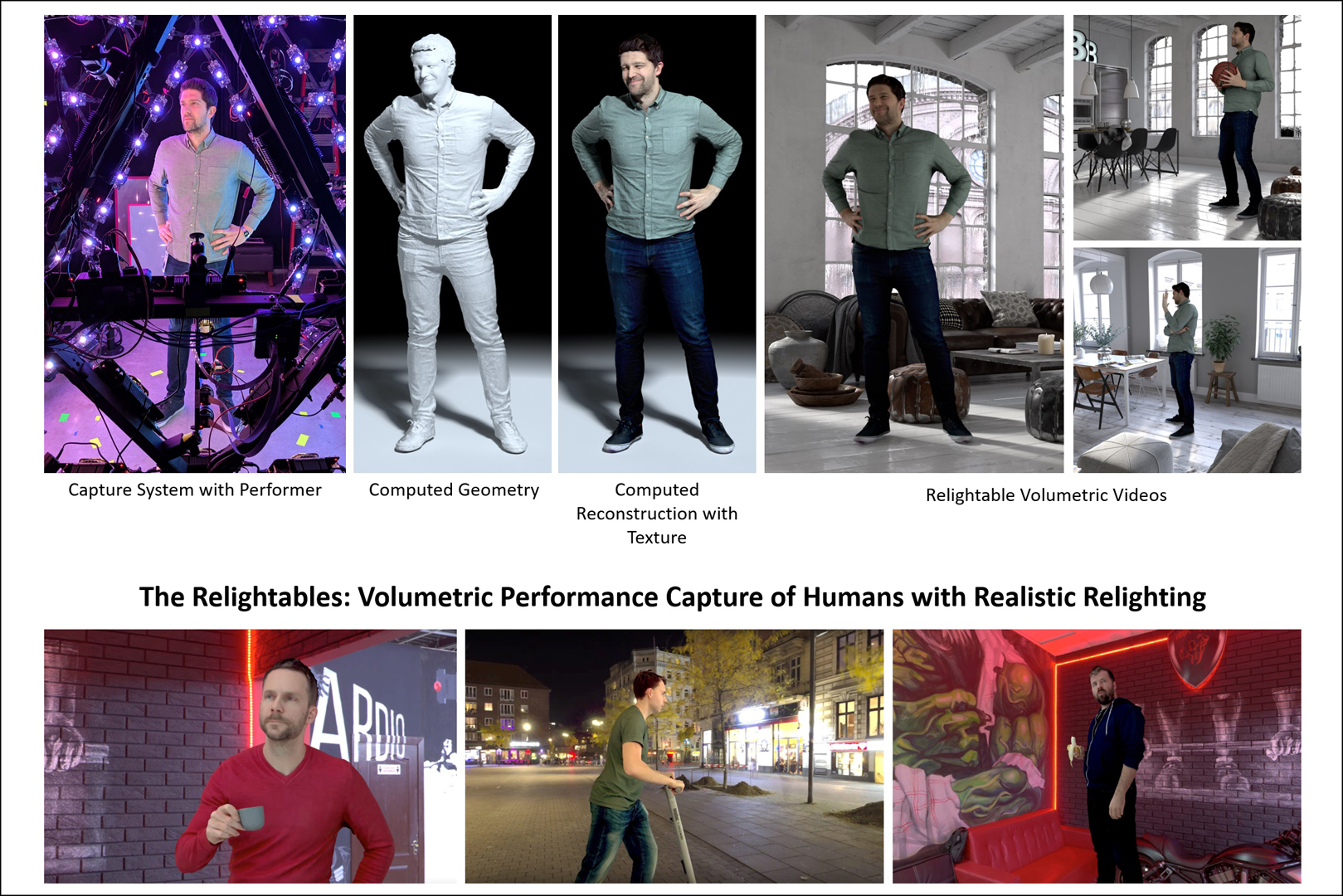

We present “The Relightables”, a volumetric capture system for photorealistic and high-quality relightable full-body performance capture. While significant progress has been made on volumetric capture systems, focusing on 3D geometric reconstruction with high-resolution textures, much less work has been done to recover photometric properties needed for relighting. Results from such systems lack high-frequency details and the subject’s shading is prebaked into the texture. In contrast, a large body of work has addressed relightable acquisition for image-based approaches, which photograph the subject under a set of basis lighting conditions and recombine the images to show the subject as they would appear in a target lighting environment. However, to date, these approaches have not been adapted for use in the context of a high-resolution volumetric capture system. Our method combines this ability to realistically relight humans for arbitrary environments with the benefits of free-viewpoint volumetric capture and new levels of geometric accuracy for dynamic performances. Our subjects are recorded inside a custom geodesic sphere outfitted with 331 custom color LED lights, an array of high-resolution cameras, and a set of custom high-resolution depth sensors. Our system innovates in multiple areas: First, we designed a novel active depth sensor to capture 12.4 MP depth maps, which we describe in detail. Second, we show how to design a hybrid geometric and machine learning reconstruction pipeline to process the high-resolution input and output a volumetric video. Third, we generate temporally consistent reflectance maps for dynamic performers by leveraging the information contained in two alternating color gradient illumination images acquired at 60 Hz. Multiple experiments, comparisons, and applications show that The Relightables significantly improves upon the level of realism in placing volumetrically captured human performances into arbitrary CG scenes.
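The image-based relighting idea that the abstract contrasts against can be illustrated with a minimal sketch. Because light transport is linear, an image under a target environment can be approximated as a weighted sum of images taken under individual basis lights. The sketch assumes linear radiance values and one RGB weight per basis light sampled from the target environment. The function name `relight`, the flat-list image layout, and the toy values are hypothetical and are not taken from the paper, whose own pipeline works from gradient illumination and volumetric reconstruction instead.

```python
from typing import List, Sequence, Tuple

RGB = Tuple[float, float, float]
Image = List[RGB]  # flat list of linear-radiance pixels (hypothetical layout)


def relight(basis_images: Sequence[Image], light_weights: Sequence[RGB]) -> Image:
    """Recombine basis-lit images into one image under a target lighting.

    basis_images[i] is the subject lit only by basis light i.
    light_weights[i] is the target environment's RGB intensity for light i.
    """
    if not basis_images:
        raise ValueError("need at least one basis image")
    if len(basis_images) != len(light_weights):
        raise ValueError("need exactly one weight per basis image")
    num_pixels = len(basis_images[0])
    if any(len(image) != num_pixels for image in basis_images):
        raise ValueError("all basis images must have the same size")

    # Accumulate the per-channel weighted sum over all basis lights.
    out = [[0.0, 0.0, 0.0] for _ in range(num_pixels)]
    for image, weight in zip(basis_images, light_weights):
        for p, pixel in enumerate(image):
            for c in range(3):
                out[p][c] += weight[c] * pixel[c]
    return [(px[0], px[1], px[2]) for px in out]


# Toy example: two basis lights, two-pixel images.
basis = [
    [(1.0, 1.0, 1.0), (0.0, 0.0, 0.0)],  # light 0 reaches pixel 0 only
    [(0.0, 0.0, 0.0), (0.5, 0.5, 0.5)],  # light 1 dimly reaches pixel 1
]
target = [(1.0, 0.5, 0.0), (0.0, 0.0, 2.0)]  # orange from light 0, blue from light 1
relit = relight(basis, target)
```

In this toy case pixel 0 takes on the orange of light 0 and pixel 1 takes on the blue of light 1. A real capture would use one basis image per light in the stage and weights resampled from an environment map.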
