“Depth from motion for smartphone AR”

Conference:

Type(s):

Title:

- Depth from motion for smartphone AR

Session/Category Title: Mixed reality

Presenter(s)/Author(s):

- Julien Valentin

- Adarsh Kowdle

- Jonathan T. Barron

- Neal Wadhwa

- Max Dzitsiuk

- Michael Schoenberg

- Vivek Verma

- Ambrus Csaszar

- Eric Turner

- Ivan Dryanovski

- Joao Afonso

- Jose Pascoal

- Konstantine Tsotsos

- Mira Leung

- Mirko Schmidt

- Onur Guleryuz

- Sameh Khamis

- Vladimir Tankovich

- Sean Ryan Fanello

- Shahram Izadi

- Christoph Rhemann

Moderator(s):

Abstract:

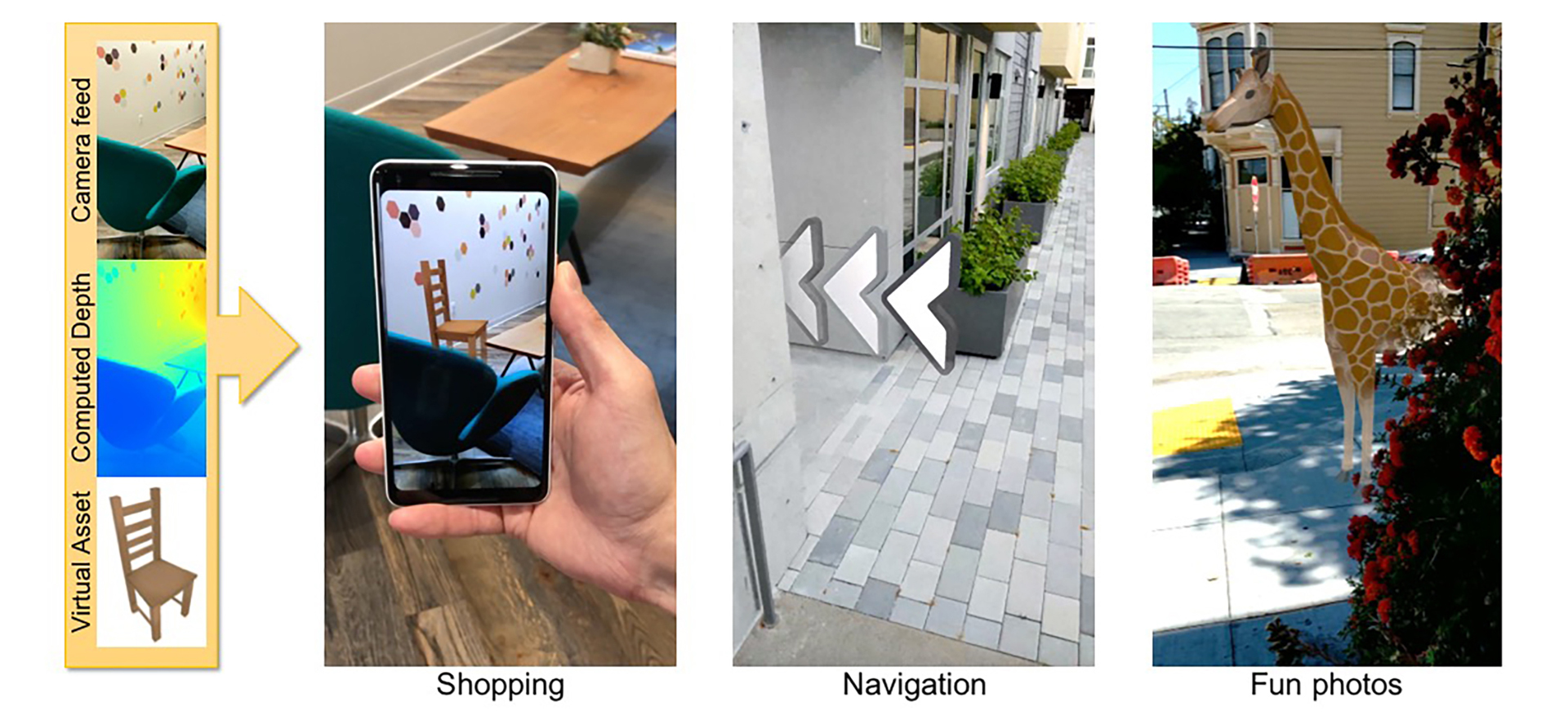

Augmented reality (AR) for smartphones has matured from a technology for early adopters, available only on select high-end phones, to one that is truly available to the general public. One of the key breakthroughs has been in low-compute methods for six-degree-of-freedom (6DoF) tracking on phones using only the existing hardware (camera and inertial sensors). 6DoF tracking is the cornerstone of smartphone AR, allowing virtual content to be precisely locked on top of the real world. However, to really give users the impression of believable AR, one requires mobile depth. Without depth, even simple effects such as a virtual object being correctly occluded by the real world are impossible. However, requiring a mobile depth sensor would severely restrict access to such features. In this article, we provide a novel pipeline for mobile depth that supports a wide array of mobile phones and uses only the existing monocular color sensor. Through several technical contributions, we provide the ability to compute low-latency dense depth maps using only a single CPU core on a wide range of (medium- to high-end) mobile phones. We demonstrate the capabilities of our approach on high-level AR applications including real-time navigation and shopping.
