“Video quality assessment for computer graphics applications”

Session/Category Title: Image & video applications

Abstract:

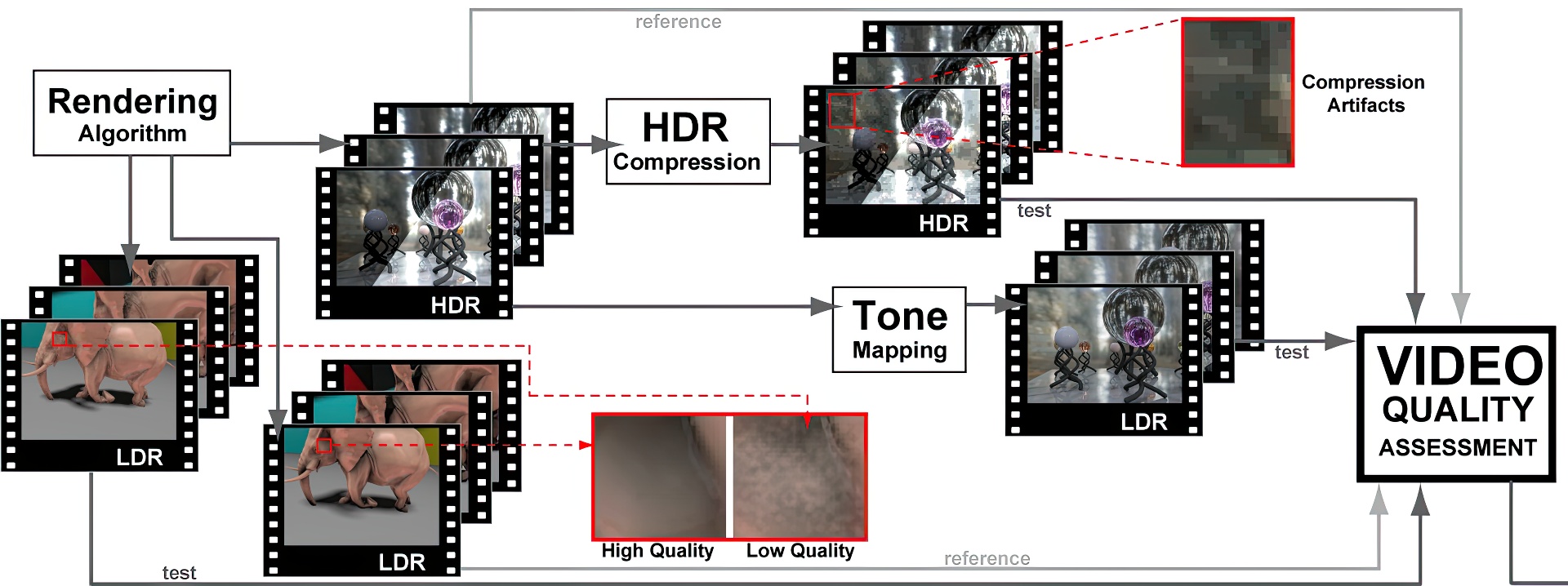

Many current computer graphics methods produce video sequences as their output. The merit of these methods is often judged by assessing the quality of a set of results through lengthy user studies. We present a full-reference video quality metric geared specifically towards the requirements of computer graphics applications, as a faster computational alternative to subjective evaluation. Our metric can compare a pair of videos with arbitrary dynamic ranges. It comprises a human visual system model covering a wide range of luminance levels, which predicts distortion visibility through models of luminance adaptation, spatiotemporal contrast sensitivity, and visual masking. We present applications of the proposed metric to quality prediction of HDR video compression and temporal tone mapping, comparison of different rendering approaches and qualities, and assessment of the impact of variable frame rate on perceived quality.
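To make the term "full-reference" concrete, the following is a minimal sketch of comparing a test video against a reference frame by frame. It is not the metric described in the abstract: it has no luminance adaptation, contrast sensitivity, or masking model. It only shows the input and output shape of such a comparison, working in log luminance so that frames with different dynamic ranges share one scale. The function names, the `eps` floor, and the fixed `threshold` are all hypothetical choices made for illustration.

```python
import math

def log_luminance(frame, eps=1e-4):
    """Map linear luminance values to log10; eps (assumed) avoids log of zero."""
    return [[math.log10(max(v, eps)) for v in row] for row in frame]

def difference_maps(reference, test, threshold=0.05):
    """Return one boolean map per frame pair.

    A pixel is flagged when its log-luminance difference exceeds `threshold`.
    The fixed threshold is a hypothetical stand-in for a perceptual
    visibility prediction; it is not derived from any visual model.
    """
    maps = []
    for ref_frame, test_frame in zip(reference, test):
        ref_log = log_luminance(ref_frame)
        test_log = log_luminance(test_frame)
        maps.append([
            [abs(r - t) > threshold for r, t in zip(ref_row, test_row)]
            for ref_row, test_row in zip(ref_log, test_log)
        ])
    return maps

def flagged_fraction(maps):
    """Fraction of all pixels, across all frames, that were flagged."""
    total = sum(len(row) for m in maps for row in m)
    flagged = sum(px for m in maps for row in m for px in row)
    return flagged / total if total else 0.0

# One 2x2 frame of luminance values; only the top-right pixel changes noticeably.
reference_video = [[[100.0, 100.0], [100.0, 100.0]]]
test_video = [[[100.0, 150.0], [100.0, 101.0]]]
maps = difference_maps(reference_video, test_video)
print(flagged_fraction(maps))
```

A perceptual metric of the kind the abstract describes would replace the fixed threshold with a per-pixel visibility prediction from its human visual system model, and would also account for temporal effects across frames rather than treating each frame pair in isolation.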
