“Versatile Vision Foundation Model for Image and Video Colorization”

Conference:

Type(s):

Title:

- Versatile Vision Foundation Model for Image and Video Colorization

Presenter(s)/Author(s):

Abstract:

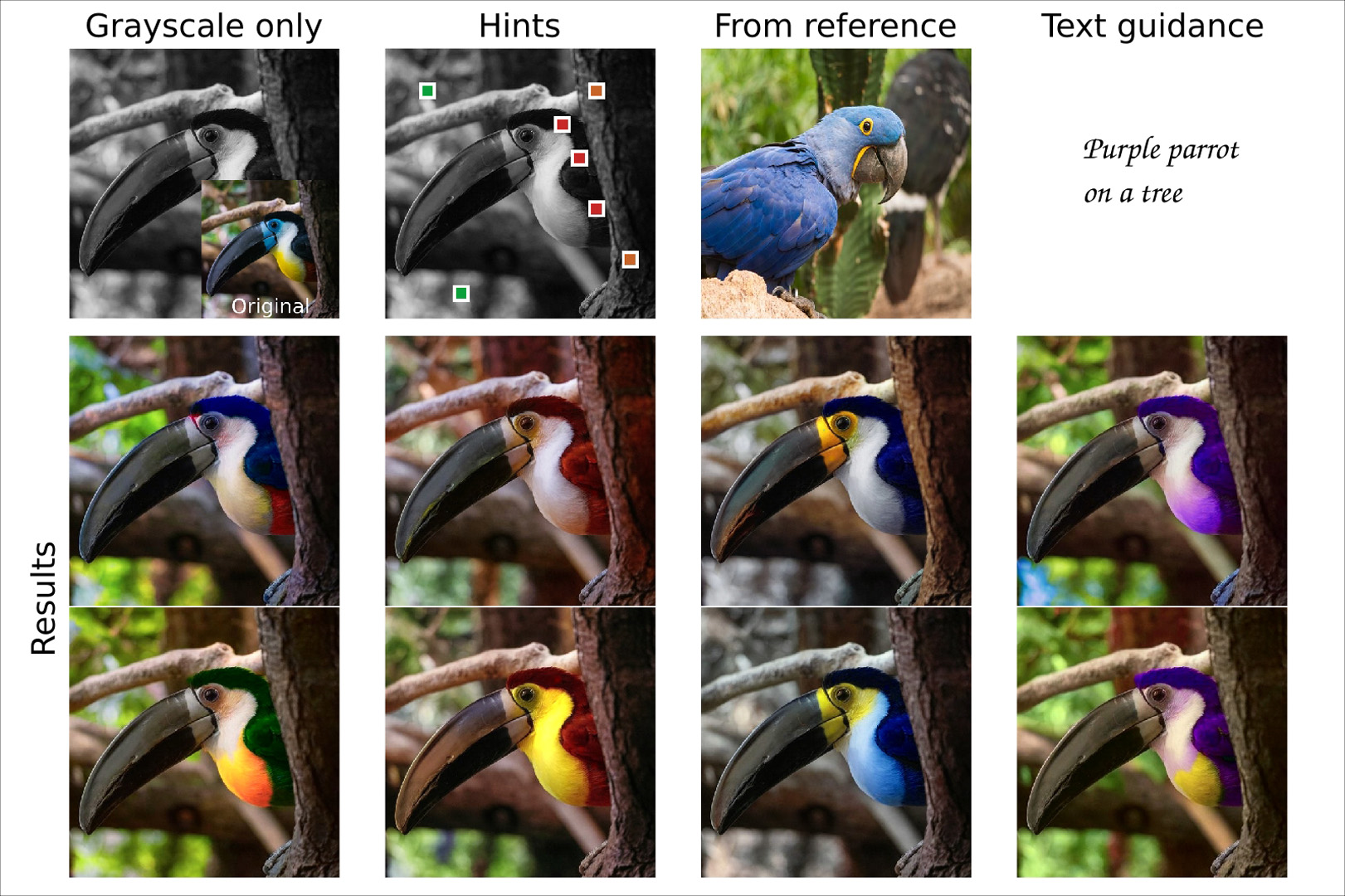

In this work, we show how a latent diffusion model, pre-trained on text-to-image synthesis, can be repurposed for image colorization and provide a flexible high-quality solution for a wide variety of scenarios: direct colorization with diverse results, user guidance through colors hints or text prompts, and finally video colorization.

References:

[1]

2017. America in color. https://www.smithsonianchannel.com/shows/america-in-color. Accessed: 2024-01-20.

[2]

Naofumi Akimoto, Akio Hayakawa, Andrew Shin, and Takuya Narihira. 2020. Reference-based video colorization with spatiotemporal correspondence. arXiv preprint arXiv:2011.12528 (2020).

[3]

PJ Alessi, M Brill, J Campos Acosta, E Carter, R Connelly, J Decarreau, R Harold, R Hirschler, B Jordan, C Kim, 2014. Colorimetry-part 6: CIEDE2000-colour-difference formula. ISO/CIE (2014), 11664?6.

[4]

Jason Antic. 2020. DeOldify. https://github.com/jantic/DeOldify. Accessed: 2024-01-20.

[5]

Yunpeng Bai, Chao Dong, Zenghao Chai, Andong Wang, Zhengzhuo Xu, and Chun Yuan. 2022. Semantic-sparse colorization network for deep exemplar-based colorization. In European Conference on Computer Vision. Springer, 505?521.

[6]

James Betker, Gabriel Goh, Li Jing, Tim Brooks, Jianfeng Wang, Linjie Li, Long Ouyang, Juntang Zhuang, Joyce Lee, Yufei Guo, 2023. Improving image generation with better captions. Computer Science. https://cdn. openai. com/papers/dall-e-3. pdf 2 (2023), 3.

[7]

Tim Brooks, Aleksander Holynski, and Alexei A Efros. 2023. Instructpix2pix: Learning to follow image editing instructions. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 18392?18402.

[8]

Hernan Carrillo, Micha?l Cl?ment, Aur?lie Bugeau, and Edgar Simo-Serra. 2023. Diffusart: Enhancing Line Art Colorization with Conditional Diffusion Models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 3485?3489.

[9]

Zheng Chang, Shuchen Weng, Yu Li, Si Li, and Boxin Shi. 2022. L-CoDer: Language-based colorization with color-object decoupling transformer. In European Conference on Computer Vision. Springer, 360?375.

[10]

Zheng Chang, Shuchen Weng, Peixuan Zhang, Yu Li, Si Li, and Boxin Shi. 2023. L-CAD: Language-based Colorization with Any-level Descriptions using Diffusion Priors. In Thirty-seventh Conference on Neural Information Processing Systems.

[11]

Jianbo Chen, Yelong Shen, Jianfeng Gao, Jingjing Liu, and Xiaodong Liu. 2018. Language-based image editing with recurrent attentive models. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 8721?8729.

[12]

Junsong Chen, Jincheng Yu, Chongjian Ge, Lewei Yao, Enze Xie, Yue Wu, Zhongdao Wang, James Kwok, Ping Luo, Huchuan Lu, and Zhenguo Li. 2023. PixArt-? : Fast Training of Diffusion Transformer for Photorealistic Text-to-Image Synthesis. arxiv:2310.00426 [cs.CV]

[13]

Zezhou Cheng, Qingxiong Yang, and Bin Sheng. 2015. Deep colorization. In Proceedings of the IEEE international conference on computer vision. 415?423.

[14]

Ethan Coen, Joel Coen, and Roger Deakins. 2001. Painting with Pixels. https://www.imdb.com/title/tt28520463/. Accessed: 2024-01-20.

[15]

Aditya Deshpande, Jiajun Lu, Mao-Chuang Yeh, Min Jin Chong, and David Forsyth. 2017. Learning diverse image colorization. In Proceedings of the IEEE conference on computer vision and pattern recognition. 6837?6845.

[16]

Zhi Dou, Ning Wang, Baopu Li, Zhihui Wang, Haojie Li, and Bin Liu. 2021. Dual color space guided sketch colorization. IEEE Transactions on Image Processing 30 (2021), 7292?7304.

[17]

Arpad E Elo and Sam Sloan. 1978. The rating of chessplayers: Past and present. (No Title) (1978).

[18]

Yuki Endo, Satoshi Iizuka, Yoshihiro Kanamori, and Jun Mitani. 2016. Deepprop: Extracting deep features from a single image for edit propagation. In Computer Graphics Forum, Vol. 35. Wiley Online Library, 189?201.

[19]

David Hasler and Sabine E Suesstrunk. 2003. Measuring colorfulness in natural images. In Human vision and electronic imaging VIII, Vol. 5007. SPIE, 87?95.

[20]

Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. 2015. Delving deep into rectifiers: Surpassing human-level performance on imagenet classification. In Proceedings of the IEEE international conference on computer vision. 1026?1034.

[21]

Mingming He, Dongdong Chen, Jing Liao, Pedro V Sander, and Lu Yuan. 2018. Deep exemplar-based colorization. ACM Transactions on Graphics (TOG) 37, 4 (2018), 1?16.

[22]

Amir Hertz, Ron Mokady, Jay Tenenbaum, Kfir Aberman, Yael Pritch, and Daniel Cohen-or. 2022. Prompt-to-Prompt Image Editing with Cross-Attention Control. In The Eleventh International Conference on Learning Representations.

[23]

Martin Heusel, Hubert Ramsauer, Thomas Unterthiner, Bernhard Nessler, and Sepp Hochreiter. 2017. Gans trained by a two time-scale update rule converge to a local nash equilibrium. Advances in neural information processing systems 30 (2017).

[24]

Jonathan Ho, Ajay Jain, and Pieter Abbeel. 2020. Denoising diffusion probabilistic models. Advances in neural information processing systems 33 (2020), 6840?6851.

[25]

Jonathan Ho and Tim Salimans. 2022. Classifier-free diffusion guidance. arXiv preprint arXiv:2207.12598 (2022).

[26]

Zhitong Huang, Nanxuan Zhao, and Jing Liao. 2022. Unicolor: A unified framework for multi-modal colorization with transformer. ACM Transactions on Graphics (TOG) 41, 6 (2022), 1?16.

[27]

Satoshi Iizuka and Edgar Simo-Serra. 2019. Deepremaster: temporal source-reference attention networks for comprehensive video enhancement. ACM Transactions on Graphics (TOG) 38, 6 (2019), 1?13.

[28]

Satoshi Iizuka, Edgar Simo-Serra, and Hiroshi Ishikawa. 2016. Let there be color! joint end-to-end learning of global and local image priors for automatic image colorization with simultaneous classification. ACM Transactions on Graphics (ToG) 35, 4 (2016), 1?11.

[29]

Varun Jampani, Raghudeep Gadde, and Peter V Gehler. 2017. Video propagation networks. In Proceedings of the IEEE conference on computer vision and pattern recognition. 451?461.

[30]

Xiaozhong Ji, Boyuan Jiang, Donghao Luo, Guangpin Tao, Wenqing Chu, Zhifeng Xie, Chengjie Wang, and Ying Tai. 2022. ColorFormer: Image colorization via color memory assisted hybrid-attention transformer. In European Conference on Computer Vision. Springer, 20?36.

[31]

Xiaoyang Kang, Xianhui Lin, Kai Zhang, Zheng Hui, Wangmeng Xiang, Jun-Yan He, Xiaoming Li, Peiran Ren, Xuansong Xie, Radu Timofte, 2023a. NTIRE 2023 video colorization challenge. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 1570?1581.

[32]

Xiaoyang Kang, Tao Yang, Wenqi Ouyang, Peiran Ren, Lingzhi Li, and Xuansong Xie. 2023b. DDColor: Towards Photo-Realistic Image Colorization via Dual Decoders. In Proceedings of the IEEE/CVF International Conference on Computer Vision. 328?338.

[33]

Bahjat Kawar, Shiran Zada, Oran Lang, Omer Tov, Huiwen Chang, Tali Dekel, Inbar Mosseri, and Michal Irani. 2023. Imagic: Text-based real image editing with diffusion models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 6007?6017.

[34]

Bingxin Ke, Anton Obukhov, Shengyu Huang, Nando Metzger, Rodrigo Caye Daudt, and Konrad Schindler. 2023. Repurposing Diffusion-Based Image Generators for Monocular Depth Estimation. arXiv preprint arXiv:2312.02145 (2023).

[35]

Levon Khachatryan, Andranik Movsisyan, Vahram Tadevosyan, Roberto Henschel, Zhangyang Wang, Shant Navasardyan, and Humphrey Shi. 2023. Text2video-zero: Text-to-image diffusion models are zero-shot video generators. arXiv preprint arXiv:2303.13439 (2023).

[36]

Geonung Kim, Kyoungkook Kang, Seongtae Kim, Hwayoon Lee, Sehoon Kim, Jonghyun Kim, Seung-Hwan Baek, and Sunghyun Cho. 2022. Bigcolor: colorization using a generative color prior for natural images. In European Conference on Computer Vision. Springer, 350?366.

[37]

Alexander Kirillov, Eric Mintun, Nikhila Ravi, Hanzi Mao, Chloe Rolland, Laura Gustafson, Tete Xiao, Spencer Whitehead, Alexander C Berg, Wan-Yen Lo, 2023. Segment anything. arXiv preprint arXiv:2304.02643 (2023).

[38]

Manoj Kumar, Dirk Weissenborn, and Nal Kalchbrenner. 2021. Colorization transformer. arXiv preprint arXiv:2102.04432 (2021).

[39]

Gustav Larsson, Michael Maire, and Gregory Shakhnarovich. 2016. Learning representations for automatic colorization. In Computer Vision?ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, October 11?14, 2016, Proceedings, Part IV 14. Springer, 577?593.

[40]

Anat Levin, Dani Lischinski, and Yair Weiss. 2004. Colorization using optimization. In ACM SIGGRAPH 2004 Papers. 689?694.

[41]

Junnan Li, Dongxu Li, Caiming Xiong, and Steven Hoi. 2022. Blip: Bootstrapping language-image pre-training for unified vision-language understanding and generation. In International Conference on Machine Learning. PMLR, 12888?12900.

[42]

Jianxin Lin, Peng Xiao, Yijun Wang, Rongju Zhang, and Xiangxiang Zeng. 2023. DiffColor: Toward High Fidelity Text-Guided Image Colorization with Diffusion Models. arXiv preprint arXiv:2308.01655 (2023).

[43]

Tsung-Yi Lin, Michael Maire, Serge Belongie, James Hays, Pietro Perona, Deva Ramanan, Piotr Doll?r, and C Lawrence Zitnick. 2014. Microsoft coco: Common objects in context. In Computer Vision?ECCV 2014: 13th European Conference, Zurich, Switzerland, September 6-12, 2014, Proceedings, Part V 13. Springer, 740?755.

[44]

Hanyuan Liu, Minshan Xie, Jinbo Xing, Chengze Li, and Tien-Tsin Wong. 2023a. Video Colorization with Pre-trained Text-to-Image Diffusion Models. arXiv preprint arXiv:2306.01732 (2023).

[45]

Hanyuan Liu, Jinbo Xing, Minshan Xie, Chengze Li, and Tien-Tsin Wong. 2023b. Improved Diffusion-based Image Colorization via Piggybacked Models. arXiv preprint arXiv:2304.11105 (2023).

[46]

Yihao Liu, Hengyuan Zhao, Kelvin C. K. Chan, Xintao Wang, Chen Change Loy, Yu Qiao, and Chao Dong. 2021. Temporally Consistent Video Colorization with Deep Feature Propagation and Self-regularization Learning. arxiv:2110.04562 [cs.CV]

[47]

Grace Luo, Lisa Dunlap, Dong Huk Park, Aleksander Holynski, and Trevor Darrell. 2023. Diffusion Hyperfeatures: Searching Through Time and Space for Semantic Correspondence. arXiv preprint arXiv:2305.14334 (2023).

[48]

Varun Manjunatha, Mohit Iyyer, Jordan Boyd-Graber, and Larry Davis. 2018. Learning to color from language. arXiv preprint arXiv:1804.06026 (2018).

[49]

Ron Mokady, Amir Hertz, Kfir Aberman, Yael Pritch, and Daniel Cohen-Or. 2023. Null-text inversion for editing real images using guided diffusion models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 6038?6047.

[50]

Dustin Podell, Zion English, Kyle Lacey, Andreas Blattmann, Tim Dockhorn, Jonas M?ller, Joe Penna, and Robin Rombach. 2023. Sdxl: Improving latent diffusion models for high-resolution image synthesis. arXiv preprint arXiv:2307.01952 (2023).

[51]

Alec Radford, Jong Wook Kim, Chris Hallacy, Aditya Ramesh, Gabriel Goh, Sandhini Agarwal, Girish Sastry, Amanda Askell, Pamela Mishkin, Jack Clark, 2021. Learning transferable visual models from natural language supervision. In International conference on machine learning. PMLR, 8748?8763.

[52]

Robin Rombach, Andreas Blattmann, Dominik Lorenz, Patrick Esser, and Bj?rn Ommer. 2022. High-resolution image synthesis with latent diffusion models. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. 10684?10695.

[53]

Chitwan Saharia, William Chan, Huiwen Chang, Chris Lee, Jonathan Ho, Tim Salimans, David Fleet, and Mohammad Norouzi. 2022. Palette: Image-to-image diffusion models. In ACM SIGGRAPH 2022 Conference Proceedings. 1?10.

[54]

Saurabh Saxena, Charles Herrmann, Junhwa Hur, Abhishek Kar, Mohammad Norouzi, Deqing Sun, and David J Fleet. 2023a. The Surprising Effectiveness of Diffusion Models for Optical Flow and Monocular Depth Estimation. arXiv preprint arXiv:2306.01923 (2023).

[55]

Saurabh Saxena, Abhishek Kar, Mohammad Norouzi, and David J Fleet. 2023b. Monocular depth estimation using diffusion models. arXiv preprint arXiv:2302.14816 (2023).

[56]

Hamid R Sheikh and Alan C Bovik. 2006. Image information and visual quality. IEEE Transactions on image processing 15, 2 (2006), 430?444.

[57]

Min Shi, Jia-Qi Zhang, Shu-Yu Chen, Lin Gao, Yu-Kun Lai, and Fang-Lue Zhang. 2023. Reference-based deep line art video colorization. IEEE Transactions on Visualization & Computer Graphics 29, 06 (2023), 2965?2979.

[58]

Jiaming Song, Chenlin Meng, and Stefano Ermon. 2020. Denoising diffusion implicit models. arXiv preprint arXiv:2010.02502 (2020).

[59]

StabilityAI. 2022. Stable Diffusion v2.1. https://stability.ai/news/stablediffusion2-1-release7-dec-2022

[60]

Jheng-Wei Su, Hung-Kuo Chu, and Jia-Bin Huang. 2020. Instance-aware image colorization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 7968?7977.

[61]

Luming Tang, Menglin Jia, Qianqian Wang, Cheng Perng Phoo, and Bharath Hariharan. 2023. Emergent Correspondence from Image Diffusion. arXiv preprint arXiv:2306.03881 (2023).

[62]

Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N Gomez, ?ukasz Kaiser, and Illia Polosukhin. 2017. Attention is all you need. Advances in neural information processing systems 30 (2017).

[63]

Patricia Vitoria, Lara Raad, and Coloma Ballester. 2020. Chromagan: Adversarial picture colorization with semantic class distribution. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision. 2445?2454.

[64]

Hanzhang Wang, Deming Zhai, Xianming Liu, Junjun Jiang, and Wen Gao. 2023. Unsupervised deep exemplar colorization via pyramid dual non-local attention. IEEE Transactions on Image Processing (2023).

[65]

Zhou Wang and Alan C Bovik. 2002. A universal image quality index. IEEE signal processing letters 9, 3 (2002), 81?84.

[66]

Tomihisa Welsh, Michael Ashikhmin, and Klaus Mueller. 2002. Transferring color to greyscale images. In Proceedings of the 29th annual conference on Computer graphics and interactive techniques. 277?280.

[67]

Shuchen Weng, Jimeng Sun, Yu Li, Si Li, and Boxin Shi. 2022a. CT 2: Colorization transformer via color tokens. In European Conference on Computer Vision. Springer, 1?16.

[68]

Shuchen Weng, Hao Wu, Zheng Chang, Jiajun Tang, Si Li, and Boxin Shi. 2022b. L-code: Language-based colorization using color-object decoupled conditions. In Proceedings of the AAAI Conference on Artificial Intelligence, Vol. 36. 2677?2684.

[69]

Yanze Wu, Xintao Wang, Yu Li, Honglun Zhang, Xun Zhao, and Ying Shan. 2021. Towards vivid and diverse image colorization with generative color prior. In Proceedings of the IEEE/CVF international conference on computer vision. 14377?14386.

[70]

Menghan Xia, Wenbo Hu, Tien-Tsin Wong, and Jue Wang. 2022. Disentangled Image Colorization via Global Anchors. ACM Transactions on Graphics (TOG) 41, 6 (2022), 204:1?204:13.

[71]

Yi Xiao, Peiyao Zhou, Yan Zheng, and Chi-Sing Leung. 2019. Interactive deep colorization using simultaneous global and local inputs. In ICASSP 2019-2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). IEEE, 1887?1891.

[72]

Zhongyou Xu, Tingting Wang, Faming Fang, Yun Sheng, and Guixu Zhang. 2020. Stylization-based architecture for fast deep exemplar colorization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 9363?9372.

[73]

Dingkun Yan, Ryogo Ito, Ryo Moriai, and Suguru Saito. 2023. Two-Step Training: Adjustable Sketch Colourization via Reference Image and Text Tag. In Computer Graphics Forum. Wiley Online Library.

[74]

Yixin Yang, Zhongzheng Peng, Xiaoyu Du, Zhulin Tao, Jinhui Tang, and Jinshan Pan. 2022. BiSTNet: Semantic Image Prior Guided Bidirectional Temporal Feature Fusion for Deep Exemplar-based Video Colorization. arXiv preprint arXiv:2212.02268 (2022).

[75]

Wang Yin, Peng Lu, Zhaoran Zhao, and Xujun Peng. 2021. Yes,” Attention Is All You Need”, for Exemplar based Colorization. In Proceedings of the 29th ACM international conference on multimedia. 2243?2251.

[76]

Jooyeol Yun, Sanghyeon Lee, Minho Park, and Jaegul Choo. 2023. iColoriT: Towards Propagating Local Hints to the Right Region in Interactive Colorization by Leveraging Vision Transformer. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision. 1787?1796.

[77]

Bo Zhang, Mingming He, Jing Liao, Pedro V Sander, Lu Yuan, Amine Bermak, and Dong Chen. 2019. Deep exemplar-based video colorization. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. 8052?8061.

[78]

Lvmin Zhang, Anyi Rao, and Maneesh Agrawala. 2023. Adding conditional control to text-to-image diffusion models. In Proceedings of the IEEE/CVF International Conference on Computer Vision. 3836?3847.

[79]

Richard Zhang, Phillip Isola, and Alexei A Efros. 2016. Colorful image colorization. In Computer Vision?ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, October 11-14, 2016, Proceedings, Part III 14. Springer, 649?666.

[80]

Richard Zhang, Phillip Isola, Alexei A Efros, Eli Shechtman, and Oliver Wang. 2018. The Unreasonable Effectiveness of Deep Features as a Perceptual Metric. In CVPR.

[81]

Richard Zhang, Jun-Yan Zhu, Phillip Isola, Xinyang Geng, Angela S Lin, Tianhe Yu, and Alexei A Efros. 2017. Real-time user-guided image colorization with learned deep priors. arXiv preprint arXiv:1705.02999 (2017).

[82]

Jiaojiao Zhao, Jungong Han, Ling Shao, and Cees GM Snoek. 2020. Pixelated semantic colorization. International Journal of Computer Vision 128 (2020), 818?834.

[83]

Yuzhi Zhao, Lai-Man Po, Wing Yin Yu, Yasar Abbas Ur Rehman, Mengyang Liu, Yujia Zhang, and Weifeng Ou. 2022. VCGAN: video colorization with hybrid generative adversarial network. IEEE Transactions on Multimedia (2022).