“Bare hand interaction in tabletop augmented reality” by Fernandes and Fernández

Conference:

Type(s):

Title:

- Bare hand interaction in tabletop augmented reality

Presenter(s)/Author(s):

- Fernandes
- Fernández

Abstract:

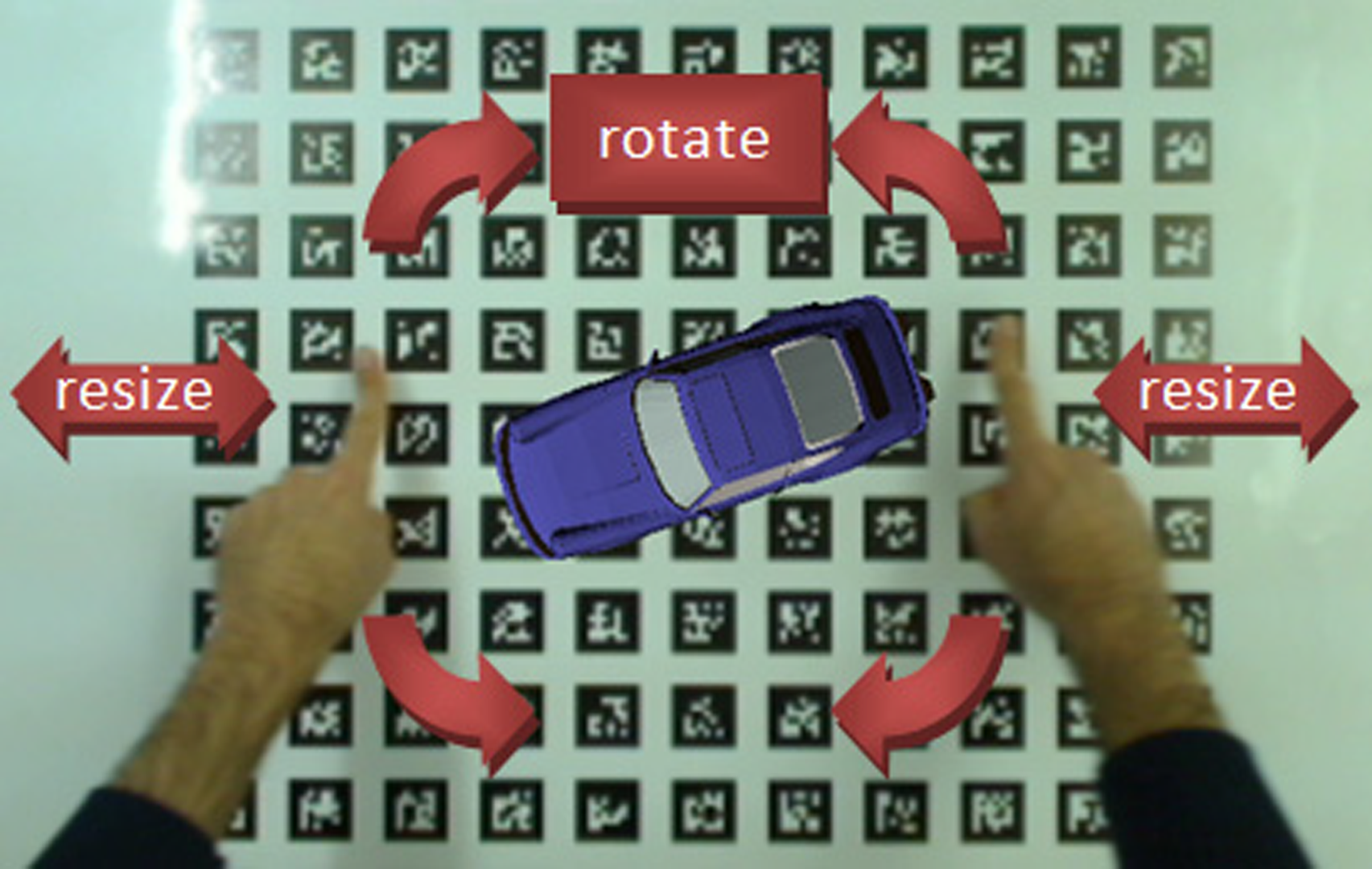

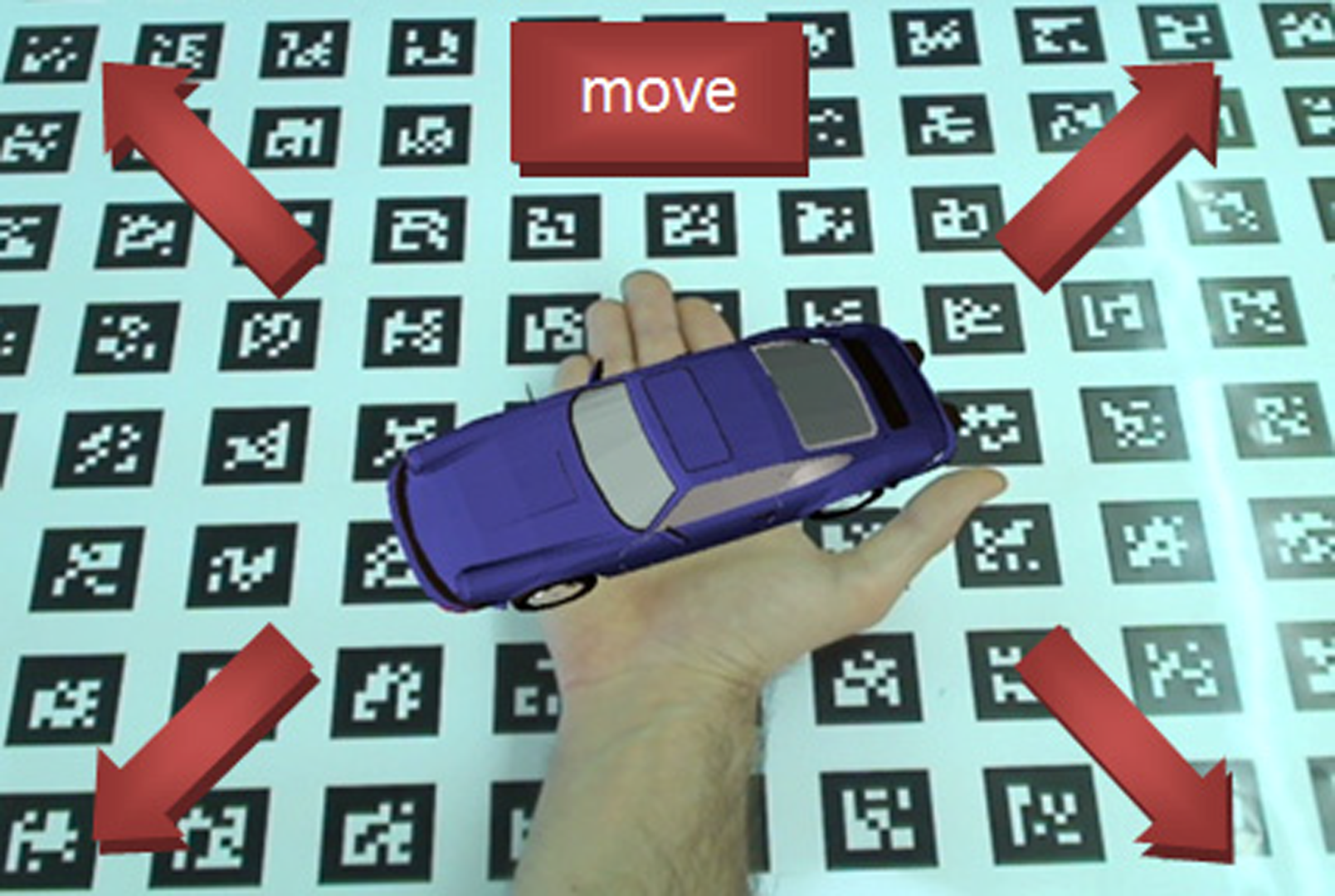

Augmented Reality (AR) techniques have been applied in many application areas; however, the best way to interact with AR content remains an open research question. Since hands are our main means of interacting with objects in real life, it would be natural for AR interfaces to allow bare-hand interaction with virtual objects. We present a system that tracks the 2D position of the user’s hands on a tabletop surface, allowing the user to move, rotate, and resize virtual objects on that surface. Our implementation is based on a computer vision tracking system that processes the video stream of a single USB camera.
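The abstract does not say how tracked hand positions are mapped to object manipulation. As an illustration only, the sketch below assumes a common two-point scheme: the midpoint between the hands drives translation, the angle of the line joining them drives rotation, and the distance between them drives scale. The function name `two_hand_transform` and its parameters are hypothetical and are not taken from the authors' system.

```python
import math


def two_hand_transform(prev_left, prev_right, cur_left, cur_right):
    """Estimate (dx, dy, angle_rad, scale) between two frames.

    Each argument is an (x, y) tuple giving a hand's 2D position on the
    tabletop. This is an assumed mapping, not the authors' method.
    """
    # Translation: how far the midpoint between the hands has moved.
    prev_mid = ((prev_left[0] + prev_right[0]) / 2.0,
                (prev_left[1] + prev_right[1]) / 2.0)
    cur_mid = ((cur_left[0] + cur_right[0]) / 2.0,
               (cur_left[1] + cur_right[1]) / 2.0)
    dx = cur_mid[0] - prev_mid[0]
    dy = cur_mid[1] - prev_mid[1]

    # Vector from the left hand to the right hand in each frame.
    pvx, pvy = prev_right[0] - prev_left[0], prev_right[1] - prev_left[1]
    cvx, cvy = cur_right[0] - cur_left[0], cur_right[1] - cur_left[1]

    prev_len = math.hypot(pvx, pvy)
    cur_len = math.hypot(cvx, cvy)
    if prev_len == 0.0 or cur_len == 0.0:
        raise ValueError("hands must be at distinct positions")

    # Rotation: change in the direction of the hand-to-hand vector,
    # wrapped into the range [-pi, pi].
    angle = math.atan2(cvy, cvx) - math.atan2(pvy, pvx)
    angle = math.atan2(math.sin(angle), math.cos(angle))

    # Scale: ratio of the current hand separation to the previous one.
    scale = cur_len / prev_len

    return dx, dy, angle, scale
```

Under this assumed mapping, moving both hands together translates the object, turning them around their midpoint rotates it, and spreading them apart enlarges it. A real system would also need to smooth the tracked positions, since per-frame jitter in a camera-based tracker feeds directly into the angle and scale estimates.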

References:

1. Bradski, G. and Kaehler, A. 2008. Learning OpenCV: Computer vision with the OpenCV library. O’Reilly Media.

2. Fiala, M. 2005. ARTag, a fiducial marker system using digital techniques. IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR 2005), vol. 2, 590–596.

3. Freund, Y. and Schapire, R. 1996. Experiments with a New Boosting Algorithm. International Conference on Machine Learning, 148–156.

4. Song, P., Winkler, S., Gilani, S. and Zhou, Z. 2007. Vision-Based Projected Tabletop Interface for Finger Interactions. In Human-Computer Interaction, 49–58.