“LipMouse: novel multimodal human-computer interaction interface” by Dalka and Czyzewski

Title:

- LipMouse: novel multimodal human-computer interaction interface

Presenter(s)/Author(s):

- Dalka and Czyzewski

Abstract:

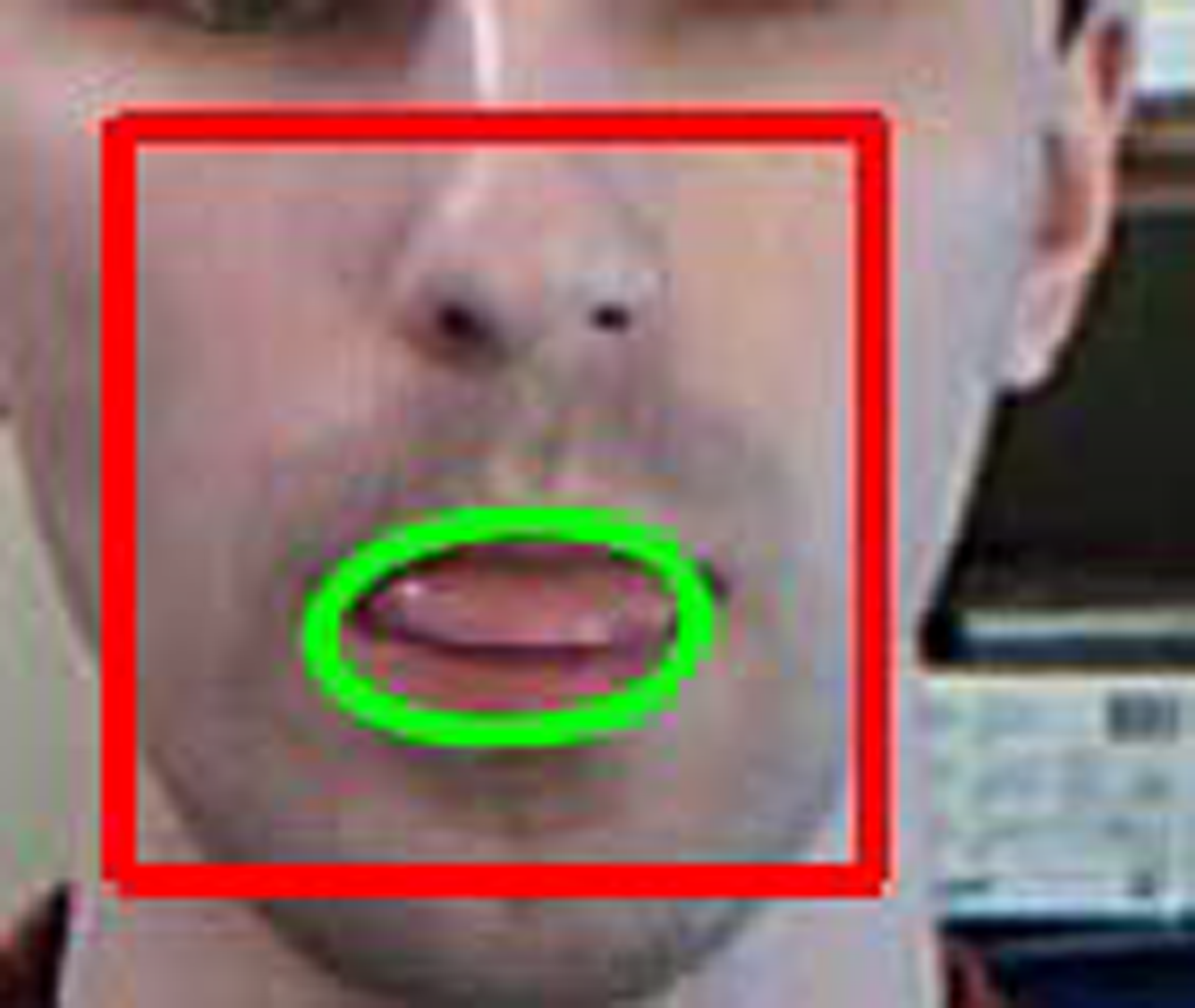

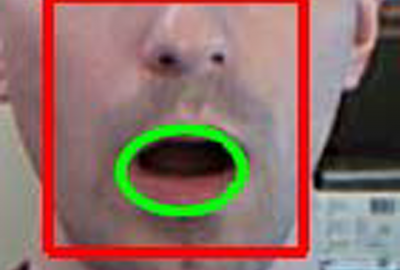

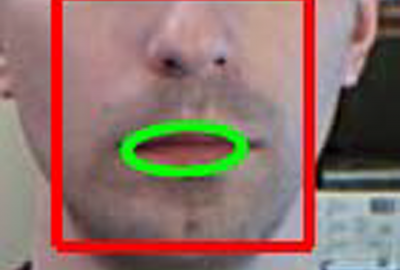

The main goal of any HCI application is to make working with a computer as natural, intuitive, and effective as possible. One major application area for new human-computer interfaces is enabling people with permanent or temporary motor disabilities to use computers efficiently. Such solutions fall into two main types [Aggarwal and Cai 1999]. The first group relies on devices mounted directly on the user’s body. Applications in the second group are contactless and use only remote sensors, which makes them much more comfortable for the user. Among contactless solutions, vision-based human-computer interfaces are the most promising. They use cameras and image processing algorithms to detect signs and gestures made by the user and to execute configured actions. The most common vision-based applications employ eye and hand tracking [Shin and Chun 2007].

References:

1. Aggarwal J. K., Cai Q. 1999. Human Motion Analysis: A Review. Computer Vision and Image Understanding, vol. 73, no. 3, pp. 428–440, March.

2. Leung S., Wang S., Lau W. 2004. Lip image segmentation using fuzzy clustering incorporating an elliptic shape function. IEEE Trans. on Image Processing, vol. 13, no. 1, pp. 51–62, Jan.

3. Shin G., Chun J. 2007. Vision-based Multimodal Human Computer Interface based on Parallel Tracking of Eye and Hand Motion. Int. Conf. on Conv. Inf. Techn., pp. 2443–2448.

4. Viola P., Jones M. 2001. Rapid Object Detection using a Boosted Cascade of Simple Features. IEEE CVPR.