“Neural Face Rigging for Animating and Retargeting Facial Meshes in the Wild” by Qin, Saito, Aigerman, Groueix and Komura

Title:

- Neural Face Rigging for Animating and Retargeting Facial Meshes in the Wild

Session/Category Title: Making Faces With Neural Avatars

Abstract:

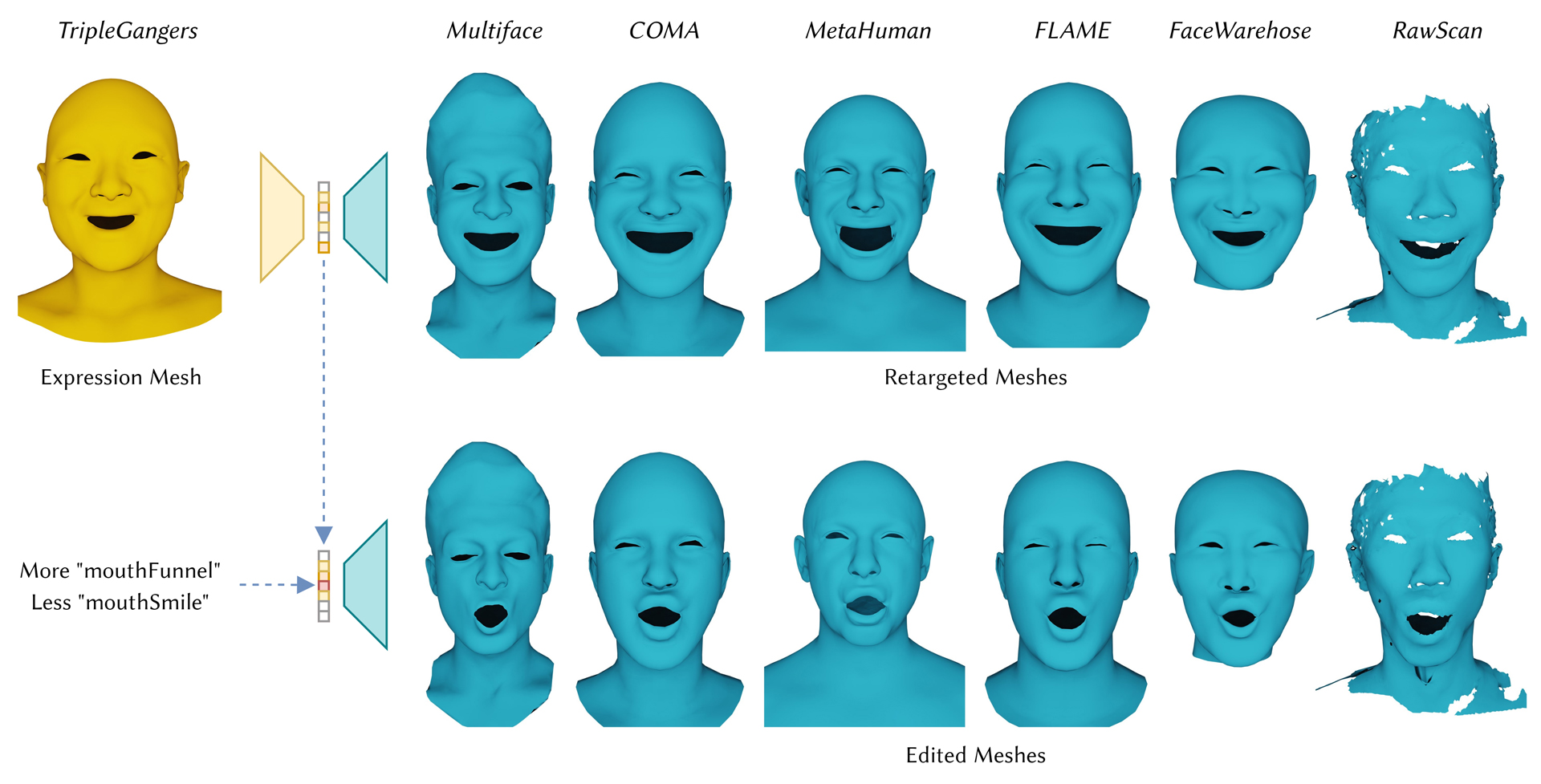

We propose an end-to-end deep-learning approach for automatic rigging and retargeting of 3D models of human faces in the wild. Our approach, called Neural Face Rigging (NFR), has three key properties: (i) NFR’s expression space maintains human-interpretable editing parameters for artistic control; (ii) NFR is readily applicable to arbitrary facial meshes with different connectivity and expressions; and (iii) NFR can encode and produce fine-grained details of complex expressions performed by arbitrary subjects. To the best of our knowledge, NFR is the first approach to provide realistic and controllable deformations of in-the-wild facial meshes without the manual creation of blendshapes or correspondences. We design a deformation autoencoder and train it with a multi-dataset training scheme that benefits from the unique advantages of two data sources: a linear 3D morphable model (3DMM) with interpretable control parameters, as in the Facial Action Coding System (FACS), and 4D captures of real faces with fine-grained details. Through various experiments, we show NFR’s ability to automatically produce realistic and accurate facial deformations across a wide range of existing datasets and noisy in-the-wild facial scans, while providing artist-controlled, editable parameters.
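For readers unfamiliar with the blendshapes and linear 3DMM controls mentioned in the abstract, a generic linear blendshape model can be sketched as follows. This is a minimal illustration of the classical formulation (posed vertices = neutral vertices plus a weighted sum of per-control offsets), not the NFR autoencoder. The function `blend`, the control names `jaw_open` and `brow_raise`, and the two-vertex toy mesh are all hypothetical.

```python
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]


def blend(
    neutral: List[Vec3],
    deltas: Dict[str, List[Vec3]],
    weights: Dict[str, float],
) -> List[Vec3]:
    """Generic linear blendshape model: v = neutral + sum_k w_k * delta_k.

    Illustrative only; this is not the NFR network from the paper.
    """
    posed = [list(v) for v in neutral]
    for name, weight in weights.items():
        # Each control stores one offset per vertex of the neutral mesh.
        for i, offset in enumerate(deltas[name]):
            for axis in range(3):
                posed[i][axis] += weight * offset[axis]
    return [(v[0], v[1], v[2]) for v in posed]


# Toy "mesh" with two vertices and two hypothetical FACS-style controls.
NEUTRAL: List[Vec3] = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
DELTAS: Dict[str, List[Vec3]] = {
    "jaw_open": [(0.0, -1.0, 0.0), (0.0, -1.0, 0.0)],
    "brow_raise": [(0.0, 0.0, 0.0), (0.0, 0.5, 0.0)],
}

posed = blend(NEUTRAL, DELTAS, {"jaw_open": 0.5, "brow_raise": 1.0})
```

Each weight is an interpretable control of the kind an artist can edit directly. A model of this form requires per-mesh offsets in a fixed vertex correspondence, which is the manual blendshape and correspondence work the abstract says NFR avoids.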
