“ManipNet: neural manipulation synthesis with a hand-object spatial representation” by Zhang, Ye, Shiratori and Komura

Abstract:

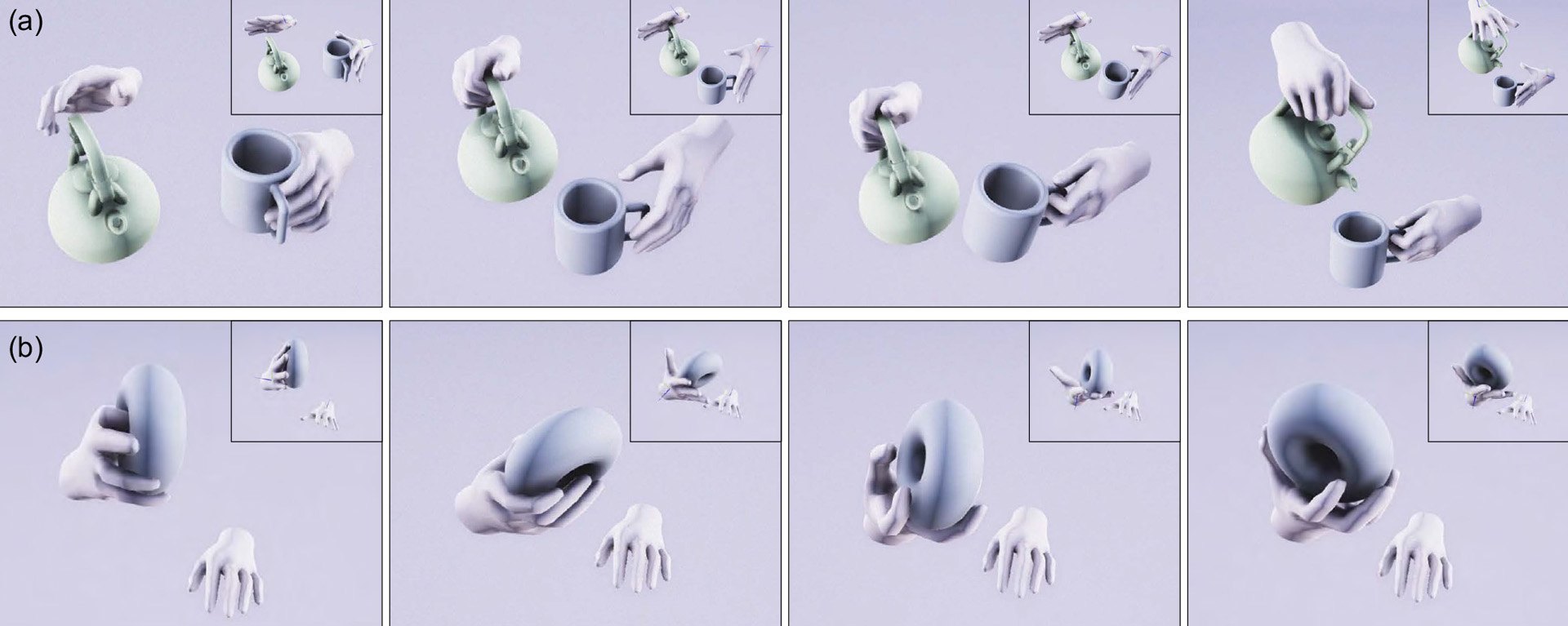

Natural hand manipulations exhibit complex finger maneuvers adaptive to object shapes and the tasks at hand. Learning dexterous manipulation from data in a brute-force way would require a prohibitive number of examples to effectively cover the combinatorial space of 3D shapes and activities. In this paper, we propose a hand-object spatial representation that can achieve generalization from limited data. Our representation combines the global object shape as voxel occupancies with local geometric details as samples of closest distances. This representation is used by a neural network to regress finger motions from input trajectories of wrists and objects. Specifically, we provide the network with the current finger pose, past and future trajectories, and the spatial representations extracted from these trajectories. The network then acts as an autoregressive model, predicting the finger pose for the next frame. With a carefully chosen hand-centric coordinate system, we can handle single-handed and two-handed motions in a unified framework. Learning from a small number of primitive shapes and kitchenware objects, the network is able to synthesize a variety of finger gaits for grasping, in-hand manipulation, and bimanual object handling on a rich set of novel shapes and functional tasks. We also present a live demo of manipulating virtual objects in real time using a simple physical prop. Our system is useful for offline animation or for real-time applications that can tolerate a small delay.
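The abstract describes the network inputs only at a high level. The following is a minimal sketch of the general idea, not the paper's implementation, and it rests on several loud assumptions: the object is given as a point cloud sampled on its surface; the wrist frame is a position plus a 3x3 rotation matrix; the "global shape" is a coarse occupancy grid centred at the wrist; and the "local detail" is the distance from a few hand sample points to the nearest object point. The grid size, extent, and every function name (`to_hand_frame`, `voxel_occupancy`, `closest_distances`, `spatial_features`, `rollout`) are hypothetical, and the `model` passed to `rollout` is a stand-in for the trained network.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def to_hand_frame(p: Vec3, wrist_pos: Vec3, wrist_rot: Sequence[Sequence[float]]) -> Vec3:
    """Express a world-space point in a hand-centric frame.

    Assumption: wrist_rot is a 3x3 rotation matrix whose columns are the hand
    axes in world coordinates, so local = R^T (p - wrist_pos).
    """
    d = [p[i] - wrist_pos[i] for i in range(3)]
    x, y, z = (sum(wrist_rot[r][c] * d[r] for r in range(3)) for c in range(3))
    return (x, y, z)


def voxel_occupancy(points_local: List[Vec3], half_extent: float, res: int) -> List[int]:
    """Coarse global shape: a flattened res^3 grid centred on the wrist.

    A cell is 1 if any object sample point falls inside it; points outside
    the cube [-half_extent, half_extent)^3 are ignored.
    """
    cell = 2.0 * half_extent / res
    grid = [0] * (res ** 3)
    for point in points_local:
        i, j, k = (math.floor((c + half_extent) / cell) for c in point)
        if 0 <= i < res and 0 <= j < res and 0 <= k < res:
            grid[(i * res + j) * res + k] = 1
    return grid


def closest_distances(hand_points: List[Vec3], object_points: List[Vec3]) -> List[float]:
    """Local detail: distance from each hand sample point to the nearest object point.

    Brute force, O(len(hand_points) * len(object_points)); a k-d tree is the
    usual choice for large point clouds.
    """
    return [min(math.dist(h, o) for o in object_points) for h in hand_points]


def spatial_features(object_points_world: List[Vec3], wrist_pos: Vec3,
                     wrist_rot: Sequence[Sequence[float]], hand_points_local: List[Vec3],
                     half_extent: float = 0.15, res: int = 4) -> List[float]:
    """Concatenate global (occupancy) and local (distance) features, both in the hand frame."""
    local = [to_hand_frame(p, wrist_pos, wrist_rot) for p in object_points_world]
    occupancy = [float(v) for v in voxel_occupancy(local, half_extent, res)]
    return occupancy + closest_distances(hand_points_local, local)


def rollout(model: Callable, initial_pose, per_frame_inputs: Sequence) -> list:
    """Autoregressive synthesis: each predicted pose is fed back as the next input pose."""
    pose = initial_pose
    poses = []
    for inputs in per_frame_inputs:
        # inputs would hold the wrist/object trajectories and spatial features for this frame
        pose = model(pose, inputs)
        poses.append(pose)
    return poses
```

Expressing everything in the wrist frame makes the features independent of where the hand is in the world, which is one plausible reading of why a hand-centric coordinate system lets the same network serve either hand; for bimanual motion, the features would presumably be computed once per hand, each in its own wrist frame.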
