“VMesh: Hybrid Volume-Mesh Representation for Efficient View Synthesis” by Guo, Cao, Wang, Shan, Zhang, et al. …

Title:

- VMesh: Hybrid Volume-Mesh Representation for Efficient View Synthesis

Session/Category Title:

- View Synthesis

Abstract:

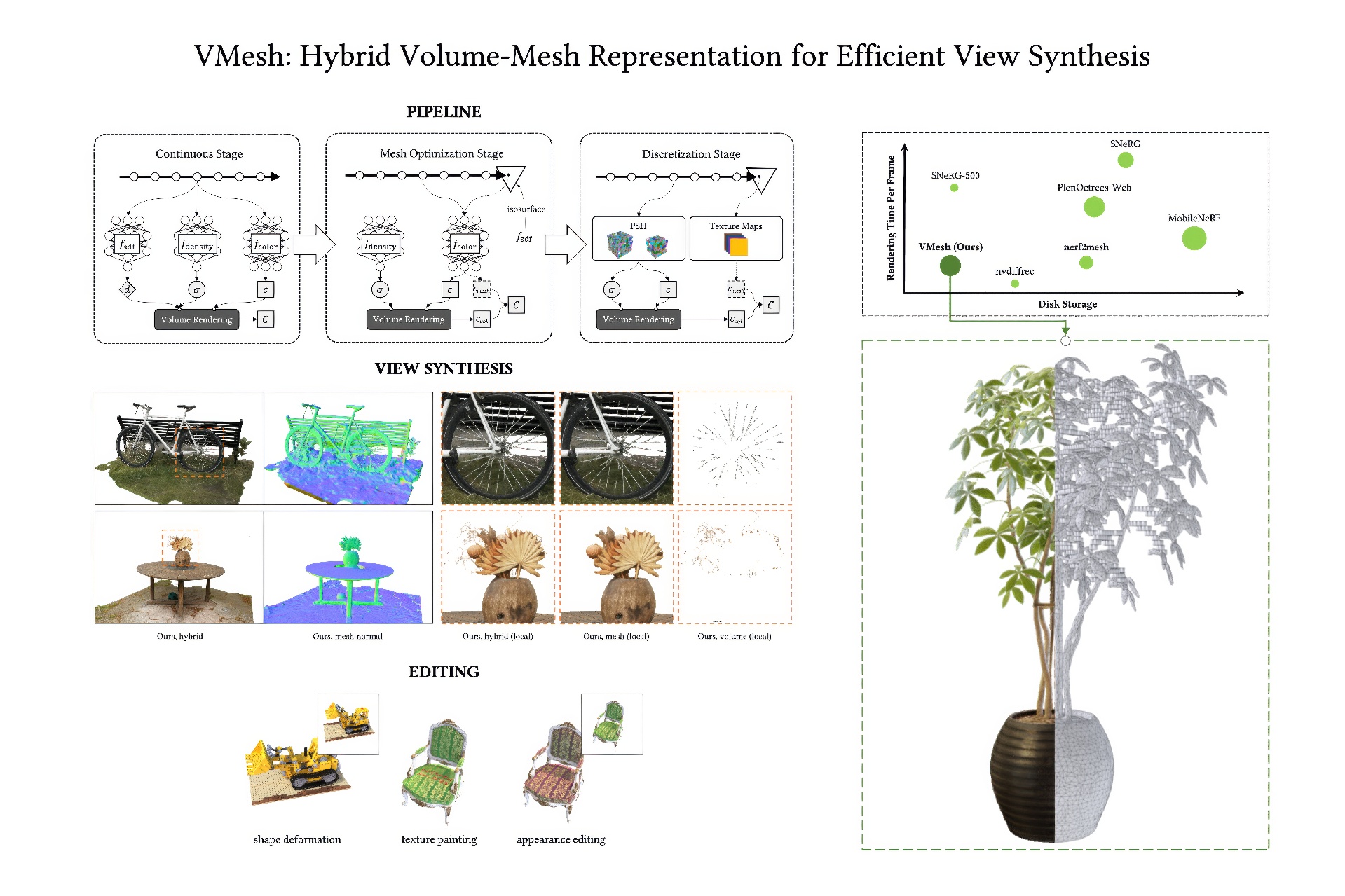

With the emergence of neural radiance fields (NeRFs), view synthesis quality has reached an unprecedented level. Compared to traditional mesh-based assets, this volumetric representation is more powerful in expressing scene geometry, but it inevitably suffers from high rendering costs and is hard to use in downstream processes such as editing, which makes it difficult to combine with the existing graphics pipeline. In this paper, we present VMesh, a hybrid volume-mesh representation that depicts an object with a textured mesh along with an auxiliary sparse volume. VMesh retains the advantages of mesh-based assets, such as efficient rendering and compact storage, while also incorporating the volumetric counterpart's ability to represent subtle geometric structures. VMesh can be obtained from multi-view images of an object and renders at 2K resolution and 60 FPS on common consumer devices with high fidelity, opening new opportunities for real-time immersive applications.
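The abstract does not say how the textured mesh and the auxiliary sparse volume are combined at render time. As a rough illustration only, the sketch below assumes standard front-to-back alpha compositing: volume samples in front of the mesh are blended along a ray, and the remaining light is assigned to the opaque mesh surface. The function `composite_ray`, its parameters, and the tuple layouts are hypothetical names chosen for this example and are not taken from the paper.

```python
from typing import List, Optional, Tuple

RGB = Tuple[float, float, float]
VolumeSample = Tuple[float, float, RGB]  # (depth along ray, alpha in [0, 1], color)
MeshHit = Tuple[float, RGB]              # (depth along ray, color of opaque surface)


def composite_ray(
    volume_samples: List[VolumeSample],
    mesh_hit: Optional[MeshHit],
    background: RGB = (0.0, 0.0, 0.0),
) -> RGB:
    """Blend sparse volume samples in front of an opaque mesh surface.

    Assumption: generic front-to-back alpha compositing, not the
    paper's actual renderer.
    """
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0  # fraction of light still unblocked along the ray
    mesh_depth = mesh_hit[0] if mesh_hit is not None else float("inf")

    # Visit samples from nearest to farthest.
    for depth, alpha, rgb in sorted(volume_samples, key=lambda s: s[0]):
        if depth >= mesh_depth:
            break  # samples behind the opaque mesh are hidden
        weight = transmittance * alpha
        for i in range(3):
            color[i] += weight * rgb[i]
        transmittance *= 1.0 - alpha

    # Remaining light reaches the mesh, or the background if the ray misses it.
    final_rgb = mesh_hit[1] if mesh_hit is not None else background
    for i in range(3):
        color[i] += transmittance * final_rgb[i]
    return (color[0], color[1], color[2])
```

For example, a half-transparent red volume sample in front of a blue mesh surface gives an even mix of the two colors, while any sample lying behind the mesh is ignored. On a ray with no volume samples the result is just the mesh color, so under this assumption such rays cost no more than ordinary rasterization.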
