“Trainable videorealistic speech animation”

Conference:

Type(s):

Title:

- Trainable videorealistic speech animation

Presenter(s)/Author(s):

Abstract:

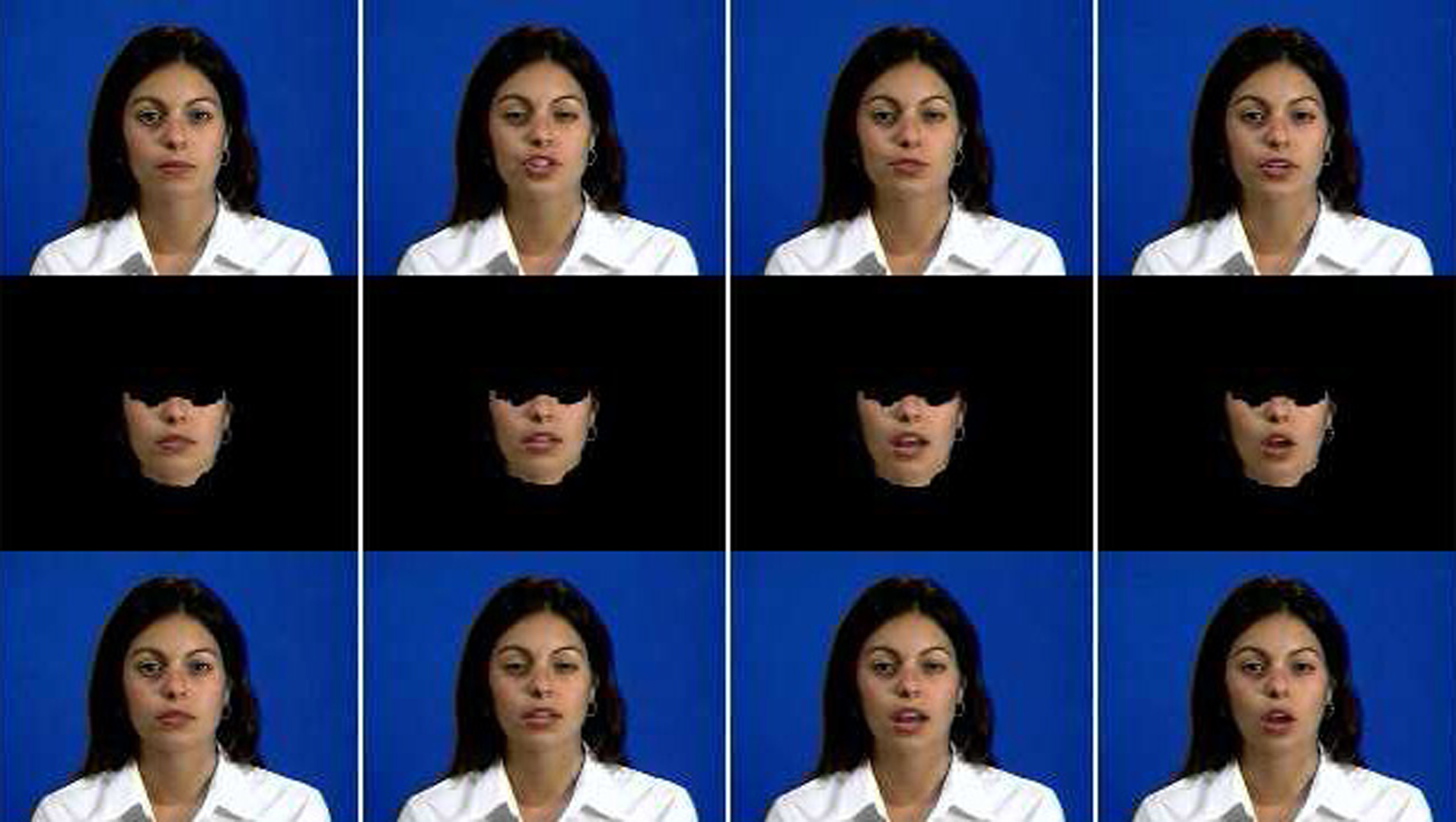

We describe how to create with machine learning techniques a generative, speech animation module. A human subject is first recorded using a videocamera as he/she utters a predetermined speech corpus. After processing the corpus automatically, a visual speech module is learned from the data that is capable of synthesizing the human subject’s mouth uttering entirely novel utterances that were not recorded in the original video. The synthesized utterance is re-composited onto a background sequence which contains natural head and eye movement. The final output is videorealistic in the sense that it looks like a video camera recording of the subject. At run time, the input to the system can be either real audio sequences or synthetic audio produced by a text-to-speech system, as long as they have been phonetically aligned.The two key contributions of this paper are 1) a variant of the multidimensional morphable model (MMM) to synthesize new, previously unseen mouth configurations from a small set of mouth image prototypes; and 2) a trajectory synthesis technique based on regularization, which is automatically trained from the recorded video corpus, and which is capable of synthesizing trajectories in MMM space corresponding to any desired utterance.

References:

1. BARRON, J. L., FLEET, D. J., AND BEAUCHEMIN, S. S. 1994. Performance of optical flow techniques. International Journal of Computer Vision 12, 1, 43-77. Google Scholar

2. BEIER, T., AND NEELY, S. 1992. Feature-based image metamorphosis. In Computer Graphics (Proceedings of ACM SIGGRAPH 92), vol. 26(2), ACM, 35-42. Google Scholar

3. BERGEN, J., ANANDAN, P., HANNA, K., AND HINGORANI, R. 1992. Hierarchical model-based motion estimation. In Proceedings of the European Conference on Computer Vision, 237-252. Google Scholar

4. BEYMER, D., AND POGGIO, T. 1996. Image representations for visual learning. Science 272, 1905-1909.Google Scholar

5. BEYMER, D., SHASHUA, A., AND POGGIO, T. 1993. Example based image analysis and synthesis. Tech. Rep. 1431, MIT AI Lab. Google Scholar

6. BISHOP, C. M. 1995. Neural Networks for Pattern Recognition. Clarendon Press, Oxford. Google Scholar

7. BLACK, A., AND TAYLOR, P. 1997. The Festival Speech Synthesis System. University of Edinburgh.Google Scholar

8. BLACK, M., FLEET, D., AND YACOOB, Y. 2000. Robustly estimating changes in image appearance. Computer Vision and Image Understanding, Special Issue on Robust Statistical Techniques in Image Understanding, 8-31. Google Scholar

9. BLANZ, V., AND VETTER, T. 1999. A morphable model for the synthesis of 3D faces. In Proceedings of SIGGRAPH 2001, ACM Press / ACM SIGGRAPH, Los Angeles, A. Rockwood, Ed., Computer Graphics Proceedings, Annual Conference Series, ACM, 187-194. Google Scholar

10. BRAND, M., AND HERTZMANN, A. 2000. Style machines. In Proceedings of SIGGRAPH 2000, ACM Press / ACM SIGGRAPH, K. Akeley, Ed., Computer Graphics Proceedings, Annual Conference Series, ACM, 183-192. Google Scholar

11. BRAND, M. 1999. Voice puppetry. In Proceedings of SIGGRAPH 1999, ACM Press / ACM SIGGRAPH, Los Angeles, A. Rockwood, Ed., Computer Graphics Proceedings, Annual Conference Series, ACM, 21-28. Google Scholar

12. BREGLER, C., COVELL, M., AND SLANEY, M. 1997. Video rewrite: Driving visual speech with audio. In Proceedings of SIGGRAPH 1997, ACM Press / ACM SIGGRAPH, Los Angeles, CA, Computer Graphics Proceedings, Annual Conference Series, ACM, 353-360. Google Scholar

13. BROOKE, N., AND SCOTT, S. 1994. Computer graphics animations of talking faces based on stochastic models. In Intl. Symposium on Speech, Image Processing, and Neural Networks.Google Scholar

14. BURT, P. J., AND ADELSON, E. H. 1983. The laplacian pyramid as a compact image code. IEEE Trans. on Communications COM-31, 4 (Apr.), 532-540.Google Scholar

15. CHEN, S. E., AND WILLIAMS, L. 1993. View interpolation for image synthesis. In Proceedings of SIGGRAPH 1993, ACM Press / ACM SIGGRAPH, Anaheim, CA, Computer Graphics Proceedings, Annual Conference Series, ACM, 279-288. Google Scholar

16. COHEN, M. M., AND MASSARO, D. W. 1993. Modeling coarticulation in synthetic visual speech. In Models and Techniques in Computer Animation, N. M. Thalmann and D. Thalmann, Eds. Springer-Verlag, Tokyo, 139-156.Google Scholar

17. COOTES, T. F., EDWARDS, G. J., AND TAYLOR, C. J. 1998. Active appearance models. In Proceedings of the European Conference on Computer Vision. Google Scholar

18. CORMEN, T. H., LEISERSON, C. E., AND RIVEST, R. L. 1989. Introduction to Algorithms. The MIT Press and McGraw-Hill Book Company. Google Scholar

19. COSATTO, E., AND GRAF, H. 1998. Sample-based synthesis of photorealistic talking heads. In Proceedings of Computer Animation ’98, 103-110. Google Scholar

20. EZZAT, T., AND POGGIO, T. 2000. Visual speech synthesis by morphing visemes. International Journal of Computer Vision 38, 45-57. Google Scholar

21. GIROSI, F., JONES, M., AND POGGIO, T. 1993. Priors, stabilizers, and basis functions: From regularization to radial, tensor, and additive splines. Tech. Rep. 1430, MIT AI Lab, June. Google Scholar

22. GUENTER, B., GRIMM, C., WOOD, D., MALVAR, H., AND PIGHIN, F. 1998. Making faces. In Proceedings of SIGGRAPH 1998, ACM Press / ACM SIGGRAPH, Orlando, FL, Computer Graphics Proceedings, Annual Conference Series, ACM, 55-66. Google Scholar

23. HORN, B. K. P., AND SCHUNCK, B. G. 1981. Determining optical flow. Artificial Intelligence 17, 185-203.Google Scholar

24. HUANG, X., ALLEVA, F., HON, H.-W., HWANG, M.-Y., LEE, K.-F., AND ROSENFELD, R. 1993. The SPHINX-II speech recognition system: an overview (http://sourceforge.net/projects/cmusphinx/). Computer Speech and Language 7, 2, 137-148.Google Scholar

25. JONES, M., AND POGGIO, T. 1998. Multidimensional morphable models: A framework for representing and maching object classes. In Proceedings of the International Conference on Computer Vision. Google Scholar

26. LEE, S. Y., CHWA, K. Y., SHIN, S. Y., AND WOLBERG, G. 1995. Image metemorphosis using snakes and free-form deformations. In Proceedings of SIGGRAPH 1995, ACM Press / ACM SIGGRAPH, vol. 29 of Computer Graphics Proceedings, Annual Conference Series, ACM, 439-448. Google Scholar

27. LEE, Y., TERZOPOULOS, D., AND WATERS, K. 1995. Realistic modeling for facial animation. In Proceedings of SIGGRAPH 1995, ACM Press / ACM SIGGRAPH, Los Angeles, California, Computer Graphics Proceedings, Annual Conference Series, ACM, 55-62. Google Scholar

28. LEE, S. Y., WOLBERG, G., AND SHIN, S. Y. 1998. Polymorph: An algorithm for morphing among multiple images. IEEE Computer Graphics Applications 18, 58-71. Google Scholar

29. LEGOFF, B., AND BENOIT, C. 1996. A text-to-audiovisual-speech synthesizer for french. In Proceedings of the International Conference on Spoken Language Processing (ICSLP).Google Scholar

30. MASUKO, T., KOBAYASHI, T., TAMURA, M., MASUBUCHI, J., AND TOKUDA, K. 1998. Text-to-visual speech synthesis based on parameter generation from hmm. In ICASSP.Google Scholar

31. MOULINES, E., AND CHARPENTIER, F. 1990. Pitch-synchronous waveform processing techniques for text-to-speech synthesis using diphones. Speech Communication 9, 453-467. Google Scholar

32. PARKE, F. I. 1974. A parametric model of human faces. PhD thesis, University of Utah. Google Scholar

33. PEARCE, A., WYVILL, B., WYVILL, G., AND HILL, D. 1986. Speech and expression: A computer solution to face animation. In Graphics Interface. Google Scholar

34. PIGHIN, F., HECKER, J., LISCHINSKI, D., SZELISKI, R., AND SALESIN, D. 1998. Synthesizing realistic facial expressions from photographs. In Proceedings of SIGGRAPH 1998, ACM Press / ACM SIGGRAPH, Orlando, FL, Computer Graphics Proceedings, Annual Conference Series, ACM, 75-84. Google Scholar

35. POGGIO, T., AND VETTER, T. 1992. Recognition and structure from one 2D model view: observations on prototypes, object classes and symmetries. Tech. Rep. 1347, Artificial Intelligence Laboratory, Massachusetts Institute of Technology. Google Scholar

36. ROWEIS, S. 1998. EM algorithms for PCA and SPCA. In Advances in Neural Information Processing Systems, The MIT Press, M. I. Jordan, M. J. Kearns, and S. A. Solla, Eds., vol. 10. Google Scholar

37. SCOTT, K., KAGELS, D., WATSON, S., ROM, H., WRIGHT, J., LEE, M., AND HUSSEY, K. 1994. Synthesis of speaker facial movement to match selected speech sequences. In Proceedings of the Fifth Australian Conference on Speech Science and Technology, vol. 2, 620-625.Google Scholar

38. SJLANDER, K., AND BESKOW, J. 2000. Wavesurfer – an open source speech tool. In Proc of ICSLP, vol. 4, 464-467.Google Scholar

39. TENENBAUM, J. B., DE SILVA, V., AND LANGFORD, J. C. 2000. A global geometric framework for nonlinear dimensionality reduction. Science 290 (Dec), 2319-2323.Google Scholar

40. TIPPING, M. E., AND BISHOP, C. M. 1999. Mixtures of probabilistic principal component analyzers. Neural Computation 11, 2, 443-482. Google Scholar

41. WAHBA, G. 1900. Splines Models for Observational Data. Series in Applied Mathematics, Vol. 59, SIAM, Philadelphia.Google Scholar

42. WATERS, K. 1987. A muscle model for animating three-dimensional facial expressions. In Computer Graphics (Proceedings of ACM SIGGRAPH 87), vol. 21(4), ACM, 17-24. Google Scholar

43. WATSON, S., WRIGHT, J., SCOTT, K., KAGELS, D., FREDA, D., AND HUSSEY, K. 1997. An advanced morphing algorithm for interpolating phoneme images to simulate speech. Jet Propulsion Laboratory, California Institute of Technology.Google Scholar

44. WOLBERG, G. 1990. Digital Image Warping. IEEE Computer Society Press, Los Alamitos, CA. Google Scholar