“Towards Motion Metamers for Foveated Rendering”

Abstract:

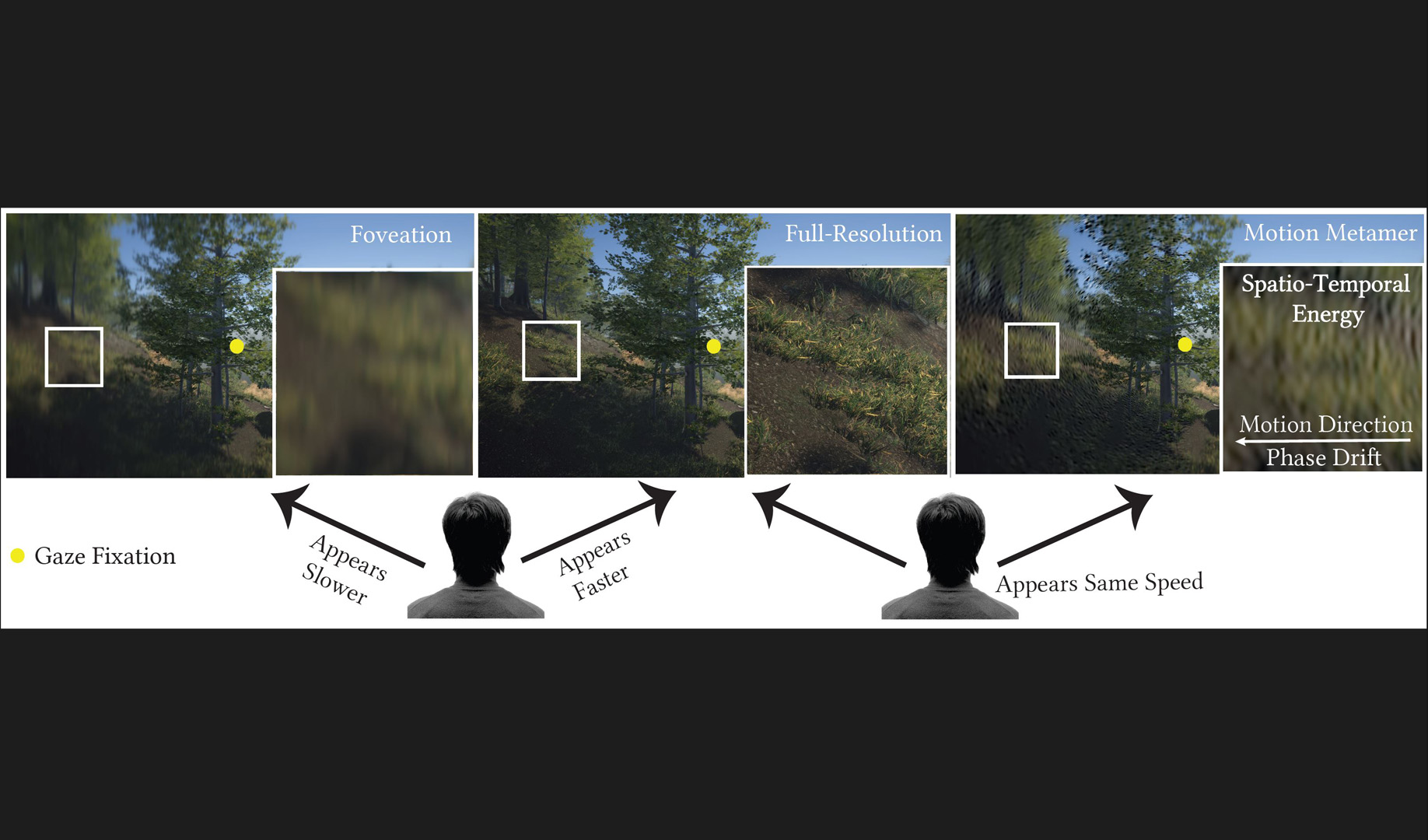

We demonstrate that foveated rendering may damage motion perception in AR/VR, leading to an underestimation of cues such as velocity. To mitigate this, we propose the concept of motion metamers: videos that are structurally different but induce the same spatial and motion perception in the human visual system.
