“The visual microphone: passive recovery of sound from video” by Davis, Rubinstein, Wadhwa, Mysore, Durand, et al. …

Title:

- The visual microphone: passive recovery of sound from video

Session/Category Title:

- Video Applications

Abstract:

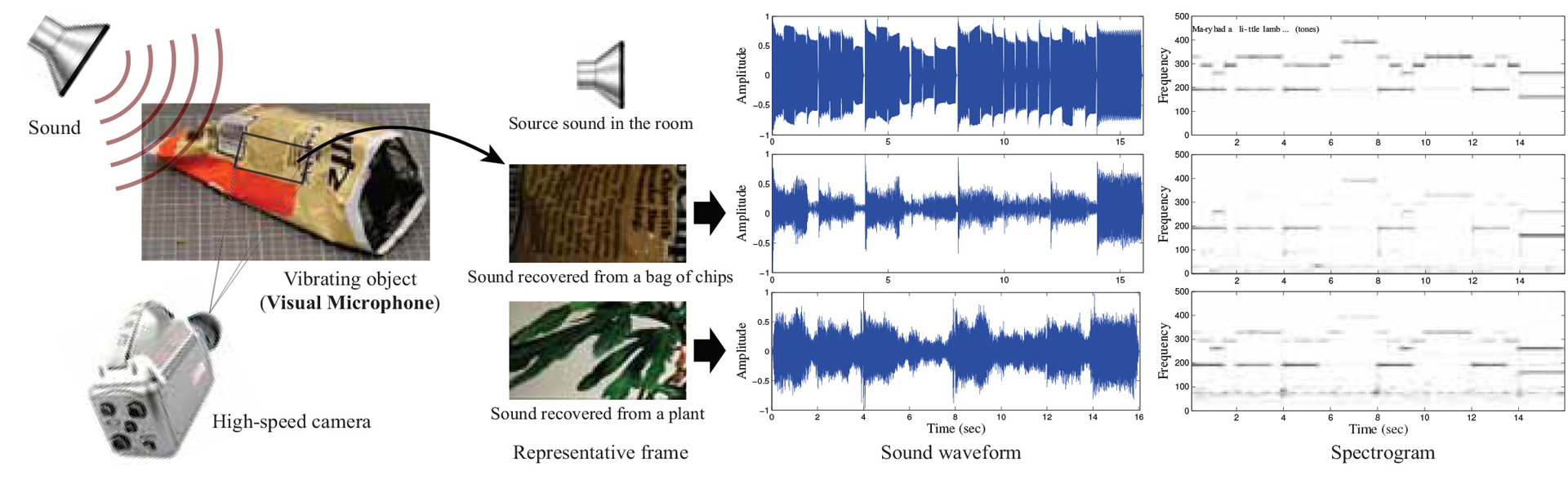

When sound hits an object, it causes small vibrations of the object’s surface. We show how, using only high-speed video of the object, we can extract those minute vibrations and partially recover the sound that produced them, allowing us to turn everyday objects—a glass of water, a potted plant, a box of tissues, or a bag of chips—into visual microphones. We recover sounds from high-speed footage of a variety of objects with different properties, and use both real and simulated data to examine some of the factors that affect our ability to visually recover sound. We evaluate the quality of recovered sounds using intelligibility and SNR metrics and provide input and recovered audio samples for direct comparison. We also explore how to leverage the rolling shutter in regular consumer cameras to recover audio from standard frame-rate videos, and use the spatial resolution of our method to visualize how sound-related vibrations vary over an object’s surface, which we can use to recover the vibration modes of an object.
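The abstract does not spell out how the minute vibrations are measured, so the sketch below only illustrates the underlying idea on synthetic data. It is not the authors' method. It swaps in a simple one-dimensional gradient-based (Lucas–Kanade-style) shift estimator, applies it to a textured "object" that moves by sub-pixel amounts in response to a tone, and scores the result with an SNR metric. The frame rate (2000 frames/s), the texture, the 0.05-pixel vibration amplitude, the 440 Hz tone and the noise level are all hypothetical values chosen for the illustration.

```python
import numpy as np

def estimate_shifts(frames: np.ndarray) -> np.ndarray:
    """Estimate each 1-D frame's sub-pixel shift relative to frame 0.

    Uses first-order brightness constancy, I_t(x) ~ I_0(x) - d_t * I_0'(x),
    and solves for d_t in the least-squares sense.
    (Illustrative estimator, not the paper's method.)
    """
    ref = frames[0]
    grad = np.gradient(ref)
    return -((frames - ref) @ grad) / np.sum(grad * grad)

def snr_db(reference: np.ndarray, estimate: np.ndarray) -> float:
    """Signal-to-noise ratio of an estimate against a reference, in dB."""
    err = reference - estimate
    return 10.0 * np.log10(np.sum(reference**2) / np.sum(err**2))

def texture(pos: np.ndarray) -> np.ndarray:
    """Hypothetical smooth surface texture sampled at (shifted) pixel positions."""
    return np.sin(2 * np.pi * pos / 32) + 0.5 * np.sin(2 * np.pi * pos / 17 + 1.0)

# Hypothetical setup: 2000 frames/s, a 440 Hz tone, vibration of at most 0.05 px.
fps, n_frames, tone_hz, amp_px = 2000, 2000, 440.0, 0.05
rng = np.random.default_rng(0)
x = np.arange(256, dtype=float)
t = np.arange(n_frames) / fps
true_disp = amp_px * np.sin(2 * np.pi * tone_hz * t)

# Each row is one frame: the texture shifted by d_t, plus simulated sensor noise.
frames = texture(x[None, :] - true_disp[:, None])
frames += rng.normal(scale=0.01, size=frames.shape)

recovered = estimate_shifts(frames)
recovered -= recovered.mean()  # drop the DC offset, as for an audio signal

snr = snr_db(true_disp, recovered)
spectrum = np.abs(np.fft.rfft(recovered))
peak_hz = np.fft.rfftfreq(n_frames, d=1.0 / fps)[np.argmax(spectrum)]
print(f"SNR: {snr:.1f} dB, dominant frequency: {peak_hz:.0f} Hz")
```

Because the motion is far smaller than a pixel, it is estimated from intensity changes weighted by the local image gradient rather than by tracking features. Averaging over many pixels is what lifts the tone above the per-pixel noise.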
