“The Chosen One: Consistent Characters in Text-to-image Diffusion Models”

Conference:

Type(s):

Title:

- The Chosen One: Consistent Characters in Text-to-image Diffusion Models

Presenter(s)/Author(s):

Abstract:

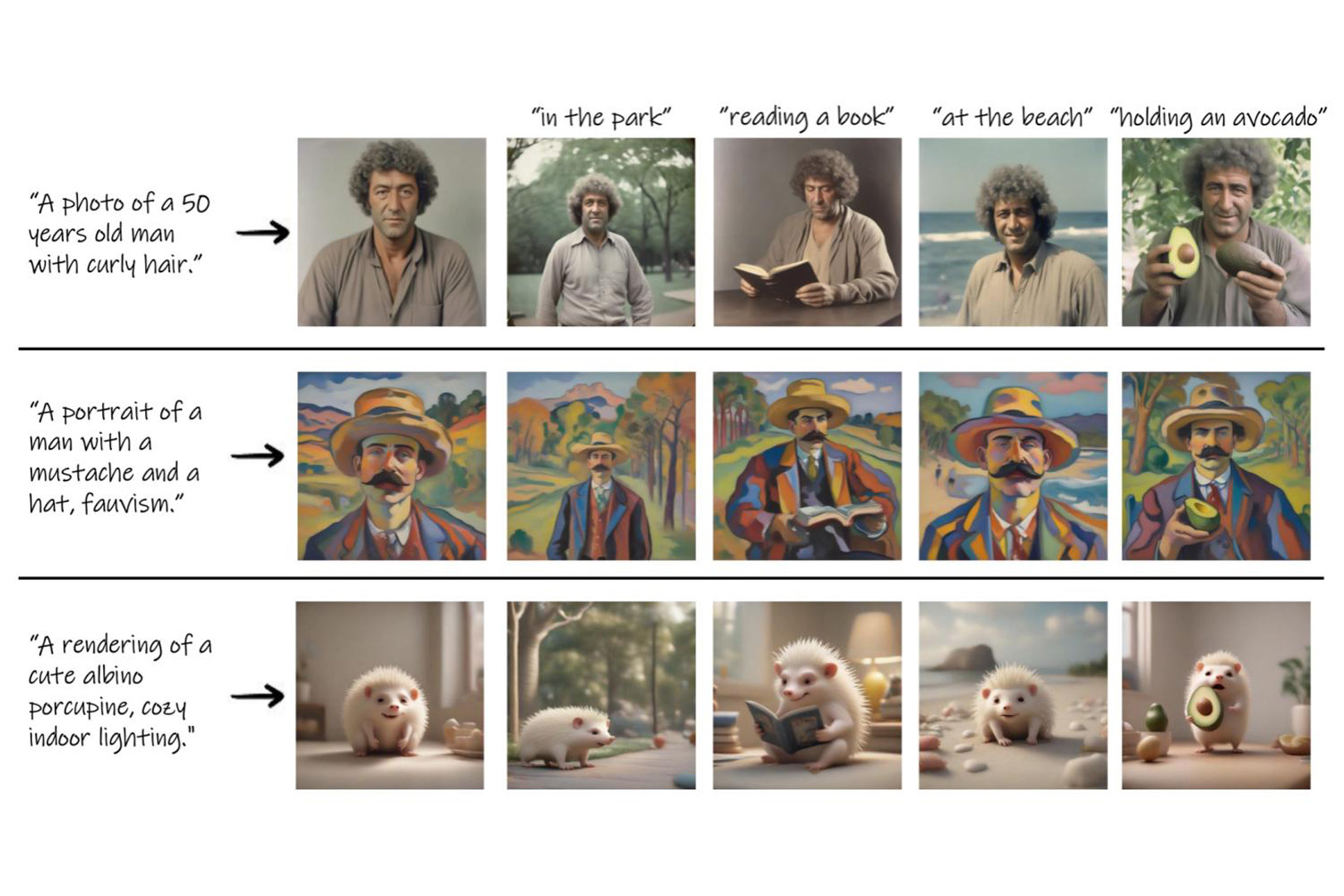

Given a text prompt describing a character, our method distills a representation that enables consistent depiction of the same character in novel contexts.

References:

[1]

Namhyuk Ahn, Junsoo Lee, Chunggi Lee, Kunhee Kim, Daesik Kim, Seung-Hun Nam, and Kibeom Hong. 2023. DreamStyler: Paint by Style Inversion with Text-to-Image Diffusion Models. ArXiv abs/2309.06933 (2023). https://api.semanticscholar.org/CorpusID:261706081

[2]

Yuval Alaluf, Elad Richardson, Gal Metzer, and Daniel Cohen-Or. 2023. A Neural Space-Time Representation for Text-to-Image Personalization. ArXiv abs/2305.15391 (2023). https://api.semanticscholar.org/CorpusID:258866047

[3]

Amazon. 2023. Amazon Mechanical Turk. https://www.mturk.com/.

[4]

Moab Arar, Rinon Gal, Yuval Atzmon, Gal Chechik, Daniel Cohen-Or, Ariel Shamir, and Amit H Bermano. 2023. Domain-agnostic tuning-encoder for fast personalization of text-to-image models. arXiv preprint arXiv:2307.06925 (2023).

[5]

David Arthur and Sergei Vassilvitskii. 2007. k-means++: the advantages of careful seeding. In ACM-SIAM Symposium on Discrete Algorithms. https://api.semanticscholar.org/CorpusID:1782131

[6]

Omri Avrahami, Kfir Aberman, Ohad Fried, Daniel Cohen-Or, and Dani Lischinski. 2023a. Break-A-Scene: Extracting Multiple Concepts from a Single Image. ArXiv abs/2305.16311 (2023). https://api.semanticscholar.org/CorpusID:258888228

[7]

Omri Avrahami, Ohad Fried, and Dani Lischinski. 2023b. Blended Latent Diffusion. ACM Trans. Graph. 42, 4, Article 149 (jul 2023), 11 pages. https://doi.org/10.1145/3592450

[8]

Omri Avrahami, Thomas Hayes, Oran Gafni, Sonal Gupta, Yaniv Taigman, Devi Parikh, Dani Lischinski, Ohad Fried, and Xi Yin. 2023c. SpaText: Spatio-Textual Representation for Controllable Image Generation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). 18370?18380.

[9]

Omri Avrahami, Dani Lischinski, and Ohad Fried. 2022. Blended Diffusion for Text-Driven Editing of Natural Images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). 18208?18218.

[10]

Yogesh Balaji, Seungjun Nah, Xun Huang, Arash Vahdat, Jiaming Song, Qinsheng Zhang, Karsten Kreis, Miika Aittala, Timo Aila, Samuli Laine, Bryan Catanzaro, Tero Karras, and Ming-Yu Liu. 2022. eDiff-I: Text-to-Image Diffusion Models with an Ensemble of Expert Denoisers. ArXiv abs/2211.01324 (2022). https://api.semanticscholar.org/CorpusID:253254800

[11]

Omer Bar-Tal, Dolev Ofri-Amar, Rafail Fridman, Yoni Kasten, and Tali Dekel. 2022. Text2live: Text-driven layered image and video editing. In European conference on computer vision. Springer, 707?723.

[12]

Sagie Benaim, Frederik Warburg, Peter Ebert Christensen, and Serge J. Belongie. 2022. Volumetric Disentanglement for 3D Scene Manipulation. ArXiv abs/2206.02776 (2022). https://api.semanticscholar.org/CorpusID:249394623

[13]

Mingdeng Cao, Xintao Wang, Zhongang Qi, Ying Shan, Xiaohu Qie, and Yinqiang Zheng. 2023. MasaCtrl: Tuning-Free Mutual Self-Attention Control for Consistent Image Synthesis and Editing. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV). 22560?22570.

[14]

Mathilde Caron, Hugo Touvron, Ishan Misra, Herv? Jegou, Julien Mairal, Piotr Bojanowski, and Armand Joulin. 2021. Emerging Properties in Self-Supervised Vision Transformers. In 2021 IEEE/CVF International Conference on Computer Vision (ICCV). 9630?9640.

[15]

Hila Chefer, Yuval Alaluf, Yael Vinker, Lior Wolf, and Daniel Cohen-Or. 2023. Attend-and-Excite: Attention-Based Semantic Guidance for Text-to-Image Diffusion Models. ACM Transactions on Graphics (TOG) 42 (2023), 1 ? 10. https://api.semanticscholar.org/CorpusID:256416326

[16]

Wenhu Chen, Hexiang Hu, Yandong Li, Nataniel Rui, Xuhui Jia, Ming-Wei Chang, and William W. Cohen. 2023a. Subject-driven Text-to-Image Generation via Apprenticeship Learning. ArXiv abs/2304.00186 (2023).

[17]

Xi Chen, Lianghua Huang, Yu Liu, Yujun Shen, Deli Zhao, and Hengshuang Zhao. 2023b. AnyDoor: Zero-shot Object-level Image Customization. ArXiv abs/2307.09481 (2023). https://api.semanticscholar.org/CorpusID:259951373

[18]

Guillaume Couairon, Marlene Careil, Matthieu Cord, St?phane Lathuili?re, and Jakob Verbeek. 2023. Zero-shot spatial layout conditioning for text-to-image diffusion models. ArXiv abs/2306.13754 (2023). https://api.semanticscholar.org/CorpusID:259252153

[19]

AI Foundations. 2023. How to Create Consistent Characters in Midjourney. https://www.youtube.com/watch?v=Z7_ta3RHijQ.

[20]

Rafail Fridman, Amit Abecasis, Yoni Kasten, and Tali Dekel. 2023. SceneScape: Text-Driven Consistent Scene Generation. ArXiv abs/2302.01133 (2023). https://api.semanticscholar.org/CorpusID:256503775

[21]

Rinon Gal, Yuval Alaluf, Yuval Atzmon, Or Patashnik, Amit Haim Bermano, Gal Chechik, and Daniel Cohen-or. 2022. An Image is Worth One Word: Personalizing Text-to-Image Generation using Textual Inversion. In The Eleventh International Conference on Learning Representations.

[22]

Rinon Gal, Moab Arar, Yuval Atzmon, Amit H Bermano, Gal Chechik, and Daniel Cohen-Or. 2023. Encoder-based domain tuning for fast personalization of text-to-image models. ACM Transactions on Graphics (TOG) 42, 4 (2023), 1?13.

[23]

Songwei Ge, Taesung Park, Jun-Yan Zhu, and Jia-Bin Huang. 2023. Expressive Text-to-Image Generation with Rich Text. ArXiv abs/2304.06720 (2023). https://api.semanticscholar.org/CorpusID:258108187

[24]

Michal Geyer, Omer Bar-Tal, Shai Bagon, and Tali Dekel. 2023. Tokenflow: Consistent diffusion features for consistent video editing. arXiv preprint arXiv:2307.10373 (2023).

[25]

Yuan Gong, Youxin Pang, Xiaodong Cun, Menghan Xia, Haoxin Chen, Longyue Wang, Yong Zhang, Xintao Wang, Ying Shan, and Yujiu Yang. 2023. TaleCrafter: Interactive Story Visualization with Multiple Characters. ArXiv abs/2305.18247 (2023). https://api.semanticscholar.org/CorpusID:258960665

[26]

Ori Gordon, Omri Avrahami, and Dani Lischinski. 2023. Blended-NeRF: Zero-Shot Object Generation and Blending in Existing Neural Radiance Fields. ArXiv abs/2306.12760 (2023). https://api.semanticscholar.org/CorpusID:259224726

[27]

Ligong Han, Yinxiao Li, Han Zhang, Peyman Milanfar, Dimitris N. Metaxas, and Feng Yang. 2023. SVDiff: Compact Parameter Space for Diffusion Fine-Tuning. ArXiv abs/2303.11305 (2023).

[28]

Amir Hertz, Kfir Aberman, and Daniel Cohen-Or. 2023. Delta denoising score. In Proceedings of the IEEE/CVF International Conference on Computer Vision. 2328?2337.

[29]

Amir Hertz, Ron Mokady, Jay Tenenbaum, Kfir Aberman, Yael Pritch, and Daniel Cohen-Or. 2022. Prompt-to-prompt image editing with cross attention control. arXiv preprint arXiv:2208.01626 (2022).

[30]

Geoffrey E. Hinton and Sam T. Roweis. 2002. Stochastic Neighbor Embedding. In NIPS. https://api.semanticscholar.org/CorpusID:20240

[31]

Jonathan Ho, Ajay Jain, and Pieter Abbeel. 2020. Denoising Diffusion Probabilistic Models. In Proc. NeurIPS.

[32]

Lukas H?llein, Ang Cao, Andrew Owens, Justin Johnson, and Matthias Nie?ner. 2023. Text2Room: Extracting Textured 3D Meshes from 2D Text-to-Image Models. ArXiv abs/2303.11989 (2023). https://api.semanticscholar.org/CorpusID:257636653

[33]

Eliahu Horwitz and Yedid Hoshen. 2022. Conffusion: Confidence Intervals for Diffusion Models. ArXiv abs/2211.09795 (2022).

[34]

Edward J Hu, Phillip Wallis, Zeyuan Allen-Zhu, Yuanzhi Li, Shean Wang, Lu Wang, Weizhu Chen, 2021. LoRA: Low-Rank Adaptation of Large Language Models. In International Conference on Learning Representations.

[35]

Gabriel Ilharco, Mitchell Wortsman, Ross Wightman, Cade Gordon, Nicholas Carlini, Rohan Taori, Achal Dave, Vaishaal Shankar, Hongseok Namkoong, John Miller, Hannaneh Hajishirzi, Ali Farhadi, and Ludwig Schmidt. 2021. OpenCLIP. https://doi.org/10.5281/zenodo.5143773

[36]

Shira Iluz, Yael Vinker, Amir Hertz, Daniel Berio, Daniel Cohen-Or, and Ariel Shamir. 2023. Word-As-Image for Semantic Typography. ACM Transactions on Graphics (TOG) 42 (2023), 1 ? 11. https://api.semanticscholar.org/CorpusID:257353586

[37]

Hyeonho Jeong, Gihyun Kwon, and Jong-Chul Ye. 2023. Zero-shot Generation of Coherent Storybook from Plain Text Story using Diffusion Models. ArXiv abs/2302.03900 (2023). https://api.semanticscholar.org/CorpusID:256662241

[38]

Xuhui Jia, Yang Zhao, Kelvin C. K. Chan, Yandong Li, Han-Ying Zhang, Boqing Gong, Tingbo Hou, H. Wang, and Yu-Chuan Su. 2023. Taming Encoder for Zero Fine-tuning Image Customization with Text-to-Image Diffusion Models. ArXiv abs/2304.02642 (2023).

[39]

JoshGreat. 2023. 8 ways to generate consistent characters (for comics, storyboards, books etc) : StableDiffusion. https://www.reddit.com/r/StableDiffusion/comments/10yxz3m/8_ways_to_generate_consistent_characters_for/.

[40]

Bahjat Kawar, Shiran Zada, Oran Lang, Omer Tov, Huiwen Chang, Tali Dekel, Inbar Mosseri, and Michal Irani. 2023. Imagic: Text-based real image editing with diffusion models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 6007?6017.

[41]

Nupur Kumari, Bingliang Zhang, Richard Zhang, Eli Shechtman, and Jun-Yan Zhu. 2023. Multi-concept customization of text-to-image diffusion. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 1931?1941.

[42]

Dongxu Li, Junnan Li, and Steven C. H. Hoi. 2023. BLIP-Diffusion: Pre-trained Subject Representation for Controllable Text-to-Image Generation and Editing. ArXiv abs/2305.14720 (2023). https://api.semanticscholar.org/CorpusID:258865473

[43]

Yitong Li, Zhe Gan, Yelong Shen, Jingjing Liu, Yu Cheng, Yuexin Wu, Lawrence Carin, David Carlson, and Jianfeng Gao. 2019. StoryGAN: A Sequential Conditional GAN for Story Visualization. CVPR (2019).

[44]

Shaoteng Liu, Yuechen Zhang, Wenbo Li, Zhe Lin, and Jiaya Jia. 2023a. Video-p2p: Video editing with cross-attention control. arXiv preprint arXiv:2303.04761 (2023).

[45]

Shaoteng Liu, Yuecheng Zhang, Wenbo Li, Zhe Lin, and Jiaya Jia. 2023b. Video-P2P: Video Editing with Cross-attention Control. ArXiv abs/2303.04761 (2023). https://api.semanticscholar.org/CorpusID:257405406

[46]

Adyasha Maharana, Darryl Hannan, and Mohit Bansal. 2022. Storydall-e: Adapting pretrained text-to-image transformers for story continuation. In European Conference on Computer Vision. Springer, 70?87.

[47]

Chenlin Meng, Yutong He, Yang Song, Jiaming Song, Jiajun Wu, Jun-Yan Zhu, and Stefano Ermon. 2021. SDEdit: Guided Image Synthesis and Editing with Stochastic Differential Equations. In International Conference on Learning Representations.

[48]

Gal Metzer, Elad Richardson, Or Patashnik, Raja Giryes, and Daniel Cohen-Or. 2023. Latent-nerf for shape-guided generation of 3d shapes and textures. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 12663?12673.

[49]

Ron Mokady, Amir Hertz, Kfir Aberman, Yael Pritch, and Daniel Cohen-Or. 2023. Null-text inversion for editing real images using guided diffusion models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 6038?6047.

[50]

Eyal Molad, Eliahu Horwitz, Dani Valevski, Alex Rav Acha, Y. Matias, Yael Pritch, Yaniv Leviathan, and Yedid Hoshen. 2023. Dreamix: Video Diffusion Models are General Video Editors. ArXiv abs/2302.01329 (2023).

[51]

Chong Mou, Xintao Wang, Liangbin Xie, Yanze Wu, Jian Zhang, Zhongang Qi, Ying Shan, and Xiaohu Qie. 2023. T2i-adapter: Learning adapters to dig out more controllable ability for text-to-image diffusion models. arXiv preprint arXiv:2302.08453 (2023).

[52]

Alex Nichol, Prafulla Dhariwal, Aditya Ramesh, Pranav Shyam, Pamela Mishkin, Bob McGrew, Ilya Sutskever, and Mark Chen. 2021. GLIDE: Towards Photorealistic Image Generation and Editing with Text-Guided Diffusion Models. In International Conference on Machine Learning. https://api.semanticscholar.org/CorpusID:245335086

[53]

OpenAI. 2022. ChatGPT. https://chat.openai.com/. Accessed: 2023-10-15.

[54]

Maxime Oquab, Timoth?e Darcet, Th?o Moutakanni, Huy Q. Vo, Marc Szafraniec, Vasil Khalidov, Pierre Fernandez, Daniel Haziza, Francisco Massa, Alaaeldin El-Nouby, Mahmoud Assran, Nicolas Ballas, Wojciech Galuba, Russ Howes, Po-Yao (Bernie) Huang, Shang-Wen Li, Ishan Misra, Michael G. Rabbat, Vasu Sharma, Gabriel Synnaeve, Huijiao Xu, Herv? J?gou, Julien Mairal, Patrick Labatut, Armand Joulin, and Piotr Bojanowski. 2023. DINOv2: Learning Robust Visual Features without Supervision. ArXiv abs/2304.07193 (2023). https://api.semanticscholar.org/CorpusID:258170077

[55]

Or Patashnik, Daniel Garibi, Idan Azuri, Hadar Averbuch-Elor, and Daniel Cohen-Or. 2023. Localizing Object-level Shape Variations with Text-to-Image Diffusion Models. ArXiv abs/2303.11306 (2023).

[56]

Ryan Po, Wang Yifan, Vladislav Golyanik, Kfir Aberman, Jonathan T. Barron, Amit H. Bermano, Eric Ryan Chan, Tali Dekel, Aleksander Holynski, Angjoo Kanazawa, C. Karen Liu, Lingjie Liu, Ben Mildenhall, Matthias Nie?ner, Bjorn Ommer, Christian Theobalt, Peter Wonka, and Gordon Wetzstein. 2023. State of the Art on Diffusion Models for Visual Computing. ArXiv abs/2310.07204 (2023). https://api.semanticscholar.org/CorpusID:263835355

[57]

Dustin Podell, Zion English, Kyle Lacey, A. Blattmann, Tim Dockhorn, Jonas Muller, Joe Penna, and Robin Rombach. 2023. SDXL: Improving Latent Diffusion Models for High-Resolution Image Synthesis. ArXiv abs/2307.01952 (2023). https://api.semanticscholar.org/CorpusID:259341735

[58]

Ben Poole, Ajay Jain, Jonathan T Barron, and Ben Mildenhall. 2022. Dreamfusion: Text-to-3d using 2d diffusion. arXiv preprint arXiv:2209.14988 (2022).

[59]

Chenyang Qi, Xiaodong Cun, Yong Zhang, Chenyang Lei, Xintao Wang, Ying Shan, and Qifeng Chen. 2023. Fatezero: Fusing attentions for zero-shot text-based video editing. arXiv preprint arXiv:2303.09535 (2023).

[60]

Sigal Raab, Inbal Leibovitch, Guy Tevet, Moab Arar, Amit H. Bermano, and Daniel Cohen-Or. 2023. Single Motion Diffusion. ArXiv abs/2302.05905 (2023). https://api.semanticscholar.org/CorpusID:256827051

[61]

Alec Radford, Jong Wook Kim, Chris Hallacy, Aditya Ramesh, Gabriel Goh, Sandhini Agarwal, Girish Sastry, Amanda Askell, Pamela Mishkin, Jack Clark, Gretchen Krueger, and Ilya Sutskever. 2021. Learning Transferable Visual Models From Natural Language Supervision. In International Conference on Machine Learning.

[62]

Tanzila Rahman, Hsin-Ying Lee, Jian Ren, S. Tulyakov, Shweta Mahajan, and Leonid Sigal. 2022. Make-A-Story: Visual Memory Conditioned Consistent Story Generation. 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) (2022), 2493?2502. https://api.semanticscholar.org/CorpusID:254017562

[63]

Aditya Ramesh, Prafulla Dhariwal, Alex Nichol, Casey Chu, and Mark Chen. 2022. Hierarchical text-conditional image generation with CLIP latents. arXiv preprint arXiv:2204.06125 (2022).

[64]

Elad Richardson, Kfir Goldberg, Yuval Alaluf, and Daniel Cohen-Or. 2023a. ConceptLab: Creative Generation using Diffusion Prior Constraints. arXiv preprint arXiv:2308.02669 (2023).

[65]

Elad Richardson, Gal Metzer, Yuval Alaluf, Raja Giryes, and Daniel Cohen-Or. 2023b. TEXTure: Text-Guided Texturing of 3D Shapes. ACM SIGGRAPH 2023 Conference Proceedings (2023). https://api.semanticscholar.org/CorpusID:256597953

[66]

Robin Rombach, A. Blattmann, Dominik Lorenz, Patrick Esser, and Bj?rn Ommer. 2021. High-Resolution Image Synthesis with Latent Diffusion Models. 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) (2021), 10674?10685.

[67]

Nataniel Ruiz, Yuanzhen Li, Varun Jampani, Yael Pritch, Michael Rubinstein, and Kfir Aberman. 2023. DreamBooth: Fine tuning text-to-image diffusion models for subject-driven generation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 22500?22510.

[68]

Simo Ryu. 2022. Low-rank Adaptation for Fast Text-to-Image Diffusion Fine-tuning. https://github.com/cloneofsimo/lora.

[69]

Chitwan Saharia, William Chan, Saurabh Saxena, Lala Li, Jay Whang, Emily L Denton, Kamyar Ghasemipour, Raphael Gontijo Lopes, Burcu Karagol Ayan, Tim Salimans, 2022. Photorealistic text-to-image diffusion models with deep language understanding. Advances in Neural Information Processing Systems 35 (2022), 36479?36494.

[70]

Etai Sella, Gal Fiebelman, Peter Hedman, and Hadar Averbuch-Elor. 2023. Vox-E: Text-guided Voxel Editing of 3D Objects. ArXiv abs/2303.12048 (2023). https://api.semanticscholar.org/CorpusID:257636627

[71]

Shelly Sheynin, Oron Ashual, Adam Polyak, Uriel Singer, Oran Gafni, Eliya Nachmani, and Yaniv Taigman. 2022. kNN-Diffusion: Image Generation via Large-Scale Retrieval. In The Eleventh International Conference on Learning Representations.

[72]

Jing Shi, Wei Xiong, Zhe L. Lin, and Hyun Joon Jung. 2023. InstantBooth: Personalized Text-to-Image Generation without Test-Time Finetuning. ArXiv abs/2304.03411 (2023).

[73]

Jascha Sohl-Dickstein, Eric Weiss, Niru Maheswaranathan, and Surya Ganguli. 2015. Deep unsupervised learning using nonequilibrium thermodynamics. In International Conference on Machine Learning. PMLR, 2256?2265.

[74]

Kihyuk Sohn, Nataniel Ruiz, Kimin Lee, Daniel Castro Chin, Irina Blok, Huiwen Chang, Jarred Barber, Lu Jiang, Glenn Entis, Yuanzhen Li, Yuan Hao, Irfan Essa, Michael Rubinstein, and Dilip Krishnan. 2023. StyleDrop: Text-to-Image Generation in Any Style. ArXiv abs/2306.00983 (2023). https://api.semanticscholar.org/CorpusID:258999204

[75]

Jiaming Song, Chenlin Meng, and Stefano Ermon. 2020. Denoising Diffusion Implicit Models. In International Conference on Learning Representations.

[76]

Yang Song and Stefano Ermon. 2019. Generative modeling by estimating gradients of the data distribution. Advances in Neural Information Processing Systems 32 (2019).

[77]

stassius. 2023. How to create consistent character faces without training (info in the comments) : StableDiffusion. https://www.reddit.com/r/StableDiffusion/comments/12djxvz/how_to_create_consistent_character_faces_without/.

[78]

G?bor Sz?cs and Modafar Al-Shouha. 2022. Modular StoryGAN with background and theme awareness for story visualization. In International Conference on Pattern Recognition and Artificial Intelligence. Springer, 275?286.

[79]

Guy Tevet, Sigal Raab, Brian Gordon, Yonatan Shafir, Daniel Cohen-Or, and Amit H. Bermano. 2022. Human Motion Diffusion Model. ArXiv abs/2209.14916 (2022). https://api.semanticscholar.org/CorpusID:252595883

[80]

Yoad Tewel, Rinon Gal, Gal Chechik, and Yuval Atzmon. 2023. Key-Locked Rank One Editing for Text-to-Image Personalization. ACM SIGGRAPH 2023 Conference Proceedings (2023). https://api.semanticscholar.org/CorpusID:258436985

[81]

Narek Tumanyan, Michal Geyer, Shai Bagon, and Tali Dekel. 2023. Plug-and-play diffusion features for text-driven image-to-image translation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 1921?1930.

[82]

Dani Valevski, Danny Lumen, Yossi Matias, and Yaniv Leviathan. 2023. Face0: Instantaneously Conditioning a Text-to-Image Model on a Face. SIGGRAPH Asia 2023 Conference Papers (2023). https://api.semanticscholar.org/CorpusID:259138505

[83]

Yael Vinker, Andrey Voynov, Daniel Cohen-Or, and Ariel Shamir. 2023. Concept Decomposition for Visual Exploration and Inspiration. ArXiv abs/2305.18203 (2023). https://api.semanticscholar.org/CorpusID:258959472

[84]

Andrey Voynov, Kfir Aberman, and Daniel Cohen-Or. 2022. Sketch-Guided Text-to-Image Diffusion Models. arXiv preprint arXiv:2211.13752 (2022).

[85]

Andrey Voynov, Q. Chu, Daniel Cohen-Or, and Kfir Aberman. 2023. P+: Extended Textual Conditioning in Text-to-Image Generation. ArXiv abs/2303.09522 (2023).

[86]

Yuxiang Wei. 2023. Official Implementation of ELITE. https://github.com/csyxwei/ELITE. Accessed: 2023-05-01.

[87]

Yuxiang Wei, Yabo Zhang, Zhilong Ji, Jinfeng Bai, Lei Zhang, and Wangmeng Zuo. 2023. ELITE: Encoding Visual Concepts into Textual Embeddings for Customized Text-to-Image Generation. ArXiv abs/2302.13848 (2023).

[88]

Shuai Yang, Yifan Zhou, Ziwei Liu, and Chen Change Loy. 2023. Rerender A Video: Zero-Shot Text-Guided Video-to-Video Translation. ArXiv abs/2306.07954 (2023). https://api.semanticscholar.org/CorpusID:259144797

[89]

Hu Ye, Jun Zhang, Siyi Liu, Xiao Han, and Wei Yang. 2023. IP-Adapter: Text Compatible Image Prompt Adapter for Text-to-Image Diffusion Models. ArXiv abs/2308.06721 (2023). https://api.semanticscholar.org/CorpusID:260886966

[90]

Jiahui Yu, Yuanzhong Xu, Jing Yu Koh, Thang Luong, Gunjan Baid, Zirui Wang, Vijay Vasudevan, Alexander Ku, Yinfei Yang, Burcu Karagol Ayan, 2022. Scaling Autoregressive Models for Content-Rich Text-to-Image Generation. arXiv preprint arXiv:2206.10789 (2022).

[91]

Chenshuang Zhang, Chaoning Zhang, Mengchun Zhang, and In-So Kweon. 2023b. Text-to-image Diffusion Models in Generative AI: A Survey. ArXiv abs/2303.07909 (2023). https://api.semanticscholar.org/CorpusID:257505012

[92]

Lvmin Zhang, Anyi Rao, and Maneesh Agrawala. 2023a. Adding Conditional Control to Text-to-Image Diffusion Models. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV). 3836?3847.

[93]

Jingyu Zhuang, Chen Wang, Lingjie Liu, Liang Lin, and Guanbin Li. 2023. DreamEditor: Text-Driven 3D Scene Editing with Neural Fields. ArXiv abs/2306.13455 (2023). https://api.semanticscholar.org/CorpusID:259243782