“Taming Diffusion Probabilistic Models for Character Control”

Conference:

Type(s):

Title:

- Taming Diffusion Probabilistic Models for Character Control

Presenter(s)/Author(s):

Abstract:

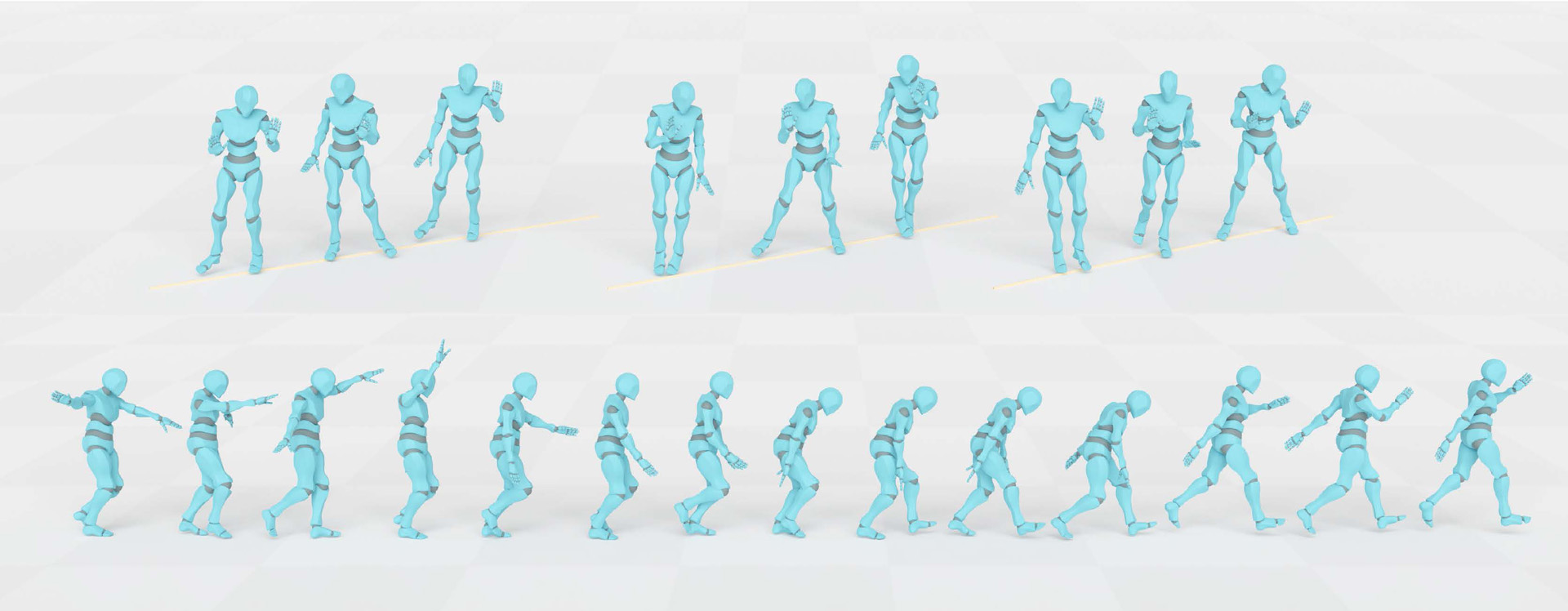

We present a novel character control framework that enables real-time generation of high-quality, diverse character animations in response to user interactive control. Our framework supports the animation of characters in various styles using a single, unified model.

References:

[1]

Simon Alexanderson, Rajmund Nagy, Jonas Beskow, and Gustav Eje Henter. 2023. Listen, denoise, action! audio-driven motion synthesis with diffusion models. ACM Transactions on Graphics (TOG) 42, 4 (2023), 1?20.

[2]

Junjie Bai, Fang Lu, Ke Zhang, 2019. ONNX: Open Neural Network Exchange. https://github.com/onnx/onnx.

[3]

Kevin Bergamin, Simon Clavet, Daniel Holden, and James Richard Forbes. 2019. DReCon: data-driven responsive control of physics-based characters. ACM Transactions On Graphics (TOG) 38, 6 (2019), 1?11.

[4]

Michael B?ttner and Simon Clavet. 2015. Motion Matching – The Road to Next Gen Animation.https://www.youtube.com/watch?v=z_wpgHFSWss&t=658s

[5]

Xin Chen, Biao Jiang, Wen Liu, Zilong Huang, Bin Fu, Tao Chen, and Gang Yu. 2023. Executing your Commands via Motion Diffusion in Latent Space. In Conference on Computer Vision and Pattern Recognition (CVPR). 18000?18010.

[6]

Kyungmin Cho, Chaelin Kim, Jungjin Park, Joonkyu Park, and Junyong Noh. 2021. Motion recommendation for online character control. ACM Transactions on Graphics (TOG) 40, 6 (2021), 1?16.

[7]

Rishabh Dabral, Muhammad Hamza Mughal, Vladislav Golyanik, and Christian Theobalt. 2023. Mofusion: A framework for denoising-diffusion-based motion synthesis. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 9760?9770.

[8]

Zhiyang Dou, Xuelin Chen, Qingnan Fan, Taku Komura, and Wenping Wang. 2023. C? ase: Learning conditional adversarial skill embeddings for physics-based characters. In SIGGRAPH Asia. 1?11.

[9]

Levi Fussell, Kevin Bergamin, and Daniel Holden. 2021. Supertrack: Motion tracking for physically simulated characters using supervised learning. ACM Transactions on Graphics (TOG) 40, 6 (2021), 1?13.

[10]

Gustav Eje Henter, Simon Alexanderson, and Jonas Beskow. 2020. Moglow: Probabilistic and controllable motion synthesis using normalising flows. ACM Transactions on Graphics (TOG) 39, 6 (2020), 1?14.

[11]

Jonathan Ho. 2022. Classifier-Free Diffusion Guidance. ArXiv abs/2207.12598 (2022).

[12]

Jonathan Ho, Ajay Jain, and Pieter Abbeel. 2020. Denoising Diffusion Probabilistic Models. In Advances in Neural Information Processing Systems (NeurIPS), H. Larochelle, M. Ranzato, R. Hadsell, M.F. Balcan, and H. Lin (Eds.). Vol. 33. Curran Associates, Inc., 6840?6851. https://proceedings.neurips.cc/paper_files/paper/2020/file/4c5bcfec8584af0d967f1ab10179ca4b-Paper.pdf

[13]

Daniel Holden, Oussama Kanoun, Maksym Perepichka, and Tiberiu Popa. 2020. Learned motion matching. 39, 4 (2020), 53?1.

[14]

Daniel Holden, Taku Komura, and Jun Saito. 2017. Phase-functioned neural networks for character control. ACM Transactions on Graphics (SIGGRAPH) 36, 4 (2017), 1?13.

[15]

Biao Jiang, Xin Chen, Wen Liu, Jingyi Yu, Gang Yu, and Tao Chen. 2023. MotionGPT: Human Motion as a Foreign Language. arXiv preprint arXiv:2306.14795 (2023).

[16]

Korrawe Karunratanakul, Konpat Preechakul, Supasorn Suwajanakorn, and Siyu Tang. 2023. Guided motion diffusion for controllable human motion synthesis. In Proceedings of the IEEE/CVF International Conference on Computer Vision. 2151?2162.

[17]

Jihoon Kim, Jiseob Kim, and Sungjoon Choi. 2023. Flame: Free-form language-based motion synthesis & editing. In Proceedings of the AAAI Conference on Artificial Intelligence, Vol. 37. 8255?8263.

[18]

Yongjoon Lee, Kevin Wampler, Gilbert Bernstein, Jovan Popovi?, and Zoran Popovi?. 2010. Motion fields for interactive character locomotion. (2010), 1?8.

[19]

Hung Yu Ling, Fabio Zinno, George Cheng, and Michiel Van De Panne. 2020. Character controllers using motion vaes. ACM Transactions on Graphics (SIGGRAPH) 39, 4 (2020), 40?1.

[20]

Simian Luo, Yiqin Tan, Longbo Huang, Jian Li, and Hang Zhao. 2023. Latent consistency models: Synthesizing high-resolution images with few-step inference. arXiv preprint arXiv:2310.04378 (2023).

[21]

Ian Mason, Sebastian Starke, and Taku Komura. 2022. Real-time style modelling of human locomotion via feature-wise transformations and local motion phases. Proceedings of the ACM on Computer Graphics and Interactive Techniques 5, 1 (2022), 1?18.

[22]

Chenlin Meng, Robin Rombach, Ruiqi Gao, Diederik Kingma, Stefano Ermon, Jonathan Ho, and Tim Salimans. 2023. On distillation of guided diffusion models. In Conference on Computer Vision and Pattern Recognition (CVPR). 14297?14306.

[23]

Alexander Quinn Nichol, Prafulla Dhariwal, Aditya Ramesh, Pranav Shyam, Pamela Mishkin, Bob Mcgrew, Ilya Sutskever, and Mark Chen. 2022. GLIDE: Towards Photorealistic Image Generation and Editing with Text-Guided Diffusion Models. In International Conference on Machine Learning (ICML). PMLR, 16784?16804.

[24]

Xue Bin Peng, Pieter Abbeel, Sergey Levine, and Michiel Van de Panne. 2018. Deepmimic: Example-guided deep reinforcement learning of physics-based character skills. ACM Transactions On Graphics (TOG) 37, 4 (2018), 1?14.

[25]

Xue Bin Peng, Yunrong Guo, Lina Halper, Sergey Levine, and Sanja Fidler. 2022. Ase: Large-scale reusable adversarial skill embeddings for physically simulated characters. ACM Transactions On Graphics (TOG) 41, 4 (2022), 1?17.

[26]

Xue Bin Peng, Ze Ma, Pieter Abbeel, Sergey Levine, and Angjoo Kanazawa. 2021. Amp: Adversarial motion priors for stylized physics-based character control. ACM Transactions on Graphics (ToG) 40, 4 (2021), 1?20.

[27]

Aditya Ramesh, Prafulla Dhariwal, Alex Nichol, Casey Chu, and Mark Chen. 2022. Hierarchical Text-Conditional Image Generation with CLIP Latents. arxiv:2204.06125 [cs.CV]

[28]

Aditya Ramesh, Mikhail Pavlov, Gabriel Goh, Scott Gray, Chelsea Voss, Alec Radford, Mark Chen, and Ilya Sutskever. 2021. Zero-shot text-to-image generation. In International Conference on Machine Learning (ICML). PMLR, 8821?8831.

[29]

Robin Rombach, Andreas Blattmann, Dominik Lorenz, Patrick Esser, and Bj?rn Ommer. 2022. High-resolution image synthesis with latent diffusion models. In Conference on Computer Vision and Pattern Recognition (CVPR). 10684?10695.

[30]

Chitwan Saharia, William Chan, Saurabh Saxena, Lala Li, Jay Whang, Emily L Denton, Kamyar Ghasemipour, Raphael Gontijo Lopes, Burcu Karagol Ayan, Tim Salimans, 2022. Photorealistic text-to-image diffusion models with deep language understanding. Advances in Neural Information Processing Systems (NeurIPS) 35 (2022), 36479?36494.

[31]

Yonatan Shafir, Guy Tevet, Roy Kapon, and Amit H Bermano. 2023. Human motion diffusion as a generative prior. arXiv preprint arXiv:2303.01418 (2023).

[32]

Yi Shi, Jingbo Wang, Xuekun Jiang, and Bo Dai. 2023. Controllable Motion Diffusion Model. arXiv preprint arXiv:2306.00416 (2023).

[33]

Jiaming Song, Chenlin Meng, and Stefano Ermon. 2020. Denoising diffusion implicit models. arXiv preprint arXiv:2010.02502 (2020).

[34]

Yang Song, Prafulla Dhariwal, Mark Chen, and Ilya Sutskever. 2023. Consistency models. arXiv preprint arXiv:2303.01469 (2023).

[35]

Sebastian Starke. 2024. AI4Animation. https://github.com/sebastianstarke/AI4Animation.

[36]

Sebastian Starke, Ian Mason, and Taku Komura. 2022. Deepphase: Periodic autoencoders for learning motion phase manifolds. ACM Transactions on Graphics (SIGGRAPH) 41, 4 (2022), 1?13.

[37]

Sebastian Starke, Yiwei Zhao, Taku Komura, and Kazi Zaman. 2020. Local motion phases for learning multi-contact character movements. ACM Transactions on Graphics (SIGGRAPH) 39, 4 (2020), 54?1.

[38]

Chen Tessler, Yoni Kasten, Yunrong Guo, Shie Mannor, Gal Chechik, and Xue Bin Peng. 2023. Calm: Conditional adversarial latent models for directable virtual characters. In ACM SIGGRAPH 2023 Conference Proceedings. 1?9.

[39]

Guy Tevet, Sigal Raab, Brian Gordon, Yonatan Shafir, Daniel Cohen-Or, and Amit H Bermano. 2023. Human motion diffusion model. International Conference on Learning Representations (ICLR) (2023).

[40]

Yin Wang, Zhiying Leng, Frederick W. B. Li, Shun-Cheng Wu, and Xiaohui Liang. 2023. Fg-T2M: Fine-Grained Text-Driven Human Motion Generation via Diffusion Model. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV). 22035?22044.

[41]

Jungdam Won, Deepak Gopinath, and Jessica Hodgins. 2020. A scalable approach to control diverse behaviors for physically simulated characters. ACM Transactions on Graphics (TOG) 39, 4 (2020), 33?1.

[42]

Jungdam Won, Deepak Gopinath, and Jessica Hodgins. 2022. Physics-based character controllers using conditional vaes. ACM Transactions on Graphics (TOG) 41, 4 (2022), 1?12.

[43]

Yiming Xie, Varun Jampani, Lei Zhong, Deqing Sun, and Huaizu Jiang. 2023. Omnicontrol: Control any joint at any time for human motion generation. arXiv preprint arXiv:2310.08580 (2023).

[44]

Heyuan Yao, Zhenhua Song, Baoquan Chen, and Libin Liu. 2022. Controlvae: Model-based learning of generative controllers for physics-based characters. ACM Transactions on Graphics (TOG) 41, 6 (2022), 1?16.

[45]

Ye Yuan, Jiaming Song, Umar Iqbal, Arash Vahdat, and Jan Kautz. 2023. Physdiff: Physics-guided human motion diffusion model. In Proceedings of the IEEE/CVF International Conference on Computer Vision. 16010?16021.

[46]

He Zhang, Sebastian Starke, Taku Komura, and Jun Saito. 2018. Mode-adaptive neural networks for quadruped motion control. ACM Transactions on Graphics (SIGGRAPH) 37, 4 (2018), 1?11.

[47]

Mingyuan Zhang, Zhongang Cai, Liang Pan, Fangzhou Hong, Xinying Guo, Lei Yang, and Ziwei Liu. 2022. Motiondiffuse: Text-driven human motion generation with diffusion model. arXiv preprint arXiv:2208.15001 (2022).

[48]

Yi Zhou, Connelly Barnes, Jingwan Lu, Jimei Yang, and Hao Li. 2019. On the continuity of rotation representations in neural networks. In Conference on Computer Vision and Pattern Recognition (CVPR). 5745?5753.