“SketchPatch: sketch stylization via seamless patch-level synthesis” by Fish, Perry, Bermano and Cohen-Or

Title:

- SketchPatch: sketch stylization via seamless patch-level synthesis

Session/Category Title:

- Image Synthesis with Generative Models

Presenter(s)/Author(s):

Abstract:

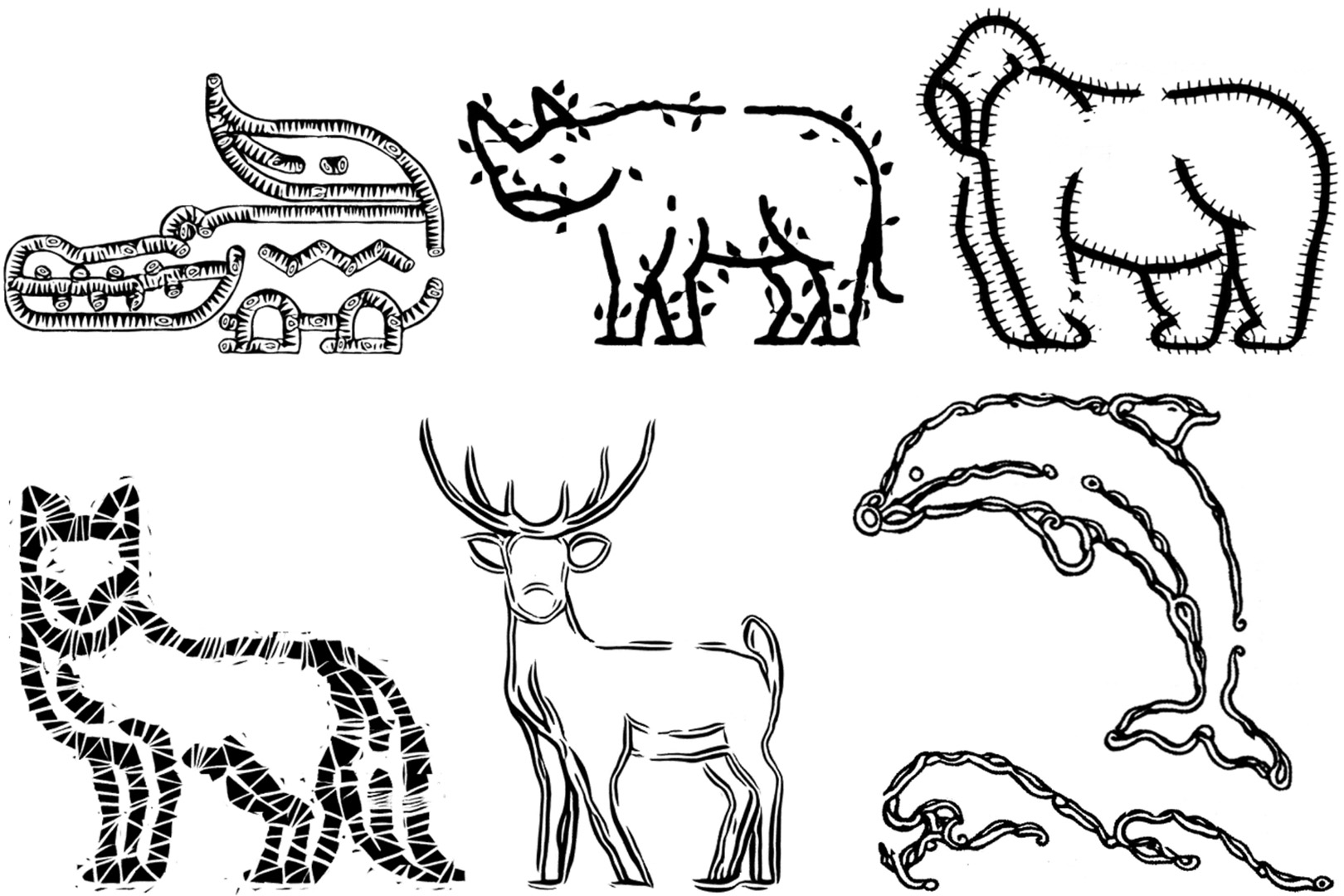

The paradigm of image-to-image translation is leveraged for the benefit of sketch stylization via transfer of geometric textural details. Lacking the necessary volumes of data for standard training of translation systems, we advocate for operation at the patch level, where a handful of stylized sketches provide ample mining potential for patches featuring basic geometric primitives. Operating at the patch level necessitates special consideration of full sketch translation, as individual translation of patches with no regard to neighbors is likely to produce visible seams and artifacts at patch borders. Aligned pairs of styled and plain primitives are combined to form input hybrids containing styled elements around the border and plain elements within, and given as input to a seamless translation (ST) generator, whose output patches are expected to reconstruct the fully styled patch. An adversarial addition promotes generalization and robustness to diverse geometries at inference time, forming a simple and effective system for arbitrary sketch stylization, as demonstrated upon a variety of styles and sketches.
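The hybrid input described in the abstract, with styled elements around the border and plain elements within, can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the function name `make_hybrid`, the fixed `border` width, and the rectangular frame are assumptions made for illustration only, and the paper's actual masks may differ.

```python
import numpy as np


def make_hybrid(styled: np.ndarray, plain: np.ndarray, border: int) -> np.ndarray:
    """Build a hybrid patch: styled pixels in a frame of width `border`,
    plain pixels in the interior. Hypothetical helper, not from the paper."""
    if styled.shape != plain.shape:
        raise ValueError("styled and plain patches must be aligned and equally sized")
    h, w = styled.shape[:2]
    if border < 0 or 2 * border >= min(h, w):
        raise ValueError("border must leave a non-empty interior")
    # Start from the styled patch so the frame keeps the styled content.
    hybrid = styled.copy()
    # Overwrite the interior with the plain patch (assumed rectangular frame).
    hybrid[border:h - border, border:w - border] = plain[border:h - border, border:w - border]
    return hybrid


# Toy aligned pair: ones stand in for styled pixels, zeros for plain pixels.
styled = np.ones((8, 8), dtype=np.float32)
plain = np.zeros((8, 8), dtype=np.float32)
hybrid = make_hybrid(styled, plain, border=2)
```

In a training setup along the lines the abstract describes, such a hybrid would be the generator input and the fully styled patch would be the reconstruction target. At inference time, the border could presumably come from already translated neighboring patches, though the abstract does not spell this out.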

References:

1. Samaneh Azadi, Matthew Fisher, Vladimir Kim, Zhaowen Wang, Eli Shechtman, and Trevor Darrell. 2018. Multi-content gan for few-shot font style transfer. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Vol. 11. 13.

2. Connelly Barnes, Eli Shechtman, Dan B Goldman, and Adam Finkelstein. 2010. The generalized patchmatch correspondence algorithm. In European Conference on Computer Vision. Springer, 29–43.

3. Connelly Barnes and Fang-Lue Zhang. 2017. A survey of the state-of-the-art in patch-based synthesis. Computational Visual Media 3, 1 (2017), 3–20.

4. Tian Qi Chen and Mark Schmidt. 2016. Fast patch-based style transfer of arbitrary style. arXiv preprint arXiv:1612.04337 (2016).

5. Leon A Gatys, Alexander S Ecker, and Matthias Bethge. 2016. Image style transfer using convolutional neural networks. In Proceedings of the IEEE conference on computer vision and pattern recognition. 2414–2423.

6. Aaron Hertzmann, Charles E Jacobs, Nuria Oliver, Brian Curless, and David H Salesin. 2001. Image analogies. In Proceedings of the 28th annual conference on Computer graphics and interactive techniques. 327–340.

7. Xun Huang and Serge Belongie. 2017. Arbitrary style transfer in real-time with adaptive instance normalization. In Proceedings of the IEEE International Conference on Computer Vision. 1501–1510.

8. Xun Huang, Ming-Yu Liu, Serge Belongie, and Jan Kautz. 2018. Multimodal unsupervised image-to-image translation. In Proceedings of the European Conference on Computer Vision (ECCV). 172–189.

9. Phillip Isola, Jun-Yan Zhu, Tinghui Zhou, and Alexei A Efros. 2017. Image-to-image translation with conditional adversarial networks. In Proceedings of the IEEE conference on computer vision and pattern recognition. 1125–1134.

10. Justin Johnson, Alexandre Alahi, and Li Fei-Fei. 2016. Perceptual losses for real-time style transfer and super-resolution. In European conference on computer vision. Springer, 694–711.

11. Rubaiat Habib Kazi, Takeo Igarashi, Shengdong Zhao, and Richard Davis. 2012. Vignette: interactive texture design and manipulation with freeform gestures for pen-and-ink illustration. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems. ACM, 1727–1736.

12. Taeksoo Kim, Moonsu Cha, Hyunsoo Kim, Jung Kwon Lee, and Jiwon Kim. 2017. Learning to discover cross-domain relations with generative adversarial networks. In Proceedings of the 34th International Conference on Machine Learning-Volume 70. JMLR.org, 1857–1865.

13. Katrin Lang and Marc Alexa. 2015. The Markov pen: online synthesis of free-hand drawing styles. In Proceedings of the workshop on Non-Photorealistic Animation and Rendering. Eurographics Association, 203–215.

14. Yijun Li, Chen Fang, Aaron Hertzmann, Eli Shechtman, and Ming-Hsuan Yang. 2019. Im2pencil: Controllable pencil illustration from photographs. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 1525–1534.

15. Yijun Li, Chen Fang, Jimei Yang, Zhaowen Wang, Xin Lu, and Ming-Hsuan Yang. 2017. Universal style transfer via feature transforms. In Advances in neural information processing systems. 386–396.

16. Jing Liao, Yuan Yao, Lu Yuan, Gang Hua, and Sing Bing Kang. 2017. Visual attribute transfer through deep image analogy. ACM Transactions on Graphics (TOG) 36, 4 (2017), 120.

17. Jingwan Lu, Connelly Barnes, Stephen DiVerdi, and Adam Finkelstein. 2013. RealBrush: painting with examples of physical media. ACM Transactions on Graphics (TOG) 32, 4 (2013), 117.

18. Jingwan Lu, Connelly Barnes, Connie Wan, Paul Asente, Radomir Mech, and Adam Finkelstein. 2014. DecoBrush: drawing structured decorative patterns by example. ACM Transactions on Graphics (TOG) 33, 4 (2014), 90.

19. Michal Lukáč, Jakub Fišer, Paul Asente, Jingwan Lu, Eli Shechtman, and Daniel Sýkora. 2015. Brushables: Example-based Edge-aware Directional Texture Painting. In Computer Graphics Forum, Vol. 34. Wiley Online Library, 257–267.

20. Xudong Mao, Qing Li, Haoran Xie, Raymond YK Lau, Zhen Wang, and Stephen Paul Smolley. 2017. Least squares generative adversarial networks. In Proceedings of the IEEE International Conference on Computer Vision. 2794–2802.

21. H. Q. Phan, J. Lu, P. Asente, A. B. Chan, and H. Fu. 2016. Patternista: Learning Element Style Compatibility and Spatial Composition for Ring-Based Layout Decoration. In Proceedings of the Joint Symposium on Computational Aesthetics and Sketch Based Interfaces and Modeling and Non-Photorealistic Animation and Rendering (Expressive ’16). Eurographics Association, Goslar, DEU, 79–88.

22. Christian Santoni and Fabio Pellacini. 2016. gTangle: A grammar for the procedural generation of tangle patterns. ACM Transactions on Graphics (TOG) 35, 6 (2016), 1–11.

23. Karen Simonyan and Andrew Zisserman. 2014. Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556 (2014).

24. Ondřej Texler, Jakub Fišer, Mike Lukáč, Jingwan Lu, Eli Shechtman, and Daniel Sýkora. 2019. Enhancing neural style transfer using patch-based synthesis. In Proceedings of the 8th ACM/Eurographics Expressive Symposium on Computational Aesthetics and Sketch Based Interfaces and Modeling and Non-Photorealistic Animation and Rendering. Eurographics Association, 43–50.

25. Rundong Wu, Zhili Chen, Zhaowen Wang, Jimei Yang, and Steve Marschner. 2018. Brush stroke synthesis with a generative adversarial network driven by physically based simulation. In Proceedings of the Joint Symposium on Computational Aesthetics and Sketch-Based Interfaces and Modeling and Non-Photorealistic Animation and Rendering. ACM, 12.

26. Shuai Yang, Jiaying Liu, Wenjing Wang, and Zongming Guo. 2019. TET-GAN: Text Effects Transfer via Stylization and Destylization. In AAAI Conference on Artificial Intelligence.

27. Zili Yi, Hao Zhang, Ping Tan, and Minglun Gong. 2017. Dualgan: Unsupervised dual learning for image-to-image translation. In Proceedings of the IEEE international conference on computer vision. 2849–2857.

28. Gao Yue, Guo Yuan, Lian Zhouhui, Tang Yingmin, and Xiao Jianguo. 2019. Artistic Glyph Image Synthesis via One-Stage Few-Shot Learning. ACM Trans. Graph. 38, 6, Article 185 (2019), 12 pages.

29. Shizhe Zhou, Anass Lasram, and Sylvain Lefebvre. 2013. By-example synthesis of curvilinear structured patterns. In Computer Graphics Forum, Vol. 32. Wiley Online Library, 355–360.

30. Jun-Yan Zhu, Taesung Park, Phillip Isola, and Alexei A Efros. 2017. Unpaired image-to-image translation using cycle-consistent adversarial networks. In Proceedings of the IEEE international conference on computer vision. 2223–2232.