“SimpleNeRF: Regularizing Sparse Input Neural Radiance Fields with Simpler Solutions” by Somraj, Karanayil and Soundararajan

Title:

- SimpleNeRF: Regularizing Sparse Input Neural Radiance Fields with Simpler Solutions

Session/Category Title:

- How To Deal With NERF?

Abstract:

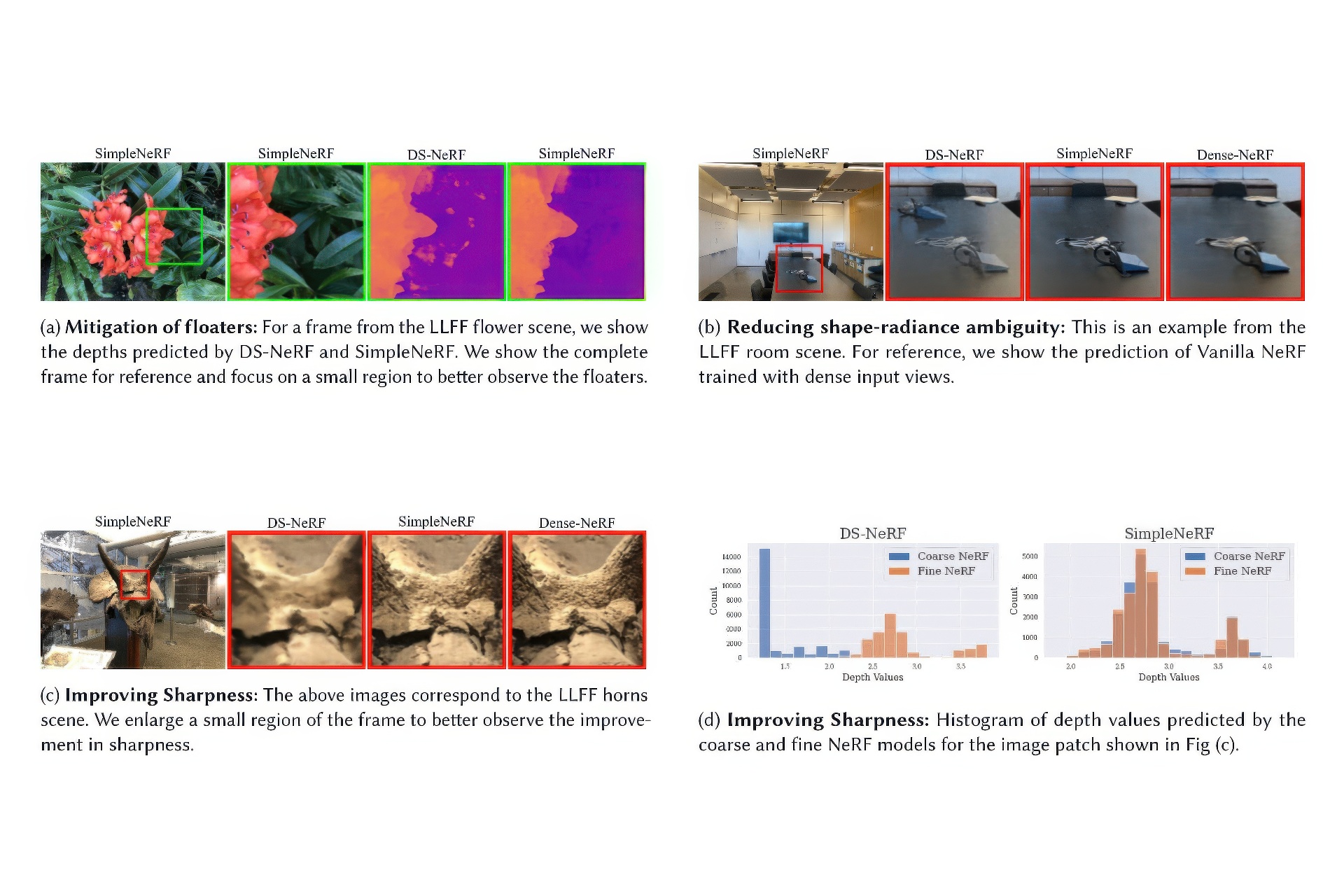

Neural Radiance Fields (NeRFs) show impressive performance in photo-realistic free-view rendering of scenes. However, NeRFs require dense sampling of images in the given scene, and their performance degrades significantly when only a sparse set of views is available. Researchers have found that supervising the depth estimated by the NeRF helps train it effectively with fewer views. The depth supervision is obtained either from classical approaches or from neural networks pre-trained on a large dataset. While the former may provide only sparse supervision, the latter may suffer from generalization issues. In contrast to these earlier approaches, we seek to learn the depth supervision by designing augmented models and training them along with the NeRF. We design augmented models that encourage simpler solutions by exploring the role of positional encoding and view-dependent radiance in training the few-shot NeRF. The depth estimated by these simpler models is used to supervise the NeRF depth estimates. Since the augmented models can be inaccurate in certain regions, we design a mechanism to choose only reliable depth estimates for supervision. Finally, we add a consistency loss between the coarse and fine multi-layer perceptrons of the NeRF to ensure better utilization of hierarchical sampling. With these regularizations, we achieve state-of-the-art view-synthesis performance on two popular datasets.
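The ingredients named in the abstract can be illustrated with a small NumPy sketch. It is not the authors' implementation: the function names, the boolean `reliable` mask, and the choice of mean squared error are assumptions made for illustration, and the abstract does not say how reliability is actually decided. The sketch shows a standard NeRF-style positional encoding (where fewer frequencies give a smoother, "simpler" input representation), the usual volume-rendering estimate of depth along a ray, and a depth loss restricted to rays flagged as reliable.

```python
import numpy as np

def positional_encoding(x, num_freqs):
    """NeRF-style encoding: [x, sin(2^k x), cos(2^k x)] for k = 0..num_freqs-1."""
    x = np.asarray(x, dtype=np.float64)
    feats = [x]
    for k in range(num_freqs):
        feats.append(np.sin((2.0 ** k) * x))
        feats.append(np.cos((2.0 ** k) * x))
    return np.concatenate(feats, axis=-1)

def expected_depth(sigma, t_vals):
    """Expected depth per ray from densities `sigma` at sample depths `t_vals`."""
    sigma = np.asarray(sigma, dtype=np.float64)
    t_vals = np.asarray(t_vals, dtype=np.float64)
    # Distance between consecutive samples; the last interval is treated as very long.
    deltas = np.diff(t_vals, axis=-1)
    deltas = np.concatenate([deltas, np.full_like(deltas[..., :1], 1e10)], axis=-1)
    alpha = 1.0 - np.exp(-sigma * deltas)
    # Transmittance: probability that the ray reaches each sample unoccluded.
    trans = np.cumprod(1.0 - alpha + 1e-10, axis=-1)
    trans = np.concatenate([np.ones_like(trans[..., :1]), trans[..., :-1]], axis=-1)
    weights = alpha * trans
    return np.sum(weights * t_vals, axis=-1)

def masked_depth_loss(depth_main, depth_aug, reliable):
    """MSE between main and augmented depths, only on rays flagged reliable.

    `reliable` is a hypothetical boolean mask; how it is computed is not
    specified in the abstract and is left to the caller here.
    """
    depth_main = np.asarray(depth_main, dtype=np.float64)
    depth_aug = np.asarray(depth_aug, dtype=np.float64)
    reliable = np.asarray(reliable, dtype=bool)
    if not reliable.any():
        return 0.0
    diff = depth_main[reliable] - depth_aug[reliable]
    return float(np.mean(diff ** 2))
```

Under these assumptions, an augmented model would differ from the main model only in using a smaller `num_freqs` (or in dropping the view direction input), and its depth would serve as the target in `masked_depth_loss`. In an autodiff framework one would likely treat that target as a constant. A coarse-to-fine consistency term could plausibly reuse the same masked form, with the coarse and fine depths in place of the main and augmented ones.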
