“ShapeAssembly: learning to generate programs for 3D shape structure synthesis” by Jones, Barton, Xu, Wang, Jiang, et al. …

Title:

- ShapeAssembly: learning to generate programs for 3D shape structure synthesis

Session/Category Title:

- Learning to Move and Synthesize

Abstract:

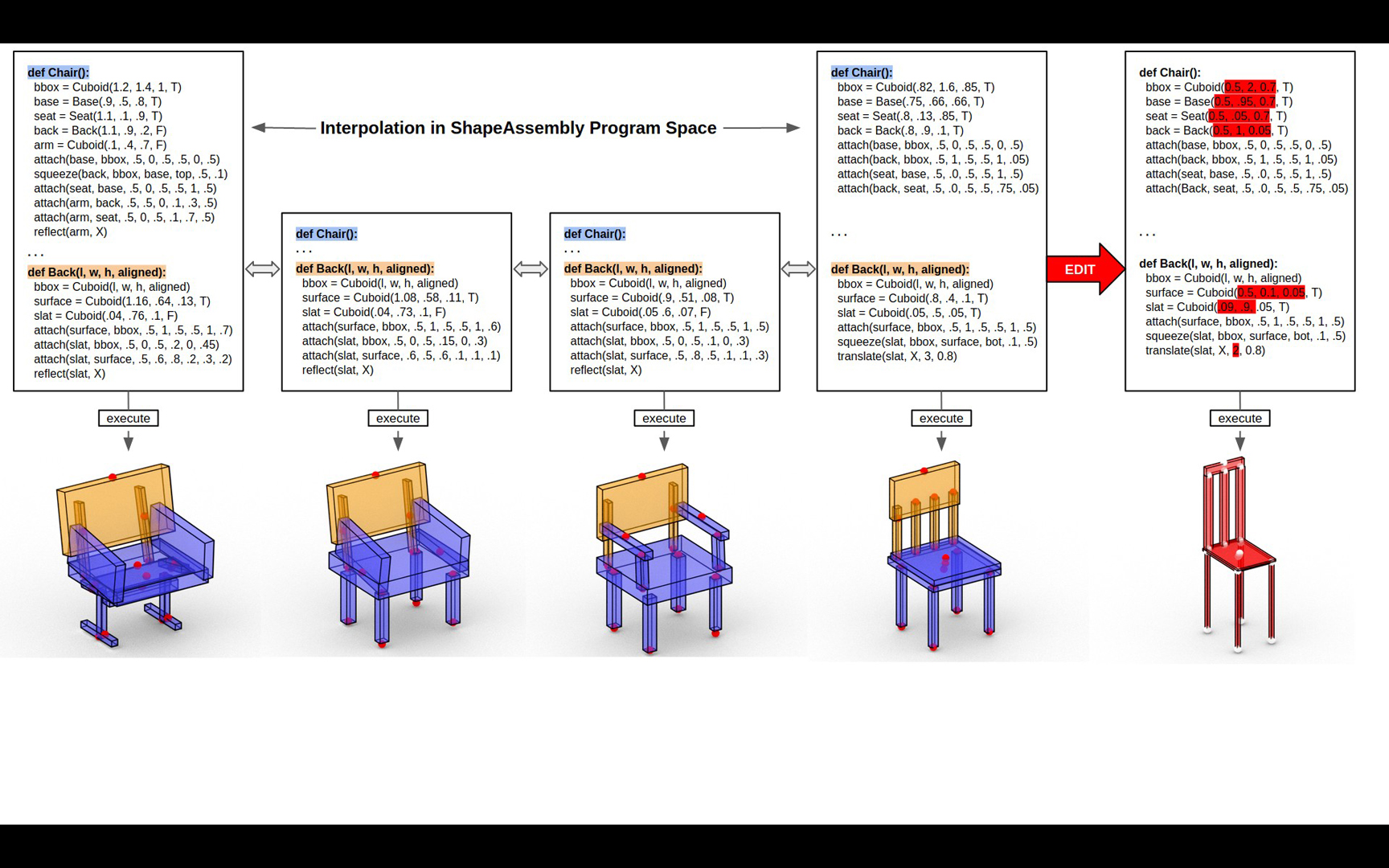

Manually authoring 3D shapes is difficult and time-consuming; generative models of 3D shapes offer compelling alternatives. Procedural representations are one such possibility: they offer high-quality and editable results but are difficult to author and often produce outputs with limited diversity. On the other extreme are deep generative models: given enough data, they can learn to generate any class of shape, but their outputs have artifacts and the representation is not editable.

In this paper, we take a step towards achieving the best of both worlds for novel 3D shape synthesis. First, we propose ShapeAssembly, a domain-specific “assembly-language” for 3D shape structures. ShapeAssembly programs construct shape structures by declaring cuboid part proxies and attaching them to one another, in a hierarchical and symmetrical fashion. ShapeAssembly functions are parameterized with continuous free variables, so that one program structure is able to capture a family of related shapes.

We show how to extract ShapeAssembly programs from existing shape structures in the PartNet dataset. Then, we train a deep generative model, a hierarchical sequence VAE, that learns to write novel ShapeAssembly programs. Our approach leverages the strengths of each representation: the program captures the subset of shape variability that is interpretable and editable, and the deep generative model captures variability and correlations across shape collections that are hard to express procedurally.

We evaluate our approach by comparing the shapes output by our generated programs to those from other recent shape structure synthesis models. We find that our generated shapes are more plausible and physically valid than those of other methods. Additionally, we assess the latent spaces of these models, and find that ours is better structured and produces smoother interpolations.

As an application, we use our generative model and differentiable program interpreter to infer and fit shape programs to unstructured geometry, such as point clouds.
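The idea of declaring cuboid proxies, attaching them, and adding symmetry can be illustrated with a minimal Python sketch. This is not the paper's interpreter. The names `Cuboid`, `attach`, `reflect`, `stool`, and `bbox` are hypothetical and only loosely echo the abstract's vocabulary. The semantics are simplified assumptions: boxes are axis-aligned, attachment is by translation only, and reflection is across a plane through the origin. The hypothetical `stool` function shows how continuous free variables let one program structure yield a family of related shapes.

```python
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Cuboid:
    """Axis-aligned part proxy: full extents along x, y, z and a world-space center."""
    dims: Vec3
    center: Vec3 = (0.0, 0.0, 0.0)

    def point(self, u: float, v: float, w: float) -> Vec3:
        """Map local coordinates in [0, 1]^3 to a world-space point on or in the box."""
        return tuple(c + (t - 0.5) * d
                     for c, t, d in zip(self.center, (u, v, w), self.dims))


def attach(child: Cuboid, parent: Cuboid, child_uvw: Vec3, parent_uvw: Vec3) -> Cuboid:
    """Translate `child` so its local point `child_uvw` meets `parent_uvw` on `parent`.
    Assumed simplification: translation only, no rotation or scaling."""
    target = parent.point(*parent_uvw)
    current = child.point(*child_uvw)
    child.center = tuple(c + (t - s) for c, t, s in zip(child.center, target, current))
    return child


def reflect(cub: Cuboid, axis: int) -> Cuboid:
    """Return a mirrored copy of `cub` across the plane where coordinate `axis` is 0."""
    center = list(cub.center)
    center[axis] = -center[axis]
    return Cuboid(cub.dims, tuple(center))


def stool(seat_height: float, seat_size: float, leg_thickness: float) -> List[Cuboid]:
    """Hypothetical program: one fixed structure, a family of shapes via free variables."""
    seat_thickness = 0.05
    # Place the seat so that its bottom face lies at y = seat_height.
    seat = Cuboid((seat_size, seat_thickness, seat_size),
                  (0.0, seat_height + seat_thickness / 2, 0.0))
    leg = Cuboid((leg_thickness, seat_height, leg_thickness))
    # The top-center of the leg meets the underside of the seat near one corner.
    attach(leg, seat, (0.5, 1.0, 0.5), (0.1, 0.0, 0.1))
    # Symmetry: mirror across x = 0, then mirror both legs across z = 0.
    legs = [leg, reflect(leg, 0)]
    legs += [reflect(c, 2) for c in legs]
    return [seat] + legs


def bbox(cuboids: List[Cuboid]) -> Tuple[List[float], List[float]]:
    """Axis-aligned bounding box (min corner, max corner) of a list of cuboids."""
    lo = [min(c.center[i] - c.dims[i] / 2 for c in cuboids) for i in range(3)]
    hi = [max(c.center[i] + c.dims[i] / 2 for c in cuboids) for i in range(3)]
    return lo, hi


if __name__ == "__main__":
    for h in (0.45, 0.6):
        parts = stool(h, 0.4, 0.04)
        print(len(parts), bbox(parts))
```

Calling `stool(0.45, 0.4, 0.04)` and `stool(0.6, 0.4, 0.04)` produces the same five-part structure (a seat plus four symmetric legs) at different heights. In the paper, by contrast, a hierarchical sequence VAE writes such programs, choosing both the structure and the parameter values.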
