“Semi-supervised video-driven facial animation transfer for production” by Moser, Chien, Williams, Serra, Hendler, et al. …

Conference:

Type(s):

Title:

- Semi-supervised video-driven facial animation transfer for production

Session/Category Title:

- Facial Animation and Rendering

Presenter(s)/Author(s):

Abstract:

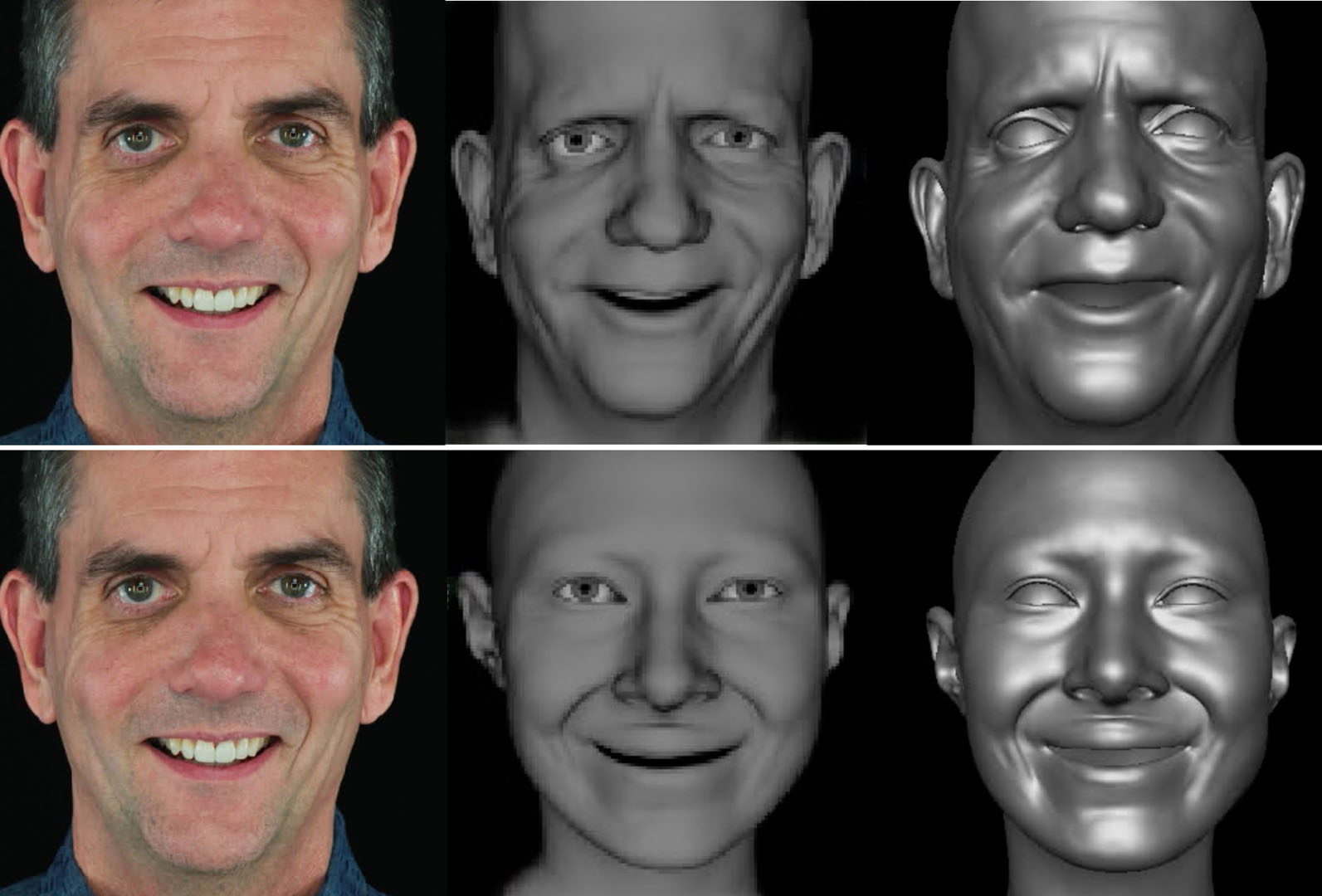

We propose a simple algorithm for automatic transfer of facial expressions, from videos to a 3D character, as well as between distinct 3D characters through their rendered animations. Our method begins by learning a common, semantically-consistent latent representation for the different input image domains using an unsupervised image-to-image translation model. It subsequently learns, in a supervised manner, a linear mapping from the character images’ encoded representation to the animation coefficients. At inference time, given the source domain (i.e., actor footage), it regresses the corresponding animation coefficients for the target character. Expressions are automatically remapped between the source and target identities despite differences in physiognomy. We show how our technique can be used in the context of markerless motion capture with controlled lighting conditions, for one actor and for multiple actors. Additionally, we show how it can be used to automatically transfer facial animation between distinct characters without consistent mesh parameterization and without engineered geometric priors. We compare our method with standard approaches used in production and with recent state-of-the-art models on single camera face tracking.
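The supervised stage described in the abstract, a linear mapping from encoded character images to animation coefficients, can be illustrated with a minimal sketch. Everything concrete here is an assumption made for illustration and not taken from the paper: the latent codes are random vectors standing in for the output of the image-to-image translation encoder, the dimensions (3 latent values, 2 coefficients) are arbitrary, the fit uses ordinary least squares with a tiny ridge term, and the names `fit_linear_map`, `predict`, and `solve` are hypothetical.

```python
import random


def solve(A, B):
    """Solve A X = B by Gauss-Jordan elimination with partial pivoting."""
    n = len(A)
    M = [list(A[i]) + list(B[i]) for i in range(n)]  # augmented matrix [A | B]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        p = M[col][col]
        M[col] = [v / p for v in M[col]]
        for r in range(n):
            if r != col:
                f = M[r][col]
                M[r] = [a - f * b for a, b in zip(M[r], M[col])]
    return [row[n:] for row in M]


def fit_linear_map(latents, coeffs, ridge=1e-9):
    """Fit W minimizing ||[z, 1] W - c||^2 via the normal equations.

    The small ridge term only guards against a singular system; it is an
    assumption of this sketch, not something stated in the abstract.
    """
    X = [list(z) + [1.0] for z in latents]  # append a constant bias feature
    d, k = len(X[0]), len(coeffs[0])
    XtX = [[sum(x[i] * x[j] for x in X) + (ridge if i == j else 0.0)
            for j in range(d)] for i in range(d)]
    XtC = [[sum(x[i] * c[j] for x, c in zip(X, coeffs))
            for j in range(k)] for i in range(d)]
    return solve(XtX, XtC)


def predict(W, z):
    """Apply the fitted linear map (with bias) to one latent code."""
    x = list(z) + [1.0]
    return [sum(x[i] * W[i][j] for i in range(len(x))) for j in range(len(W[0]))]


# Synthetic stand-ins: latent codes of rendered character frames paired with
# the animation coefficients that produced them (hypothetical data).
rng = random.Random(0)
TRUE_W = [[0.5, -0.2], [0.1, 0.8], [-0.3, 0.4], [0.05, 0.1]]  # last row = bias
character_latents = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(50)]
character_coeffs = [predict(TRUE_W, z) for z in character_latents]

W = fit_linear_map(character_latents, character_coeffs)

# At inference, a latent code encoded from actor footage is assumed to live in
# the same shared space, so the same map yields target-character coefficients.
actor_latent = [0.2, -0.4, 0.7]
estimated = predict(W, actor_latent)
expected = predict(TRUE_W, actor_latent)
```

The sketch relies on the property the abstract attributes to the first stage: because actor and character images share one semantically consistent latent space, a map fitted only on labeled character renders can be applied to codes from actor footage.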
