“SceneGrok: inferring action maps in 3D environments” by Savva, Chang, Hanrahan, Fisher and Nießner

Title:

- SceneGrok: inferring action maps in 3D environments

Session/Category Title:

- Scenes, Syntax, Statistics and Semantics

Abstract:

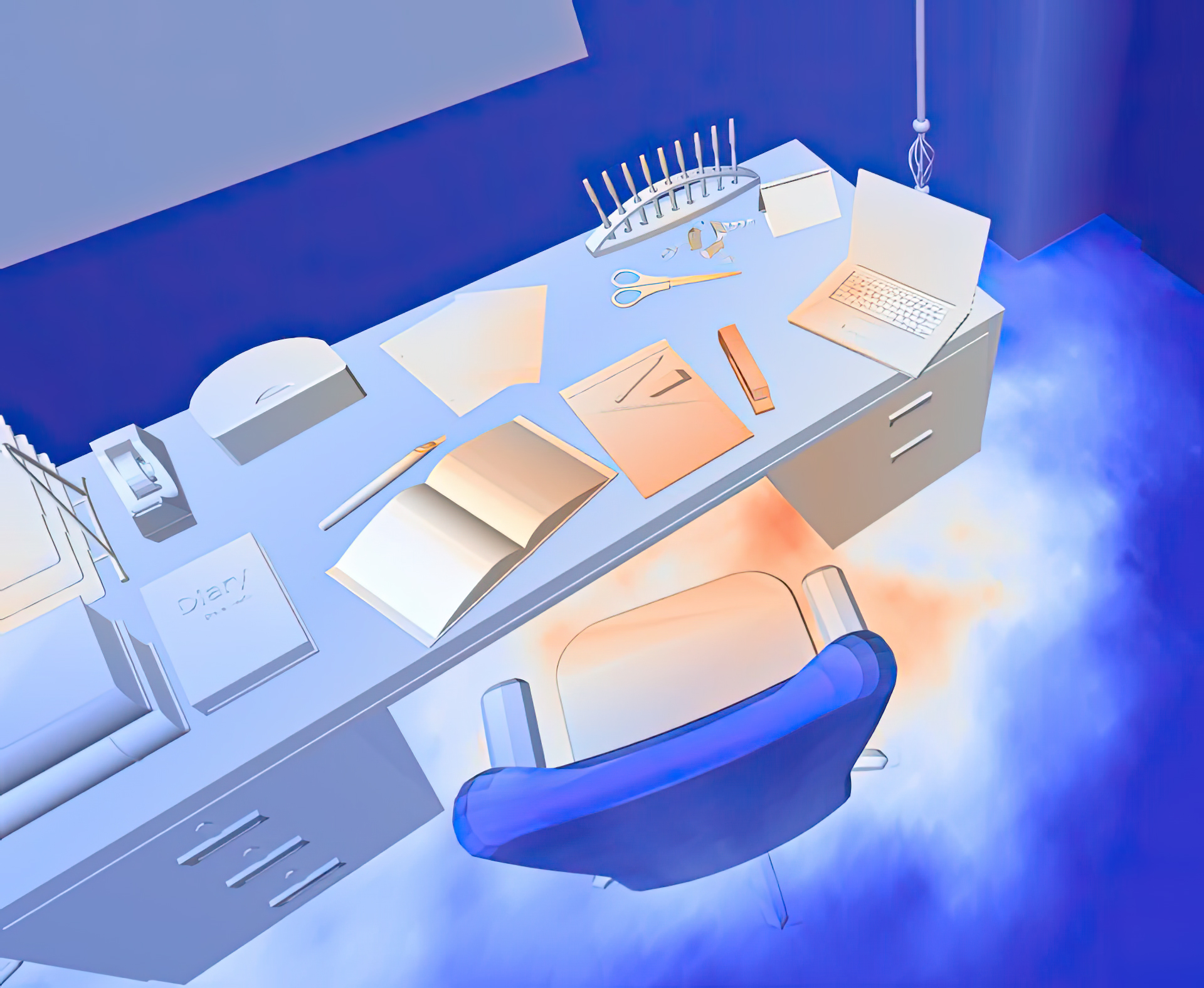

With modern computer graphics, we can generate enormous amounts of 3D scene data. It is now possible to capture high-quality 3D representations of large real-world environments. Large shape and scene databases, such as the Trimble 3D Warehouse, are publicly accessible and constantly growing. Unfortunately, while a great amount of 3D content exists, most of it is detached from the semantics and functionality of the objects it represents. In this paper, we present a method to establish a correlation between the geometry and the functionality of 3D environments. Using RGB-D sensors, we capture dense 3D reconstructions of real-world scenes, and observe and track people as they interact with the environment. With these observations, we train a classifier which can transfer interaction knowledge to unobserved 3D scenes. We predict a likelihood of a given action taking place over all locations in a 3D environment and refer to this representation as an action map over the scene. We demonstrate prediction of action maps in both 3D scans and virtual scenes. We evaluate our predictions against ground truth annotations by people, and present an approach for characterizing 3D scenes by functional similarity using action maps.
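As a rough illustration of the action map idea described in the abstract (a likelihood for one action evaluated over all locations in a scene), the following minimal sketch samples a small 2D floor grid and scores each location. It is not the authors' implementation: the names `action_map`, `grid`, and `toy_sit_score` are hypothetical, and the scoring function is a hand-written stand-in for the trained classifier, assuming a single chair at position (1.0, 1.0).

```python
from typing import Callable, Dict, List, Tuple

Location = Tuple[float, float]  # (x, z) position on the floor plane, in meters


def grid(width: int, depth: int, step: float) -> List[Location]:
    """Sample locations on a regular width x depth grid with the given spacing."""
    return [(x * step, z * step) for x in range(width) for z in range(depth)]


def action_map(
    locations: List[Location],
    score: Callable[[Location], float],
) -> Dict[Location, float]:
    """Evaluate a per-location likelihood for one action at every sampled location.

    `score` stands in for a trained classifier; its output is clamped to [0, 1].
    """
    return {loc: min(1.0, max(0.0, score(loc))) for loc in locations}


def toy_sit_score(loc: Location) -> float:
    """Hypothetical stand-in for a classifier: the likelihood of "sit"
    decays with squared distance from an assumed chair at (1.0, 1.0)."""
    dx, dz = loc[0] - 1.0, loc[1] - 1.0
    return 1.0 / (1.0 + 4.0 * (dx * dx + dz * dz))


# Build the map for the "sit" action over a 5 x 5 grid with 0.5 m spacing.
sit_map = action_map(grid(5, 5, 0.5), toy_sit_score)

# The most likely location for the action under this toy scoring function.
best = max(sit_map, key=sit_map.get)
```

In the setting the abstract describes, the scoring function would be replaced by a classifier trained on observed interactions, and the sampled locations would come from a 3D scan or a virtual scene rather than a flat grid.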
