“Practical course on computing derivatives in code” Chaired by

Conference:

Type(s):

Title:

- Practical course on computing derivatives in code

Presenter(s)/Author(s):

Entry Number:

- 12

Abstract:

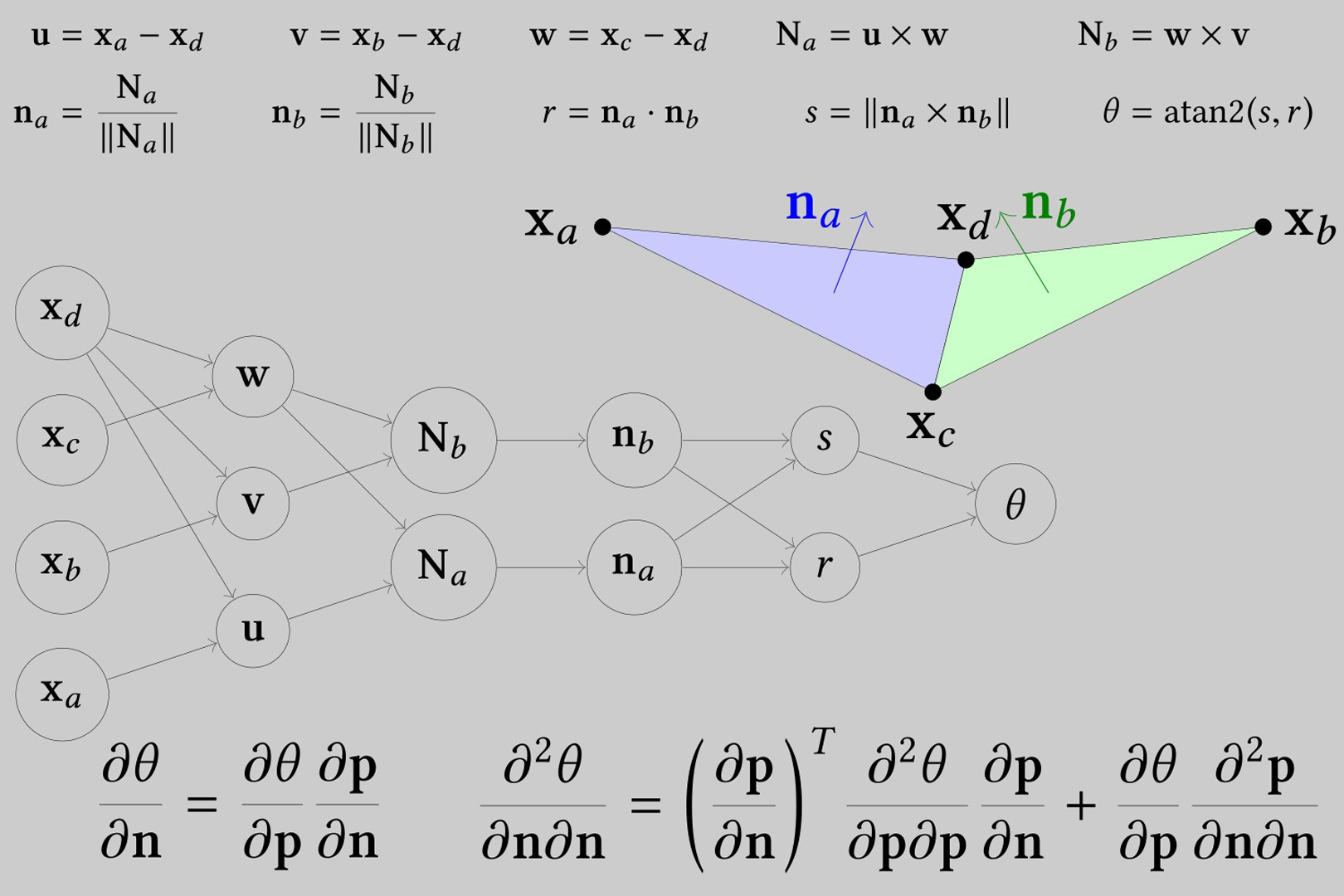

Derivatives occur frequently in computer graphics and arise in many different contexts. Gradients and often Hessians of objective functions are required for efficient optimization. Gradients of potential energy are used to compute forces. Constitutive models are frequently formulated from an energy density, which must be differentiated to compute stress. Hessians of potential energy or energy density are needed for implicit integration. As the methods used in computer graphics become more accurate and sophisticated, the complexity of the functions that must be differentiated also increases. The purpose of this course is to show that it is practical to compute derivatives even for functions that may seem impossibly complex. This course provides practical strategies and techniques for planning, computing, testing, debugging, and optimizing routines for computing first and second derivatives of real-world routines. This course will also introduce and explore auto differentiation, which encompasses a variety of techniques for obtaining derivatives automatically. Applications to machine learning and differentiable simulation are also considered. The goal of this course is not to introduce the concept of derivatives, how to use them, or even how to calculate them per se. This is not intended to be a calculus course; we will assume that our audience is familiar with multivariable calculus. Instead, the emphasis is on implementing derivatives of complicated computational procedures in computer programs and actually getting them to work.

References:

- Michael Bartholomew-Biggs, Steven Brown, Bruce Christianson, and Laurence Dixon. 2000. Automatic differentiation of algorithms. J. Comput. Appl. Math. 124, 1–2 (2000), 171–190.

- Atilim Gunes Baydin, Barak A Pearlmutter, Alexey Andreyevich Radul, and Jeffrey Mark Siskind. 2018. Automatic differentiation in machine learning: a survey. Journal of Marchine Learning Research 18 (2018), 1–43.

- Robin J Hogan. 2014. Fast reverse-mode automatic differentiation using expression templates in C++. ACM Transactions on Mathematical Software (TOMS) 40, 4 (2014), 26.

- Uwe Naumann. 2008. Optimal Jacobian accumulation is NP-complete. Mathematical Programming 112, 2 (2008), 427–441.

- A. Stomakhin, R. Howes, C. Schroeder, and J.M. Teran. 2012. Energetically Consistent Invertible Elasticity. In Proc. Symp. Comp. Anim. 25–32.