“Portrait3D: Text-guided High-quality 3D Portrait Generation Using Pyramid Representation and GANs Prior”

Conference:

Type(s):

Title:

- Portrait3D: Text-guided High-quality 3D Portrait Generation Using Pyramid Representation and GANs Prior

Presenter(s)/Author(s):

Abstract:

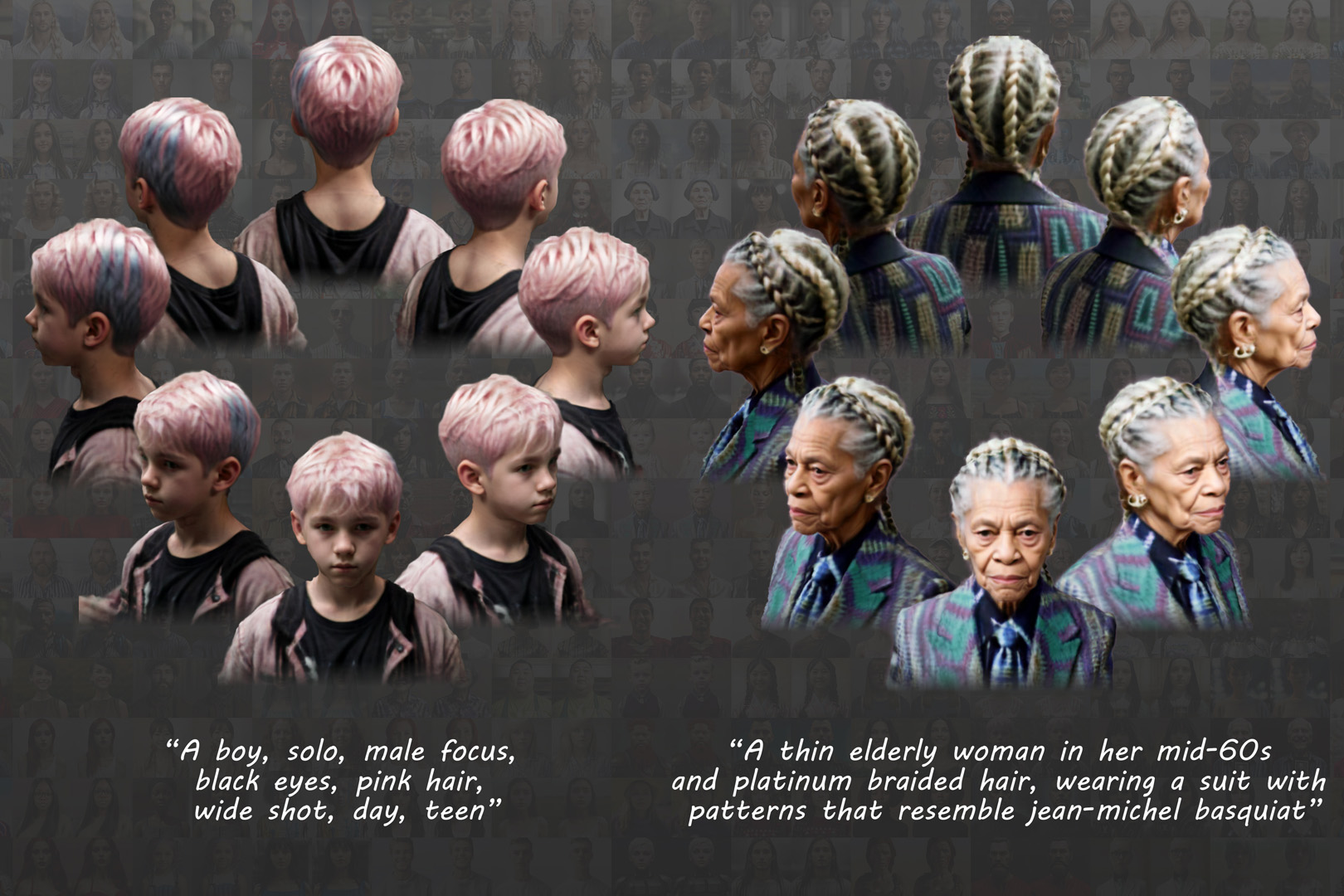

We present Portrait3D, a novel text-to-3D-portrait generation framework that produces high-quality, view-consistent, realistic, and canonical 3D portraits that are in alignment with the input text prompts.

References:

[1]

Badour Albahar, Shunsuke Saito, Hung-Yu Tseng, Changil Kim, Johannes Kopf, and Jia-Bin Huang. 2023. Single-Image 3D Human Digitization with Shape-Guided Diffusion. In SIGGRAPH Asia 2023 Conference Papers. Article 62, 11 pages.

[2]

Thiemo Alldieck, Hongyi Xu, and Cristian Sminchisescu. 2021. imGHUM: Implicit Generative Models of 3D Human Shape and Articulated Pose. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV). 5461–5470.

[3]

Sizhe An, Hongyi Xu, Yichun Shi, Guoxian Song, Umit Y. Ogras, and Linjie Luo. 2023. PanoHead: Geometry-Aware 3D Full-Head Synthesis in 360deg. In IEEE/CVF Conference on Computer Vision and Pattern Recognition, CVPR. 20950–20959.

[4]

Eric R. Chan, Connor Z. Lin, Matthew A. Chan, Koki Nagano, Boxiao Pan, Shalini De Mello, Orazio Gallo, Leonidas J. Guibas, Jonathan Tremblay, Sameh Khamis, Tero Karras, and Gordon Wetzstein. 2022. Efficient Geometry-aware 3D Generative Adversarial Networks. In IEEE/CVF Conference on Computer Vision and Pattern Recognition, CVPR. 16102–16112.

[5]

Eric R. Chan, Marco Monteiro, Petr Kellnhofer, Jiajun Wu, and Gordon Wetzstein. 2021. Pi-GAN: Periodic Implicit Generative Adversarial Networks for 3D-Aware Image Synthesis. In IEEE Conference on Computer Vision and Pattern Recognition, CVPR. 5799–5809.

[6]

Xingyu Chen, Yu Deng, and Baoyuan Wang. 2023a. Mimic3D: Thriving 3D-Aware GANs via 3D-to-2D Imitation. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV). 2338–2348.

[7]

Yufan Chen, Lizhen Wang, Qijing Li, Hongjiang Xiao, Shengping Zhang, Hongxun Yao, and Yebin Liu. 2023b. MonoGaussianAvatar: Monocular Gaussian Point-based Head Avatar. CoRR abs/2312.04558 (2023).

[8]

Jun Gao, Tianchang Shen, Zian Wang, Wenzheng Chen, Kangxue Yin, Daiqing Li, Or Litany, Zan Gojcic, and Sanja Fidler. 2022. GET3D: A Generative Model of High Quality 3D Textured Shapes Learned from Images. In Advances in Neural Information Processing Systems, Vol. 35. 31841–31854.

[9]

Ian J. Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron C. Courville, and Yoshua Bengio. 2014. Generative Adversarial Nets. In Advances in Neural Information Processing Systems, Vol. 27. 2672–2680.

[10]

Jiatao Gu, Lingjie Liu, Peng Wang, and Christian Theobalt. 2022. StyleNeRF: A Style-based 3D Aware Generator for High-resolution Image Synthesis. In The 10th International Conference on Learning Representations, ICLR.

[11]

Riza Alp G?ler, Natalia Neverova, and Iasonas Kokkinos. 2018. DensePose: Dense Human Pose Estimation in the Wild. In IEEE/CVF Conference on Computer Vision and Pattern Recognition, CVPR. 7297–7306.

[12]

Ishaan Gulrajani, Faruk Ahmed, Mart?n Arjovsky, Vincent Dumoulin, and Aaron C. Courville. 2017. Improved Training of Wasserstein GANs. In Advances in Neural Information Processing Systems, Vol. 30. 5767–5777.

[13]

Xiao Han, Yukang Cao, Kai Han, Xiatian Zhu, Jiankang Deng, Yi-Zhe Song, Tao Xiang, and Kwan-Yee K. Wong. 2023. HeadSculpt: Crafting 3D Head Avatars with Text. CoRR abs/2306.03038 (2023).

[14]

Ayaan Haque, Matthew Tancik, Alexei A. Efros, Aleksander Holynski, and Angjoo Kanazawa. 2023. Instruct-NeRF2NeRF: Editing 3D Scenes with Instructions. In IEEE/CVF International Conference on Computer Vision, ICCV 2023, Paris, France, October 1–6, 2023. IEEE, 19683–19693.

[15]

Jack Hessel, Ari Holtzman, Maxwell Forbes, Ronan Le Bras, and Yejin Choi. 2021. CLIPScore: A Reference-free Evaluation Metric for Image Captioning. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing, EMNLP. 7514–7528.

[16]

Martin Heusel, Hubert Ramsauer, Thomas Unterthiner, Bernhard Nessler, and Sepp Hochreiter. 2017. GANs Trained by a Two Time-Scale Update Rule Converge to a Local Nash Equilibrium. In Advances in Neural Information Processing Systems, Vol. 30. 6626–6637.

[17]

Hsuan-I Ho, Jie Song, and Otmar Hilliges. 2023. SiTH: Single-view Textured Human Reconstruction with Image-Conditioned Diffusion. arXiv:2311.15855 [cs.CV]

[18]

Jonathan Ho, Ajay Jain, and Pieter Abbeel. 2020. Denoising Diffusion Probabilistic Models. In Advances in Neural Information Processing Systems, Vol. 33. 6840–6851.

[19]

Shoukang Hu, Fangzhou Hong, Tao Hu, Liang Pan, Haiyi Mei, Weiye Xiao, Lei Yang, and Ziwei Liu. 2023. HumanLiff: Layer-wise 3D Human Generation with Diffusion Model. CoRR abs/2308.09712 (2023).

[20]

Xin Huang, Ruizhi Shao, Qi Zhang, Hongwen Zhang, Ying Feng, Yebin Liu, and Qing Wang. 2023a. HumanNorm: Learning Normal Diffusion Model for High-quality and Realistic 3D Human Generation. CoRR abs/2310.01406 (2023).

[21]

Yangyi Huang, Hongwei Yi, Yuliang Xiu, Tingting Liao, Jiaxiang Tang, Deng Cai, and Justus Thies. 2023b. arXiv:2308.08545 [cs.CV]

[22]

Ruixiang Jiang, Can Wang, Jingbo Zhang, Menglei Chai, Mingming He, Dongdong Chen, and Jing Liao. 2023. AvatarCraft: Transforming Text into Neural Human Avatars with Parameterized Shape and Pose Control. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV). 14371–14382.

[23]

Tero Karras, Timo Aila, Samuli Laine, and Jaakko Lehtinen. 2018. Progressive Growing of GANs for Improved Quality, Stability, and Variation. In 6th International Conference on Learning Representations, ICLR.

[24]

Bernhard Kerbl, Georgios Kopanas, Thomas Leimkuehler, and George Drettakis. 2023a. 3D Gaussian Splatting for Real-Time Radiance Field Rendering. ACM Trans. Graph. 42, 4 (2023), 139:1–139:14.

[25]

Bernhard Kerbl, Georgios Kopanas, Thomas Leimk?hler, and George Drettakis. 2023b. 3D Gaussian Splatting for Real-Time Radiance Field Rendering. ACM Trans. Graph. 42, 4 (2023), 139:1–139:14.

[26]

Jaehoon Ko, Kyusun Cho, Daewon Choi, Kwangrok Ryoo, and Seungryong Kim. 2023. 3D GAN Inversion with Pose Optimization. In IEEE/CVF Winter Conference on Applications of Computer Vision, WACV 2023, Waikoloa, HI, USA, January 2–7, 2023. 2966–2975.

[27]

Nikos Kolotouros, Thiemo Alldieck, Andrei Zanfir, Eduard Gabriel Bazavan, Mihai Fieraru, and Cristian Sminchisescu. 2023. DreamHuman: Animatable 3D Avatars from Text. CoRR abs/2306.09329 (2023).

[28]

Tianye Li, Timo Bolkart, Michael J. Black, Hao Li, and Javier Romero. 2017. Learning a model of facial shape and expression from 4D scans. ACM Trans. Graph. 36, 6 (2017), 194:1–194:17.

[29]

Yixun Liang, Xin Yang, Jiantao Lin, Haodong Li, Xiaogang Xu, and Yingcong Chen. 2023. LucidDreamer: Towards High-Fidelity Text-to-3D Generation via Interval Score Matching. CoRR abs/2311.11284 (2023).

[30]

Tingting Liao, Hongwei Yi, Yuliang Xiu, Jiaxiang Tang, Yangyi Huang, Justus Thies, and Michael J. Black. 2023. TADA! Text to Animatable Digital Avatars. CoRR abs/2308.10899 (2023).

[31]

Xian Liu, Jian Ren, Aliaksandr Siarohin, Ivan Skorokhodov, Yanyu Li, Dahua Lin, Xihui Liu, Ziwei Liu, and Sergey Tulyakov. 2023a. HyperHuman: Hyper-Realistic Human Generation with Latent Structural Diffusion. CoRR abs/2310.08579 (2023).

[32]

Xian Liu, Xiaohang Zhan, Jiaxiang Tang, Ying Shan, Gang Zeng, Dahua Lin, Xihui Liu, and Ziwei Liu. 2023b. HumanGaussian: Text-Driven 3D Human Generation with Gaussian Splatting. CoRR abs/2311.17061 (2023).

[33]

Matthew Loper, Naureen Mahmood, Javier Romero, Gerard Pons-Moll, and Michael J. Black. 2015. SMPL: a skinned multi-person linear model. ACM Trans. Graph. 34, 6 (2015), 248:1–248:16.

[34]

Ben Mildenhall, Pratul P. Srinivasan, Matthew Tancik, Jonathan T. Barron, Ravi Ramamoorthi, and Ren Ng. 2020. NeRF: Representing Scenes as Neural Radiance Fields for View Synthesis. In Computer Vision – ECCV 2020 – 16th European Conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part I (Lecture Notes in Computer Science, Vol. 12346). 405–421.

[35]

Thomas M?ller, Alex Evans, Christoph Schied, and Alexander Keller. 2022. Instant neural graphics primitives with a multiresolution hash encoding. ACM Trans. Graph. 41, 4 (2022), 102:1–102:15.

[36]

Thu H Nguyen-Phuoc, Christian Richardt, Long Mai, Yongliang Yang, and Niloy Mitra. 2020. BlockGAN: Learning 3D Object-aware Scene Representations from Unlabelled Images. In Advances in Neural Information Processing Systems, Vol. 33. 6767–6778.

[37]

Ben Poole, Ajay Jain, Jonathan T. Barron, and Ben Mildenhall. 2023. DreamFusion: Text-to-3D using 2D Diffusion. In The 11th International Conference on Learning Representations, ICLR.

[38]

Alec Radford, Luke Metz, and Soumith Chintala. 2016. Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks. In 4th International Conference on Learning Representations, ICLR.

[39]

Alfredo Rivero, ShahRukh Athar, Zhixin Shu, and Dimitris Samaras. 2024. Rig3DGS: Creating Controllable Portraits from Casual Monocular Videos. CoRR abs/2402.03723 (2024).

[40]

Robin Rombach, Andreas Blattmann, Dominik Lorenz, Patrick Esser, and Bj?rn Ommer. 2022. High-Resolution Image Synthesis With Latent Diffusion Models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). 10684–10695.

[41]

Katja Schwarz, Axel Sauer, Michael Niemeyer, Yiyi Liao, and Andreas Geiger. 2022. VoxGRAF: Fast 3D-Aware Image Synthesis with Sparse Voxel Grids. In Advances in Neural Information Processing Systems, Vol. 35. 33999–34011.

[42]

Ivan Skorokhodov, Sergey Tulyakov, Yiqun Wang, and Peter Wonka. 2022. EpiGRAF: Rethinking training of 3D GANs. CoRR abs/2206.10535 (2022). arXiv:2206.10535

[43]

Jingxiang Sun, Xuan Wang, Lizhen Wang, Xiaoyu Li, Yong Zhang, Hongwen Zhang, and Yebin Liu. 2022. Next3D: Generative Neural Texture Rasterization for 3D-Aware Head Avatars. CoRR abs/2211.11208 (2022).

[44]

Alex Trevithick, Matthew A. Chan, Michael Stengel, Eric R. Chan, Chao Liu, Zhiding Yu, Sameh Khamis, Manmohan Chandraker, Ravi Ramamoorthi, and Koki Nagano. 2023. Real-Time Radiance Fields for Single-Image Portrait View Synthesis. ACM Trans. Graph. 42, 4 (2023), 135:1–135:15.

[45]

Alex Trevithick, Matthew A. Chan, Towaki Takikawa, Umar Iqbal, Shalini De Mello, Manmohan Chandraker, Ravi Ramamoorthi, and Koki Nagano. 2024. What You See is What You GAN: Rendering Every Pixel for High-Fidelity Geometry in 3D GANs. CoRR abs/2401.02411 (2024).

[46]

Jie Wang, Jiu-Cheng Xie, Xianyan Li, Feng Xu, Chi-Man Pun, and Hao Gao. 2024. GaussianHead: High-fidelity Head Avatars with Learnable Gaussian Derivation. arXiv:2312.01632 [cs.CV]

[47]

Peng Wang, Lingjie Liu, Yuan Liu, Christian Theobalt, Taku Komura, and Wenping Wang. 2021. NeuS: Learning Neural Implicit Surfaces by Volume Rendering for Multi-view Reconstruction. In Advances in Neural Information Processing Systems, Vol. 34. 27171–27183.

[48]

Tengfei Wang, Bo Zhang, Ting Zhang, Shuyang Gu, Jianmin Bao, Tadas Baltrusaitis, Jingjing Shen, Dong Chen, Fang Wen, Qifeng Chen, and Baining Guo. 2023b. RODIN: A Generative Model for Sculpting 3D Digital Avatars Using Diffusion. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). 4563–4573.

[49]

Zhengyi Wang, Cheng Lu, Yikai Wang, Fan Bao, Chongxuan Li, Hang Su, and Jun Zhu. 2023a. ProlificDreamer: High-Fidelity and Diverse Text-to-3D Generation with Variational Score Distillation. In Advances in Neural Information Processing Systems, Vol. 34.

[50]

Yiqian Wu, Hao Xu, Xiangjun Tang, Hongbo Fu, and Xiaogang Jin. 2023a. 3DPortraitGAN: Learning One-Quarter Headshot 3D GANs from a Single-View Portrait Dataset with Diverse Body Poses. arXiv:2307.14770 [cs.CV]

[51]

Yue Wu, Sicheng Xu, Jianfeng Xiang, Fangyun Wei, Qifeng Chen, Jiaolong Yang, and Xin Tong. 2023b. AniPortraitGAN: Animatable 3D Portrait Generation from 2D Image Collections. In SIGGRAPH Asia 2023 Conference Papers, SA 2023, Sydney, NSW, Australia, December 12–15, 2023, June Kim, Ming C. Lin, and Bernd Bickel (Eds.). ACM, 51:1–51:9.

[52]

Jiaxin Xie, Hao Ouyang, Jingtan Piao, Chenyang Lei, and Qifeng Chen. 2023. Highfidelity 3D GAN Inversion by Pseudo-multi-view Optimization. In IEEE/CVF Conference on Computer Vision and Pattern Recognition, CVPR 2023, Vancouver, BC, Canada, June 17–24, 2023. IEEE, 321–331.

[53]

Yuelang Xu, Benwang Chen, Zhe Li, Hongwen Zhang, Lizhen Wang, Zerong Zheng, and Yebin Liu. 2023a. Gaussian Head Avatar: Ultra High-fidelity Head Avatar via Dynamic Gaussians. CoRR abs/2312.03029 (2023).

[54]

Yuanyou Xu, Zongxin Yang, and Yi Yang. 2023b. SEEAvatar: Photorealistic Text-to-3D Avatar Generation with Constrained Geometry and Appearance. arXiv:2312.08889 [cs.CV]

[55]

Han Yi, Zhedong Zheng, Xiangyu Xu, and Tat-Seng Chua. 2023. Progressive Text-to-3D Generation for Automatic 3D Prototyping. CoRR abs/2309.14600 (2023).

[56]

Fei Yin, Yong Zhang, Xuan Wang, Tengfei Wang, Xiaoyu Li, Yuan Gong, Yanbo Fan, Xiaodong Cun, Ying Shan, Cengiz ?ztireli, and Yujiu Yang. 2023. 3D GAN Inversion with Facial Symmetry Prior. In IEEE/CVF Conference on Computer Vision and Pattern Recognition, CVPR 2023, Vancouver, BC, Canada, June 17–24, 2023. IEEE, 342–351.

[57]

Huichao Zhang, Bowen Chen, Hao Yang, Liao Qu, Xu Wang, Li Chen, Chao Long, Feida Zhu, Kang Du, and Min Zheng. 2023a. AvatarVerse: High-quality & Stable 3D Avatar Creation from Text and Pose. CoRR abs/2308.03610 (2023).

[58]

Hao Zhang, Yao Feng, Peter Kulits, Yandong Wen, Justus Thies, and Michael J. Black. 2024. TECA: Text-Guided Generation and Editing of Compositional 3D Avatars.

[59]

Jianfeng Zhang, Xuanmeng Zhang, Huichao Zhang, Jun Hao Liew, Chenxu Zhang, Yi Yang, and Jiashi Feng. 2023c. AvatarStudio: High-fidelity and Animatable 3D Avatar Creation from Text. CoRR abs/2311.17917 (2023).

[60]

Xuanmeng Zhang, Jianfeng Zhang, Rohan Chacko, Hongyi Xu, Guoxian Song, Yi Yang, and Jiashi Feng. 2023b. GETAvatar: Generative Textured Meshes for Animatable Human Avatars. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV). 2273–2282.

[61]

Zhenglin Zhou, Fan Ma, Hehe Fan, and Yi Yang. 2024. HeadStudio: Text to Animatable Head Avatars with 3D Gaussian Splatting. CoRR abs/2402.06149 (2024).

[62]

Jun-Yan Zhu, Zhoutong Zhang, Chengkai Zhang, Jiajun Wu, Antonio Torralba, Josh Tenenbaum, and Bill Freeman. 2018. Visual Object Networks: Image Generation with Disentangled 3D Representations. In Advances in Neural Information Processing Systems, Vol. 31. 118–129.