“Perceptual model for adaptive local shading and refresh rate” by Jindal, Wolski, Myszkowski and Mantiuk

Title:

- Perceptual model for adaptive local shading and refresh rate

Session/Category Title:

- Real-time Rendering

Abstract:

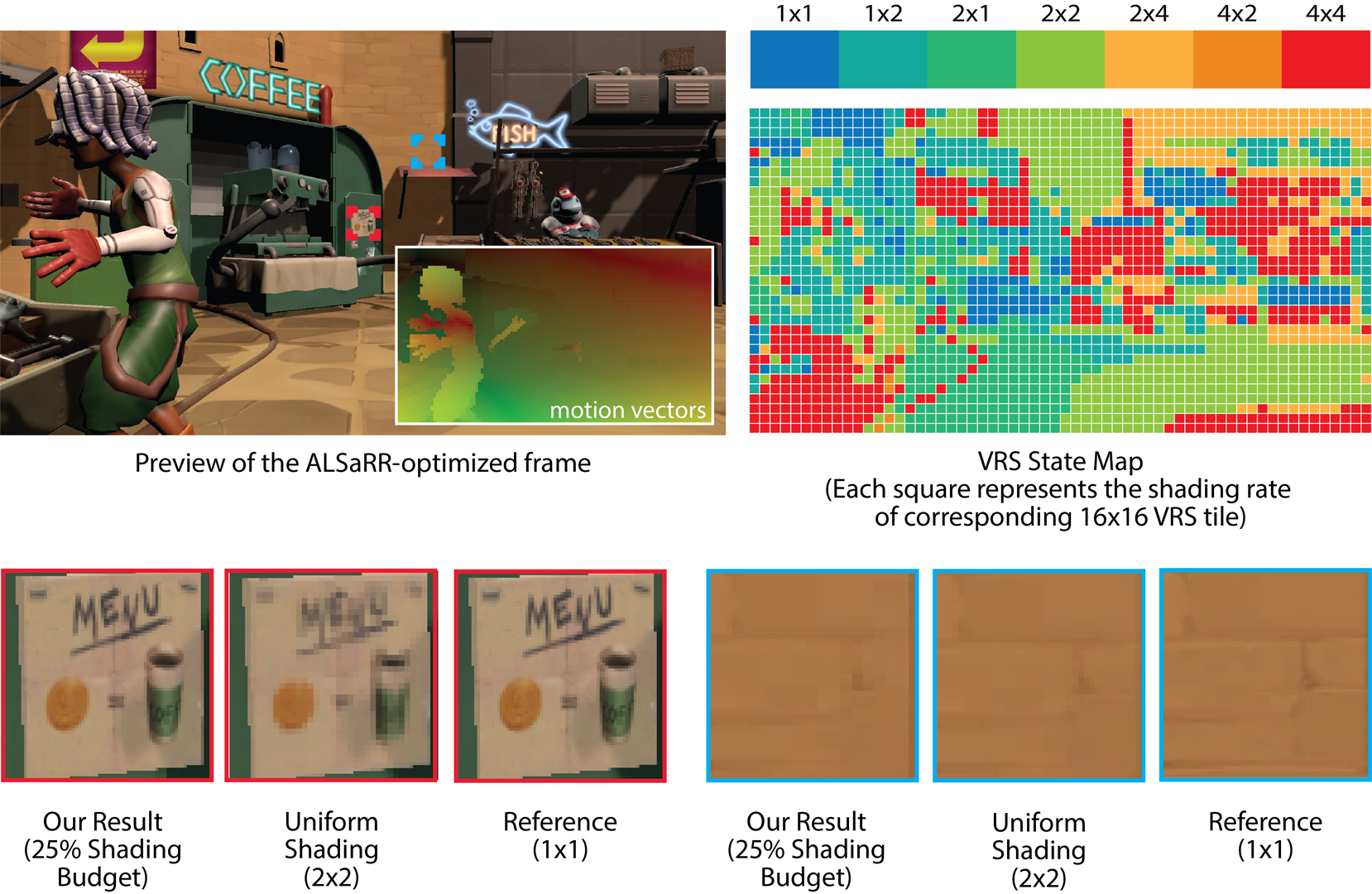

When the rendering budget is limited by power or time, it is necessary to find the combination of rendering parameters, such as resolution and refresh rate, that delivers the best quality. Variable-rate shading (VRS), introduced in recent generations of GPUs, enables fine control of the rendering quality, in which each 16×16 image tile can be rendered with a different ratio of shader executions. We take advantage of this capability and propose a new method for adaptive control of local shading and refresh rate. The method analyzes texture content, on-screen velocities, luminance, and effective resolution and suggests the refresh rate and a VRS state map that maximize the quality of animated content under a limited budget. The method is based on a new content-adaptive metric of judder, aliasing, and blur, which is derived from psychophysical models of contrast sensitivity. To calibrate and validate the metric, we gather data from the literature and also collect new measurements of motion quality under variable shading rates, different velocities of motion, texture content, and display capabilities, such as refresh rate, persistence, and angular resolution. The proposed metric and adaptive shading method are implemented as a game engine plugin. Our experimental validation shows a substantial increase in preference for our method over rendering with a fixed resolution and refresh rate, and over an existing motion-adaptive technique.
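The budget-constrained choice of a per-tile VRS state map described in the abstract can be illustrated with a small sketch. This is not the authors' algorithm or metric: the greedy heuristic, the `Tile` class, the `RATES` table, and all quality numbers below are hypothetical stand-ins, and the sketch ignores the refresh-rate choice entirely. It only shows the general idea of lowering the shading rate first in tiles where a quality predictor reports the smallest loss.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical shading rates, as a fraction of full-rate shader executions
# per tile (1.0 = full rate, 0.25 = one execution per 2x2 pixels).
RATES = (1.0, 0.5, 0.25)


@dataclass
class Tile:
    # quality[k] is an assumed predicted quality of this tile when shaded
    # at RATES[k]; a real system would obtain it from a perceptual metric.
    quality: List[float]


def choose_rates(tiles: List[Tile], budget: float) -> Tuple[List[float], float]:
    """Greedy heuristic: start every tile at full rate, then repeatedly
    downgrade the tile that loses the least quality per unit of cost saved,
    until the total cost fits the budget (in units of full-rate tiles)."""
    level = [0] * len(tiles)
    cost = sum(RATES[k] for k in level)
    while cost > budget:
        best = None  # (quality lost per cost saved, tile index)
        for i, tile in enumerate(tiles):
            k = level[i]
            if k + 1 >= len(RATES):
                continue  # already at the coarsest rate
            saved = RATES[k] - RATES[k + 1]
            lost = tile.quality[k] - tile.quality[k + 1]
            ratio = lost / saved
            if best is None or ratio < best[0]:
                best = (ratio, i)
        if best is None:
            break  # no tile can be downgraded further
        i = best[1]
        cost -= RATES[level[i]] - RATES[level[i] + 1]
        level[i] += 1
    return [RATES[k] for k in level], cost


# A smooth tile (small predicted loss) and a detailed tile (large loss).
tiles = [Tile([1.0, 0.95, 0.9]), Tile([1.0, 0.6, 0.3])]
rates, cost = choose_rates(tiles, budget=1.5)
print(rates, cost)
```

With these made-up numbers the smooth tile is downgraded and the detailed tile keeps full-rate shading. A greedy pass like this is only a heuristic for what is in general a knapsack-style selection problem.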

References:

1. Tomas Akenine-Möller, Eric Haines, and Naty Hoffman. 2019. Real-time rendering. CRC Press.

2. AMD. 2021. AMD FidelityFX. https://www.amd.com/en/technologies/radeon-software-fidelityfx

3. Peter G. J. Barten. 2003. Formula for the contrast sensitivity of the human eye. In Image Quality and System Performance, Yoichi Miyake and D. Rene Rasmussen (Eds.), Vol. 5294. International Society for Optics and Photonics, SPIE, 231–238.

4. Mark R Bolin and Gary W Meyer. 1995. A frequency based ray tracer. In Proceedings of the 22nd annual conference on Computer graphics and interactive techniques. 409–418.

5. Kirsten Cater, Alan Chalmers, and Greg Ward. 2003. Detail to attention: exploiting visual tasks for selective rendering. In ACM International Conference Proceeding Series, Vol. 44. 270–280.

6. Alexandre Chapiro, Robin Atkins, and Scott Daly. 2019. A Luminance-aware Model of Judder Perception. ACM Transactions on Graphics (TOG) 38, 5 (2019), 1–10.

7. Roelof Roderick Colenbrander. 2021. Adaptive graphics for cloud gaming. US Patent App. 16/688,369.

8. Scott Daly, Ning Xu, James Crenshaw, and Vikrant J Zunjarrao. 2015. A psychophysical study exploring judder using fundamental signals and complex imagery. SMPTE Motion Imaging Journal 124, 7 (2015), 62–70.

9. James Davis, Yi-Hsuan Hsieh, and Hung-Chi Lee. 2015. Humans perceive flicker artifacts at 500 Hz. Scientific reports 5 (2015), 7861.

10. Kurt Debattista, Keith Bugeja, Sandro Spina, Thomas Bashford-Rogers, and Vedad Hulusic. 2018. Frame rate vs resolution: A subjective evaluation of spatiotemporal perceived quality under varying computational budgets. In Computer Graphics Forum, Vol. 37. Wiley Online Library, 363–374.

11. Gyorgy Denes, Akshay Jindal, Aliaksei Mikhailiuk, and Rafał K. Mantiuk. 2020. A perceptual model of motion quality for rendering with adaptive refresh-rate and resolution. ACM Transactions on Graphics 39, 4 (jul 2020), 133.

12. Michal Drobot. 2020. Software-based Variable Rate Shading in Call of Duty: Modern Warfare. In ACM SIGGRAPH 2020 Courses.

13. Xiao-Fan Feng. 2006. LCD motion blur analysis, perception, and reduction using synchronized backlight flashing. In Human Vision and Electronic Imaging XI. SPIE Vol. 6057, M1–14.

14. James A Ferwerda, Peter Shirley, Sumanta N Pattanaik, and Donald P Greenberg. 1997. A model of visual masking for computer graphics. In Proceedings of the 24th annual conference on Computer graphics and interactive techniques. 143–152.

15. Martin Fuchs, Tongbo Chen, Oliver Wang, Ramesh Raskar, Hans-Peter Seidel, and Hendrik P.A. Lensch. 2010. Real-time temporal shaping of high-speed video streams. Computers & Graphics 34, 5 (2010), 575–584.

16. Alexander Goettker, Kevin J. MacKenzie, and T. Scott Murdison. 2020. Differences between oculomotor and perceptual artifacts for temporally limited head mounted displays. Journal of the Society for Information Display 28, 6 (jun 2020), 509–519.

17. Gerben Johan Hekstra, Leo Jan Velthoven, and Michiel Adriaanszoon Klompenhouwer. 2008. Motion blur decrease in varying duty cycle. US Patent 7,317,445.

18. David M Hoffman, Vasiliy I Karasev, and Martin S Banks. 2011. Temporal presentation protocols in stereoscopic displays: Flicker visibility, perceived motion, and perceived depth. Journal of the Society for Information Display 19, 3 (2011), 271–297.

19. Intel. 2019. Use Variable Rate Shading (VRS) to Improve the User Experience in Real-Time Game Engines | SIGGRAPH 2019 Technical Sessions. https://www.slideshare.net/IntelSoftware/use-variable-rate-shading-vrs-to-improve-the-user-experience-in-real-time-game-engines

20. Adrian Jarabo, Tom Van Eyck, Veronica Sundstedt, Kavita Bala, Diego Gutierrez, and Carol O’Sullivan. 2012. Crowd light: Evaluating the perceived fidelity of illuminated dynamic scenes. In Computer Graphics Forum, Vol. 31. Wiley Online Library, 565–574.

21. Paul V Johnson, Joohwan Kim, David M Hoffman, Andy D Vargas, and Martin S Banks. 2014. Motion artifacts on 240-Hz OLED stereoscopic 3D displays. Journal of the Society for Information Display 22, 8 (2014), 393–403.

22. Kimmo P Jokinen and Wen Nivala. 2017. 65-4: novel methods for measuring VR/AR performance factors from OLED/LCD. In SID Symposium Digest of Technical Papers, Vol. 48. Wiley Online Library, 961–964.

23. Hans Kellerer, Ulrich Pferschy, and David Pisinger. 2004. Multidimensional Knapsack Problems. Springer Berlin Heidelberg, Berlin, Heidelberg, 235–283.

24. Manuel Kraemer. 2018. Accelerating your VR games with VRWorks. In NVIDIA's GPU Technology Conference (GTC).

25. Yoshihiko Kuroki, Tomohiro Nishi, Seiji Kobayashi, Hideki Oyaizu, and Shinichi Yoshimura. 2007. A psychophysical study of improvements in motion-image quality by using high frame rates. Journal of the Society for Information Display 15, 1 (2007), 61–68.

26. James Larimer, Jennifer Gille, and James Wong. 2001. 41.2: Judder-Induced Edge Flicker in Moving Objects. In SID Symposium Digest of Technical Papers, Vol. 32. Wiley Online Library, 1094–1097.

27. Chenglin Li, Laura Toni, Junni Zou, Hongkai Xiong, and Pascal Frossard. 2017. Delay-power-rate-distortion optimization of video representations for dynamic adaptive streaming. IEEE Transactions on Circuits and Systems for Video Technology 28, 7 (2017), 1648–1664.

28. Peter Longhurst, Kurt Debattista, and Alan Chalmers. 2006. A GPU based saliency map for high-fidelity selective rendering. In Proceedings of the 4th international conference on Computer graphics, virtual reality, visualisation and interaction in Africa. 21–29.

29. Alex Mackin, Katy C Noland, and David R Bull. 2016. The visibility of motion artifacts and their effect on motion quality. In 2016 IEEE International Conference on Image Processing (ICIP). IEEE, 2435–2439.

30. Rafał K. Mantiuk, Gyorgy Denes, Alexandre Chapiro, Anton Kaplanyan, Gizem Rufo, Romain Bachy, Trisha Lian, and Anjul Patney. 2021. FovVideoVDP: A visible difference predictor for wide field-of-view video. ACM Transactions on Graphics 40, 4 (2021), 49.

31. Aliaksei Mikhailiuk, Clifford Wilmot, Maria Perez-Ortiz, Dingcheng Yue, and Rafal Mantiuk. 2020. Active Sampling for Pairwise Comparisons via Approximate Message Passing and Information Gain Maximization. In 2020 IEEE International Conference on Pattern Recognition (ICPR).

32. Karol Myszkowski. 1998. The visible differences predictor: Applications to global illumination problems. In Eurographics Workshop on Rendering Techniques. Springer, 223–236.

33. Karol Myszkowski, Przemyslaw Rokita, and Takehiro Tawara. 1999. Perceptually-Informed Accelerated Rendering of High Quality Walkthrough Sequences. In Rendering Symposium. 5–18.

34. Fernando Navarro, Susana Castillo, Francisco J Serón, and Diego Gutierrez. 2011. Perceptual considerations for motion blur rendering. ACM Transactions on Applied Perception (TAP) 8, 3 (2011), 1–15.

35. NVIDIA. 2018. NVIDIA Turing GPU Architecture. https://www.nvidia.com/content/dam/en-zz/Solutions/design-visualization/technologies/turing-architecture/NVIDIA-Turing-Architecture-Whitepaper.pdf

36. A. Ortega and K. Ramchandran. 1998. Rate-distortion methods for image and video compression. IEEE Signal Processing Magazine 15, 6 (1998), 23–50.

37. Maria Perez-Ortiz and Rafal K. Mantiuk. 2017. A practical guide and software for analysing pairwise comparison experiments. arXiv preprint (dec 2017). arXiv:1712.03686 http://arxiv.org/abs/1712.03686

38. Daniel Pohl, Timo Bolkart, Stefan Nickels, and Oliver Grau. 2015. Using astigmatism in wide angle HMDs to improve rendering. In 2015 IEEE Virtual Reality (VR). 263–264.

39. Qualcomm. 2021. Qualcomm Snapdragon 888 levels up mobile gaming. https://www.qualcomm.com/news/onq/2020/12/02/qualcomm-snapdragon-888-levels-mobile-gaming

40. Mark Rejhon. 2017. Strobe Crosstalk: Blur Reduction Double-Images. https://blurbusters.com/faq/advanced-strobe-crosstalk-faq/

41. JE Roberts and AJ Wilkins. 2013. Flicker can be perceived during saccades at frequencies in excess of 1 kHz. Lighting Research & Technology 45, 1 (2013), 124–132.

42. Jan Schmid, Y. Uludag, and J. Deligiannis. 2019. It just works: Raytraced reflections in “Battlefield V”. In GPU Technology Conference.

43. T. Scott Murdison, Christopher McIntosh, James Hillis, and Kevin J. MacKenzie. 2019. 3-1: Psychophysical Evaluation of Persistence- and Frequency-Limited Displays for Virtual and Augmented Reality. SID Symposium Digest of Technical Papers 50, 1 (2019), 1–4. https://onlinelibrary.wiley.com/doi/pdf/10.1002/sdtp.12840

44. BT Series. 2020. The present state of ultra-high definition television. https://www.itu.int/pub/R-REP-BT.2246-7-2020

45. AAS Sluyterman. 2006. What is needed in LCD panels to achieve CRT-like motion portrayal? Journal of the Society for Information Display 14, 8 (2006), 681–686.

46. M. Stengel, P. Bauszat, M. Eisemann, E. Eisemann, and M. Magnor. 2015. Temporal Video Filtering and Exposure Control for Perceptual Motion Blur. IEEE Transactions on Visualization and Computer Graphics 21, 2 (2015), 1–11.

47. K. Sung, A. Pearce, and C. Wang. 2002. Spatial-Temporal Antialiasing. IEEE Transactions on Visualization and Computer Graphics 8, 2 (2002), 144–153.

48. Okan Tarhan Tursun, Elena Arabadzhiyska, Marek Wernikowski, Radosław Mantiuk, Hans-Peter Seidel, Karol Myszkowski, and Piotr Didyk. 2019. Luminance-Contrast-Aware Foveated Rendering. ACM Transactions on Graphics (Proc. ACM SIGGRAPH) 38, 4 (2019).

49. Unity. 2021. Unity – Manual: Dynamic resolution. https://docs.unity3d.com/Manual/DynamicResolution.html

50. Unreal. 2021. https://docs.unrealengine.com/en-US/RenderingAndGraphics/DynamicResolution/index.html

51. Karthik Vaidyanathan, Marco Salvi, Robert Toth, Tim Foley, Tomas Akenine-Möller, Jim Nilsson, Jacob Munkberg, Jon Hasselgren, Masamichi Sugihara, Petrik Clarberg, et al. 2014. Coarse pixel shading. In Proceedings of High Performance Graphics. 9–18.

52. Karthik Vaidyanathan, Robert Toth, Marco Salvi, Solomon Boulos, and Aaron E Lefohn. 2012. Adaptive Image Space Shading for Motion and Defocus Blur. In High Performance Graphics. 13–21.

53. Tom Verbeure, Gerrit A Slavenburg, Thomas F Fox, Robert Jan Schutten, Luis Mariano Lucas, and Marcel Dominicus Janssens. 2017. System, method, and computer program product for combining low motion blur and variable refresh rate in a display. US Patent 9,773,460.

54. Andrew B Watson. 2013. High frame rates and human vision: A view through the window of visibility. SMPTE Motion Imaging Journal 122, 2 (2013), 18–32.

55. Andrew B Watson and Albert J Ahumada. 2011. Blur clarified: A review and synthesis of blur discrimination. Journal of Vision 11, 5 (2011), 10–10.

56. Martin Weier, Michael Stengel, Thorsten Roth, Piotr Didyk, Elmar Eisemann, Martin Eisemann, Steve Grogorick, André Hinkenjann, Ernst Kruijff, Marcus Magnor, et al. 2017. Perception-driven accelerated rendering. In Computer Graphics Forum, Vol. 36. Wiley Online Library, 611–643.

57. Laurie M Wilcox, Robert S Allison, John Helliker, Bert Dunk, and Roy C Anthony. 2015. Evidence that viewers prefer higher frame-rate film. ACM Transactions on Applied Perception (TAP) 12, 4 (2015), 1–12.

58. Lance Williams. 1983. Pyramidal parametrics. In Proceedings of the 10th annual conference on Computer graphics and interactive techniques. 1–11.

59. Himanshu Yadav and B Annappa. 2017. Adaptive GPU resource scheduling on virtualized servers in cloud gaming. In 2017 Conference on Information and Communication Technology (CICT). IEEE, 1–6.

60. Lei Yang, Dmitry Zhdan, Emmett Kilgariff, Eric B Lum, Yubo Zhang, Matthew Johnson, and Henrik Rydgård. 2019. Visually Lossless Content and Motion Adaptive Shading in Games. Proceedings of the ACM on Computer Graphics and Interactive Techniques 2, 1 (2019), 1–19.

61. Hector Yee, Sumanta Pattanaik, and Donald P. Greenberg. 2001. Spatiotemporal Sensitivity and Visual Attention for Efficient Rendering of Dynamic Environments. ACM Trans. Graph. 20, 1 (2001), 39–65.