“Optimized local blendshape mapping for facial motion retargeting” by Ma, Fyffe and Debevec

Conference:

Title:

- Optimized local blendshape mapping for facial motion retargeting

Session/Category Title: Heads or tails

Presenter(s)/Author(s):

Abstract:

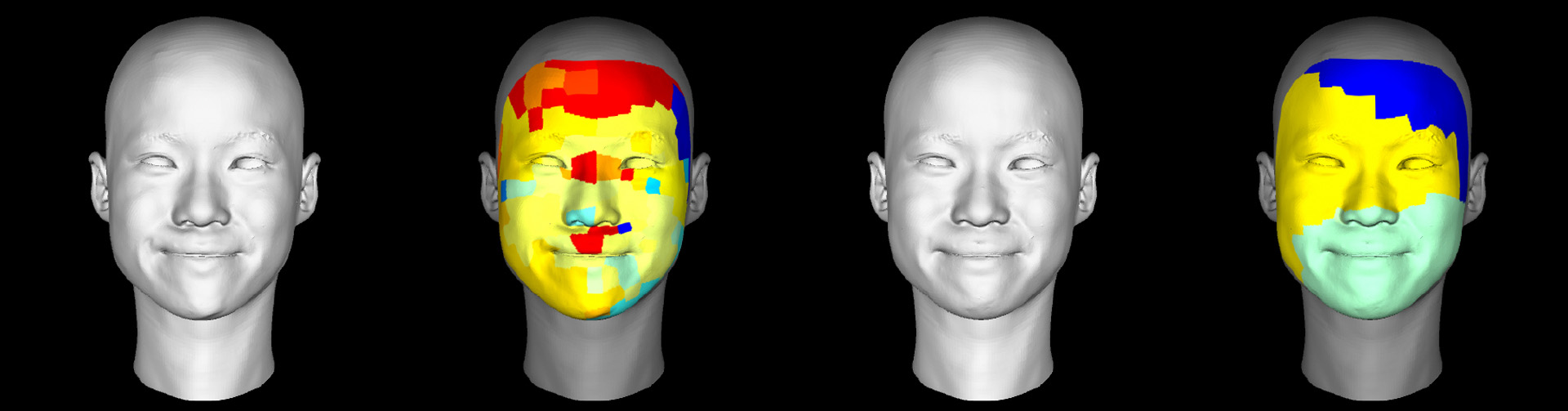

One popular method for facial motion retargeting is local blendshape mapping [Pighin and Lewis 2006], in which each local facial region is controlled by a tracked feature (for example, a vertex in motion capture data). To map a target motion input onto blendshapes, the pose set with minimal retargeting error is chosen for each facial region. However, because the best pose set for each region is chosen independently, the solution is likely to have unorganized pose sets across the face regions, as shown in Figure 1(b). Therefore, even though every pose set matches its local features, the retargeting result is not guaranteed to be spatially smooth. In addition, previous methods ignored temporal coherence, which is key to jitter-free results.
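The independent per-region selection described above can be illustrated with a minimal sketch. It is not the authors' formulation: the region names, the pose names, the 2D feature positions, and the error measure (the residual of the best convex blend of two poses) are all assumptions made for illustration.

```python
from itertools import combinations


def blend_error(feature, pose_a, pose_b):
    """Residual distance of the best convex blend of two poses (assumed error measure)."""
    ab = [b - a for a, b in zip(pose_a, pose_b)]
    af = [f - a for a, f in zip(pose_a, feature)]
    denom = sum(c * c for c in ab)
    # Blend weight t is clamped to [0, 1]; a degenerate pair falls back to pose_a.
    t = 0.0 if denom == 0 else max(0.0, min(1.0, sum(x * y for x, y in zip(af, ab)) / denom))
    blended = [a + t * c for a, c in zip(pose_a, ab)]
    return sum((f - p) ** 2 for f, p in zip(feature, blended)) ** 0.5


def choose_pose_sets(features, region_poses):
    """Pick the minimal-error pose pair for each region, ignoring all other regions."""
    chosen = {}
    for region, feature in features.items():
        poses = region_poses[region]  # pose name -> feature position in that pose
        chosen[region] = min(
            combinations(sorted(poses), 2),
            key=lambda pair: blend_error(feature, poses[pair[0]], poses[pair[1]]),
        )
    return chosen


# Hypothetical data: two neighboring regions sharing the same three poses.
poses = {"neutral": (0.0, 0.0), "smile": (1.0, 0.0), "pucker": (0.0, 1.0)}
region_poses = {"left_cheek": poses, "right_cheek": poses}
features = {"left_cheek": (0.5, 0.0), "right_cheek": (0.0, 0.5)}

chosen = choose_pose_sets(features, region_poses)
print(chosen)
# {'left_cheek': ('neutral', 'smile'), 'right_cheek': ('neutral', 'pucker')}
```

Each region matches its own feature exactly, yet the two neighboring regions end up with different pose sets. Nothing in the selection ties one region's choice to its neighbor's, or one frame's choice to the next, which is the lack of spatial and temporal coherence the abstract points out.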

References:

1. Pighin, F., and Lewis, J. P. 2006. Facial motion retargeting. In SIGGRAPH Courses.

2. Yedidia, J., Freeman, W., and Weiss, Y. 2003. Understanding Belief Propagation and Its Generalizations. ch. 8, 239–269.