“Neural Bounding”

Title:

- Neural Bounding

Abstract:

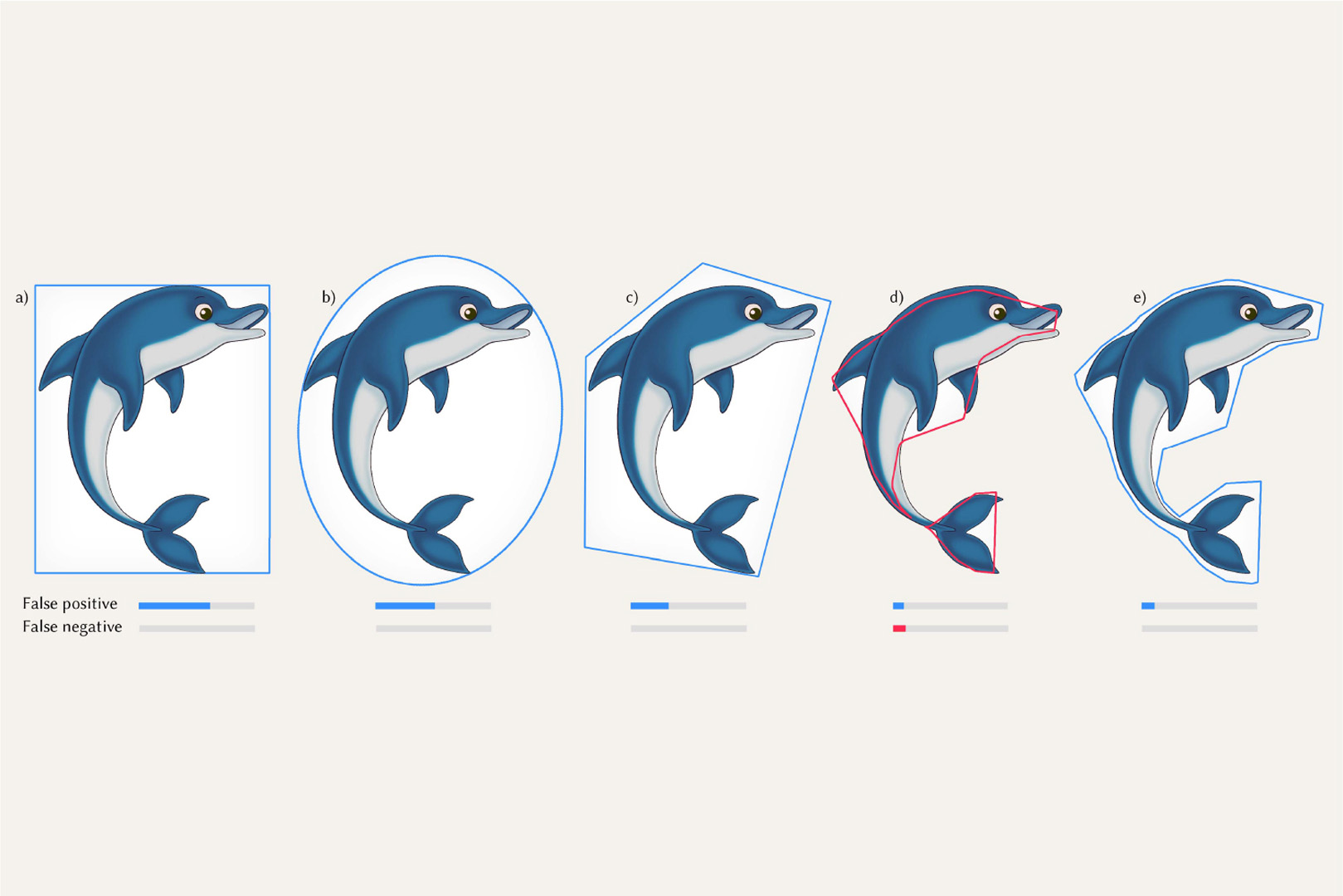

We introduce a neural approach to bounding volumes that conservatively classifies space across diverse dimensions and scenes. The key is a novel loss function that keeps false negatives to a minimum. Our method also extends to non-neural and hybrid representations. In addition, we propose an early-exit strategy that speeds up queries by 25%.
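To illustrate what a loss that discourages false negatives can look like, here is a minimal sketch. It assumes a plain binary cross-entropy in which missed occupied points are up-weighted; this is a stand-in for illustration, not the loss proposed in the work. The names `asymmetric_bce`, `count_false_negatives`, and the `fn_weight` value are hypothetical.

```python
import math


def asymmetric_bce(probs, labels, fn_weight=100.0, eps=1e-7):
    """Mean binary cross-entropy with false negatives up-weighted.

    probs     -- predicted probability that each query point is occupied
    labels    -- 1 for occupied, 0 for free
    fn_weight -- hypothetical multiplier on the penalty for missing an
                 occupied point (an assumption, not a value from the source)
    """
    total = 0.0
    for p, y in zip(probs, labels):
        # Clamp to avoid log(0).
        p = min(max(p, eps), 1.0 - eps)
        # The occupied term is scaled by fn_weight; the free term is not.
        total += -(fn_weight * y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(probs)


def count_false_negatives(probs, labels, threshold=0.5):
    """Count occupied points that the classifier would report as free."""
    return sum(1 for p, y in zip(probs, labels) if y == 1 and p < threshold)


if __name__ == "__main__":
    # A missed occupied point costs far more than a false alarm of the
    # same confidence, which pushes the learned bound to be conservative.
    print(asymmetric_bce([0.1], [1]))  # confident miss
    print(asymmetric_bce([0.9], [0]))  # confident false alarm
```

With a large `fn_weight`, the optimizer prefers to over-cover the shape rather than leave occupied points outside the bound. A weighted loss alone only discourages false negatives; it does not guarantee their absence.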
