“Near-gaze Visualisations of Empathic Communication Cues in Mixed Reality Collaboration” by Jing, Gupta, McDade, Lee and Billinghurst

Entry Number:

- 29

Abstract:

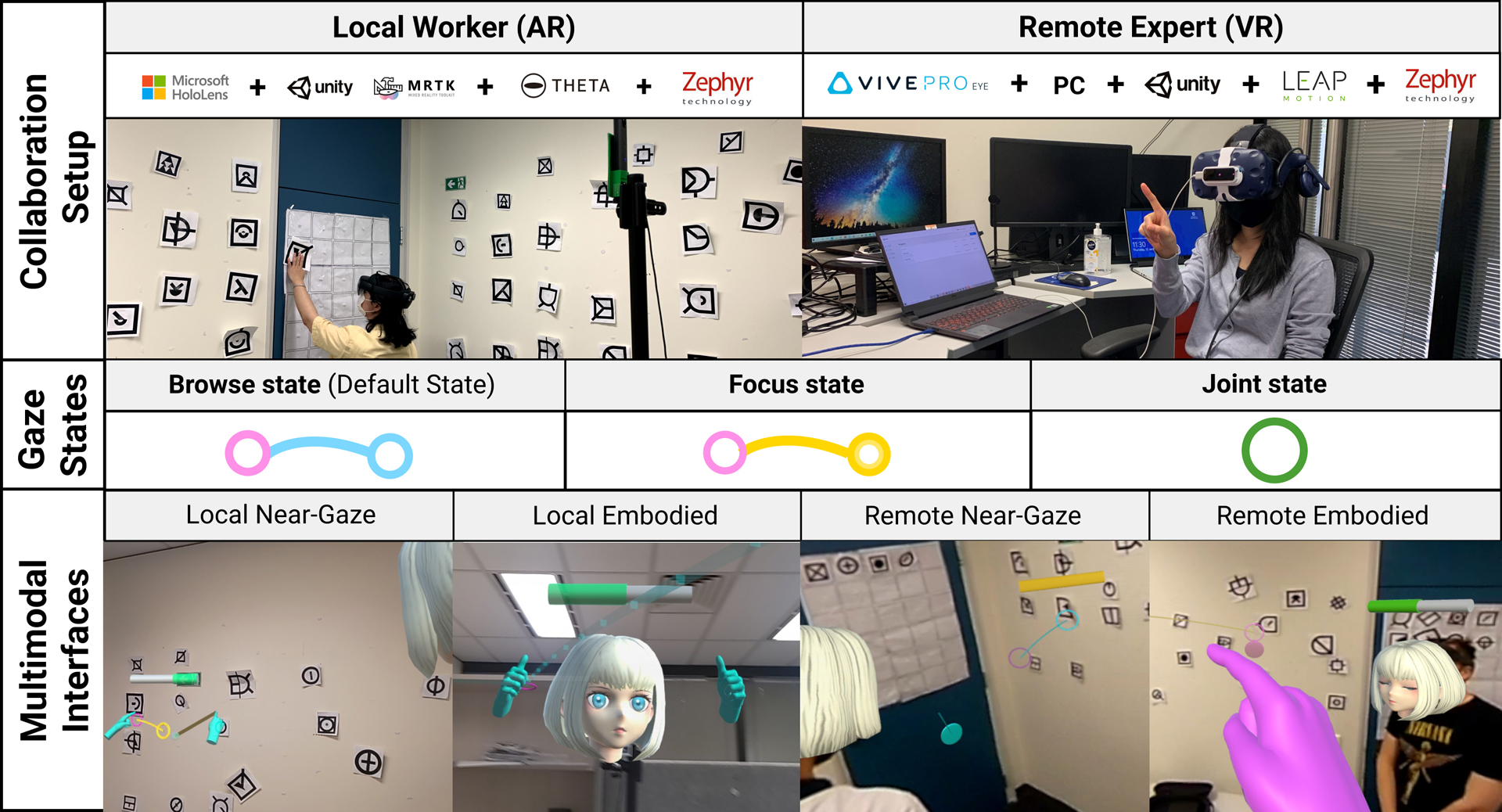

In this poster, we present a live 360° panoramic-video-based empathic Mixed Reality (MR) collaboration system that shares various near-gaze non-verbal communication cues in real time, including gaze, hand pointing, gesturing, and heart-rate visualisations. Preliminary results indicate that an interface that visualises the partner's communication cues close to the user's gaze point lets users stay focused without dividing their attention toward the collaborator's physical body movements, while still communicating effectively. Shared gaze visualisations coupled with deictic language are primarily used to affirm joint attention and mutual understanding, while hand pointing and gesturing serve as secondary cues. Our approach offers a new way to enable effective remote collaboration through varied empathic communication visualisations and modalities that cover different task properties and spatial setups.
