“MyStyle: A Personalized Generative Prior” by Nitzan, Aberman, He, Liba, Yarom, et al. …

Title:

- MyStyle: A Personalized Generative Prior

Session/Category Title: Stylization and Colorization

Abstract:

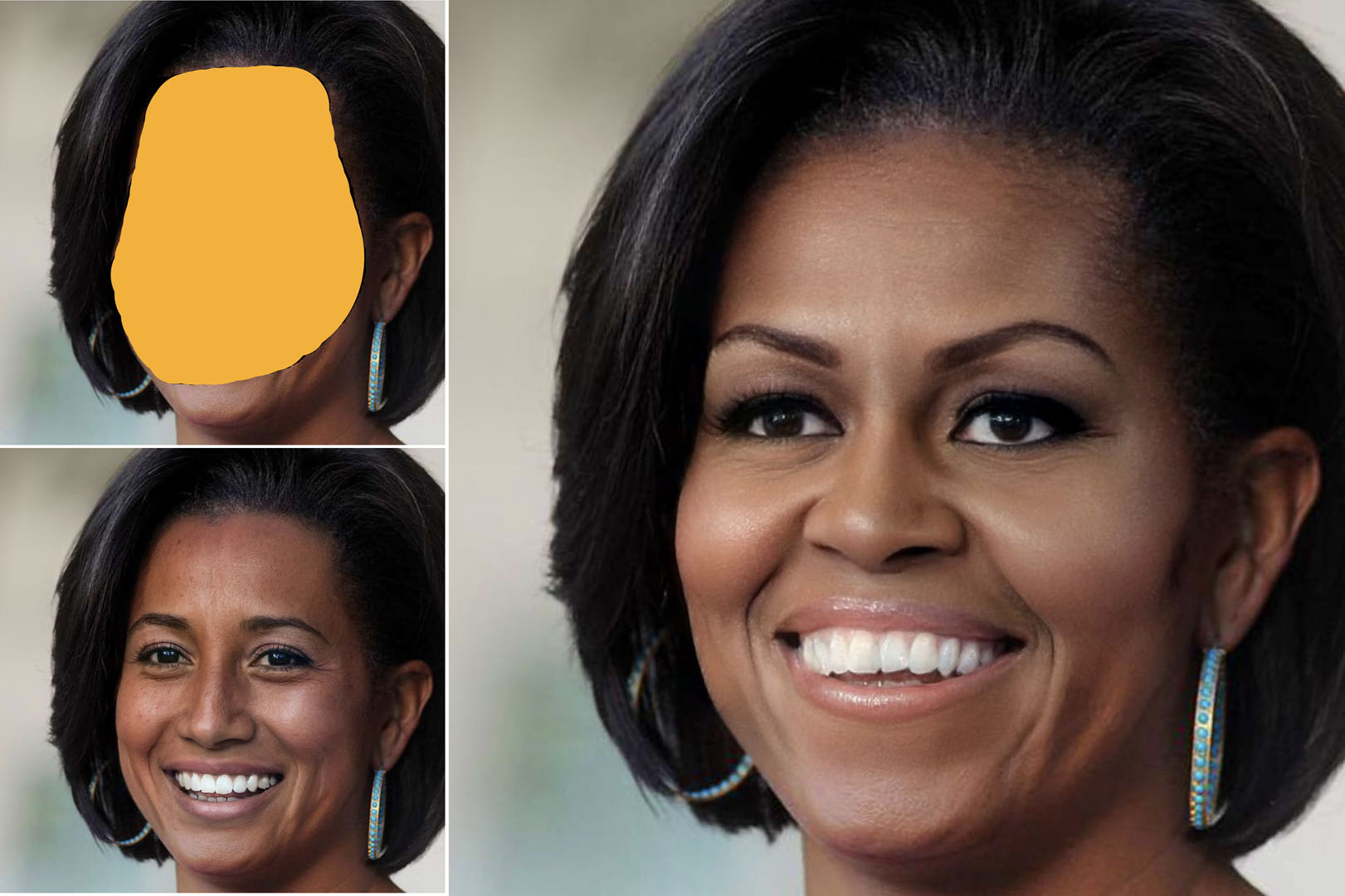

We introduce MyStyle, a personalized deep generative prior trained with a few shots of an individual. MyStyle makes it possible to reconstruct, enhance, and edit images of a specific person such that the output is faithful to the person’s key facial characteristics. Given a small reference set of portrait images of a person (~100), we tune the weights of a pretrained StyleGAN face generator to form a local, low-dimensional, personalized manifold in the latent space. We show that this manifold constitutes a personalized region that spans latent codes associated with diverse portrait images of the individual. Moreover, we demonstrate that we obtain a personalized generative prior, and propose a unified approach to apply it to various ill-posed image enhancement problems, such as inpainting and super-resolution, as well as semantic editing. Using the personalized generative prior, we obtain outputs that exhibit high fidelity to the input images and are also faithful to the key facial characteristics of the individual in the reference set. We demonstrate our method with fair-use images of numerous widely recognizable individuals for whom we have the prior knowledge for a qualitative evaluation of the expected outcome. We evaluate our approach against few-shot baselines and show that our personalized prior, quantitatively and qualitatively, outperforms state-of-the-art alternatives.
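
One way to picture a "personalized region that spans latent codes" is as the set of convex combinations of the latent codes of the reference images. The sketch below is only an illustration under that assumption, which the abstract itself does not specify. The 512-dimensional codes, the randomly generated anchors, and the function name `sample_personalized_codes` are hypothetical stand-ins, and no StyleGAN generator or weight tuning is involved.

```python
import numpy as np


def sample_personalized_codes(anchors, n_samples, rng):
    """Draw latent codes as random convex combinations of anchor codes.

    anchors: array of shape (n_anchors, dim), one hypothetical latent code
             per reference image.
    Returns (codes, weights) with shapes (n_samples, dim) and
    (n_samples, n_anchors).
    """
    # Dirichlet samples are non-negative and sum to 1 along the last axis,
    # so each row is a valid set of convex-combination weights.
    weights = rng.dirichlet(np.ones(len(anchors)), size=n_samples)
    codes = weights @ anchors
    return codes, weights


rng = np.random.default_rng(0)
# Assumption: ~100 reference images, each mapped to a 512-D latent code.
anchors = rng.normal(size=(100, 512))
codes, weights = sample_personalized_codes(anchors, n_samples=8, rng=rng)
print(codes.shape, weights.shape)
```

Because every sampled code is a weighted average of the anchors, it stays inside the region the anchors span. In a real pipeline the anchors would come from inverting the reference portraits, and the sampled codes would be passed to the tuned generator.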
