“MyStyle++: A Controllable Personalized Generative Prior” by Zeng, Chen, Xu and Kalantari

Conference:

Type(s):

Title:

- MyStyle++: A Controllable Personalized Generative Prior

Session/Category Title:

- Personalized Generative Models

Presenter(s)/Author(s):

Abstract:

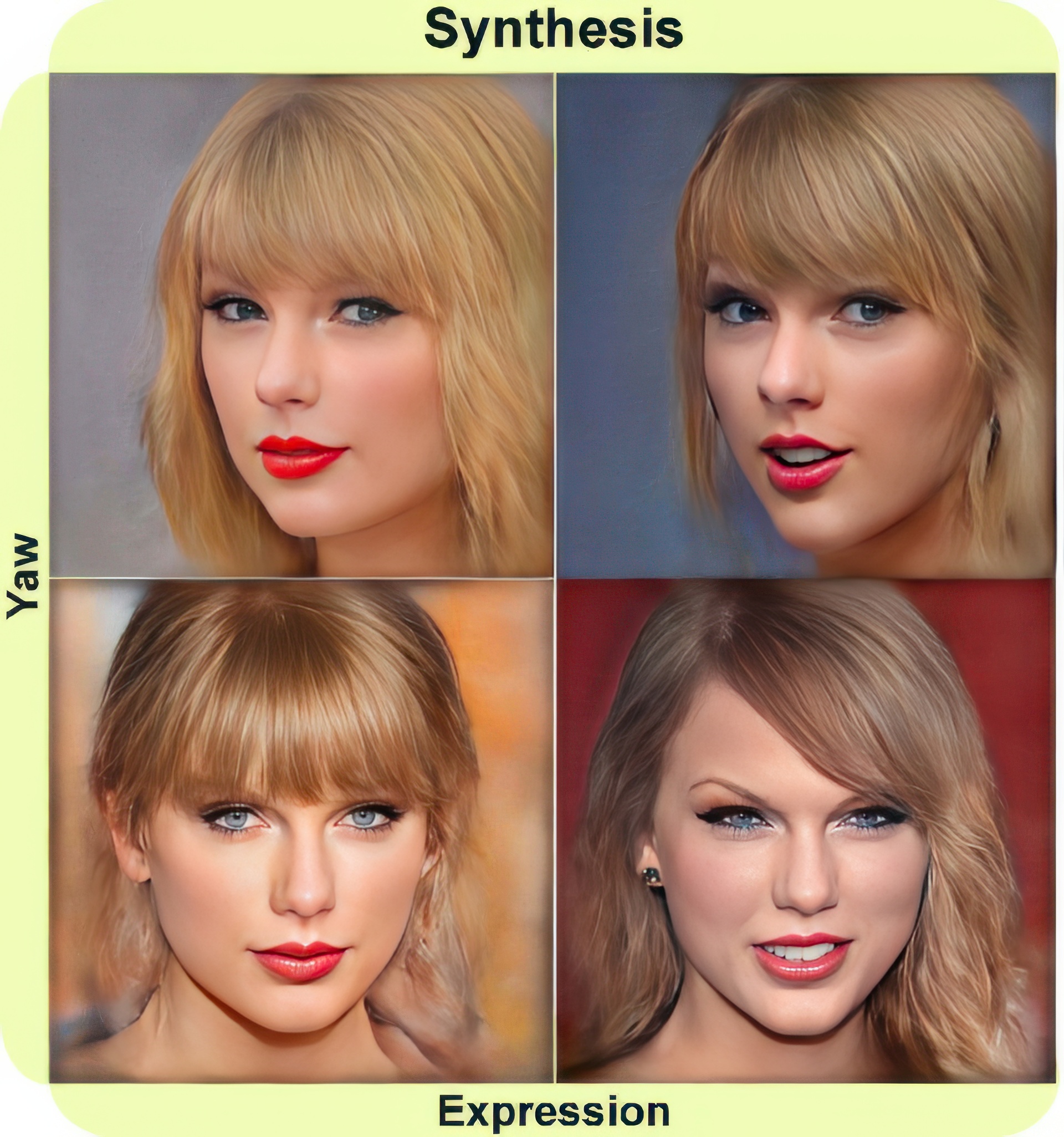

In this paper, we propose an approach to obtain a personalized generative prior with explicit control over a set of attributes. We build upon MyStyle, a recently introduced method, that tunes the weights of a pre-trained StyleGAN face generator on a few images of an individual. This system allows synthesizing, editing, and enhancing images of the target individual with high fidelity to their facial features. However, it relies on the latent space of StyleGAN, and thus inherits its limitations in controllability. We propose to address this problem through a novel optimization system that organizes the latent space in addition to tuning the generator. Our key contribution is to formulate a loss that arranges the latent codes, corresponding to the input images, along a set of specific directions according to their attribute. We demonstrate that our approach, dubbed MyStyle++, is able to synthesize, edit, and enhance images of an individual with great control over the attributes, while preserving the unique facial characteristics of that individual.

References:

[1]

Rameen Abdal, Peihao Zhu, Niloy J Mitra, and Peter Wonka. 2021. Styleflow: Attribute-conditioned exploration of stylegan-generated images using conditional continuous normalizing flows. ACM Transactions on Graphics (ToG) 40, 3 (2021), 1–21.

[2]

Amazon. 2023. AWS Rekognition. https://aws.amazon.com/rekognition/.

[3]

Alexander W. Bergman, Petr Kellnhofer, Wang Yifan, Eric R. Chan, David B. Lindell, and Gordon Wetzstein. 2022. Generative Neural Articulated Radiance Fields. In NeurIPS.

[4]

Volker Blanz and Thomas Vetter. 1999. A morphable model for the synthesis of 3D faces. In Proceedings of the 26th annual conference on Computer graphics and interactive techniques. 187–194.

[5]

Mallikarjun BR, Ayush Tewari, Abdallah Dib, Tim Weyrich, Bernd Bickel, Hans-Peter Seidel, Hanspeter Pfister, Wojciech Matusik, Louis Chevallier, Mohamed Elgharib, 2021. Photoapp: Photorealistic appearance editing of head portraits. ACM Transactions on Graphics 40, 4 (2021), 1–16.

[6]

Andrew Brock, Jeff Donahue, and Karen Simonyan. 2019. Large Scale GAN Training for High Fidelity Natural Image Synthesis. In International Conference on Learning Representations. https://openreview.net/forum?id=B1xsqj09Fm

[7]

Eric R. Chan, Connor Z. Lin, Matthew A. Chan, Koki Nagano, Boxiao Pan, Shalini De Mello, Orazio Gallo, Leonidas Guibas, Jonathan Tremblay, Sameh Khamis, Tero Karras, and Gordon Wetzstein. 2021. Efficient Geometry-aware 3D Generative Adversarial Networks. In IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR).

[8]

Lele Chen, Chen Cao, Fernando De la Torre, Jason Saragih, Chenliang Xu, and Yaser Sheikh. 2021. High-fidelity face tracking for AR/VR via deep lighting adaptation. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. 13059–13069.

[9]

Yu Deng, Jiaolong Yang, Jianfeng Xiang, and Xin Tong. 2022. GRAM: Generative Radiance Manifolds for 3D-Aware Image Generation. In IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR).

[10]

Rinon Gal, Or Patashnik, Haggai Maron, Amit H Bermano, Gal Chechik, and Daniel Cohen-Or. 2022. Stylegan-nada: Clip-guided domain adaptation of image generators. ACM Transactions on Graphics (TOG) 41, 4 (2022), 1–13.

[11]

Ian Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville, and Yoshua Bengio. 2014. Generative Adversarial Nets. In Advances in Neural Information Processing Systems, Z. Ghahramani, M. Welling, C. Cortes, N. Lawrence, and K.Q. Weinberger (Eds.). Vol. 27. Curran Associates, Inc.https://proceedings.neurips.cc/paper/2014/file/5ca3e9b122f61f8f06494c97b1afccf3-Paper.pdf

[12]

Erik Härkönen, Aaron Hertzmann, Jaakko Lehtinen, and Sylvain Paris. 2020. Ganspace: Discovering interpretable gan controls. Advances in Neural Information Processing Systems 33 (2020), 9841–9850.

[13]

Xinya Ji, Hang Zhou, Kaisiyuan Wang, Qianyi Wu, Wayne Wu, Feng Xu, and Xun Cao. 2022. EAMM: One-Shot Emotional Talking Face via Audio-Based Emotion-Aware Motion Model. In ACM SIGGRAPH 2022 Conference Proceedings (Vancouver, BC, Canada) (SIGGRAPH ’22). Association for Computing Machinery, New York, NY, USA, Article 61, 10 pages. https://doi.org/10.1145/3528233.3530745

[14]

Tero Karras, Timo Aila, Samuli Laine, and Jaakko Lehtinen. 2018. Progressive Growing of GANs for Improved Quality, Stability, and Variation. In International Conference on Learning Representations. https://openreview.net/forum?id=Hk99zCeAb

[15]

Tero Karras, Miika Aittala, Samuli Laine, Erik Härkönen, Janne Hellsten, Jaakko Lehtinen, and Timo Aila. 2021. Alias-free generative adversarial networks. Advances in Neural Information Processing Systems 34 (2021), 852–863.

[16]

Tero Karras, Samuli Laine, and Timo Aila. 2019. A style-based generator architecture for generative adversarial networks. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. 4401–4410.

[17]

Tero Karras, Samuli Laine, Miika Aittala, Janne Hellsten, Jaakko Lehtinen, and Timo Aila. 2020. Analyzing and improving the image quality of stylegan. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. 8110–8119.

[18]

Diederik Kingma and Jimmy Ba. 2015. Adam: A method for stochastic optimization. In International Conference on Learning Representations (ICLR).

[19]

Ming-Yu Liu, Xun Huang, Arun Mallya, Tero Karras, Timo Aila, Jaakko Lehtinen, and Jan Kautz. 2019. Few-shot unsupervised image-to-image translation. In Proceedings of the IEEE/CVF international conference on computer vision. 10551–10560.

[20]

Yotam Nitzan, Kfir Aberman, Qiurui He, Orly Liba, Michal Yarom, Yossi Gandelsman, Inbar Mosseri, Yael Pritch, and Daniel Cohen-Or. 2022. MyStyle: A Personalized Generative Prior. arXiv preprint arXiv:2203.17272 (2022).

[21]

Utkarsh Ojha, Yijun Li, Jingwan Lu, Alexei A Efros, Yong Jae Lee, Eli Shechtman, and Richard Zhang. 2021. Few-shot image generation via cross-domain correspondence. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 10743–10752.

[22]

Or Patashnik, Zongze Wu, Eli Shechtman, Daniel Cohen-Or, and Dani Lischinski. 2021. Styleclip: Text-driven manipulation of stylegan imagery. In Proceedings of the IEEE/CVF International Conference on Computer Vision. 2085–2094.

[23]

Elad Richardson, Yuval Alaluf, Or Patashnik, Yotam Nitzan, Yaniv Azar, Stav Shapiro, and Daniel Cohen-Or. 2021. Encoding in Style: a StyleGAN Encoder for Image-to-Image Translation. In IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR).

[24]

Daniel Roich, Ron Mokady, Amit H. Bermano, and Daniel Cohen-Or. 2022. Pivotal Tuning for Latent-Based Editing of Real Images. ACM Trans. Graph. 42, 1, Article 6 (aug 2022), 13 pages. https://doi.org/10.1145/3544777

[25]

Rasmus Rothe, Radu Timofte, and Luc Van Gool. 2018. Deep Expectation of Real and Apparent Age from a Single Image Without Facial Landmarks. International Journal of Computer Vision 126 (2018), 144–157.

[26]

Yujun Shen, Ceyuan Yang, Xiaoou Tang, and Bolei Zhou. 2020. InterFaceGAN: Interpreting the Disentangled Face Representation Learned by GANs. TPAMI (2020).

[27]

Alon Shoshan, Nadav Bhonker, Igor Kviatkovsky, and Gerard Medioni. 2021. Gan-control: Explicitly controllable gans. In Proceedings of the IEEE/CVF International Conference on Computer Vision. 14083–14093.

[28]

Enis Simsar, Alessio Tonioni, Evin Pınar Örnek, and Federico Tombari. 2022. LatentSwap3D: Semantic Edits on 3D Image GANs. arXiv. https://doi.org/10.48550/ARXIV.2212.01381

[29]

Yasheng Sun, Hang Zhou, Kaisiyuan Wang, Qianyi Wu, Zhibin Hong, Jingtuo Liu, Errui Ding, Jingdong Wang, Ziwei Liu, and Koike Hideki. 2022. Masked Lip-Sync Prediction by Audio-Visual Contextual Exploitation in Transformers. In SIGGRAPH Asia 2022 Conference Papers. 1–9.

[30]

Ayush Tewari, Mohamed Elgharib, Florian Bernard, Hans-Peter Seidel, Patrick Pérez, Michael Zollhöfer, and Christian Theobalt. 2020a. Pie: Portrait image embedding for semantic control. ACM Transactions on Graphics (TOG) 39, 6 (2020), 1–14.

[31]

Ayush Tewari, Mohamed Elgharib, Gaurav Bharaj, Florian Bernard, Hans-Peter Seidel, Patrick Pérez, Michael Zollhofer, and Christian Theobalt. 2020b. Stylerig: Rigging stylegan for 3d control over portrait images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 6142–6151.

[32]

Omer Tov, Yuval Alaluf, Yotam Nitzan, Or Patashnik, and Daniel Cohen-Or. 2021. Designing an encoder for stylegan image manipulation. ACM Transactions on Graphics (TOG) 40, 4 (2021), 1–14.

[33]

Sheng-Yu Wang, David Bau, and Jun-Yan Zhu. 2021a. Sketch your own gan. In Proceedings of the IEEE/CVF International Conference on Computer Vision. 14050–14060.

[34]

Tengfei Wang, Yong Zhang, Yanbo Fan, Jue Wang, and Qifeng Chen. 2022. High-fidelity gan inversion for image attribute editing. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 11379–11388.

[35]

Ting-Chun Wang, Ming-Yu Liu, Andrew Tao, Guilin Liu, Jan Kautz, and Bryan Catanzaro. 2019. Few-shot Video-to-Video Synthesis. In Advances in Neural Information Processing Systems (NeurIPS).

[36]

Ting-Chun Wang, Arun Mallya, and Ming-Yu Liu. 2021b. One-shot free-view neural talking-head synthesis for video conferencing. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. 10039–10049.

[37]

Zongze Wu, Dani Lischinski, and Eli Shechtman. 2021. Stylespace analysis: Disentangled controls for stylegan image generation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 12863–12872.

[38]

Egor Zakharov, Aliaksandra Shysheya, Egor Burkov, and Victor Lempitsky. 2019. Few-shot adversarial learning of realistic neural talking head models. In Proceedings of the IEEE/CVF international conference on computer vision. 9459–9468.

[39]

Richard Zhang, Phillip Isola, Alexei A Efros, Eli Shechtman, and Oliver Wang. 2018. The Unreasonable Effectiveness of Deep Features as a Perceptual Metric. In IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR).

[40]

Hang Zhou, Yu Liu, Ziwei Liu, Ping Luo, and Xiaogang Wang. 2019. Talking face generation by adversarially disentangled audio-visual representation. In Proceedings of the AAAI conference on artificial intelligence, Vol. 33. 9299–9306.

[41]

Jun-Yan Zhu, Taesung Park, Phillip Isola, and Alexei A Efros. 2017. Unpaired image-to-image translation using cycle-consistent adversarial networks. In Proceedings of the IEEE international conference on computer vision. 2223–2232.