“Mononizing binocular videos” by Hu, Xia, Fu and Wong

Conference:

Type(s):

Title:

- Mononizing binocular videos

Session/Category Title:

- Learning New Viewpoints

Presenter(s)/Author(s):

Abstract:

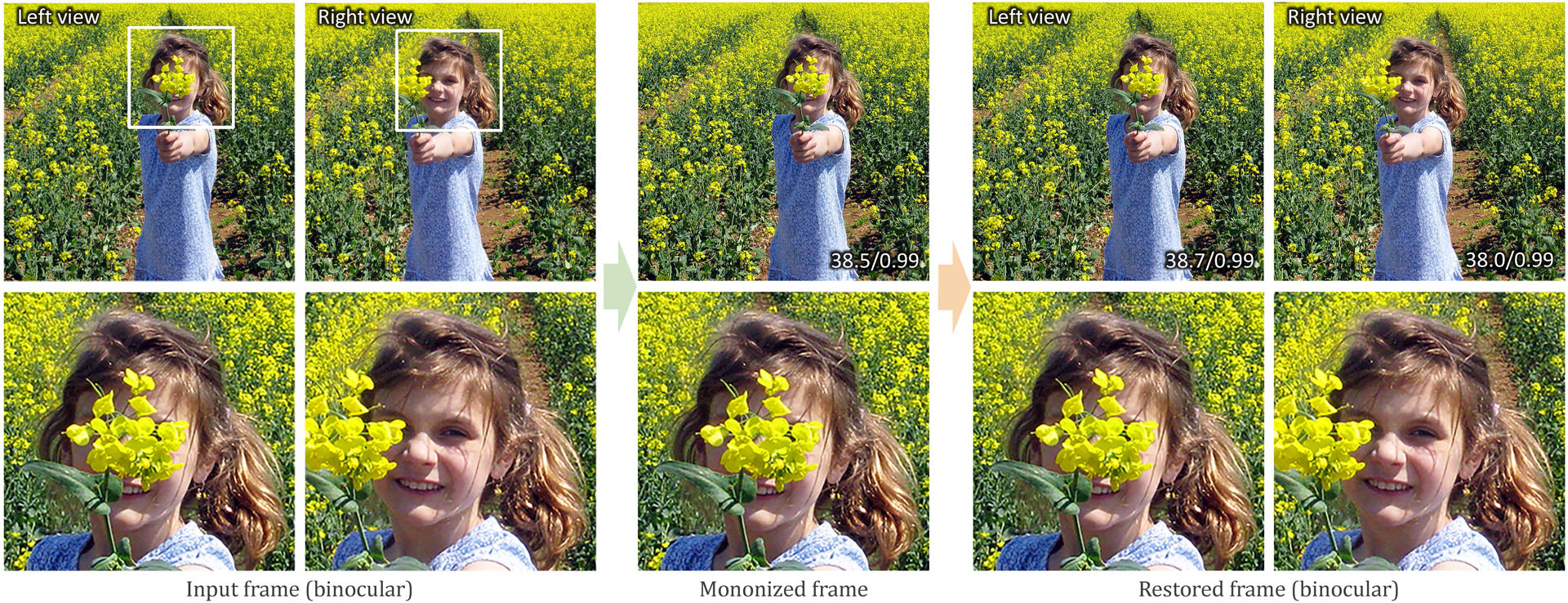

This paper presents the idea of mono-nizing binocular videos and a framework to effectively realize it. Mono-nize means we purposely convert a binocular video into a regular monocular video with the stereo information implicitly encoded in a visual but nearly-imperceptible form. Hence, we can impartially distribute and show the mononized video as an ordinary monocular video. Unlike ordinary monocular videos, we can restore from it the original binocular video and show it on a stereoscopic display. To start, we formulate an encoding-and-decoding framework with the pyramidal deformable fusion module to exploit long-range correspondences between the left and right views, a quantization layer to suppress the restoring artifacts, and the compression noise simulation module to resist the compression noise introduced by modern video codecs. Our framework is self-supervised, as we articulate our objective function with loss terms defined on the input: a monocular term for creating the mononized video, an invertibility term for restoring the original video, and a temporal term for frame-to-frame coherence. Further, we conducted extensive experiments to evaluate our generated mononized videos and restored binocular videos for diverse types of images and 3D movies. Quantitative results on both standard metrics and user perception studies show the effectiveness of our method.

References:

1. Eirikur Agustsson, Fabian Mentzer, Michael Tschannen, Lukas Cavigelli, Radu Timofte, Luca Benini, and Luc V. Gool. 2017. Soft-to-hard vector quantization for end-to-end learning compressible representations. In Advances in Neural Information Processing Systems.Google Scholar

2. Amir Atapour-Abarghouei and Toby P. Breckon. 2018. Real-time monocular depth estimation using synthetic data with domain adaptation via image style transfer. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR).Google Scholar

3. Johannes Ballé, Valero Laparra, and Eero P. Simoncelli. 2017. End-to-end Optimized Image Compression. In International Conference on Learning Representations (ICLR).Google Scholar

4. Shumeet Baluja. 2017. Hiding images in plain sight: Deep steganography. In Advances in Neural Information Processing Systems.Google Scholar

5. Kiana Calagari, Mohamed Elgharib, Piotr Didyk, Alexandre Kaspar, Wojciech Matusik, and Mohamed Hefeeda. 2017. Data Driven 2-D-To-3-D Video Conversion for Soccer. IEEE Transactions on Multimedia 20, 3 (2017), 605–619.Google ScholarDigital Library

6. Pierre Charbonnier, Laure Blanc-Feraud, Gilles Aubert, and Michel Barlaud. 1994. Two deterministic half-quadratic regularization algorithms for computed imaging. In IEEE International Conference on Image Processing (ICIP).Google ScholarCross Ref

7. Yue Chen, Debargha Mukherjee, Jingning Han, Adrian Grange, Yaowu Xu, Zoe Liu, Sarah Parker, Cheng Chen, Hui Su, Urvang Joshi, Ching-Han Chiang, Yunqing Wang, Paul Wilkins, Jim Bankoski, Luc N. Trudeau, Nathan E. Egge, Jean-Marc Valin, Thomas Davies, Steinar Midtskogen, Andrey Norkin, and Peter De Rivaz. 2018. An Overview of Core Coding Tools in the AV1 Video Codec. In IEEE Picture Coding Symposium (PCS).Google ScholarCross Ref

8. Yoojin Choi, Mostafa El-Khamy, and Jungwon Lee. 2019. Variable rate deep image compression with a conditional autoencoder. In International Conference on Computer Vision (ICCV).Google ScholarCross Ref

9. Xiaodong Cun, Feng Xu, Chi-Man Pun, and Hao Gao. 2018. Depth-Assisted Full Resolution Network for Single Image-Based View Synthesis. IEEE Computer Graphics and Applications 39, 2 (2018), 52–64.Google ScholarCross Ref

10. Jifeng Dai, Haozhi Qi, Yuwen Xiong, Yi Li, Guodong Zhang, Han Hu, and Yichen Wei. 2017. Deformable convolutional networks. In International Conference on Computer Vision (ICCV).Google ScholarCross Ref

11. Piotr Didyk, Tobias Ritschel, Elmar Eisemann, Karol Myszkowski, and Hans-Peter Seidel. 2011. A perceptual model for disparity. ACM Transactions on Graphics (SIGGRAPH) 30, 4 (2011), 96:1–96:10.Google ScholarDigital Library

12. Piotr Didyk, Tobias Ritschel, Elmar Eisemann, Karol Myszkowski, Hans-Peter Seidel, and Wojciech Matusik. 2012. A luminance-contrast-aware disparity model and applications. ACM Transactions on Graphics (SIGGRAPH Asia) 31, 6 (2012), 184:1–184:10.Google Scholar

13. David Eigen, Christian Puhrsch, and Rob Fergus. 2014. Depth Map Prediction from a Single Image using a Multi-Scale Deep Network. In Advances in Neural Information Processing Systems.Google Scholar

14. José M. Fácil, Benjamin Ummenhofer, Huizhong Zhou, Luis Montesano, Thomas Brox, and Javier Civera. 2019. CAM-Convs: Camera-Aware Multi-Scale Convolutions for Single-View Depth. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR).Google Scholar

15. John Flynn, Ivan Neulander, James Philbin, and Noah Snavely. 2016. DeepStereo: Learning to predict new views from the world’s imagery. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR).Google Scholar

16. Taiki Fukiage, Takahiro Kawabe, and Shin’ya Nishida. 2017. Hiding of phase-based stereo disparity for ghost-free viewing without glasses. ACM Transactions on Graphics (SIGGRAPH) 36, 4 (2017), 147:1–147:17.Google ScholarDigital Library

17. Andreas Geiger, Philip Lenz, Christoph Stiller, and Raquel Urtasun. 2013. Vision meets robotics: The KITTI dataset. The International Journal of Robotics Research (IJRR) 32, 11 (2013), 1231–1237.Google ScholarDigital Library

18. Ian Goodfellow, Yoshua Bengio, and Aaron Courville. 2016. Deep Learning. MIT Press. http://www.deeplearningbook.org.Google ScholarDigital Library

19. Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. 2016. Deep residual learning for image recognition. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR).Google ScholarCross Ref

20. Itay Hubara, Matthieu Courbariaux, Daniel Soudry, Ran El-Yaniv, and Yoshua Bengio. 2016. Binarized neural networks. In Advances in Neural Information Processing Systems.Google Scholar

21. Sergey Ioffe and Christian Szegedy. 2015. Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift. In Proceedings of the 32nd International Conference on Machine Learning (ICML).Google Scholar

22. Max Jaderberg, Karen Simonyan, Andrew Zisserman, et al. 2015. Spatial transformer networks. In Advances in Neural Information Processing Systems.Google Scholar

23. Petr Kellnhofer, Piotr Didyk, Karol Myszkowski, Mohamed M. Hefeeda, Hans-Peter Seidel, and Wojciech Matusik. 2016. GazeStereo3D: Seamless disparity manipulations. ACM Transactions on Graphics (SIGGRAPH) 35, 4 (2016), 1–13.Google ScholarDigital Library

24. Petr Kellnhofer, Piotr Didyk, Szu-Po Wang, Pitchaya Sitthi-Amorn, William Freeman, Fredo Durand, and Wojciech Matusik. 2017. 3DTV at home: Eulerian-Lagrangian stereo-to-multiview conversion. ACM Transactions on Graphics (SIGGRAPH) 36, 4 (2017), 146:1–146:13.Google ScholarDigital Library

25. Diederik P. Kingma and Jimmy Ba. 2015. Adam: A Method for Stochastic Optimization. In International Conference on Learning Representations (ICLR).Google Scholar

26. Yi Lai, Qian Wang, and Yin Gao. 2017. Content-Based Scalable Multi-View Video Coding Using 4D Wavelet. International Journal of Hybrid Information Technology 10, 8 (2017), 91–100.Google ScholarCross Ref

27. Manuel Lang, Alexander Hornung, Oliver Wang, Steven Poulakos, Aljoscha Smolic, and Markus H. Gross. 2010. Nonlinear disparity mapping for stereoscopic 3D. ACM Transactions on Graphics (SIGGRAPH) 29, 4 (2010), 75:1–75:10.Google ScholarDigital Library

28. Thomas Leimkühler, Petr Kellnhofer, Tobias Ritschel, Karol Myszkowski, and HansPeter Seidel. 2018. Perceptual real-time 2D-to-3D conversion using cue fusion. IEEE Transactions on Visualization & Computer Graphics 24, 6 (2018), 2037–2050.Google ScholarCross Ref

29. Yue Li, Dong Liu, Houqiang Li, Li Li, Zhu Li, and Feng Wu. 2019b. Learning a Convolutional Neural Network for Image Compact-Resolution. IEEE Transactions on Image Processing (TIP) 28, 3 (2019), 1092–1107.Google ScholarCross Ref

30. Zhengqi Li, Tali Dekel, Forrester Cole, Richard Tucker, Noah Snavely, Ce Liu, and William T. Freeman. 2019a. Learning the depths of moving people by watching frozen people. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR).Google Scholar

31. Miaomiao Liu, Xuming He, and Mathieu Salzmann. 2018. Geometry-aware deep network for single-image novel view synthesis. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR).Google ScholarCross Ref

32. Stephen Lombardi, Tomas Simon, Jason M. Saragih, Gabriel Schwartz, Andreas M. Lehrmann, and Yaser Sheikh. 2019. Neural volumes: learning dynamic renderable volumes from images. ACM Transactions on Graphics (SIGGRAPH) 38, 4 (2019), 65:1–65:14.Google ScholarDigital Library

33. Yue Luo, Jimmy Ren, Mude Lin, Jiahao Pang, Wenxiu Sun, Hongsheng Li, and Liang Lin. 2018. Single view stereo matching. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR).Google ScholarCross Ref

34. Bruhanth Mallik and Akbar Sheikh Akbari. 2016. HEVC based multi-view video codec using frame interleaving technique. In International Conference on Developments in eSystems Engineering (DeSE).Google ScholarCross Ref

35. Ben Mildenhall, Pratul P. Srinivasan, Matthew Tancik, Jonathan T. Barron, Ravi Ramamoorthi, and Ren Ng. 2020. NeRF: Representing Scenes as Neural Radiance Fields for View Synthesis. arXiv:2003.08934 [cs.CV]Google Scholar

36. Debargha Mukherjee, Jingning Han, Jim Bankoski, Ronald Bultje, Adrian Grange, John Koleszar, Paul Wilkins, and Yaowu Xu. 2015. A technical overview of VP9—The latest open-source video codec. SMPTE Motion Imaging Journal 124, 1 (2015), 44–54.Google ScholarCross Ref

37. Seungjun Nah, Tae Hyun Kim, and Kyoung Mu Lee. 2017. Deep Multi-scale Convolutional Neural Network for Dynamic Scene Deblurring. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR).Google Scholar

38. Simon Niklaus, Long Mai, Jimei Yang, and Feng Liu. 2019. 3D Ken Burns effect from a single image. ACM Transactions on Graphics (SIGGRAPH Asia) 38, 6 (2019), 184:1–184:15.Google Scholar

39. Adam Paszke, Sam Gross, Francisco Massa, Adam Lerer, James Bradbury, Gregory Chanan, Trevor Killeen, Zeming Lin, Natalia Gimelshein, Luca Antiga, Alban Desmaison, Andreas Köpf, Edward Yang, Zachary DeVito, Martin Raison, Alykhan Tejani, Sasank Chilamkurthy, Benoit Steiner, Lu Fang, Junjie Bai, and Soumith Chintala. 2019. PyTorch: An Imperative Style, High-Performance Deep Learning Library. In Advances in Neural Information Processing Systems.Google Scholar

40. Mohammad Rastegari, Vicente Ordonez, Joseph Redmon, and Ali Farhadi. 2017. XNOR-Net: ImageNet classification using binary convolutional neural networks. In European Conference on Computer Vision (ECCV).Google Scholar

41. Mark A. Robertson and Robert L. Stevenson. 2005. DCT quantization noise in compressed images. IEEE Transactions on Circuits and Systems for Video Technology (CSVT) 15, 1 (2005), 27–38.Google ScholarDigital Library

42. Steven Scher, Jing Liu, Rajan Vaish, Prabath Gunawardane, and James Davis. 2013. 3D+2DTV: 3D displays with no ghosting for viewers without glasses. ACM Transactions on Graphics (SIGGRAPH) 32, 3 (2013), 21:1–21:10.Google ScholarDigital Library

43. Donald J. Schuirmann. 1987. A comparison of the two one-sided tests procedure and the power approach for assessing the equivalence of average bioavailability. Journal of Pharmacokinetics and Biopharmaceutics 15, 6 (1987), 657–680.Google ScholarCross Ref

44. Guillaume Seguin, Karteek Alahari, Josef Sivic, and Ivan Laptev. 2015. Pose Estimation and Segmentation of People in 3D Movies. IEEE Transactions on Pattern Analysis & Machine Intelligence (PAMI) 37, 8 (2015), 1643–1655.Google ScholarDigital Library

45. Meng-Li Shih, Shih-Yang Su, Johannes Kopf, and Jia-Bin Huang. 2020. 3D Photography using Context-aware Layered Depth Inpainting. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR).Google ScholarCross Ref

46. Pratul P. Srinivasan, Richard Tucker, Jonathan T. Barron, Ravi Ramamoorthi, Ren Ng, and Noah Snavely. 2019. Pushing the Boundaries of View Extrapolation with Multiplane Images. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR).Google Scholar

47. Gary J. Sullivan, Jens-Rainer Ohm, Woo-Jin Han, and Thomas Wiegand. 2012. Overview of the high efficiency video coding (HEVC) standard. IEEE Transactions on Circuits and Systems for Video Technology (CSVT) 22, 12 (2012), 1649–1668.Google ScholarDigital Library

48. Deqing Sun, Xiaodong Yang, Ming-Yu Liu, and Jan Kautz. 2018. PWC-Net: CNNs for Optical Flow Using Pyramid, Warping, and Cost Volume. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR).Google ScholarCross Ref

49. Gerhard Tech, Ying Chen, Karsten Müller, Jens-Rainer Ohm, Anthony Vetro, and Ye-Kui Wang. 2016. Overview of the multiview and 3D extensions of high efficiency video coding. IEEE Transactions on Circuits and Systems for Video Technology (CSVT) 26, 1 (2016), 35–49.Google ScholarDigital Library

50. Anthony Vetro. 2010. Frame compatible formats for 3D video distribution. In IEEE International Conference on Image Processing (ICIP).Google ScholarCross Ref

51. Anthony Vetro, Thomas Wiegand, and Gary J. Sullivan. 2011. Overview of the stereo and multiview video coding extensions of the H. 264/MPEG-4 AVC standard. Proc. IEEE 99, 4 (2011), 626–642.Google ScholarCross Ref

52. Yingqian Wang, Longguang Wang, Jungang Yang, Wei An, and Yulan Guo. 2019b. Flickr1024: A Large-Scale Dataset for Stereo Image Super-Resolution. In International Conference on Computer Vision Workshops.Google ScholarCross Ref

53. Zihan Wang, Neng Gao, Xin Wang, Ji Xiang, Daren Zha, and Linghui Li. 2019a. HidingGAN: High Capacity Information Hiding with Generative Adversarial Network. Computer Graphics Forum 38, 7 (2019), 393–401.Google ScholarCross Ref

54. Zhou Wang, Eero P. Simoncelli, and Alan C. Bovik. 2003. Multiscale structural similarity for image quality assessment. In The Thirty-Seventh Asilomar Conference on Signals, Systems & Computers. IEEE.Google Scholar

55. Eric Wengrowski and Kristin Dana. 2019. Light Field Messaging With Deep Photographic Steganography. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR).Google Scholar

56. Thomas Wiegand, Gary J. Sullivan, Gisle Bjontegaard, and Ajay Luthra. 2003. Overview of the H. 264/AVC video coding standard. IEEE Transactions on Circuits and Systems for Video Technology (CSVT) 13, 7 (2003), 560–576.Google ScholarDigital Library

57. Menghan Xia, Xueting Liu, and Tien-Tsin Wong. 2018. Invertible grayscale. ACM Transactions on Graphics (SIGGRAPH Asia) 37, 6 (2018), 246:1–246:10.Google Scholar

58. Junyuan Xie, Ross B. Girshick, and Ali Farhadi. 2016. Deep3D: Fully Automatic 2D-to-3D Video Conversion with Deep Convolutional Neural Networks. In European Conference on Computer Vision (ECCV).Google ScholarCross Ref

59. Zexiang Xu, Sai Bi, Kalyan Sunkavalli, Sunil Hadap, Hao Su, and Ravi Ramamoorthi. 2019. Deep view synthesis from sparse photometric images. ACM Transactions on Graphics (SIGGRAPH) 38, 4 (2019), 76:1–76:13.Google ScholarDigital Library

60. Tinghui Zhou, Richard Tucker, John Flynn, Graham Fyffe, and Noah Snavely. 2018. Stereo magnification: learning view synthesis using multiplane images. ACM Transactions on Graphics (SIGGRAPH) 37, 4 (2018), 65:1–65:12.Google ScholarDigital Library

61. Jiren Zhu, Russell Kaplan, Justin Johnson, and Li Fei-Fei. 2018. HiDDeN: Hiding Data With Deep Networks. In European Conference on Computer Vision (ECCV).Google Scholar

62. Xizhou Zhu, Han Hu, Stephen Lin, and Jifeng Dai. 2019. Deformable ConvNets v2: More deformable, better results. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR).Google ScholarCross Ref