“MoGlow: probabilistic and controllable motion synthesis using normalising flows” by Henter, Alexanderson and Beskow

Title:

- MoGlow: probabilistic and controllable motion synthesis using normalising flows

Session/Category Title:

- Learning to Move and Synthesize

Presenter(s)/Author(s):

- Gustav Eje Henter
- Simon Alexanderson
- Jonas Beskow

Abstract:

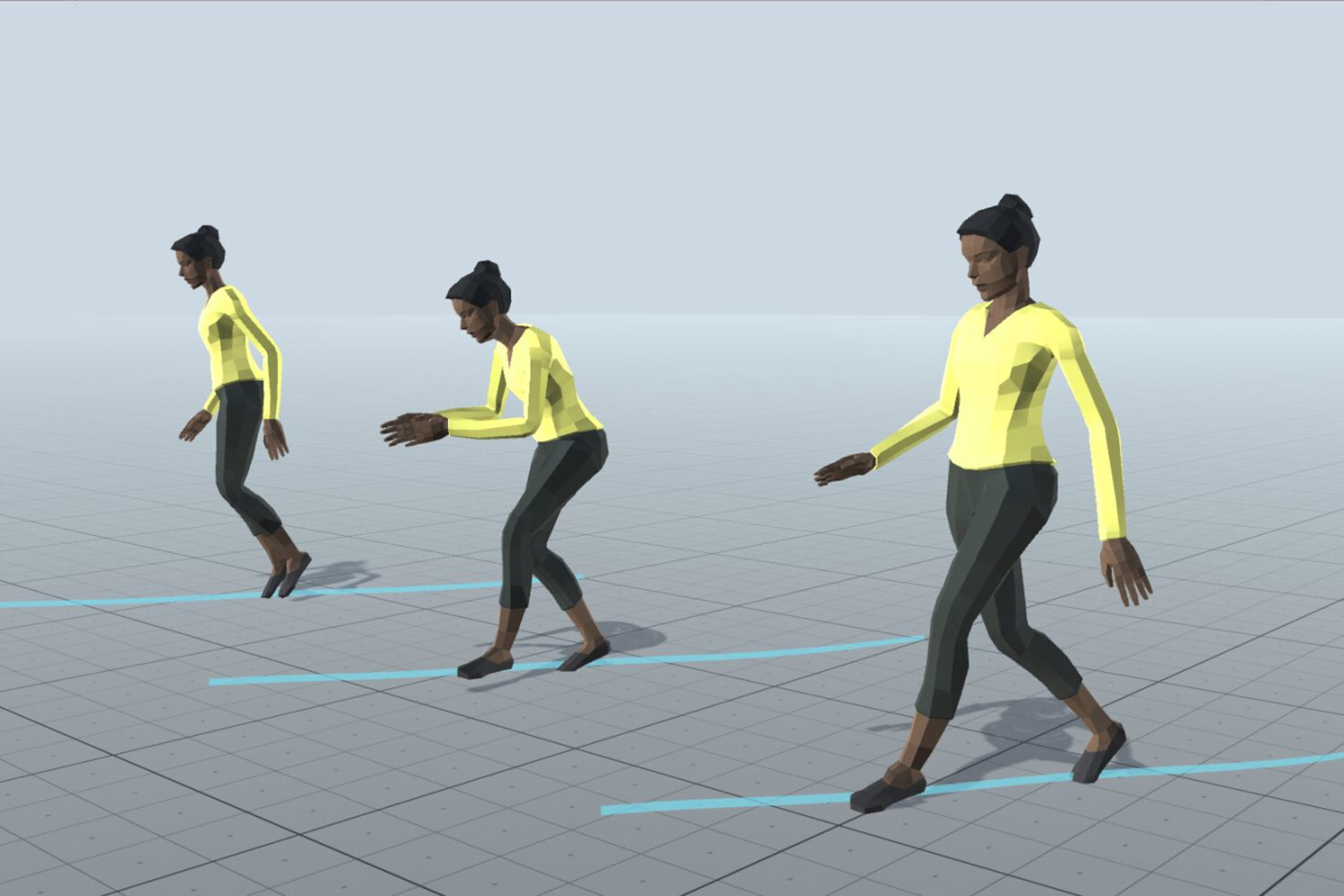

Data-driven modelling and synthesis of motion is an active research area with applications that include animation, games, and social robotics. This paper introduces a new class of probabilistic, generative, and controllable motion-data models based on normalising flows. Models of this kind can describe highly complex distributions, yet can be trained efficiently using exact maximum likelihood, unlike GANs or VAEs. Our proposed model is autoregressive and uses LSTMs to enable arbitrarily long time-dependencies. Importantly, it is also causal, meaning that each pose in the output sequence is generated without access to poses or control inputs from future time steps; this absence of algorithmic latency is important for interactive applications with real-time motion control. The approach can in principle be applied to any type of motion since it does not make restrictive, task-specific assumptions regarding the motion or the character morphology. We evaluate the models on motion-capture datasets of human and quadruped locomotion. Objective and subjective results show that randomly-sampled motion from the proposed method outperforms task-agnostic baselines and attains a motion quality close to recorded motion capture.
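The two properties the abstract stresses, exact likelihood and causality, can be illustrated with a toy sketch. The code below is not the authors' implementation: it uses a single conditional affine coupling step on a two-dimensional "pose", and the hypothetical function `scale_and_shift` is a fixed stand-in for the learned, LSTM-based conditioning network. All names and constants are assumptions chosen for illustration.

```python
import math
import random


def scale_and_shift(x_a, context):
    """Toy stand-in for a learned network (assumption): returns (log_scale, shift)."""
    h = math.tanh(0.5 * x_a + 0.3 * sum(context))
    return 0.2 * h, 0.8 * h


def forward(x, context):
    """Map a pose x = (x_a, x_b) to a latent z; also return log|det dz/dx|."""
    x_a, x_b = x
    log_scale, shift = scale_and_shift(x_a, context)
    z = (x_a, (x_b - shift) * math.exp(-log_scale))
    return z, -log_scale


def inverse(z, context):
    """Exact inverse of forward(): map a latent z back to a pose."""
    z_a, z_b = z
    log_scale, shift = scale_and_shift(z_a, context)
    return (z_a, z_b * math.exp(log_scale) + shift)


def log_likelihood(x, context):
    """Exact log p(x | context) via the change-of-variables formula."""
    z, log_det = forward(x, context)
    log_base = sum(-0.5 * (v * v + math.log(2.0 * math.pi)) for v in z)
    return log_base + log_det


def sample_sequence(controls, rng):
    """Causal autoregressive sampling: step t sees only the previous pose and control t."""
    pose = (0.0, 0.0)
    poses = []
    for control in controls:
        context = (pose[0], pose[1], control)
        z = (rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0))
        pose = inverse(z, context)
        poses.append(pose)
    return poses


if __name__ == "__main__":
    poses = sample_sequence([0.1, 0.2, 0.3], random.Random(0))
    print(poses)
    print(log_likelihood(poses[0], (0.0, 0.0, 0.1)))
```

Because the transformation is invertible with a tractable Jacobian, `log_likelihood` is exact rather than a bound, which is what allows maximum-likelihood training. Because each step's context contains only the previous pose and the current control value, changing a later control input cannot alter poses that have already been generated.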
