“Manga filling style conversion with screentone variational autoencoder” by Xie, Li, Liu and Wong

Title:

- Manga filling style conversion with screentone variational autoencoder

Session/Category Title:

- Image Synthesis with Generative Models

Abstract:

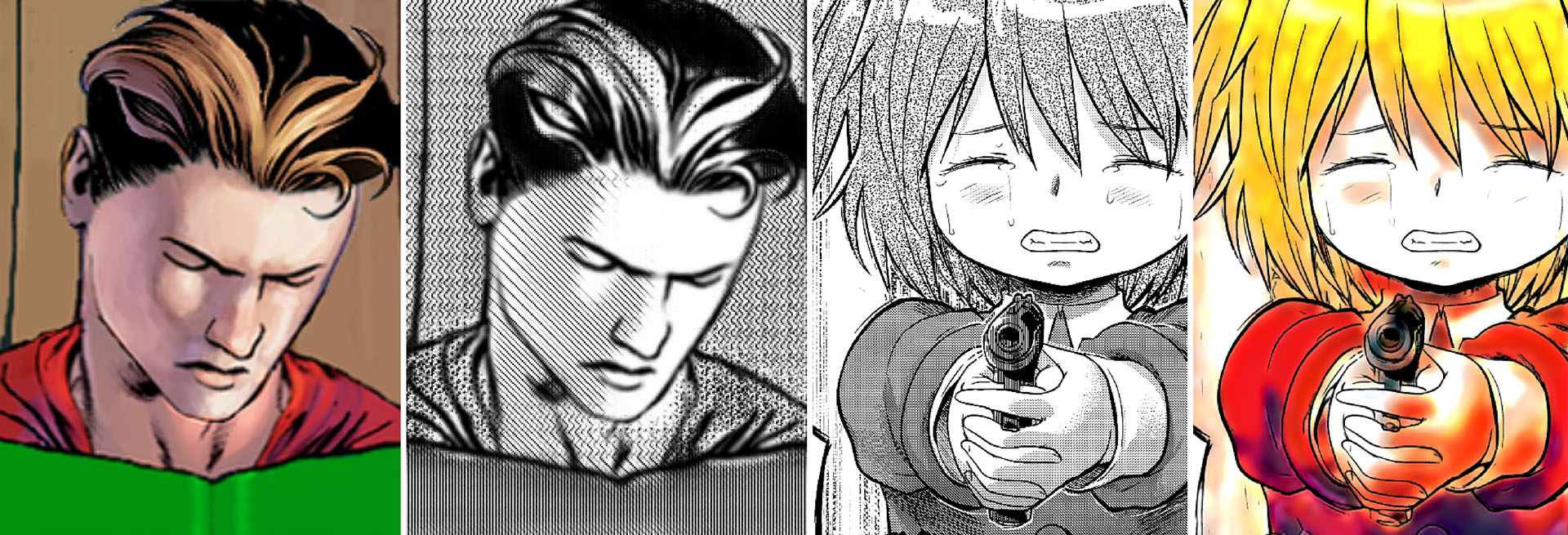

Western color comics and Japanese-style screened manga are two popular comic styles. They differ mainly in how regions are filled. However, converting between the two region-filling styles is very challenging and is currently done manually. In this paper, we identify that the major obstacle to this conversion stems from the difference between the fundamental properties of screened region-filling and colored region-filling. To resolve this obstacle, we propose a screentone variational autoencoder, ScreenVAE, that maps screened manga to an intermediate domain. This intermediate domain summarizes local texture characteristics and is interpolative. With this domain, we effectively unify the properties of screening and color-filling, and ease the learning of bidirectional translation between screened manga and color comics. To carry out the bidirectional translation, we further propose a network that learns the translation between the intermediate domain and color comics. Our model can generate high-quality screened manga from a color comic, and generate color comics that retain the original screening intention of the bitonal manga artist. Several results are shown to demonstrate the effectiveness and convenience of the proposed method. We also demonstrate how the intermediate domain can assist other applications such as manga inpainting and photo-to-comic conversion.
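For readers unfamiliar with variational autoencoders, the following is a minimal, generic sketch of the two ingredients that make a VAE latent space smooth enough to interpolate: sampling through the reparameterization trick, and a KL penalty toward a standard normal prior. It is not the ScreenVAE architecture or its loss; the function names, the two-dimensional latent codes, and the use of plain Python lists are all assumptions made for illustration.

```python
import math
import random


def reparameterize(mu, log_var, rng):
    """Sample z = mu + sigma * eps with eps ~ N(0, 1), element-wise.

    mu and log_var stand in for the outputs of a (hypothetical) encoder.
    """
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu, log_var):
    """KL(N(mu, sigma^2) || N(0, 1)), summed over latent dimensions."""
    return 0.5 * sum(m * m + math.exp(lv) - lv - 1.0
                     for m, lv in zip(mu, log_var))


def interpolate(z_a, z_b, t):
    """Linear blend between two latent codes, with t in [0, 1]."""
    return [(1.0 - t) * a + t * b for a, b in zip(z_a, z_b)]


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so repeated runs match
    # Hypothetical encoder outputs for two different screentone patches.
    z_a = reparameterize([0.0, 0.0], [0.0, 0.0], rng)
    z_b = reparameterize([2.0, -1.0], [-1.0, -1.0], rng)
    print("KL of patch b:", kl_to_standard_normal([2.0, -1.0], [-1.0, -1.0]))
    print("midpoint code:", interpolate(z_a, z_b, 0.5))
```

In a full model, a decoder would map the blended code back to an image; the KL term is what encourages nearby codes to decode to similar outputs, which is the general property the abstract refers to as "interpolative".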
