“Lips-sync 3D speech animation” by Huang, Chen, Chuang and Guan

Conference:

Type(s):

Title:

- Lips-sync 3D speech animation

Presenter(s)/Author(s):

Abstract:

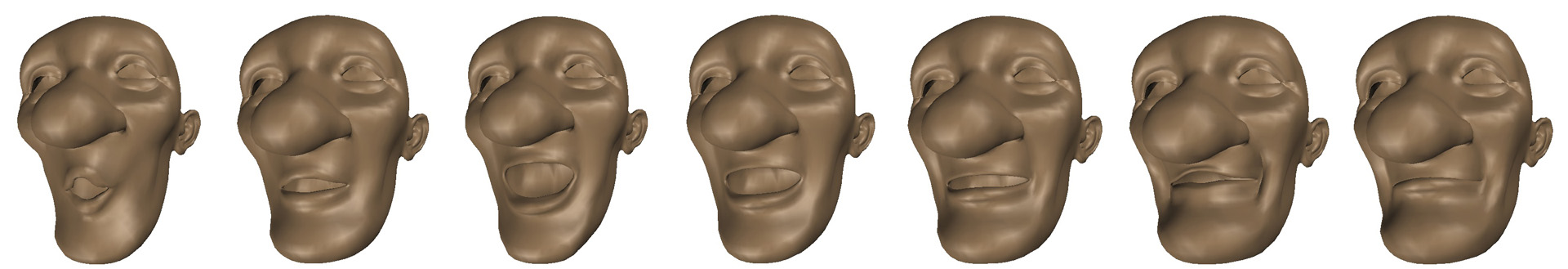

Facial animation is traditionally considered an important but tedious task in many applications. Demand for lip-sync animation has recently been increasing, but few fast and easy methods for generating it seem to exist. In this talk, we present a system that synthesizes lip-sync speech animation for a novel utterance. Our system uses a nonlinear blend-shape method and derives key shapes using a novel automatic clustering algorithm. Finally, a Gaussian-phoneme model predicts the motion dynamics used to synthesize a new speech animation.

References:

1. Ezzat, T., Geiger, G., and Poggio, T. 2002. Trainable video-realistic speech animation. In SIGGRAPH 2002, 388–398.

2. Frey, B. J., and Dueck, D. 2007. Clustering by passing messages between data points. Science 315, 5814.

3. Sumner, R. W., Zwicker, M., Gotsman, C., and Popović, J. 2005. Mesh-based inverse kinematics. ACM TOG 24, 3 (SIGGRAPH 2005), 488–495.