“Learning to Generate 3D Shapes from a Single Example” by Wu and Zheng

Conference:

Type(s):

Title:

- Learning to Generate 3D Shapes from a Single Example

Session/Category Title:

- Shape Generation

Presenter(s)/Author(s):

Abstract:

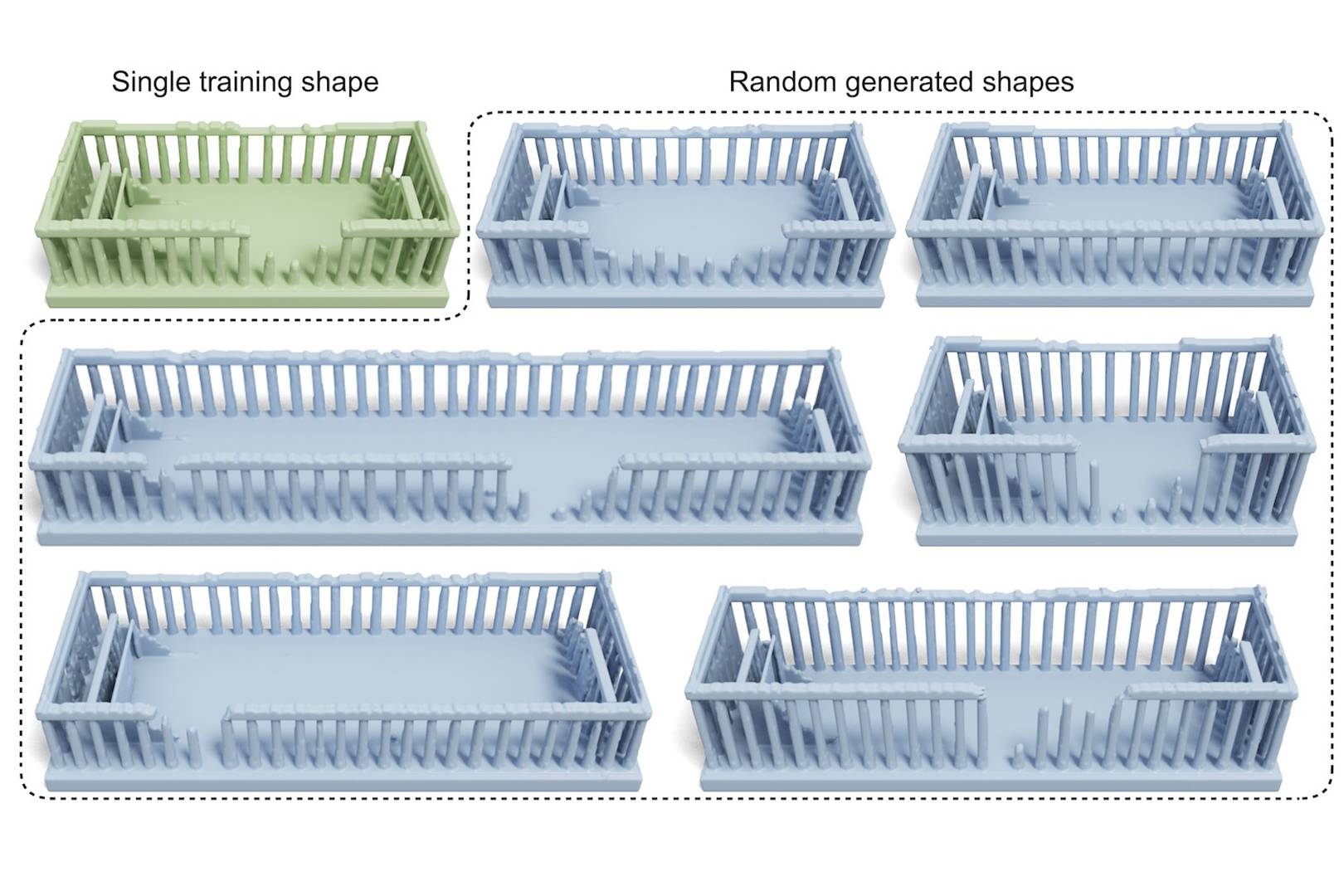

Existing generative models for 3D shapes are typically trained on a large 3D dataset, often of a specific object category. In this paper, we investigate a deep generative model that learns from only a single reference 3D shape. Specifically, we present a multi-scale GAN-based model designed to capture the input shape’s geometric features across a range of spatial scales. To avoid the large memory and computational cost induced by operating on the 3D volume, we build our generator atop the tri-plane hybrid representation, which requires only 2D convolutions. We train our generative model on a voxel pyramid of the reference shape, without the need for any external supervision or manual annotation. Once trained, our model can generate diverse and high-quality 3D shapes, possibly of different sizes and aspect ratios. The resulting shapes present variations across different scales while retaining the global structure of the reference shape. Through extensive evaluation, both qualitative and quantitative, we demonstrate that our model can generate 3D shapes of various types.
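The tri-plane idea mentioned in the abstract can be illustrated with a small sketch. It is not the authors' implementation: the function names, the random plane contents, and the choice to sum the three per-plane features are all assumptions made for illustration. The sketch stores features on three axis-aligned 2D grids (XY, XZ, YZ), looks up a 3D point by projecting it onto each plane and interpolating bilinearly, and compares the storage of three planes against a dense 3D grid of the same resolution.

```python
import random


def make_plane(res, channels, rng):
    """Hypothetical helper: a res x res grid of random feature vectors (res >= 2)."""
    return [[[rng.uniform(-1.0, 1.0) for _ in range(channels)]
             for _ in range(res)] for _ in range(res)]


def bilinear(plane, u, v):
    """Bilinearly interpolate the feature vector at (u, v), with u and v in [0, 1]."""
    res = len(plane)
    x, y = u * (res - 1), v * (res - 1)
    # Clamp the lower cell index so that u == 1 or v == 1 stays inside the grid.
    x0, y0 = min(int(x), res - 2), min(int(y), res - 2)
    tx, ty = x - x0, y - y0
    f00, f10 = plane[x0][y0], plane[x0 + 1][y0]
    f01, f11 = plane[x0][y0 + 1], plane[x0 + 1][y0 + 1]
    return [(1 - tx) * (1 - ty) * a + tx * (1 - ty) * b
            + (1 - tx) * ty * c + tx * ty * d
            for a, b, c, d in zip(f00, f10, f01, f11)]


def query_triplane(planes, point):
    """Project a point in [0, 1]^3 onto the three planes and sum the features.

    Summation is an assumed aggregation; concatenation is another option.
    """
    x, y, z = point
    f_xy = bilinear(planes["xy"], x, y)
    f_xz = bilinear(planes["xz"], x, z)
    f_yz = bilinear(planes["yz"], y, z)
    return [a + b + c for a, b, c in zip(f_xy, f_xz, f_yz)]


def triplane_size(res, channels):
    """Number of stored values for three res x res feature planes."""
    return 3 * res * res * channels


def dense_size(res, channels):
    """Number of stored values for a dense res^3 feature volume."""
    return res ** 3 * channels


if __name__ == "__main__":
    rng = random.Random(0)
    planes = {name: make_plane(8, 4, rng) for name in ("xy", "xz", "yz")}
    print(query_triplane(planes, (0.25, 0.5, 0.75)))
    print(triplane_size(128, 32), dense_size(128, 32))
```

Under these assumptions the planes hold `3 * res**2 * channels` values, while a dense volume holds `res**3 * channels`, so the saving grows linearly with resolution. In a full model the planes would come from a 2D convolutional generator and the queried feature would go to a decoder, neither of which is shown here.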
