“Learning Reconstructability for Drone Aerial Path Planning” by Liu, Lin, Hu, Xie, Fu, et al. …

Title:

- Learning Reconstructability for Drone Aerial Path Planning

Session/Category Title:

- Acquisition

Abstract:

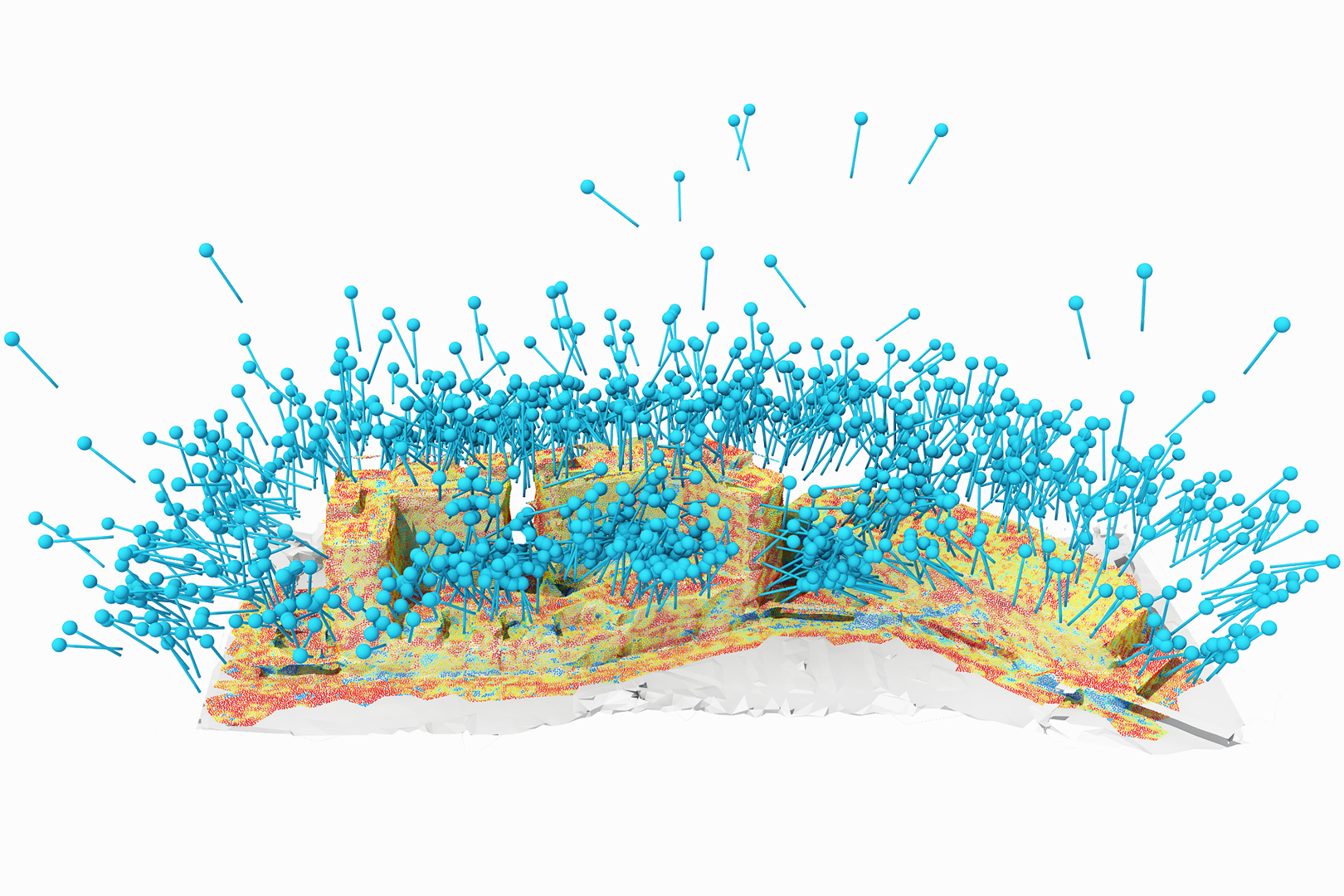

We introduce the first learning-based reconstructability predictor to improve view and path planning for large-scale 3D urban scene acquisition using unmanned drones. In contrast to previous heuristic approaches, our method learns a model that explicitly predicts how well a 3D urban scene will be reconstructed from a set of viewpoints. To make such a model trainable and simultaneously applicable to drone path planning, we simulate the proxy-based 3D scene reconstruction during training to set up the prediction. Specifically, the neural network we design is trained to predict the scene reconstructability as a function of the proxy geometry, a set of viewpoints, and optionally a series of scene images acquired in flight. To reconstruct a new urban scene, we first build the 3D scene proxy, then rely on the reconstruction quality and uncertainty measures that our network predicts from the proxy geometry to guide the drone path planning. We demonstrate that our data-driven reconstructability predictions are more closely correlated with the true reconstruction quality than prior heuristic measures. Further, our learned predictor can be easily integrated into existing path planners to yield improvements. Finally, we devise a new iterative view planning framework, based on the learned reconstructability, and show superior performance of the new planner when reconstructing both synthetic and real scenes.
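To make the idea of planning against a reconstructability score more concrete, the following is a minimal Python sketch of a greedy, iterative view selector. It is not the authors' planner or network: `predict_reconstructability` is a hypothetical hand-written stand-in for the learned predictor, and the names `mean_score`, `plan_views`, and `max_dist`, the scoring formula, and the greedy stopping rule are all assumptions made for illustration only.

```python
import math


def predict_reconstructability(point, normal, views, max_dist=50.0):
    """Hypothetical stand-in for a learned predictor (NOT the paper's network).

    Scores one proxy surface sample in [0, 1) from the views that face it.
    `normal` is assumed to be a unit vector.
    """
    gain = 0.0
    for v in views:
        d = [v[i] - point[i] for i in range(3)]
        dist = math.sqrt(sum(c * c for c in d))
        if dist == 0.0 or dist > max_dist:
            continue  # view coincides with the sample or is too far away
        cos_angle = sum(d[i] * normal[i] for i in range(3)) / dist
        if cos_angle > 0.0:  # only views in front of the surface contribute
            gain += cos_angle
    return 1.0 - math.exp(-gain)


def mean_score(samples, views):
    """Average predicted score over all (point, normal) proxy samples."""
    total = sum(predict_reconstructability(p, n, views) for p, n in samples)
    return total / len(samples)


def plan_views(samples, candidates, budget):
    """Greedily add the candidate view that most raises the mean score."""
    chosen = []
    remaining = list(candidates)
    while remaining and len(chosen) < budget:
        best = max(remaining, key=lambda v: mean_score(samples, chosen + [v]))
        if mean_score(samples, chosen + [best]) <= mean_score(samples, chosen):
            break  # no remaining candidate improves the prediction
        chosen.append(best)
        remaining.remove(best)
    return chosen


# Two upward-facing proxy samples and three candidate viewpoints (assumed data).
samples = [((0, 0, 0), (0, 0, 1)), ((10, 0, 0), (0, 0, 1))]
candidates = [(0, 0, 10), (10, 0, 10), (5, 0, -10)]
chosen = plan_views(samples, candidates, budget=3)
print(chosen)
```

In this toy setup the candidate below the surface never improves the score, so the loop stops after two views even though the budget allows three. A learned predictor could in principle be dropped in by replacing `predict_reconstructability` while leaving the selection loop unchanged.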
