“Learning a model of facial shape and expression from 4D scans” by Li, Bolkart, Black, Li and Romero

Session/Category Title: Avatars and Faces

Abstract:

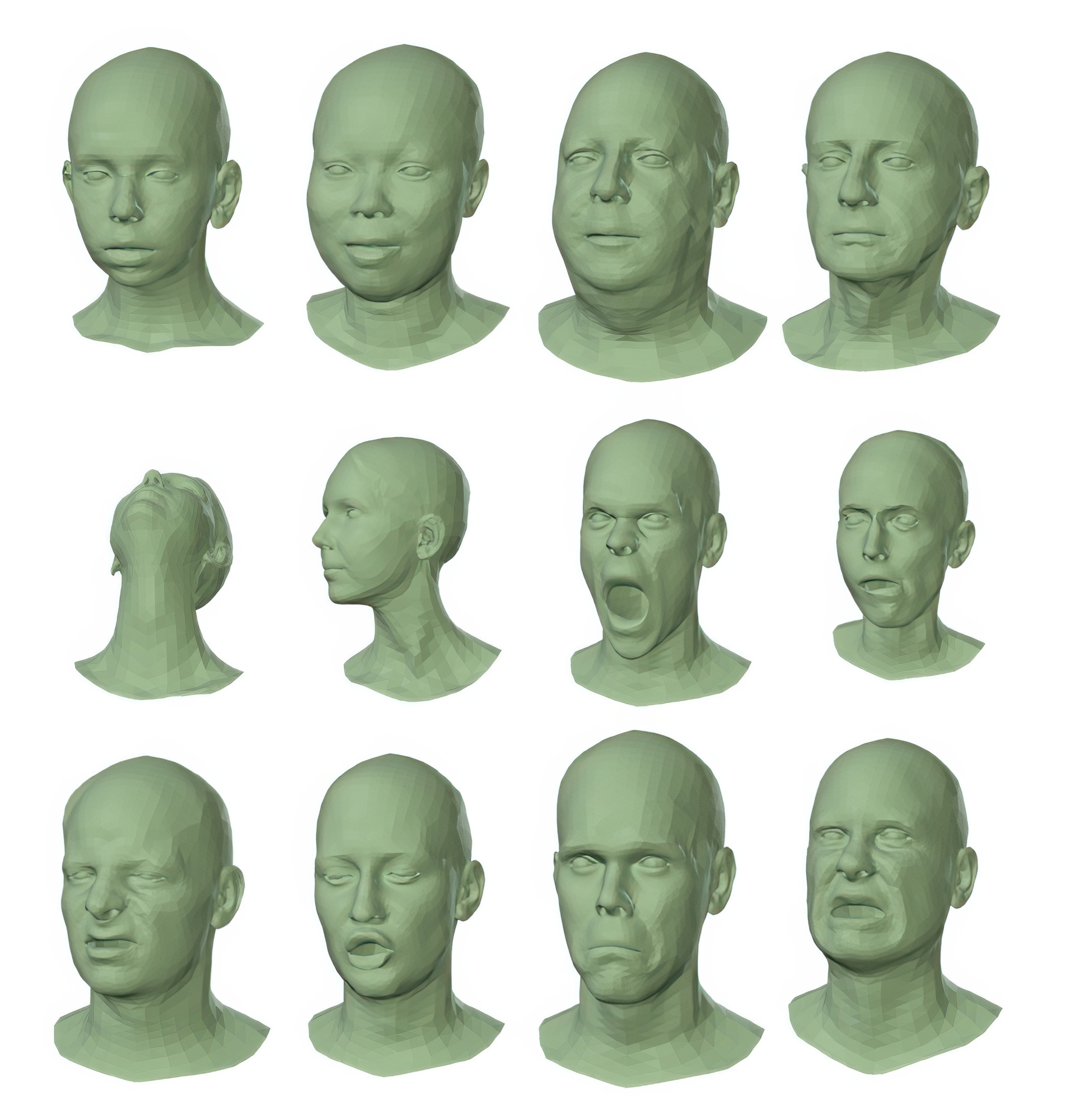

The field of 3D face modeling has a large gap between high-end and low-end methods. At the high end, the best facial animation is indistinguishable from real humans, but this comes at the cost of extensive manual labor. At the low end, face capture from consumer depth sensors relies on 3D face models that are not expressive enough to capture the variability in natural facial shape and expression. We seek a middle ground by learning a facial model from thousands of accurately aligned 3D scans. Our FLAME model (Faces Learned with an Articulated Model and Expressions) is designed to work with existing graphics software and to be easy to fit to data. FLAME uses a linear shape space trained from 3800 scans of human heads. FLAME combines this linear shape space with an articulated jaw, neck, and eyeballs, pose-dependent corrective blendshapes, and additional global expression blendshapes. The pose- and expression-dependent articulations are learned from 4D face sequences in the D3DFACS dataset along with additional 4D sequences. We accurately register a template mesh to the scan sequences and make the D3DFACS registrations available for research purposes. In total, the model is trained on over 33,000 scans. FLAME is low-dimensional but more expressive than the FaceWarehouse model and the Basel Face Model. We compare FLAME to these models by fitting them to static 3D scans and 4D sequences using the same optimization method. FLAME is significantly more accurate and is available for research purposes (http://flame.is.tue.mpg.de).
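To make the model structure in the abstract more concrete, the following is a minimal NumPy sketch of a FLAME-like forward pass: a template mesh plus linear identity, expression, and pose-corrective blendshape offsets, followed by linear blend skinning over a small joint tree. Everything here is an illustrative assumption rather than the released FLAME code: the joint layout (a global root with neck, jaw, and two eyeball joints), the names `ToyHeadModel`, `make_toy_model`, `forward`, and `fit_shape`, the toy array sizes, the use of flattened `R - I` rotation features to drive the pose correctives, and the random arrays standing in for learned parameters. The `fit_shape` helper is a much-simplified stand-in for model fitting (least squares with known vertex correspondence and no pose), not the optimization used in the paper.

```python
from dataclasses import dataclass
import numpy as np

# Hypothetical joint layout for illustration: global root, neck, jaw, two eyeballs.
JOINT_NAMES = ["global", "neck", "jaw", "eye_left", "eye_right"]
PARENTS = [-1, 0, 1, 1, 1]  # parent of each joint; parents precede children

@dataclass
class ToyHeadModel:
    template: np.ndarray    # (N, 3) mean head mesh
    shape_dirs: np.ndarray  # (N, 3, B) identity blendshapes
    expr_dirs: np.ndarray   # (N, 3, E) expression blendshapes
    pose_dirs: np.ndarray   # (N, 3, 9 * (J - 1)) pose-corrective blendshapes
    joint_reg: np.ndarray   # (J, N) regresses joint locations from vertices
    weights: np.ndarray     # (N, J) skinning weights, each row sums to 1

def make_toy_model(n_verts=50, n_shape=10, n_expr=5, seed=0):
    """Random stand-in data; NOT learned FLAME parameters."""
    rng = np.random.default_rng(seed)
    J = len(PARENTS)
    joint_reg = rng.random((J, n_verts))
    joint_reg /= joint_reg.sum(axis=1, keepdims=True)
    weights = rng.random((n_verts, J))
    weights /= weights.sum(axis=1, keepdims=True)
    return ToyHeadModel(
        template=rng.normal(size=(n_verts, 3)),
        shape_dirs=rng.normal(scale=0.1, size=(n_verts, 3, n_shape)),
        expr_dirs=rng.normal(scale=0.1, size=(n_verts, 3, n_expr)),
        pose_dirs=rng.normal(scale=0.01, size=(n_verts, 3, 9 * (J - 1))),
        joint_reg=joint_reg,
        weights=weights,
    )

def rodrigues(r):
    """Axis-angle vector (3,) -> rotation matrix (3, 3)."""
    angle = np.linalg.norm(r)
    if angle < 1e-12:
        return np.eye(3)
    k = r / angle
    K = np.array([[0.0, -k[2], k[1]], [k[2], 0.0, -k[0]], [-k[1], k[0], 0.0]])
    return np.eye(3) + np.sin(angle) * K + (1.0 - np.cos(angle)) * (K @ K)

def forward(model, beta, theta, psi):
    """beta: (B,) identity, theta: (J, 3) axis-angle per joint, psi: (E,) expression."""
    shaped = model.template + model.shape_dirs @ beta  # identity offsets only
    joints = model.joint_reg @ shaped                  # joints depend on identity only
    rots = np.stack([rodrigues(t) for t in theta])     # (J, 3, 3)
    # Pose-corrective features: (R_k - I) of every non-root joint, flattened.
    pose_feat = (rots[1:] - np.eye(3)).ravel()
    rest = shaped + model.expr_dirs @ psi + model.pose_dirs @ pose_feat

    # Chain local rigid transforms down the kinematic tree.
    G = np.zeros((len(PARENTS), 4, 4))
    for k, p in enumerate(PARENTS):
        local = np.eye(4)
        local[:3, :3] = rots[k]
        local[:3, 3] = joints[k] if p < 0 else joints[k] - joints[p]
        G[k] = local if p < 0 else G[p] @ local
    # Make each transform act on rest-pose vertices (remove rest joint position).
    G[:, :3, 3] -= np.einsum("kij,kj->ki", G[:, :3, :3], joints)

    # Linear blend skinning: per-vertex weighted sum of joint transforms.
    blended = np.einsum("nk,kij->nij", model.weights, G)
    rest_h = np.hstack([rest, np.ones((len(rest), 1))])
    return np.einsum("nij,nj->ni", blended, rest_h)[:, :3]

def fit_shape(model, target):
    """Least-squares identity fit to a neutral, unposed target with known correspondence."""
    A = model.shape_dirs.reshape(-1, model.shape_dirs.shape[-1])  # (3N, B)
    b = (target - model.template).ravel()                         # (3N,)
    beta, *_ = np.linalg.lstsq(A, b, rcond=None)
    return beta

if __name__ == "__main__":
    model = make_toy_model()
    beta_true = np.random.default_rng(1).normal(size=10)
    neutral = forward(model, beta_true, np.zeros((len(PARENTS), 3)), np.zeros(5))
    print(np.allclose(fit_shape(model, neutral), beta_true))
```

With all pose angles at zero, every joint transform reduces to the identity, so the output is just the template plus the linear identity and expression offsets; rotating only the root rigidly rotates the whole head about the root joint. In this sketch the skeleton is regressed from the identity-shaped mesh alone, so changing expression moves vertices but not joints; that is a design choice of the sketch.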

References:

1. O. Alexander, M. Rogers, W. Lambeth, M. Chiang, and P. Debevec. 2009. The Digital Emily Project: Photoreal Facial Modeling and Animation. In SIGGRAPH 2009 Courses. 12:1–12:15.

2. B. Allen, B. Curless, and Z. Popović. 2003. The space of human body shapes: Reconstruction and parameterization from range scans. In Transactions on Graphics (Proceedings of SIGGRAPH), Vol. 22. 587–594.

3. B. Allen, B. Curless, Z. Popović, and A. Hertzmann. 2006. Learning a Correlated Model of Identity and Pose-dependent Body Shape Variation for Real-time Synthesis. In ACM SIGGRAPH/Eurographics Symposium on Computer Animation (SCA ’06). 147–156.

4. B. Amberg, R. Knothe, and T. Vetter. 2008. Expression Invariant 3D Face Recognition with a Morphable Model. In International Conference on Automatic Face and Gesture Recognition. 1–6.

5. B. Amberg, S. Romdhani, and T. Vetter. 2007. Optimal Step Nonrigid ICP Algorithms for Surface Registration. In Conference on Computer Vision and Pattern Recognition. 1–8.

6. D. Anguelov, P. Srinivasan, D. Koller, S. Thrun, J. Rodgers, and J. Davis. 2005. SCAPE: Shape Completion and Animation of People. Transactions on Graphics (Proceedings of SIGGRAPH) 24, 3 (2005), 408–416.

7. T. Beeler and D. Bradley. 2014. Rigid Stabilization of Facial Expressions. Transactions on Graphics (Proceedings of SIGGRAPH) 33, 4 (2014), 44:1–44:9.

8. T. Beeler, F. Hahn, D. Bradley, B. Bickel, P. Beardsley, C. Gotsman, R. W. Sumner, and M. Gross. 2011. High-quality passive facial performance capture using anchor frames. Transactions on Graphics (Proceedings of SIGGRAPH) 30, 4 (2011), 75:1–75:10.

9. V. Blanz and T. Vetter. 1999. A morphable model for the synthesis of 3D faces. In SIGGRAPH. 187–194.

10. F. Bogo, M. J. Black, M. Loper, and J. Romero. 2015. Detailed Full-Body Reconstructions of Moving People from Monocular RGB-D Sequences. In International Conference on Computer Vision. 2300–2308.

11. F. Bogo, J. Romero, M. Loper, and M. J. Black. 2014. FAUST: Dataset and Evaluation for 3D Mesh Registration. In Conference on Computer Vision and Pattern Recognition. 3794–3801.

12. T. Bolkart and S. Wuhrer. 2015. A Groupwise Multilinear Correspondence Optimization for 3D Faces. In International Conference on Computer Vision. 3604–3612.

13. J. Booth, A. Roussos, A. Ponniah, D. Dunaway, and S. Zafeiriou. 2017. Large Scale 3D Morphable Models. International Journal of Computer Vision (2017), 1–22.

14. J. Booth, A. Roussos, S. Zafeiriou, A. Ponniah, and D. Dunaway. 2016. A 3D Morphable Model Learnt from 10,000 Faces. In Conference on Computer Vision and Pattern Recognition. 5543–5552.

15. S. Bouaziz, Y. Wang, and M. Pauly. 2013. Online modeling for realtime facial animation. Transactions on Graphics (Proceedings of SIGGRAPH) 32, 4 (2013), 40:1–40:10.

16. A. Bronstein, M. Bronstein, and R. Kimmel. 2008. Numerical Geometry of Non-Rigid Shapes (1 ed.). Springer Publishing Company, Incorporated.

17. A. Brunton, T. Bolkart, and S. Wuhrer. 2014. Multilinear wavelets: A statistical shape space for human faces. In European Conference on Computer Vision. 297–312.

18. C. Cao, D. Bradley, K. Zhou, and T. Beeler. 2015. Real-time High-fidelity Facial Performance Capture. Transactions on Graphics (Proceedings of SIGGRAPH) 34, 4 (2015), 46:1–46:9.

19. C. Cao, Y. Weng, S. Zhou, Y. Tong, and K. Zhou. 2014. FaceWarehouse: A 3D facial expression database for visual computing. Transactions on Visualization and Computer Graphics 20, 3 (2014), 413–425.

20. Y. Chen, D. Robertson, and R. Cipolla. 2011. A Practical System for Modelling Body Shapes from Single View Measurements. In British Machine Vision Conference. 82.1–82.11.

21. D. Cosker, E. Krumhuber, and A. Hilton. 2011. A FACS valid 3D dynamic action unit database with applications to 3D dynamic morphable facial modeling. In International Conference on Computer Vision. 2296–2303.

22. R. Davies, C. Twining, and C. Taylor. 2008. Statistical Models of Shape: Optimisation and Evaluation. Springer.

23. L. Dutreve, A. Meyer, and S. Bouakaz. 2011. Easy acquisition and real-time animation of facial wrinkles. Computer Animation and Virtual Worlds 22, 2–3 (2011), 169–176.

24. P. Ekman and W. Friesen. 1978. Facial Action Coding System: A Technique for the Measurement of Facial Movement. Consulting Psychologists Press.

25. C. Ferrari, G. Lisanti, S. Berretti, and A. Del Bimbo. 2015. Dictionary Learning based 3D Morphable Model Construction for Face Recognition with Varying Expression and Pose. In International Conference on 3D Vision. 509–517.

26. FLAME. 2017. http://flame.is.tue.mpg.de. (2017).

27. P. Garrido, L. Valgaerts, C. Wu, and C. Theobalt. 2013. Reconstructing detailed dynamic face geometry from monocular video. Transactions on Graphics (Proceedings of SIGGRAPH Asia) 32, 6 (2013), 158:1–158:10.

28. P. Garrido, M. Zollhöfer, D. Casas, L. Valgaerts, K. Varanasi, P. Perez, and C. Theobalt. 2016. Reconstruction of Personalized 3D Face Rigs from Monocular Video. Transactions on Graphics (Presented at SIGGRAPH 2016) 35, 3 (2016), 28:1–28:15.

29. S. Geman and D. E. McClure. 1987. Statistical methods for tomographic image reconstruction. Proceedings of the 46th Session of the International Statistical Institute, Bulletin of the ISI 52 (1987).

30. N. Hasler, C. Stoll, M. Sunkel, B. Rosenhahn, and H.-P. Seidel. 2009. A Statistical Model of Human Pose and Body Shape. Computer Graphics Forum (2009).

31. D. A. Hirshberg, M. Loper, E. Rachlin, and M. J. Black. 2012. Coregistration: Simultaneous alignment and modeling of articulated 3D shape. In European Conference on Computer Vision. 242–255.

32. A. E. Ichim, S. Bouaziz, and M. Pauly. 2015. Dynamic 3D Avatar Creation from Handheld Video Input. Transactions on Graphics (Proceedings of SIGGRAPH) 34, 4 (2015), 45:1–45:14.

33. I. Kemelmacher-Shlizerman and S. M. Seitz. 2011. Face Reconstruction in the Wild. In International Conference on Computer Vision. 1746–1753.

34. L. Kobbelt, S. Campagna, J. Vorsatz, and H.-P. Seidel. 1998. Interactive Multi-resolution Modeling on Arbitrary Meshes. In SIGGRAPH. 105–114.

35. Y. Kozlov, D. Bradley, M. Bächer, B. Thomaszewski, T. Beeler, and M. Gross. 2017. Enriching Facial Blendshape Rigs with Physical Simulation. Computer Graphics Forum (2017).

36. H. Li, T. Weise, and M. Pauly. 2010. Example-based facial rigging. Transactions on Graphics (Proceedings of SIGGRAPH) 29, 4 (2010), 32:1–32:6.

37. H. Li, J. Yu, Y. Ye, and C. Bregler. 2013. Realtime facial animation with on-the-fly correctives. Transactions on Graphics (Proceedings of SIGGRAPH) 32, 4 (2013), 42:1–42:10.

38. J. Li, W. Xu, Z. Cheng, K. Xu, and R. Klein. 2015. Lightweight wrinkle synthesis for 3D facial modeling and animation. Computer-Aided Design 58 (2015), 117–122.

39. M. Loper, N. Mahmood, J. Romero, G. Pons-Moll, and M. J. Black. 2015. SMPL: A Skinned Multi-person Linear Model. Transactions on Graphics (Proceedings of SIGGRAPH Asia) 34, 6 (2015), 248:1–248:16.

40. M. M. Loper and M. J. Black. 2014. OpenDR: An Approximate Differentiable Renderer. In European Conference on Computer Vision. 154–169.

41. T. Neumann, K. Varanasi, S. Wenger, M. Wacker, M. Magnor, and C. Theobalt. 2013. Sparse Localized Deformation Components. Transactions on Graphics (Proceedings of SIGGRAPH Asia) 32, 6 (2013), 179:1–179:10.

42. J. Nocedal and S. J. Wright. 2006. Numerical Optimization. Springer.

43. P. Paysan, R. Knothe, B. Amberg, S. Romdhani, and T. Vetter. 2009. A 3D Face Model for Pose and Illumination Invariant Face Recognition. In International Conference on Advanced Video and Signal Based Surveillance. 296–301.

44. F. Pedregosa, G. Varoquaux, A. Gramfort, V. Michel, B. Thirion, O. Grisel, M. Blondel, P. Prettenhofer, R. Weiss, V. Dubourg, J. Vanderplas, A. Passos, D. Cournapeau, M. Brucher, M. Perrot, and E. Duchesnay. 2011. Scikit-learn: Machine Learning in Python. Journal of Machine Learning Research 12 (2011), 2825–2830.

45. L. Pishchulin, S. Wuhrer, T. Helten, C. Theobalt, and B. Schiele. 2017. Building Statistical Shape Spaces for 3D Human Modeling. Pattern Recognition 67, C (July 2017), 276–286.

46. K. Robinette, S. Blackwell, H. Daanen, M. Boehmer, S. Fleming, T. Brill, D. Hoeferlin, and D. Burnsides. 2002. Civilian American and European Surface Anthropometry Resource (CAESAR) Final Report. Technical Report AFRL-HE-WP-TR-2002-0169. US Air Force Research Laboratory.

47. A. Salazar, S. Wuhrer, C. Shu, and F. Prieto. 2014. Fully automatic expression-invariant face correspondence. Machine Vision and Applications 25, 4 (2014), 859–879.

48. F. Shi, H.-T. Wu, X. Tong, and J. Chai. 2014. Automatic Acquisition of High-fidelity Facial Performances Using Monocular Videos. Transactions on Graphics (Proceedings of SIGGRAPH Asia) 33, 6 (2014), 222:1–222:13.

49. R. W. Sumner and J. Popović. 2004. Deformation transfer for triangle meshes. Transactions on Graphics (Proceedings of SIGGRAPH) 23, 3 (2004), 399–405.

50. S. Suwajanakorn, I. Kemelmacher-Shlizerman, and S. M. Seitz. 2014. Total Moving Face Reconstruction. In European Conference on Computer Vision. 796–812.

51. S. Suwajanakorn, S. M. Seitz, and I. Kemelmacher-Shlizerman. 2015. What Makes Tom Hanks Look Like Tom Hanks. In International Conference on Computer Vision. 3952–3960.

52. J. Thies, M. Zollhöfer, M. Nießner, L. Valgaerts, M. Stamminger, and C. Theobalt. 2015. Real-time Expression Transfer for Facial Reenactment. Transactions on Graphics (Proceedings of SIGGRAPH Asia) 34, 6 (2015), 183:1–183:14.

53. D. Vlasic, M. Brand, H. Pfister, and J. Popović. 2005. Face transfer with multilinear models. Transactions on Graphics (Proceedings of SIGGRAPH) 24, 3 (2005), 426–433.

54. T. Weise, S. Bouaziz, H. Li, and M. Pauly. 2011. Realtime performance-based facial animation. Transactions on Graphics (Proceedings of SIGGRAPH) 30, 4 (2011), 77:1–77:10.

55. E. Wood, T. Baltrušaitis, L.-P. Morency, P. Robinson, and A. Bulling. 2016. A 3D morphable eye region model for gaze estimation. In European Conference on Computer Vision. 297–313.

56. C. Wu, D. Bradley, M. Gross, and T. Beeler. 2016. An Anatomically-constrained Local Deformation Model for Monocular Face Capture. Transactions on Graphics (Proceedings of SIGGRAPH) 35, 4 (2016), 115:1–115:12.

57. X. Xiong and F. De la Torre. 2013. Supervised descent method and its applications to face alignment. In Conference on Computer Vision and Pattern Recognition. 532–539.

58. F. Xu, J. Chai, Y. Liu, and X. Tong. 2014. Controllable High-fidelity Facial Performance Transfer. Transactions on Graphics (Proceedings of SIGGRAPH) 33, 4 (2014), 42:1–42:11.

59. F. Yang, J. Wang, E. Shechtman, L. Bourdev, and D. Metaxas. 2011. Expression flow for 3D-aware face component transfer. Transactions on Graphics (Proceedings of SIGGRAPH) 30, 4 (2011), 60:1–60:10.

60. L. Yin, X. Wei, Y. Sun, J. Wang, and M. J. Rosato. 2006. A 3D Facial Expression Database for Facial Behavior Research. In International Conference on Automatic Face and Gesture Recognition. 211–216.