“HeroMirror Interactive: A Gesture Controlled Augmented Reality Gaming Experience” by Matuszka, Czuczor and Sóstai

Conference:

Type(s):

Title:

- HeroMirror Interactive: A Gesture Controlled Augmented Reality Gaming Experience

Presenter(s)/Author(s):

- Matuszka, Czuczor and Sóstai

Entry Number:

- 28

Abstract:

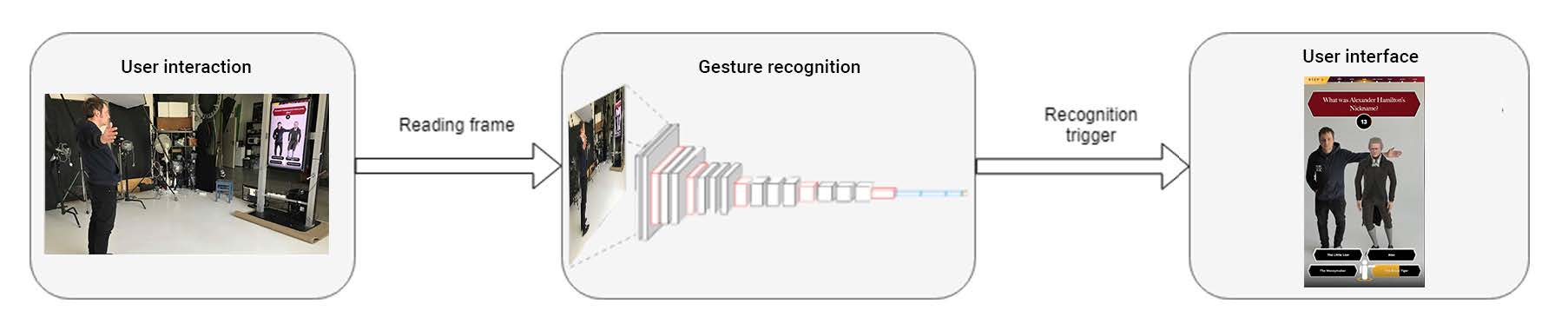

Appropriately chosen user interfaces are essential parts of immersive augmented reality experiences. Conventional user interfaces cannot be used efficiently in interactive, real-time augmented reality applications. In this study, a gesture-controlled educational gaming experience is described in which gesture recognition relies on deep learning methods. Our implementation replaces a depth-camera-based gesture recognition system with one that uses a conventional camera while maintaining the same level of recognition accuracy.

References:

- Ronald T. Azuma. 1997. A survey of augmented reality. Presence: Teleoperators & Virtual Environments 6, 4 (1997), 355–385.

- Chen Chen, Roozbeh Jafari, and Nasser Kehtarnavaz. 2015. Improving human action recognition using fusion of depth camera and inertial sensors. IEEE Transactions on Human-Machine Systems 45, 1 (2015), 51–61.

- Alana Elza Fontes Da Gama, Thiago Menezes Chaves, Lucas Silva Figueiredo, Adriana Baltar, Ma Meng, Nassir Navab, Veronica Teichrieb, and Pascal Fallavollita. 2016. MirrARbilitation: A clinically-related gesture recognition interactive tool for an AR rehabilitation system. Computer Methods and Programs in Biomedicine 135 (2016), 105–114.

- Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. 2016. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 770–778.

- Tsung-Yi Lin, Priya Goyal, Ross Girshick, Kaiming He, and Piotr Dollár. 2017. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision. 2980–2988.

- Chong Wang, Zhong Liu, and Shing-Chow Chan. 2015. Superpixel-based hand gesture recognition with Kinect depth camera. IEEE Transactions on Multimedia 17, 1 (2015), 29–39.

Keyword(s):

Acknowledgements:

The authors would like to thank Márton Helényi for animation development, Norbert Kovács for user interaction recommendations, and Dániel Siket for reference image creation. The authors would also like to thank the anonymous referees for their valuable comments and helpful suggestions.