“Graph Matching based Anime Colorization with Multiple References” by Maejima, Kubo, Funatomi, Yotsukura, Nakamura, et al. …

Conference:

Type(s):

Title:

- Graph Matching based Anime Colorization with Multiple References

Presenter(s)/Author(s):

Entry Number:

- 13

Abstract:

ABSTRACT

We propose a graph matching-based anime-colorization method from line drawings using multiple reference images. A graph structure of each frame in an input line drawing sequence helps to find correspondences of regions to be colorized between frames. However, it is difficult to find precise correspondences of whole frames only from a single reference image, because the graph structure tends to change drastically during the sequence. Therefore, our method first finds an optimal image from multiple reference images according to a cost function that represents shape similarity between nodes and compatibility of node pairs. While it is necessary to prepare several manually colored reference images, our method is still effective in reducing the effort required for colorization in anime production. We demonstrate the effectiveness of our method using actual images from our production.

INTRODUCTION

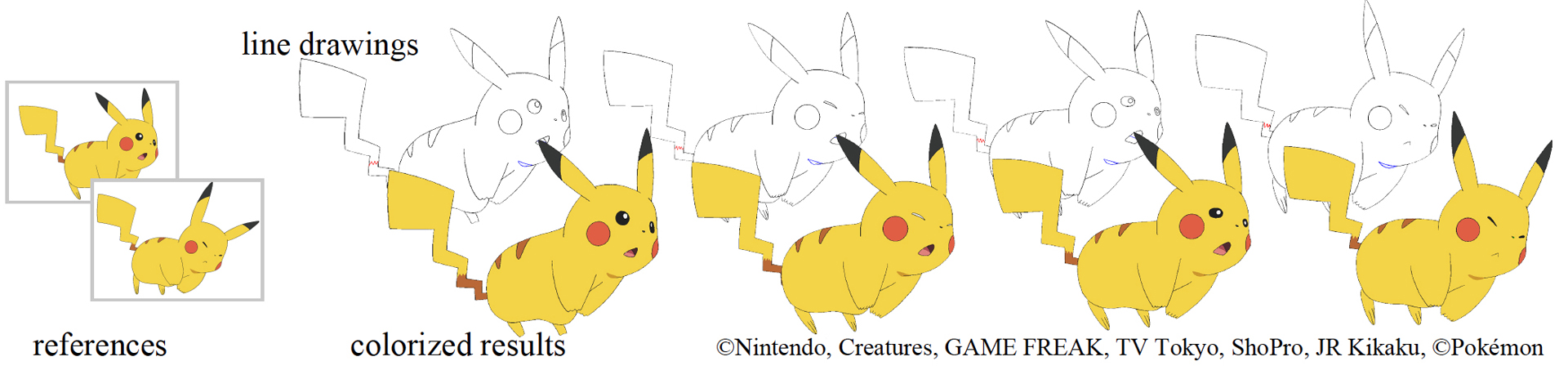

Traditional manual colorization process in 2D anime production is a tedious work even under the support of software such as [CELSYS 2008]. Thus, automatic colorization for line drawings is required to streamline a process of anime production. Previous work for automatic colorization can be categorized into two approaches; deep learning based [Furusawa et al. 2017; Ramassamy et al. 2018] and reference graph matching approach [Sato et al. 2014]. For the former, collecting a large dataset extracting from previously released manually created animation is a reasonable option, however, it is not very practical to collect larger dataset including sub characters which rarely appear in the animation. For the latter approach, no large amount of data collection is required, however, colorization often fails in case the graph structure changes drastically according to the character animation. Therefore, it is difficult to find precise correspondences of whole frames only from a single reference image. Thus, we propose an algorithm which allows multiple reference images. In this method, regions to be colorized are represented as nodes in a graph structure. To find an optimal image to be the reference for each line drawing sketches of an input sequence from multiple reference images, we utilize the cost function which represents similarity between nodes and edge pairs of two graphs from reference and target images. In Fig. 1, a part of the automatic colorized images by our method from multiple reference images are displayed. In this sequence, the graph structures of the odd number frames and even number frames are lightly difference. Therefore, more than two reference images are necessary to find the better correspondence. Since our method allows to be used multiple images as reference images, this figure demonstrates the efficiency of our method for a practical anime-production pipeline.

COLORIZATION PROCEDURE

Assuming that a reference graph collection {Gr/m } has already constructed beforehand. Each graph in {Gr/m } is composed by nodes corresponding regions acquired from reference images of a target character via segmentation algorithm [Zhang et al. 2009] and edges connecting between nodes. Given a target image sequence, a target graph collection {Gt/n } for this sequence are constructed by the same manner for the reference graph construction. Then, the graph matching problem for all pair of reference and target graphs in both collections is solved to choose an optimal reference graph for each target image. Finally, colorization is performed by filling regions with colors assigned to nodes on the optimal reference graph frame by frame.

OPTIMAL REFERENCE SELECTION

To find an optimal reference graph, we need to define a cost function so that it exhibit the performance of colorization, and optimize it. Let us consider an affinity matrix K between a reference graph Gr m and a target graph Gt n in the same fashion as [Zhou and De la Torre 2016]. We design a node affinity matrix Kp whose element k p i,j represents shape similarity between regions corresponding i-th node on Gr m and j-th node on Gt n using Shape Context Ci,j [Belongie et al. 2001]. k p/i,j = exp(− C 2 i,j σ 2 1 ) (1) Where, σ1 represents a standard deviation of Ci,j . We also define the edge affinity matrix Kq whose element k q k,l represents similarity of edge pair from Gr m and Gt n as follows.

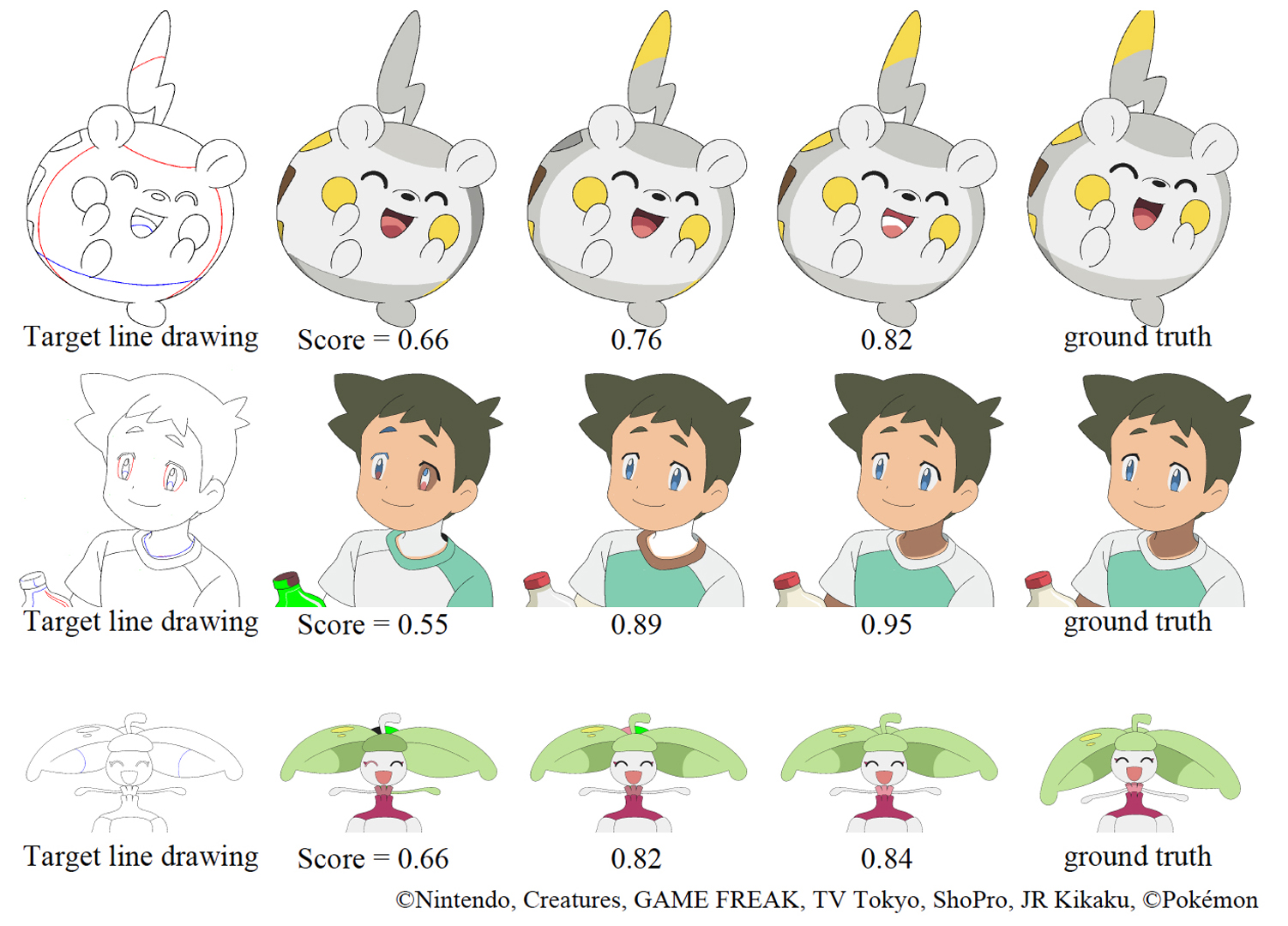

k q/k,l = exp(− d 2 k,l 2σ 2 2 − a 2 k,l 2σ 2 3 ) (2) Where dk,l = (dk −dl )/max(dk ,dl ). dk means length of the edge k on the Gr m and dl is that of the edge l on the Gt n . Also, we introduce magnitude relationship of areas between nodes connecting edge k and edge l (Each edge stars from node s to terminating node e.); ak,l = (a s/k*a e/l − a s/l *a e/k )/max(a s/k* a e/l , a s/l* a e/k ) as same manner [Sato et al. 2014]. σ2 and σ3 represents a standard deviation of dk,l and ak,l respectively. We solve this graph matching problem by Spectral Matching [Leordeanu and Hebert 2005] with affinity matrix K. A score can be obtained from the quadratic form x T Kx with the matching result x. Note that, we set σ1 = σ2 = 0.5 and σ3 = 1 for all experiments. According to score for each reference, the optimal reference can be selected for each frame of the target sequence.

The relationship between the scores and the colorized results is shown in Fig. 2. The figure clearly shows that the method colorizes more precisely, the scores gets closer to 1.0. Therefore, the score should presents the confidence of the colorized results.

RESULT AND DISCUSSION

As shown in Fig. 1, our method works for line drawings from an actual production pipeline. In case of rigid or nearly rigid transformation, only a single reference image is to be used. However, under the large deformation or missing/appearing parts, the performance obviously degrades because the graph structure is drastically changed compared with a reference. Thanks to the multiple reference images, our method can find an optimal reference image even in such situation. In the future, by introducing a latest graph matching technique like [Zhou and De la Torre 2016], colorization performance would be improved.

References:

- Serge Belongie, Jitendra Malik, and Jan Puzicha. 2001. Shape Context: A New Descriptor for Shape Matching and Object Recognition. In Advances in Neural Information Processing Systems 13, T. K. Leen, T. G. Dietterich, and V. Tresp (Eds.). 831–837.

- Inc. CELSYS. 2008. PaintMan. http://www.retasstudio.net/products/paintman/. (2008). Chie Furusawa, Kazuyuki Hiroshiba, Keisuke Ogaki, and Yuri Odagiri. 2017. Comicolorization: Semi-automatic Manga Colorization. In SIGGRAPH Asia 2017 Technical Briefs (SA ’17). 12:1–12:4.

- Marius Leordeanu and Martial Hebert. 2005. A Spectral Technique for Correspondence Problems Using Pairwise Constraints. In Proceedings of the Tenth IEEE International Conference on Computer Vision – Volume 2 (ICCV ’05). 1482–1489.

- Sophie Ramassamy, Hiroyuki Kubo, Takuya Funatomi, Daichi Ishii, Akinobu Maejima, Satoshi Nakamura, and Yasuhiro Mukaigawa. 2018. Pre- and Post-processes for Automatic Colorization Using a Fully Convolutional Network. In SIGGRAPH Asia 2018 Posters (SA ’18). 70:1–70:2.

- Kazuhiro Sato, Yusuke Matsui, Toshihiko Yamasaki, and Kiyoharu Aizawa. 2014. Reference-based Manga Colorization by Graph Correspondence Using Quadratic Programming. In SIGGRAPH Asia 2014 Technical Briefs (SA ’14). 15:1–15:4.

- Song-Hai Zhang, Tao Chen, Yi-Fei Zhang, Shi-Min Hu, and Ralph R. Martin. 2009. Vectorizing Cartoon Animations. IEEE Transactions on Visualization and Computer Graphics 15, 4 (2009), 618–629.

- Feng Zhou and Fernando De la Torre. 2016. Factorized Graph Matching. IEEE Transactions on Pattern Analysis and Machine Intelligence 38, 9 (2016), 1774–1789.