“EyeNeRF: a hybrid representation for photorealistic synthesis, animation and relighting of human eyes” by Li, Meka, Mueller, Buehler, Hilliges, et al. …

Conference:

Type(s):

Title:

- EyeNeRF: a hybrid representation for photorealistic synthesis, animation and relighting of human eyes

Presenter(s)/Author(s):

Abstract:

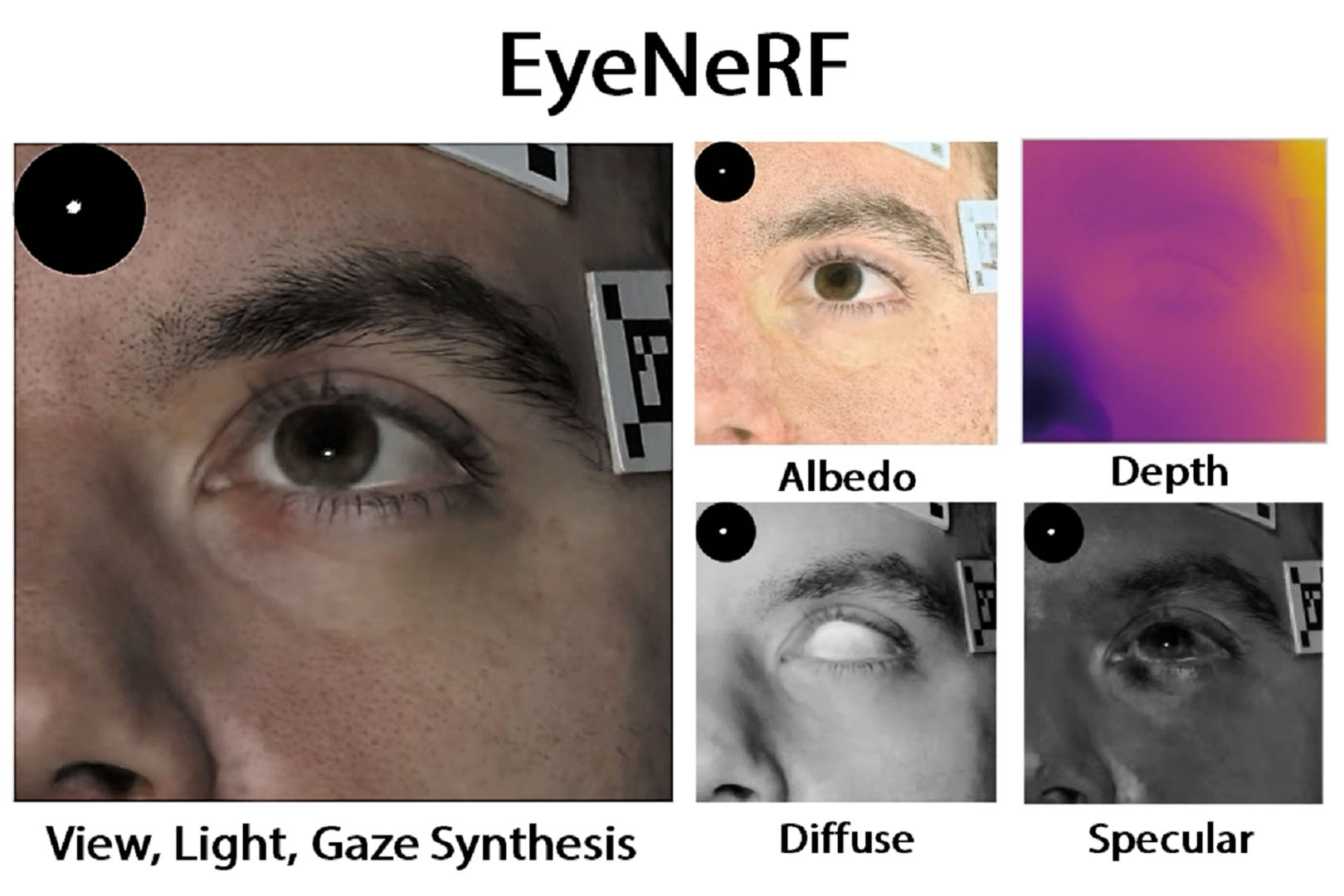

A unique challenge in creating high-quality animatable and relightable 3D avatars of real people is modeling human eyes, particularly in conjunction with the surrounding periocular face region. The challenge of synthesizing eyes is multifold as it requires 1) appropriate representations for the various components of the eye and the periocular region for coherent viewpoint synthesis, capable of representing diffuse, refractive and highly reflective surfaces, 2) disentangling skin and eye appearance from environmental illumination such that it may be rendered under novel lighting conditions, and 3) capturing eyeball motion and the deformation of the surrounding skin to enable re-gazing. These challenges have traditionally necessitated the use of expensive and cumbersome capture setups to obtain high-quality results, and even then, modeling of the full eye region holistically has remained elusive. We present a novel geometry and appearance representation that enables high-fidelity capture and photorealistic animation, view synthesis and relighting of the eye region using only a sparse set of lights and cameras. Our hybrid representation combines an explicit parametric surface model for the eyeball surface with implicit deformable volumetric representations for the periocular region and the interior of the eye. This novel hybrid model has been designed specifically to address the various parts of that exceptionally challenging facial area: the explicit eyeball surface allows modeling refraction and high-frequency specular reflection at the cornea, whereas the implicit representation is well suited to model lower-frequency skin reflection via spherical harmonics and can represent non-surface structures such as hair (e.g. eyebrows) or highly diffuse volumetric bodies (e.g. sclera), both of which are a challenge for explicit surface models. Tightly integrating the two representations in a joint framework allows controlled photoreal image synthesis and joint optimization of both the geometry parameters of the eyeball and the implicit neural network in continuous 3D space. We show that for high-resolution close-ups of the human eye, our model can synthesize high-fidelity animated gaze from novel views under unseen illumination conditions, enabling the generation of visually rich eye imagery.
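
To illustrate why an explicit eyeball surface makes refraction at the cornea tractable, the following is a minimal sketch, not the authors' implementation. It assumes the cornea can be approximated by a sphere and bends a camera ray at that surface with Snell's law. The function names, the 7.8 mm radius, and the refractive index of 1.376 are illustrative assumptions that do not come from the abstract.

```python
import numpy as np

def intersect_sphere(origin, direction, center, radius):
    """Return the nearest positive ray parameter t for a ray/sphere hit, or None."""
    d = direction / np.linalg.norm(direction)
    oc = origin - center
    b = np.dot(oc, d)
    c = np.dot(oc, oc) - radius ** 2
    disc = b * b - c
    if disc < 0:
        return None  # the ray misses the sphere
    root = np.sqrt(disc)
    for t in (-b - root, -b + root):
        if t > 1e-9:
            return t
    return None

def refract(direction, normal, n1, n2):
    """Refract a direction at a surface using Snell's law.

    n1 is the refractive index on the incoming side, n2 on the outgoing side.
    Returns a unit vector, or None on total internal reflection.
    """
    d = direction / np.linalg.norm(direction)
    n = normal / np.linalg.norm(normal)
    cos_i = -np.dot(n, d)
    if cos_i < 0:  # normal points along the ray: flip it to face the ray
        n, cos_i = -n, -cos_i
    eta = n1 / n2
    sin2_t = eta ** 2 * (1.0 - cos_i ** 2)
    if sin2_t > 1.0:
        return None  # total internal reflection
    cos_t = np.sqrt(1.0 - sin2_t)
    return eta * d + (eta * cos_i - cos_t) * n

# Hypothetical setup (all values are assumptions for illustration, units in mm).
CORNEA_CENTER = np.array([0.0, 0.0, 0.0])
CORNEA_RADIUS = 7.8   # assumed sphere radius
N_AIR = 1.0
N_CORNEA = 1.376      # assumed refractive index

ray_origin = np.array([2.0, 0.0, -20.0])
ray_dir = np.array([0.0, 0.0, 1.0])

t_hit = intersect_sphere(ray_origin, ray_dir, CORNEA_CENTER, CORNEA_RADIUS)
hit_point = ray_origin + t_hit * ray_dir
surface_normal = (hit_point - CORNEA_CENTER) / CORNEA_RADIUS
refracted_dir = refract(ray_dir, surface_normal, N_AIR, N_CORNEA)
```

In this sketch the refracted ray bends toward the surface normal because it enters a denser medium. In a hybrid pipeline of the kind the abstract describes, such a bent ray would then be the one that samples the volumetric representation of the eye interior.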
