“EyelashNet: a dataset and a baseline method for eyelash matting” by Xiao, Zhang, Zhang, Wu, Wang, et al. …

Title:

- EyelashNet: a dataset and a baseline method for eyelash matting

Session/Category Title: Synthesizing Human Images

Abstract:

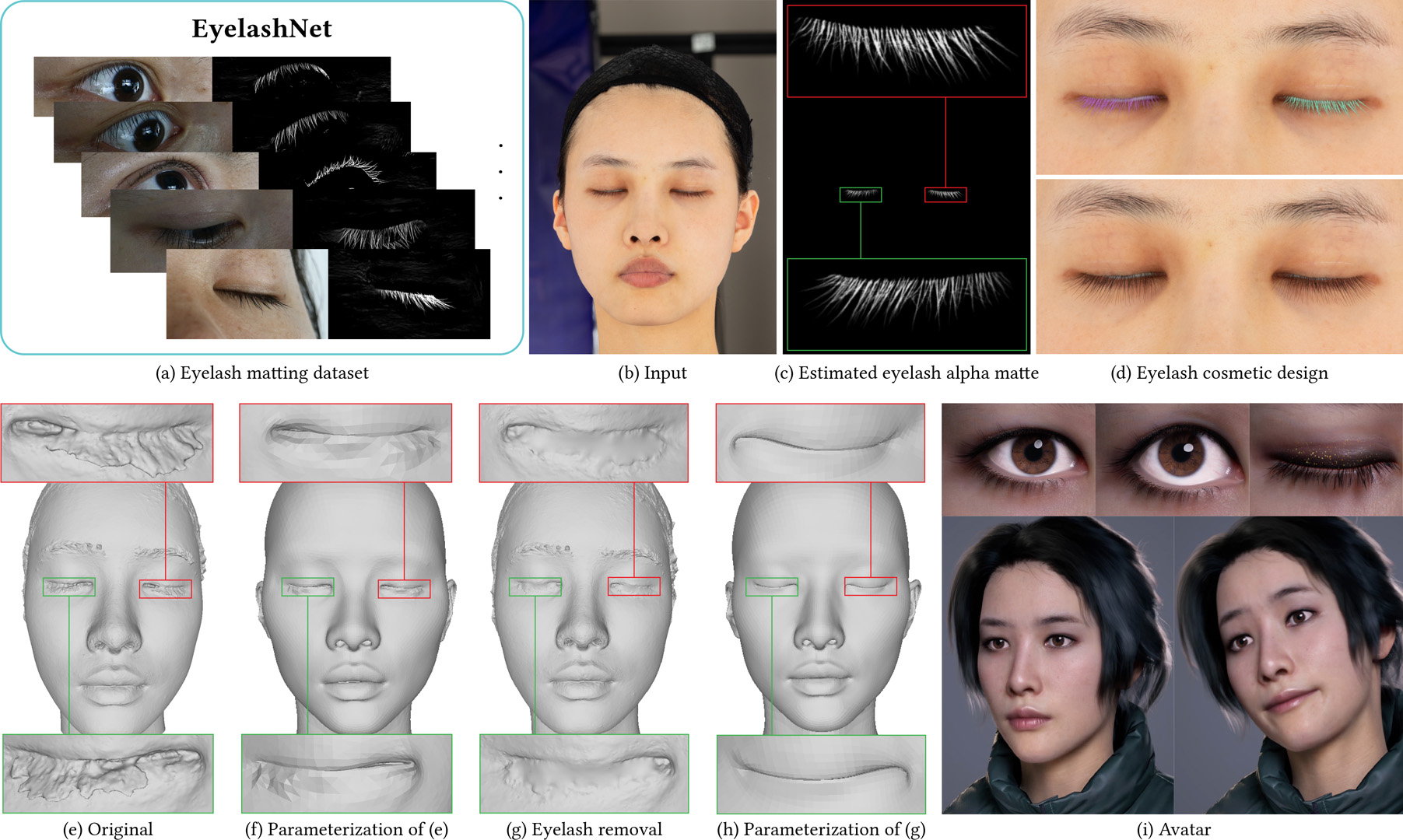

Eyelashes play a crucial role in human facial structure and strongly affect facial attractiveness in modern cosmetic design. However, the appearance and structure of eyelashes can easily induce severe artifacts in high-fidelity multi-view 3D face reconstruction. Unfortunately, removing eyelashes from portrait images is highly challenging for both traditional and learning-based matting methods, owing to the delicate nature of eyelashes and the lack of an eyelash matting dataset. To this end, we present EyelashNet, the first eyelash matting dataset, which contains 5,400 high-quality eyelash matting samples captured in the real world and 5,272 virtual eyelash matting samples created by rendering avatars. Our work consists of a capture stage and an inference stage that automatically capture and annotate eyelashes, replacing tedious manual effort. The capture is based on a specially designed fluorescent labeling system. By coloring the eyelashes with a safe and invisible fluorescent substance, our system takes paired photos with colored and normal eyelashes by turning the system's ultraviolet (UVA) flash on and off. We further correct the alignment between each pair of photos and use a novel alpha matte inference network to extract the eyelash alpha matte. As there is no prior eyelash dataset, we propose a progressive training strategy that gradually fuses captured eyelash data with virtual eyelash data to learn the latent semantics of real eyelashes. As a result, our method can accurately extract eyelash alpha mattes from fuzzy and self-shadowed regions such as pupils, which is almost impossible with manual annotation. To validate the advantage of EyelashNet, we present a deep-learning baseline method that achieves state-of-the-art eyelash matting performance with RGB portrait images as input. We also demonstrate that our work can greatly benefit important real-world applications, including high-fidelity personalized avatars and cosmetic design.
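To make the paired-capture idea concrete, the sketch below shows why a UVA-on/UVA-off photo pair carries an eyelash signal: after alignment, pixels coated with the fluorescent substance brighten when the flash fires, so the per-pixel brightening gives a crude alpha cue. This is only an illustrative stand-in under stated assumptions. The abstract says the authors extract the matte with a learned alpha matte inference network, not by thresholding a difference image. The function names `naive_difference_matte` and `composite`, the `[0, 1]` float image convention, and the `low`/`high` brightening band are all hypothetical choices made here. The `composite` helper is the standard matting equation, I = αF + (1 − α)B.

```python
import numpy as np


def naive_difference_matte(uv_on: np.ndarray, uv_off: np.ndarray,
                           low: float = 0.05, high: float = 0.30) -> np.ndarray:
    """Hypothetical crude eyelash alpha estimate from an aligned UV-on/UV-off pair.

    Both inputs are float arrays of shape (H, W, 3) with values in [0, 1],
    assumed to be already aligned. Pixels that brighten under the UV flash
    (where the fluorescent coating glows) receive a higher alpha.
    `low` and `high` are illustrative thresholds, not values from the paper.
    """
    if uv_on.shape != uv_off.shape:
        raise ValueError("image pair must have identical shapes")
    # Keep only brightening caused by fluorescence, averaged over color channels.
    diff = np.clip(uv_on - uv_off, 0.0, None).mean(axis=-1)
    # Linearly map the [low, high] brightening band onto alpha in [0, 1].
    alpha = (diff - low) / (high - low)
    return np.clip(alpha, 0.0, 1.0)


def composite(alpha: np.ndarray, fg: np.ndarray, bg: np.ndarray) -> np.ndarray:
    """Standard matting equation I = alpha * F + (1 - alpha) * B, per pixel."""
    a = alpha[..., None]  # broadcast the (H, W) matte over the color channels
    return a * fg + (1.0 - a) * bg


if __name__ == "__main__":
    off = np.zeros((2, 2, 3))
    on = off.copy()
    on[0, 0] = 0.30   # strong fluorescence: alpha near 1
    on[1, 1] = 0.175  # partial coverage: alpha near 0.5
    print(naive_difference_matte(on, off))
```

A fixed difference band like this breaks down exactly where the abstract says the problem is hardest (fuzzy and self-shadowed regions such as pupils, and any residual misalignment between the two shots), which is one plausible reason to learn the matte with a network instead.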
