“Extracting depth and matte using a color-filtered aperture”

Conference:

Type(s):

Title:

- Extracting depth and matte using a color-filtered aperture

Session/Category Title:

- Image-based capture

Presenter(s)/Author(s):

Abstract:

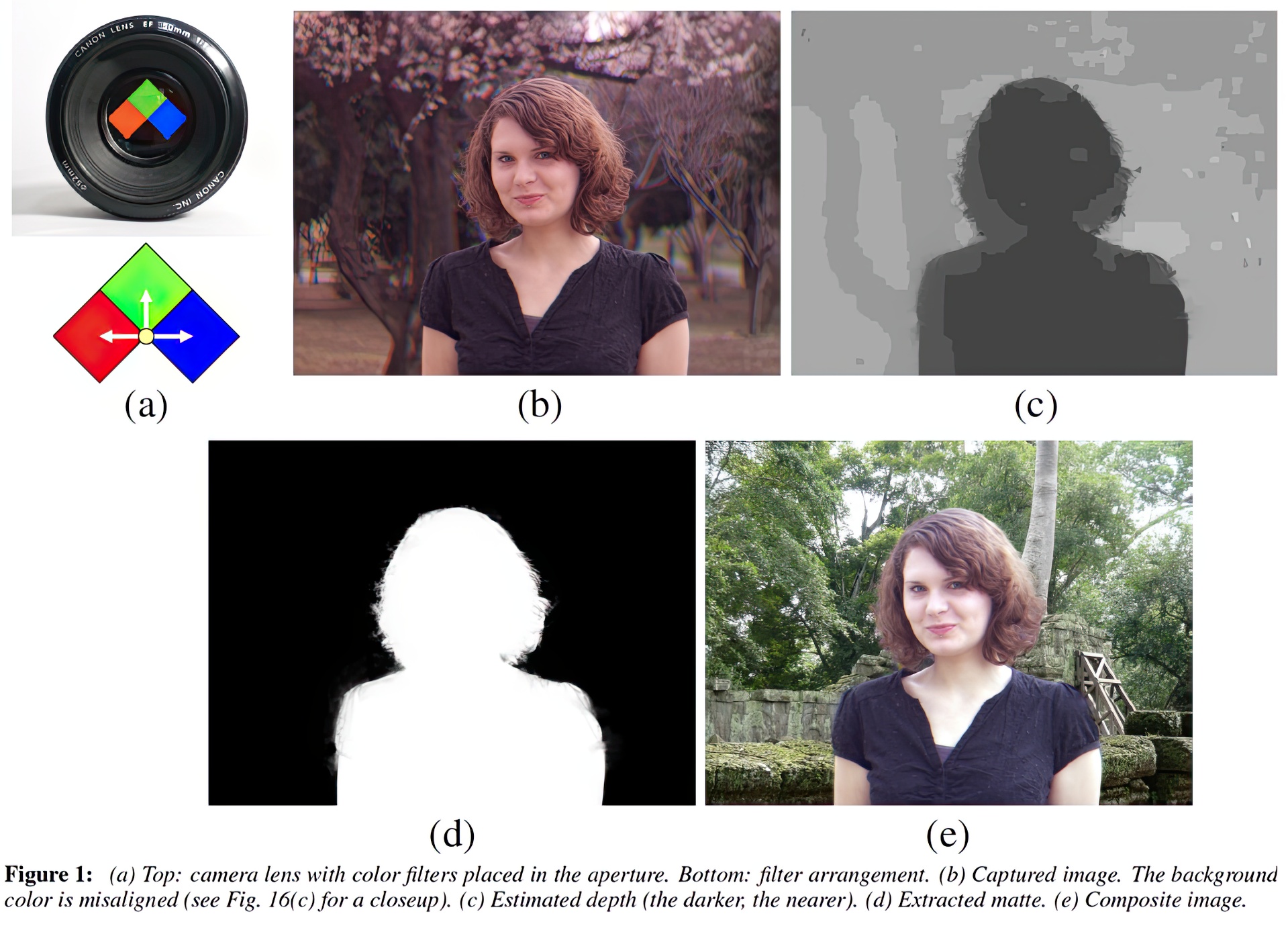

This paper presents a method for automatically extracting a scene depth map and the alpha matte of a foreground object by capturing a scene through RGB color filters placed in the camera lens aperture. By dividing the aperture into three regions through which only light in one of the RGB color bands can pass, we can acquire three shifted views of a scene in the RGB planes of an image in a single exposure. In other words, a captured image has depth-dependent color misalignment. We develop a color alignment measure to estimate disparities between the RGB planes for depth reconstruction. We also exploit color misalignment cues in our matting algorithm in order to disambiguate between the foreground and background regions even where their colors are similar. Based on the extracted depth and matte, the color misalignment in the captured image can be canceled, and various image editing operations can be applied to the reconstructed image, including novel view synthesis, postexposure refocusing, and composition over different backgrounds.
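The idea of estimating disparity from color misalignment can be illustrated with a small sketch. It is not the paper's algorithm: the abstract does not define the color alignment measure or the filter geometry, so the code below makes its own assumptions. The measure used here (the determinant of the RGB covariance matrix divided by the product of the per-channel variances) is one plausible choice that is near 0 when the colors in a window lie along a line in RGB space and near 1 when the planes are uncorrelated. The shift directions (red to the right, blue to the left, green fixed), the circular shifts, and all function names are hypothetical.

```python
import numpy as np

def alignment_measure(r, g, b):
    """Assumed measure: det(covariance) / product of channel variances.

    Close to 0 when the window's colors are collinear in RGB space
    (planes well aligned), close to 1 when the planes are uncorrelated.
    """
    samples = np.stack([r.ravel(), g.ravel(), b.ravel()])  # shape (3, N)
    cov = np.cov(samples)                                  # 3x3 covariance
    variances = np.diag(cov)
    return np.linalg.det(cov) / (np.prod(variances) + 1e-12)

def estimate_disparity(image, candidates):
    """Return the candidate shift that best re-aligns the R, G, B planes."""
    r, g, b = image[..., 0], image[..., 1], image[..., 2]
    scores = []
    for d in candidates:
        # Undo the assumed shifts: red moved by +d, blue by -d along x.
        r_back = np.roll(r, -d, axis=1)
        b_back = np.roll(b, d, axis=1)
        scores.append(alignment_measure(r_back, g, b_back))
    return candidates[int(np.argmin(scores))], scores

def make_test_image(true_d, size=64, seed=0):
    """Synthetic window: one random texture, colors on a line, planes shifted."""
    rng = np.random.default_rng(seed)
    texture = rng.random((size, size))
    return np.stack(
        [
            np.roll(0.9 * texture + 0.05, true_d, axis=1),   # red, shifted right
            0.6 * texture + 0.2,                             # green, unshifted
            np.roll(0.3 * texture + 0.4, -true_d, axis=1),   # blue, shifted left
        ],
        axis=-1,
    )

if __name__ == "__main__":
    image = make_test_image(true_d=3)
    best, _ = estimate_disparity(image, list(range(-5, 6)))
    print("estimated disparity:", best)
```

In this toy setting the score drops sharply at the shift that restores alignment, which is the cue a per-pixel depth estimate would build on. A real capture would need windowed evaluation, non-circular shifts, and the actual filter layout.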

References:

1. Adelson, E. H., and Wang, J. Y. A. 1992. Single lens stereo with a plenoptic camera. IEEE Trans. PAMI 14, 2, 99–106.

2. Amari, Y., and Adelson, E. H. 1992. Single-eye range estimation by using displaced apertures with color filters. In Proc. Int. Conf. Industrial Electronics, Control, Instrumentation, and Automation, vol. 3, 1588–1592.

3. Bando, Y., and Nishita, T. 2007. Towards digital refocusing from a single photograph. In Proc. Pacific Graphics, 363–372.

4. Boykov, Y., Veksler, O., and Zabih, R. 2001. Fast approximate energy minimization via graph cuts. IEEE Trans. PAMI 23, 11, 1222–1239.

5. Chang, I.-C., Huang, C.-L., Hsueh, W.-J., Lin, H.-C., Chen, C.-C., and Yeh, Y.-H. 2002. A novel 3-D hand-held camera based on tri-aperture lens. In Proc. SPIE 4925, 655–662.

6. Chuang, Y.-Y., Curless, B., Salesin, D. H., and Szeliski, R. 2001. A Bayesian approach to digital matting. In Proc. CVPR, vol. 2, 264–271.

7. Chuang, Y.-Y. 2004. New models and methods for matting and compositing. PhD thesis, University of Washington.

8. Georgiev, T., Zheng, K. C., Curless, B., Salesin, D., Nayar, S., and Intwala, C. 2006. Spatio-angular resolution tradeoff in integral photography. In Proc. EGSR, 263–272.

9. Gradshteyn, I. S., and Ryzhik, I. M. 2000. Table of integrals, series, and products (sixth edition). Academic Press.

10. Grady, L. 2006. Random walks for image segmentation. IEEE Trans. PAMI 28, 11, 1768–1783.

11. Green, P., Sun, W., Matusik, W., and Durand, F. 2007. Multi-aperture photography. ACM Trans. Gr. 26, 3, 68:1–68:7.

12. Joshi, N., Matusik, W., and Avidan, S. 2006. Natural video matting using camera arrays. ACM Trans. Gr. 25, 3, 779–786.

13. Kim, J., Kolmogorov, V., and Zabih, R. 2003. Visual correspondence using energy minimization and mutual information. In Proc. ICCV, vol. 2, 1033–1040.

14. Levin, A., Fergus, R., Durand, F., and Freeman, W. T. 2007. Image and depth from a conventional camera with a coded aperture. ACM Trans. Gr. 26, 3, 70:1–70:9.

15. Levin, A., Lischinski, D., and Weiss, Y. 2008. A closed-form solution to natural image matting. IEEE Trans. PAMI 30, 2, 228–242.

16. Lewis, J. P. 1995. Fast template matching. In Proc. Vision Interface, 120–123.

17. Liang, C.-K., Lin, T.-H., Wong, B.-Y., Liu, C., and Chen, H. H. 2008. Programmable aperture photography: multiplexed light field acquisition. ACM Trans. Gr. 27, 3, 55:1–55:10.

18. McGuire, M., Matusik, W., Pfister, H., Durand, F., and Hughes, J. 2005. Defocus video matting. ACM Trans. Gr. 24, 3, 567–576.

19. McGuire, M., Matusik, W., and Yerazunis, W. 2006. Practical, real-time studio matting using dual imagers. In Proc. EGSR, 235–244.

20. Ng, R., Levoy, M., Brédif, M., Duval, G., Horowitz, M., and Hanrahan, P. 2005. Light field photography with a hand-held plenoptic camera. Tech. Rep. CSTR 2005-02, Stanford Computer Science, Apr.

21. Ng, R. 2005. Fourier slice photography. ACM Trans. Gr. 24, 3, 735–744.

22. Omer, I., and Werman, M. 2004. Color lines: image specific color representation. In Proc. CVPR, vol. 2, 946–953.

23. Raskar, R., Tumblin, J., Mohan, A., Agrawal, A., and Li, Y. 2006. Computational photography. In Proc. Eurographics STAR.

24. Smith, A. R., and Blinn, J. F. 1996. Blue screen matting. In Proc. ACM SIGGRAPH 96, 259–268.

25. Veeraraghavan, A., Raskar, R., Agrawal, A., Mohan, A., and Tumblin, J. 2007. Dappled photography: mask enhanced cameras for heterodyned light fields and coded aperture refocusing. ACM Trans. Gr. 26, 3, 69:1–69:12.

26. Vlahos, P. 1971. Electronic composite photography. U.S. Patent 3,595,987.

27. Wang, J., and Cohen, M. F. 2007. Optimized color sampling for robust matting. In Proc. CVPR.

28. Wexler, Y., Fitzgibbon, A., and Zisserman, A. 2002. Bayesian estimation of layers from multiple images. In Proc. ECCV, 487–501.

29. Xiong, W., and Jia, J. 2007. Stereo matching on objects with fractional boundary. In Proc. CVPR.