“Efficient Human Motion Reconstruction from Monocular Videos with Physical Consistency Loss” by Cong, Ruppel, Wang, Pan, Hendrich, et al. …

Conference:

Type(s):

Title:

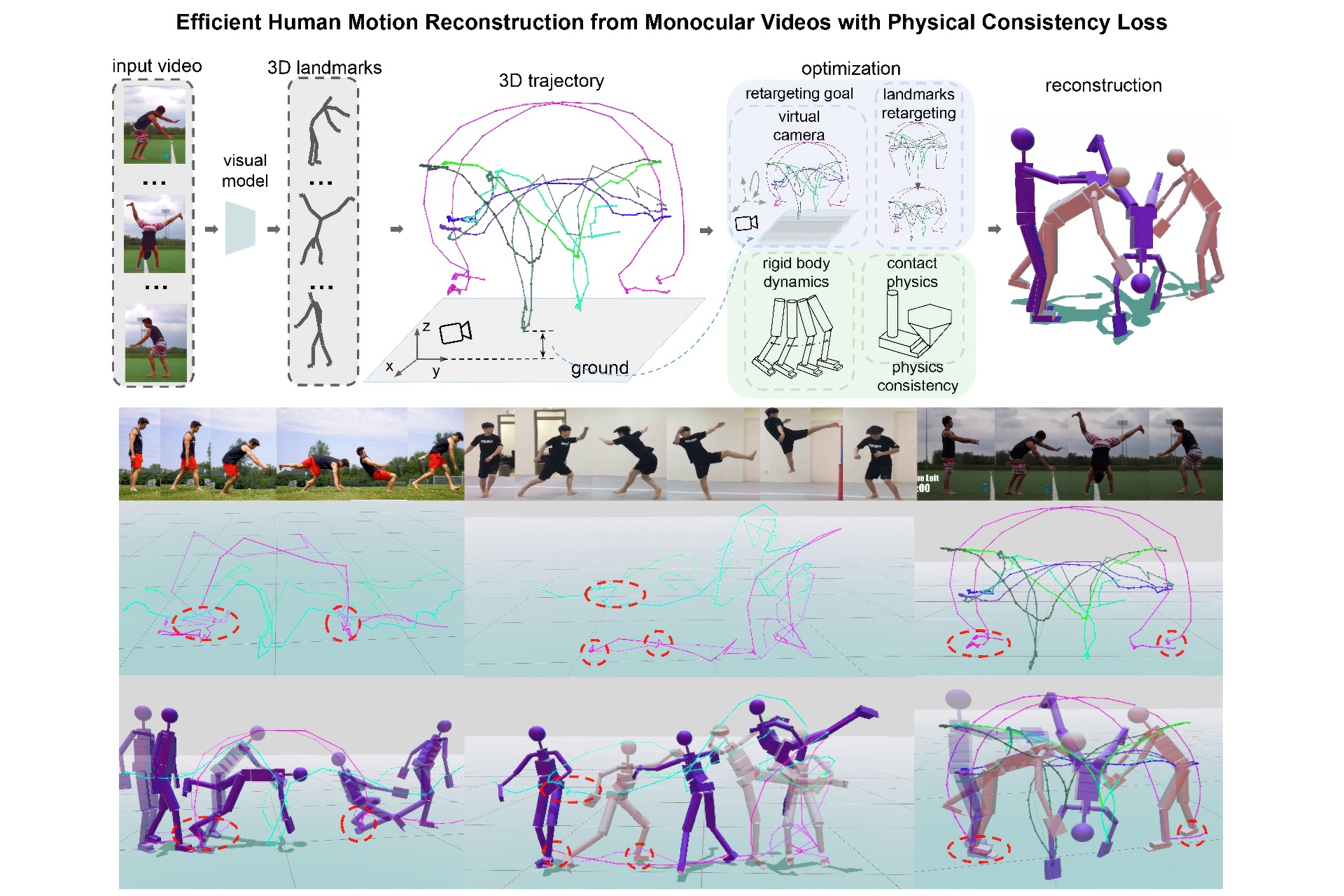

- Efficient Human Motion Reconstruction from Monocular Videos with Physical Consistency Loss

Session/Category Title: Motion Capture and Reconstruction

Abstract:

Vision-only motion reconstruction from monocular videos often produces artifacts such as foot sliding and jitter. Existing physics-based methods typically either simplify the problem by considering only foot-ground contacts, or reconstruct full-body contacts inside a physics simulator, which requires solving a time-consuming bilevel optimization problem. To overcome these limitations, we present an efficient gradient-based method for reconstructing complex human motions, including highly dynamic and acrobatic movements, under physical constraints. Our approach expresses human motion dynamics as a differentiable physical consistency loss defined over an augmented search space that accounts for both contacts and camera alignment. This turns motion reconstruction into a single-level trajectory optimization problem. Experiments show that our method reconstructs complex human motions from real-world videos in minutes, substantially faster than previous approaches, and that the reconstructed motions are more physically realistic than those of existing methods.
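The abstract describes the loss only at a high level. As a minimal sketch of what folding dynamics into a single-level objective can look like, the Python example below uses a hypothetical toy problem, not the paper's model: a 1D vertical point mass with jittery observed heights. Heights and a non-negative contact force are optimized jointly, and a finite-difference Newton residual stands in for the physical consistency loss. The point-mass model, the scaled force variable c_t = dt² · f_t / m, the weights `w_data`, `w_phys`, `w_force`, and the use of plain projected gradient descent are all assumptions made for illustration; camera alignment, full-body articulation, and contact complementarity are omitted.

```python
# Toy sketch (hypothetical, not the paper's formulation): a 1D vertical point
# mass whose observed heights y are jittery. Decision variables are the heights
# x and a scaled ground-reaction force c_t = dt**2 * f_t / m, constrained to c_t >= 0.

G = 9.81  # gravitational acceleration, m/s^2


def residuals(x, c, dt):
    """Physical consistency residuals, one per interior frame t.

    Finite-difference form of m*a = f - m*g, multiplied by dt**2/m:
    x[t+1] - 2*x[t] + x[t-1] - c[t-1] + dt**2 * g = 0.
    """
    return [x[t + 1] - 2 * x[t] + x[t - 1] - c[t - 1] + dt * dt * G
            for t in range(1, len(x) - 1)]


def loss(x, c, y, dt, w_data=1.0, w_phys=10.0, w_force=0.1):
    """Data term + physical consistency term + small force regularizer."""
    data = sum((xi - yi) ** 2 for xi, yi in zip(x, y))
    phys = sum(r * r for r in residuals(x, c, dt))
    force = sum(ci * ci for ci in c)
    return w_data * data + w_phys * phys + w_force * force


def gradients(x, c, y, dt, w_data=1.0, w_phys=10.0, w_force=0.1):
    """Analytic gradients of `loss` with respect to x and c."""
    gx = [2 * w_data * (xi - yi) for xi, yi in zip(x, y)]
    gc = [2 * w_force * ci for ci in c]
    for k, r in enumerate(residuals(x, c, dt)):
        # Residual k couples frames k, k+1, k+2 and force c[k].
        gx[k] += 2 * w_phys * r
        gx[k + 1] -= 4 * w_phys * r
        gx[k + 2] += 2 * w_phys * r
        gc[k] -= 2 * w_phys * r
    return gx, gc


def reconstruct(y, dt, steps=2000, w_data=1.0, w_phys=10.0, w_force=0.1):
    """Single-level projected gradient descent over heights and forces jointly."""
    x = list(y)               # initialize from the (jittery) vision estimate
    c = [0.0] * (len(y) - 2)  # start with no contact force
    # Step 1/L, where L = 2*max(w_data, w_force) + 2*w_phys*17 bounds the
    # gradient's Lipschitz constant (the residual map has squared norm <= 4**2 + 1).
    # For this convex quadratic, a 1/L step plus projection onto c >= 0
    # never increases the loss.
    lr = 1.0 / (2 * max(w_data, w_force) + 34 * w_phys)
    for _ in range(steps):
        gx, gc = gradients(x, c, y, dt, w_data, w_phys, w_force)
        x = [xi - lr * g for xi, g in zip(x, gx)]
        c = [max(0.0, ci - lr * g) for ci, g in zip(c, gc)]  # project onto c >= 0
    return x, c


# Usage: a person "standing still" at 1 m whose estimated height alternates by +/-1 cm.
dt = 0.1
y = [1.0 + 0.01 * (-1) ** t for t in range(20)]
x, c = reconstruct(y, dt)
print(f"loss before: {loss(y, [0.0] * (len(y) - 2), y, dt):.4f}")
print(f"loss after:  {loss(x, c, y, dt):.4f}")
print(f"mean support force / (m*g): {sum(c) / len(c) / (dt * dt * G):.3f}")
```

Because the dynamics enter only as a differentiable penalty, one gradient loop updates motion and contact forces together, with no simulator rollout nested inside the optimization. In this toy, the force regularizer `w_force` discourages the optimizer from explaining all of the jitter with contact forces instead of smoothing the trajectory.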