“EASI-Tex: Edge-Aware Mesh Texturing from Single Image”

Conference:

Type(s):

Title:

- EASI-Tex: Edge-Aware Mesh Texturing from Single Image

Presenter(s)/Author(s):

Abstract:

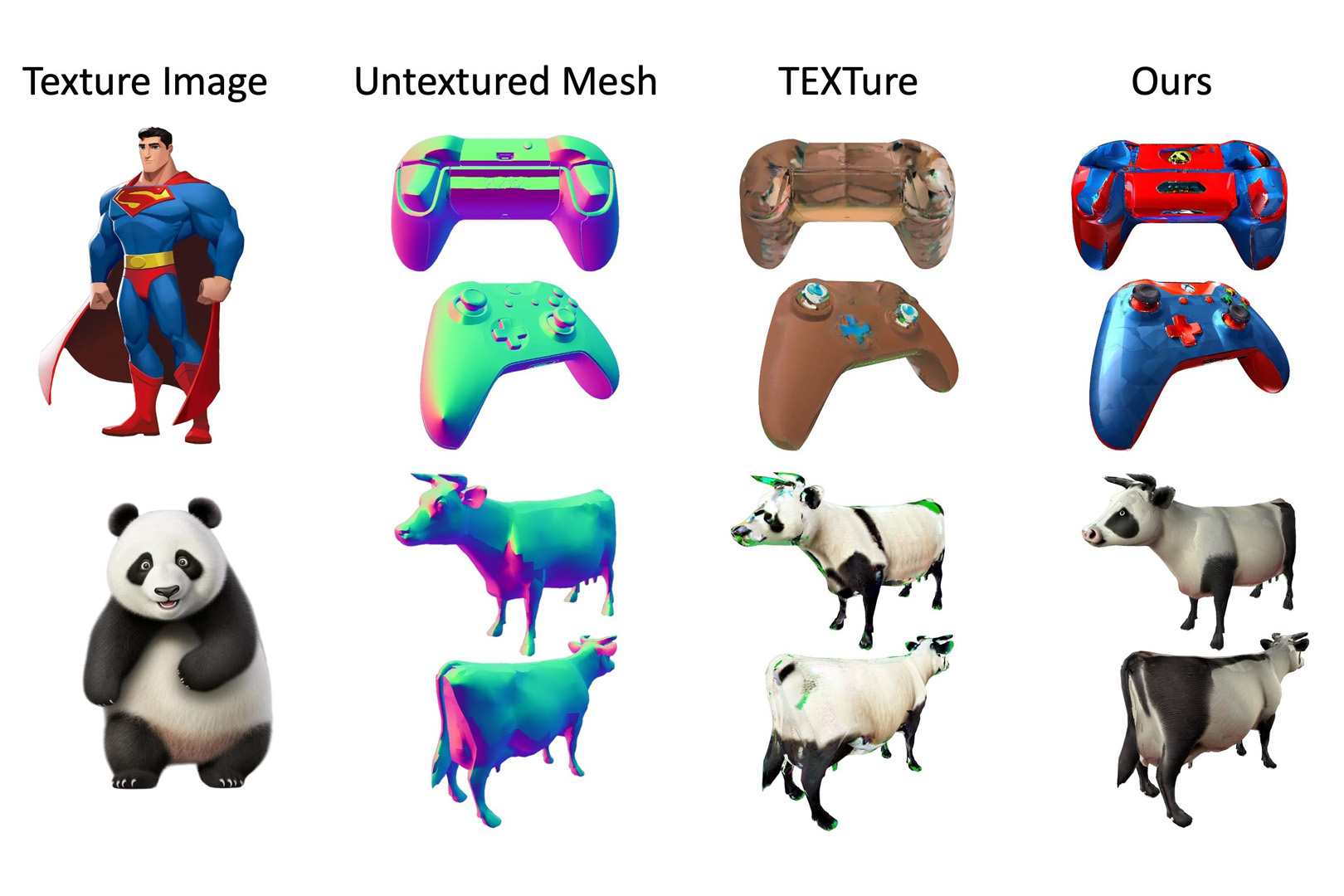

We introduce a novel approach for single-image mesh texturing, which employs a pre-trained image diffusion model with judicious conditioning to seamlessly transfer texture from a single image to a given 3D mesh object, without any optimization or training.

References:

[1]

Yuval Alaluf, Elad Richardson, Gal Metzer, and Daniel Cohen-Or. 2023. A Neural Space-Time Representation for Text-to-Image Personalization. ACM Trans. Graph. 42, 6, Article 243 (dec 2023), 10 pages.

[2]

Ayan Kumar Bhunia, Sai Raj Kishore Perla, Pranay Mukherjee, Abhirup Das, and Partha Pratim Roy. 2019. Texture synthesis guided deep hashing for texture image retrieval. In 2019 IEEE Winter Conference on Applications of Computer Vision (WACV). 609–618.

[3]

Alexey Bokhovkin, Shubham Tulsiani, and Angela Dai. 2023. Mesh2tex: Generating mesh textures from image queries. In Int. Conf. Comput. Vis. 8918–8928.

[4]

Bullza. 2022. Goku Image. Dragon Ball Wiki. https://dragonball.fandom.com/wiki/File:Goku_anime_profile.png

[5]

Tianshi Cao, Karsten Kreis, Sanja Fidler, Nicholas Sharp, and Kangxue Yin. 2023. Texfusion: Synthesizing 3d textures with text-guided image diffusion models. In Int. Conf. Comput. Vis. 4169–4181.

[6]

Cathy. 2019. Lightning McQueen. Every Day is Special. http://every-day-is-special.blogspot.com/2019/10/october-2-name-your-car-day.html

[7]

Dave Zhenyu Chen, Yawar Siddiqui, Hsin-Ying Lee, Sergey Tulyakov, and Matthias Nie?ner. 2023b. Text2tex: Text-driven texture synthesis via diffusion models. In Int. Conf. Comput. Vis. 18558–18568.

[8]

Rui Chen, Yongwei Chen, Ningxin Jiao, and Kui Jia. 2023a. Fantasia3D: Disentangling Geometry and Appearance for High-quality Text-to-3D Content Creation. In Int. Conf. Comput. Vis.

[9]

Xiaobai Chen, Thomas Funkhouser, Dan B Goldman, and Eli Shechtman. 2012. Non-parametric texture transfer using MeshMatch. Adobe Technical Report 2012-2 (Nov. 2012).

[10]

Zhiqin Chen, Kangxue Yin, and Sanja Fidler. 2022. AUV-Net: Learning Aligned UV Maps for Texture Transfer and Synthesis. In IEEE Conf. Comput. Vis. Pattern Recog.

[11]

CREATRBOI. 2022. Gaming Controller. Objaverse. https://sketchfab.com/models/c46f717692b6497a9d6edee010a7bb67/

[12]

Jeremy S De Bonet. 1997. Multiresolution sampling procedure for analysis and synthesis of texture images. In Proc. of ACM SIGGRAPH. 361–368.

[13]

Douglas DeCarlo, Adam Finkelstein, Szymon Rusinkiewicz, and Anthony Santella. 2003. Suggestive contours for conveying shape. ACM Trans. Graph. 22, 3 (2003), 848–855. Meshes: https://gfx.cs.princeton.edu/proj/sugcon/models/.

[14]

Matt Deitke, Ruoshi Liu, Matthew Wallingford, Huong Ngo, Oscar Michel, Aditya Kusupati, Alan Fan, Christian Laforte, Vikram Voleti, Samir Yitzhak Gadre, Eli VanderBilt, Aniruddha Kembhavi, Carl Vondrick, Georgia Gkioxari, Kiana Ehsani, Ludwig Schmidt, and Ali Farhadi. 2024. Objaverse-XL: A Universe of 10M+ 3D Objects. Adv. Neural Inform. Process. Syst. 36 (2024).

[15]

Matt Deitke, Dustin Schwenk, Jordi Salvador, Luca Weihs, Oscar Michel, Eli Vander-Bilt, Ludwig Schmidt, Kiana Ehsani, Aniruddha Kembhavi, and Ali Farhadi. 2023. Objaverse: A Universe of Annotated 3D Objects. In IEEE Conf. Comput. Vis. Pattern Recog. 13142–13153.

[16]

denovdiyenko. 2020. Single Sofa. Objaverse. https://sketchfab.com/models/d5be346315a747ba9d25f29f052cc85e/

[17]

Prafulla Dhariwal and Alexander Nichol. 2021. Diffusion models beat gans on image synthesis. Adv. Neural Inform. Process. Syst. 34 (2021), 8780–8794.

[18]

Yifan Du, Zikang Liu, Junyi Li, and Wayne Xin Zhao. 2022. A Survey of Vision-Language Pre-Trained Models. In IJCAI. 5436–5443. Survey Track.

[19]

Alexei A Efros and Thomas K Leung. 1999. Texture synthesis by non-parametric sampling. In Int. Conf. Comput. Vis., Vol. 2. 1033–1038.

[20]

Freepik. 2024. Fire. Freepik. https://www.freepik.com/icon/fire_488554

[21]

freepik. 2024. Watercolor Geometric. Freepik. https://www.freepik.es/vector-gratis/fondo-geometrico-acuarela_23849500.htm

[22]

Rinon Gal, Yuval Alaluf, Yuval Atzmon, Or Patashnik, Amit Haim Bermano, Gal Chechik, and Daniel Cohen-or. 2023. An Image is Worth One Word: Personalizing Text-to-Image Generation using Textual Inversion. In Int. Conf. Learn. Represent. https://github.com/rinongal/textual_inversion

[23]

Digital Galaxy. 2024. Panda. Freepik. https://www.freepik.com/premium-ai-image/impressive-3d-kung-panda-character-animation-pixar-style_65395176.htm

[24]

GemboGlag. 2023. Green Car. Glagglepedia Wiki. https://glagglepedia.fandom.com/wiki/File:Thingummy.jpeg

[25]

Ian Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville, and Yoshua Bengio. 2014. Generative adversarial nets. Adv. Neural Inform. Process. Syst. 27 (2014).

[26]

gufv9tdhjnv. 2021. Astronaut. Objaverse. https://sketchfab.com/models/dfde0bf25e2b44809c08105c30610694/

[27]

David J Heeger and James R Bergen. 1995. Pyramid-based texture analysis/synthesis. In Proc. of ACM SIGGRAPH. 229–238.

[28]

Amir Hertz, Rana Hanocka, Raja Giryes, and Daniel Cohen-Or. 2020. Deep Geometric Texture Synthesis. ACM Trans. Graph. 39, 4, Article 108 (2020).

[29]

Jonathan Ho, Ajay Jain, and Pieter Abbeel. 2020. Denoising diffusion probabilistic models. Adv. Neural Inform. Process. Syst. 33 (2020), 6840–6851.

[30]

Keen Software House. 2014. Astronaut. Objaverse. https://sketchfab.com/models/02a99eb6a6214c9cabbd321c2ee6ab86/

[31]

IyzMoe. 2022. Superman Image. Multiversus Wiki. https://multiversus.fandom.com/wiki/File:Superman_Portrait_Full.png

[32]

Bernhard Kerbl, Georgios Kopanas, Thomas Leimk?hler, and George Drettakis. 2023. 3D Gaussian Splatting for Real-Time Radiance Field Rendering. ACM Trans. Graph. (2023).

[33]

Diederik P. Kingma and Max Welling. 2014. Auto-Encoding Variational Bayes. In Int. Conf. Learn. Represent.

[34]

lilett. 2024. Floral Design. Freepik. https://www.freepik.com/premium-vector/hand-drawn-seamless-pattern-with-decorative-flowers-plants_5436979.htm

[35]

Chen-Hsuan Lin, Jun Gao, Luming Tang, Towaki Takikawa, Xiaohui Zeng, Xun Huang, Karsten Kreis, Sanja Fidler, Ming-Yu Liu, and Tsung-Yi Lin. 2023. Magic3d: Highresolution text-to-3d content creation. In IEEE Conf. Comput. Vis. Pattern Recog. 300–309.

[36]

liu1456447215. 2021. Klein Bottle. Objaverse. https://sketchfab.com/models/b50134f60e244e89bc85990650ffc800/

[37]

Jianye Lu, Athinodoros S Georghiades, Andreas Glaser, Hongzhi Wu, Li-Yi Wei, Baining Guo, Julie Dorsey, and Holly Rushmeier. 2007. Context-aware textures. ACM Trans. Graph. 26, 1 (2007), 3–es.

[38]

Tom Mertens, Jan Kautz, Jiawen Chen, Philippe Bekaert, and Fr?do Durand. 2006. Texture Transfer Using Geometry Correlation. Rendering Techniques 273, 10.2312 (2006), 273–284.

[39]

Gal Metzer, Elad Richardson, Or Patashnik, Raja Giryes, and Daniel Cohen-Or. 2023. Latent-nerf for shape-guided generation of 3d shapes and textures. In IEEE Conf. Comput. Vis. Pattern Recog. 12663–12673.

[40]

Ben Mildenhall, Pratul P. Srinivasan, Matthew Tancik, Jonathan T. Barron, Ravi Ramamoorthi, and Ren Ng. 2020. NeRF: Representing Scenes as Neural Radiance Fields for View Synthesis. In Eur. Conf. Comput. Vis.

[41]

Michael Oechsle, Lars Mescheder, Michael Niemeyer, Thilo Strauss, and Andreas Geiger. 2019. Texture fields: Learning texture representations in function space. In Int. Conf. Comput. Vis. 4531–4540.

[42]

Keunhong Park, Konstantinos Rematas, Ali Farhadi, and Steven M. Seitz. 2018. PhotoShape: Photorealistic Materials for Large-Scale Shape Collections. ACM Trans. Graph. 37, 6, Article 192 (Nov. 2018).

[43]

pikisuperstar. 2024. Leaves. Freepik. https://www.freepik.com/free-vector/tropical-green-leaves-background_7557476.htm

[44]

Ben Poole, Ajay Jain, Jonathan T. Barron, and Ben Mildenhall. 2022. DreamFusion: Text-to-3D using 2D diffusion. arXiv preprint arXiv:2209.14988 (2022).

[45]

Emil Praun, Adam Finkelstein, and Hugues Hoppe. 2000. Lapped textures. In Proceedings of the 27th Annual Conference on Computer Graphics and Interactive Techniques, SIGGRAPH. ACM, 465–470.

[46]

Alec Radford, Jong Wook Kim, Chris Hallacy, Aditya Ramesh, Gabriel Goh, Sandhini Agarwal, Girish Sastry, Amanda Askell, Pamela Mishkin, Jack Clark, et al. 2021. Learning transferable visual models from natural language supervision. In Intl. Conf. on Mach. Lear. PMLR, 8748–8763.

[47]

Elad Richardson, Gal Metzer, Yuval Alaluf, Raja Giryes, and Daniel Cohen-Or. 2023. TEXTure: Text-Guided Texturing of 3D Shapes. In Proc. of ACM SIGGRAPH. Article 54.

[48]

Robin Rombach, Andreas Blattmann, Dominik Lorenz, Patrick Esser, and Bj?rn Ommer. 2022. High-resolution image synthesis with latent diffusion models. In IEEE Conf. Comput. Vis. Pattern Recog. 10684–10695.

[49]

Olaf Ronneberger, Philipp Fischer, and Thomas Brox. 2015. U-net: Convolutional networks for biomedical image segmentation. In Medical Image Computing and Computer-Assisted Intervention (MICCAI). Springer, 234–241.

[50]

John Rubino. 2022. Nascar. Trading Paints. https://www.tradingpaints.com/showroom/view/512478/Mr-Clean-Autodry-Car-Wash-Camaro

[51]

Nataniel Ruiz, Yuanzhen Li, Varun Jampani, Yael Pritch, Michael Rubinstein, and Kfir Aberman. 2023. Dreambooth: Fine tuning text-to-image diffusion models for subject-driven generation. In IEEE Conf. Comput. Vis. Pattern Recog.

[52]

Tianchang Shen, Jun Gao, Kangxue Yin, Ming-Yu Liu, and Sanja Fidler. 2021. Deep marching tetrahedra: a hybrid representation for high-resolution 3d shape synthesis. Adv. Neural Inform. Process. Syst. 34 (2021), 6087–6101.

[53]

Yawar Siddiqui, Justus Thies, Fangchang Ma, Qi Shan, Matthias Nie?ner, and Angela Dai. 2022. Texturify: Generating Textures on 3D Shape Surfaces. In Eur. Conf. Comput. Vis. (Lecture Notes in Computer Science, Vol. 13663). Springer, 72–88.

[54]

Junshu Tang, Tengfei Wang, Bo Zhang, Ting Zhang, Ran Yi, Lizhuang Ma, and Dong Chen. 2023. Make-It-3D: High-fidelity 3D Creation from A Single Image with Diffusion Prior. In Int. Conf. Comput. Vis. 22819–22829.

[55]

Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N Gomez, ?ukasz Kaiser, and Illia Polosukhin. 2017. Attention is all you need. Adv. Neural Inform. Process. Syst. 30 (2017).

[56]

Andrey Voynov, Qinghao Chu, Daniel Cohen-Or, and Kfir Aberman. 2023. P+: Extended Textual Conditioning in Text-to-Image Generation. arXiv:2303.09522 [cs.CV]

[57]

Zhengyi Wang, Cheng Lu, Yikai Wang, Fan Bao, Chongxuan Li, Hang Su, and Jun Zhu. 2024. ProlificDreamer: High-Fidelity and Diverse Text-to-3D Generation with Variational Score Distillation. Adv. Neural Inform. Process. Syst. 36 (2024).

[58]

Linjie Yang, Ping Luo, Chen Change Loy, and Xiaoou Tang. 2015. A large-scale car dataset for fine-grained categorization and verification. In IEEE Conf. Comput. Vis. Pattern Recog. 3973–3981. http://mmlab.ie.cuhk.edu.hk/datasets/comp_cars/

[59]

Ling Yang, Zhilong Zhang, Yang Song, Shenda Hong, Runsheng Xu, Yue Zhao, Wentao Zhang, Bin Cui, and Ming-Hsuan Yang. 2023. Diffusion Models: A Comprehensive Survey of Methods and Applications. ACM Comput. Surv. 56, 4, Article 105 (nov 2023), 39 pages.

[60]

Hu Ye, Jun Zhang, Sibo Liu, Xiao Han, and Wei Yang. 2023. Ip-adapter: Text compatible image prompt adapter for text-to-image diffusion models. arXiv preprint arXiv:2308.06721 (2023).

[61]

Daniel Zhabotinsky. 2020. Compact Car. Objaverse. https://sketchfab.com/models/1fc5596cd7124e4ebb2e23b1d4439fc6/

[62]

Daniel Zhabotinsky. 2021. Cargo Truck. Objaverse. https://sketchfab.com/models/663a0953c038434a918cb85725c88ffa/

[63]

Jingyi Zhang, Jiaxing Huang, Sheng Jin, and Shijian Lu. 2024. Vision-Language Models for Vision Tasks: A Survey. IEEE Trans. Pattern Anal. Mach. Intell. (2024).

[64]

Lvmin Zhang, Anyi Rao, and Maneesh Agrawala. 2023. Adding conditional control to text-to-image diffusion models. In Int. Conf. Comput. Vis. 3836–3847.

[65]

Song Chun Zhu, Yingnian Wu, and David Mumford. 1998. Filters, random fields and maximum entropy (FRAME): Towards a unified theory for texture modeling. Int. J. Comput. Vis. 27 (1998), 107–126.