“Dynamic hair modeling from monocular videos using deep neural networks” by Yang, Shi, Zheng and Zhou

Conference:

Type(s):

Title:

- Dynamic hair modeling from monocular videos using deep neural networks

Session/Category Title:

- Hairy & Sketchy Geometry

Presenter(s)/Author(s):

Moderator(s):

Abstract:

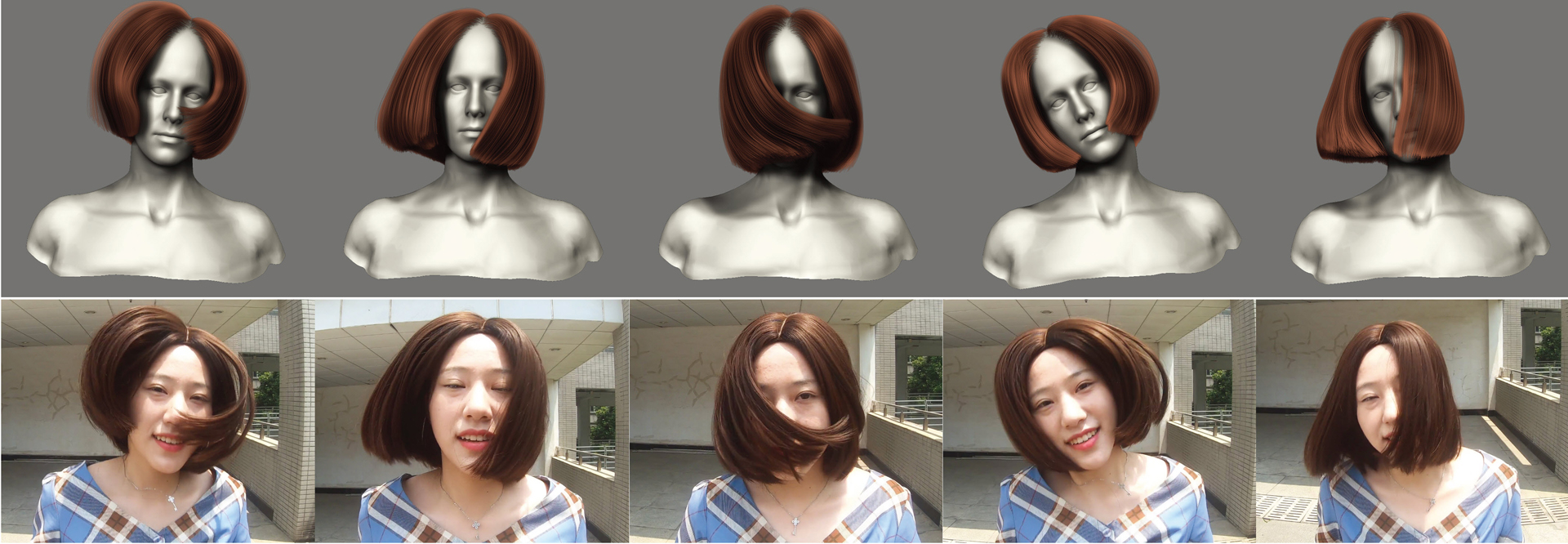

We introduce a deep learning based framework for modeling dynamic hairs from monocular videos, which could be captured by a commodity video camera or downloaded from Internet. The framework mainly consists of two neural networks, i.e., HairSpatNet for inferring 3D spatial features of hair geometry from 2D image features, and HairTempNet for extracting temporal features of hair motions from video frames. The spatial features are represented as 3D occupancy fields depicting the hair volume shapes and 3D orientation fields indicating the hair growing directions. The temporal features are represented as bidirectional 3D warping fields, describing the forward and backward motions of hair strands cross adjacent frames. Both HairSpatNet and HairTempNet are trained with synthetic hair data. The spatial and temporal features predicted by the networks are subsequently used for growing hair strands with both spatial and temporal consistency. Experiments demonstrate that our method is capable of constructing plausible dynamic hair models that closely resemble the input video, and compares favorably to previous single-view techniques.

References:

1. Xudong Cao, Yichen Wei, Fang Wen, and Jian Sun. 2014. Face alignment by explicit shape regression. International Journal of Computer Vision 107, 2 (2014), 177–190.Google ScholarDigital Library

2. Menglei Chai, Linjie Luo, Kalyan Sunkavalli, Nathan Carr, Sunil Hadap, and Kun Zhou. 2015. High-quality hair modeling from a single portrait photo. ACM Trans. Graph. 34, 6 (2015), 204:1–204:10.Google ScholarDigital Library

3. Menglei Chai, Tianjia Shao, Hongzhi Wu, Yanlin Weng, and Kun Zhou. 2016. AutoHair: Fully Automatic Hair Modeling from a Single Image. ACM Trans. Graph. 35, 4 (2016), 116:1–116:12.Google ScholarDigital Library

4. Menglei Chai, Lvdi Wang, Yanlin Weng, Xiaogang Jin, and Kun Zhou. 2013. Dynamic Hair Manipulation in Images and Videos. ACM Trans. Graph. 32, 4 (2013), 75:1–75:8.Google ScholarDigital Library

5. Menglei Chai, Lvdi Wang, Yanlin Weng, Yizhou Yu, Baining Guo, and Kun Zhou. 2012. Single-view Hair Modeling for Portrait Manipulation. ACM Trans. Graph. 31, 4 (2012), 116:1–116:8.Google ScholarDigital Library

6. Dongdong Chen, Jing Liao, Lu Yuan, Nenghai Yu, and Gang Hua. 2017. Coherent online video style transfer. In Proceedings of the IEEE International Conference on Computer Vision. 1105–1114.Google ScholarCross Ref

7. Jose I Echevarria, Derek Bradley, Diego Gutierrez, and Thabo Beeler. 2014. Capturing and stylizing hair for 3D fabrication. ACM Trans. Graph. 33, 4 (2014), 125:1–125:11.Google ScholarDigital Library

8. Ian Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville, and Yoshua Bengio. 2014. Generative adversarial nets. In Advances in neural information processing systems. 2672–2680.Google Scholar

9. Ishaan Gulrajani, Faruk Ahmed, Martin Arjovsky, Vincent Dumoulin, and Aaron C Courville. 2017. Improved training of wasserstein gans. In Advances in Neural Information Processing Systems. 5767–5777.Google Scholar

10. Tomas Lay Herrera, Arno Zinke, and Andreas Weber. 2012. Lighting hair from the inside: A thermal approach to hair reconstruction. ACM Trans. Graph. 31, 6 (2012), 146:1–146:9.Google ScholarDigital Library

11. Liwen Hu, Derek Bradley, Hao Li, and Thabo Beeler. 2017a. Simulation-Ready Hair Capture. Computer Graphics Forum 36, 2 (2017), 281–294.Google ScholarDigital Library

12. Liwen Hu, Chongyang Ma, Linjie Luo, and Hao Li. 2014a. Robust hair capture using simulated examples. ACM Trans. Graph. 33, 4 (2014), 126:1–126:10.Google ScholarDigital Library

13. Liwen Hu, Chongyang Ma, Linjie Luo, and Hao Li. 2015. Single-view hair modeling using a hairstyle database. ACM Trans. Graph. 34, 4 (2015), 125:1–125:9.Google ScholarDigital Library

14. Liwen Hu, Chongyang Ma, Linjie Luo, Li-Yi Wei, and Hao Li. 2014b. Capturing Braided Hairstyles. ACM Trans. Graph. 33, 6 (2014), 225:1–225:9.Google ScholarDigital Library

15. Liwen Hu, Shunsuke Saito, Lingyu Wei, Koki Nagano, Jaewoo Seo, Jens Fursund, Iman Sadeghi, Carrie Sun, Yen-Chun Chen, and Hao Li. 2017b. Avatar digitization from a single image for real-time rendering. ACM Trans. Graph. 36, 6 (2017), 195:1–195:14.Google ScholarDigital Library

16. Takahito Ishikawa, Yosuke Kazama, Eiji Sugisaki, and Shigeo Morishima. 2007. Hair Motion Reconstruction Using Motion Capture System. In ACM SIGGRAPH 2007 Posters (SIGGRAPH ’07). Article 78.Google Scholar

17. Phillip Isola, Jun-Yan Zhu, Tinghui Zhou, and Alexei A. Efros. 2017. Image-to-Image Translation with Conditional Adversarial Networks. In IEEE Conference on Computer Vision and Pattern Recognition. 5967–5976.Google Scholar

18. Wenzel Jakob, Jonathan T Moon, and Steve Marschner. 2009. Capturing hair assemblies fiber by fiber. ACM Trans. Graph. 28, 5 (2009), 164:1–164:9.Google ScholarDigital Library

19. Diederik P Kingma and Jimmy Ba. 2014. Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980 (2014).Google Scholar

20. Shu Liang, Xiufeng Huang, Xianyu Meng, Kunyao Chen, Linda G. Shapiro, and Ira Kemelmacher-Shlizerman. 2018. Video to Fully Automatic 3D Hair Model. ACM Trans. Graph. 37, 6 (2018), 206:1–206:14.Google ScholarDigital Library

21. Linjie Luo, Hao Li, and Szymon Rusinkiewicz. 2013. Structure-aware hair capture. ACM Trans. Graph. 32, 4 (2013), 76:1–76:12.Google ScholarDigital Library

22. Linjie Luo, Hao Li, Thibaut Weise, Sylvain Paris, Mark Pauly, and Szymon Rusinkiewicz. 2011. Dynamic Hair Capture. Technical Report TR-907-11. Princeton University.Google Scholar

23. L. Luo, C. Zhang, Z. Zhang, and S. Rusinkiewicz. 2013. Wide-Baseline Hair Capture Using Strand-Based Refinement. In 2013 IEEE Conference on Computer Vision and Pattern Recognition. 265–272.Google Scholar

24. Nikolaus Mayer, Eddy Ilg, Philip Hausser, Philipp Fischer, Daniel Cremers, Alexey Dosovitskiy, and Thomas Brox. 2016. A large dataset to train convolutional networks for disparity, optical flow, and scene flow estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 4040–4048.Google ScholarCross Ref

25. Giljoo Nam, Chenglei Wu, Min H. Kim, and Yaser Sheikh. 2019. Strand-Accurate Multi-View Hair Capture. In The IEEE Conference on Computer Vision and Pattern Recognition (CVPR). 155–164.Google Scholar

26. Sylvain Paris, Will Chang, Oleg I Kozhushnyan, Wojciech Jarosz, Wojciech Matusik, Matthias Zwicker, and Frédo Durand. 2008. Hair photobooth: geometric and photometric acquisition of real hairstyles. ACM Trans. Graph. 27, 3 (2008), 30:1–30:9.Google ScholarDigital Library

27. Shunsuke Saito, Liwen Hu, Chongyang Ma, Linjie Luo, and Hao Li. 2018. 3D Hair Synthesis Using Volumetric Variational Autoencoders. ACM Trans. Graph. 37, 6 (2018), 208:1–208:12.Google ScholarDigital Library

28. Andrew Selle, Michael Lentine, and Ronald Fedkiw. 2008. A mass spring model for hair simulation. In ACM Transactions on Graphics (TOG), Vol. 27. 64:1–64:11.Google ScholarDigital Library

29. Lvdi Wang, Yizhou Yu, Kun Zhou, and Baining Guo. 2009. Example-based hair geometry synthesis. ACM Trans. Graph. 28, 3 (2009), 56:1–56:9.Google ScholarDigital Library

30. Ting-Chun Wang, Ming-Yu Liu, Jun-Yan Zhu, Guilin Liu, Andrew Tao, Jan Kautz, and Bryan Catanzaro. 2018a. Video-to-Video Synthesis. In Advances in Neural Information Processing Systems 31, S. Bengio, H. Wallach, H. Larochelle, K. Grauman, N. Cesa-Bianchi, and R. Garnett (Eds.). 1144–1156.Google Scholar

31. Ting-Chun Wang, Ming-Yu Liu, Jun-Yan Zhu, Andrew Tao, Jan Kautz, and Bryan Catanzaro. 2018b. High-Resolution Image Synthesis and Semantic Manipulation With Conditional GANs. In The IEEE Conference on Computer Vision and Pattern Recognition (CVPR).Google Scholar

32. Kelly Ward, Florence Bertails, Tae-Yong Kim, Stephen R Marschner, Marie-Paule Cani, and Ming C Lin. 2007. A survey on hair modeling: Styling, simulation, and rendering. IEEE Trans. Vis. Comp. Graph. 13, 2 (2007), 213–234.Google ScholarDigital Library

33. Zexiang Xu, Hsiang-Tao Wu, Lvdi Wang, Changxi Zheng, Xin Tong, and Yue Qi. 2014. Dynamic Hair Capture Using Spacetime Optimization. ACM Trans. Graph. 33, 6 (2014), 224:1–224:11.Google ScholarDigital Library

34. Tatsuhisa Yamaguchi, Bennett Wilburn, and Eyal Ofek. 2009. Video-based modeling of dynamic hair. In Pacific-Rim Symposium on Image and Video Technology. Springer, 585–596.Google ScholarDigital Library

35. Bo Yang, Hongkai Wen, Sen Wang, Ronald Clark, Andrew Markham, and Niki Trigoni. 2017. 3d object reconstruction from a single depth view with adversarial learning. In Proceedings of the IEEE International Conference on Computer Vision. 679–688.Google ScholarCross Ref

36. Meng Zhang, Menglei Chai, Hongzhi Wu, Hao Yang, and Kun Zhou. 2017. A Data-driven Approach to Four-view Image-based Hair Modeling. ACM Trans. Graph. 36, 4 (2017), 156:1–156:11.Google ScholarDigital Library

37. Meng Zhang, Pan Wu, Hongzhi Wu, Yanlin Weng, Youyi Zheng, and Kun Zhou. 2018. Modeling Hair from an RGB-D Camera. ACM Trans. Graph. 37, 6 (2018), 205:1–205:10.Google ScholarDigital Library

38. Meng Zhang and Youyi Zheng. 2019. Hair-GANs: Recovering 3D Hair Structure from a Single Image. The Visual Informatics (2019), to appear.Google Scholar

39. Qing Zhang, Jing Tong, Huamin Wang, Zhigeng Pan, and Ruigang Yang. 2012. Simulation Guided Hair Dynamics Modeling from Video. Comput. Graph. Forum 31 (2012), 2003–2010.Google ScholarDigital Library

40. Yi Zhou, Liwen Hu, Jun Xing, Weikai Chen, Han-Wei Kung, Xin Tong, and Hao Li. 2018. HairNet: Single-View Hair Reconstruction using Convolutional Neural Networks. In Proceedings of the European Conference on Computer Vision. 235–251.Google ScholarCross Ref