“Dynamic facial asset and rig generation from a single scan” by Li, Kuang, Zhao, He, Bladin, et al. …

Conference:

Type(s):

Title:

- Dynamic facial asset and rig generation from a single scan

Session/Category Title:

- Generation and Inference from Images

Presenter(s)/Author(s):

Abstract:

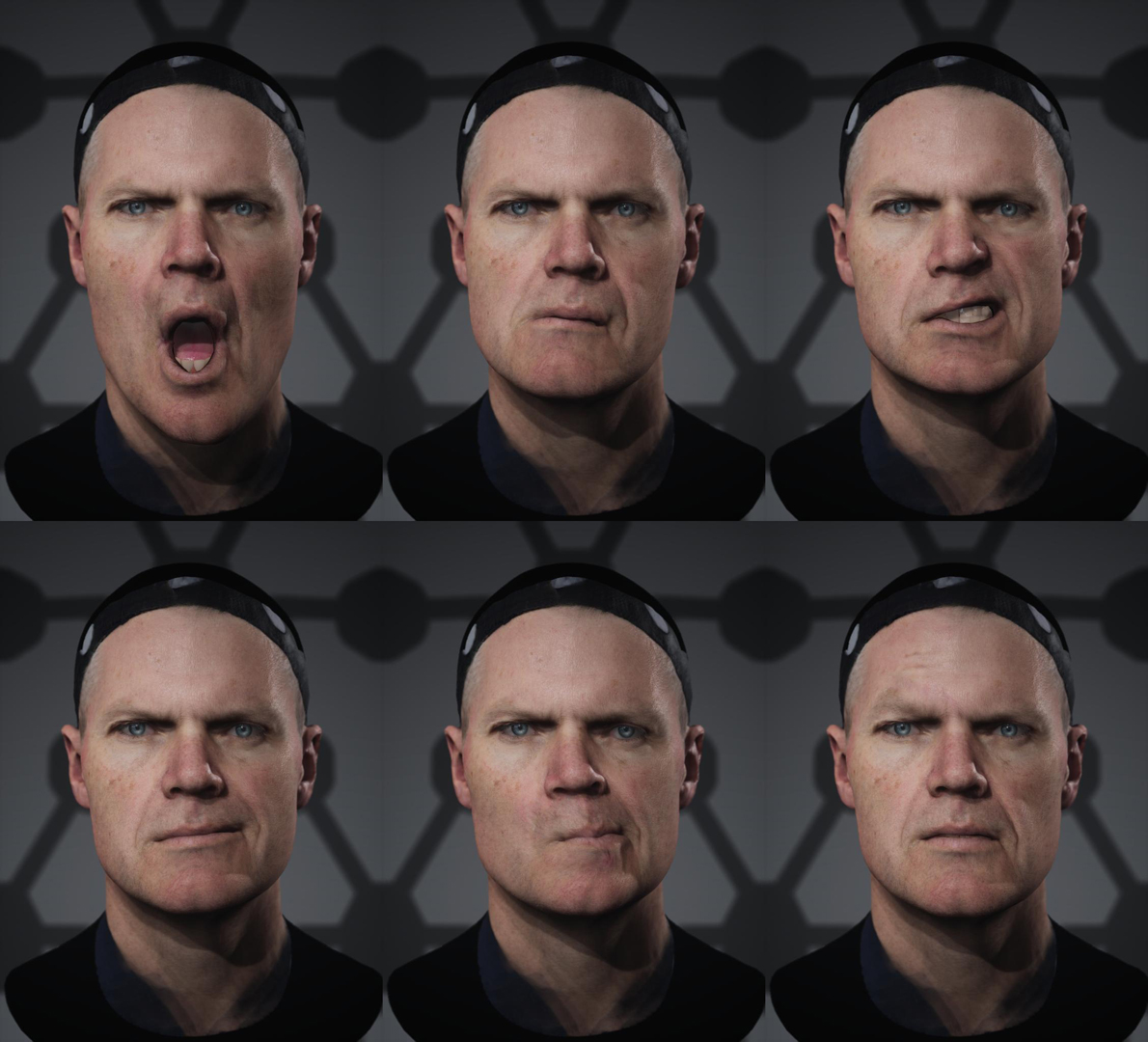

The creation of high-fidelity computer-generated (CG) characters for films and games is tied with intensive manual labor, which involves the creation of comprehensive facial assets that are often captured using complex hardware. To simplify and accelerate this digitization process, we propose a framework for the automatic generation of high-quality dynamic facial models, including rigs which can be readily deployed for artists to polish. Our framework takes a single scan as input to generate a set of personalized blendshapes, dynamic textures, as well as secondary facial components (e.g., teeth and eyeballs). Based on a facial database with over 4, 000 scans with pore-level details, varying expressions and identities, we adopt a self-supervised neural network to learn personalized blendshapes from a set of template expressions. We also model the joint distribution between identities and expressions, enabling the inference of a full set of personalized blendshapes with dynamic appearances from a single neutral input scan. Our generated personalized face rig assets are seamlessly compatible with professional production pipelines for facial animation and rendering. We demonstrate a highly robust and effective framework on a wide range of subjects, and showcase high-fidelity facial animations with automatically generated personalized dynamic textures.

References:

1. 3DScanstore. 2019. 3D Scan Store: Male and female 3d head model 48 x bundle. http://precog.iiitd.edu.in/people/anupama. Online; Accessed: 2019-12-20.Google Scholar

2. Victoria Fernández Abrevaya, Adnane Boukhayma, Stefanie Wuhrer, and Edmond Boyer. 2019. A Decoupled 3D Facial Shape Model by Adversarial Training. In CVPR.Google Scholar

3. Oleg Alexander, Mike Rogers, William Lambeth, Matt Chiang, and Paul Debevec. 2009. The Digital Emily Project: Photoreal Facial Modeling and Animation. In ACM SIGGRAPH 2009 Courses (SIGGRAPH ’09). Article Article 12.Google ScholarDigital Library

4. Brian Amberg, Reinhard Knothe, and Thomas Vetter. 2008. Expression Invariant 3D Face Recognition with a Morphable Model. In International Conference on Automatic Face Gesture Recognition. 1–6.Google ScholarCross Ref

5. Timur Bagautdinov, Chenglei Wu, Jason Saragih, Pascal Fua, and Yaser Sheikh. 2018. Modeling Facial Geometry Using Compositional VAEs. In CVPR.Google Scholar

6. Yancheng Bai and Bernard Ghanem. 2017. Multi-branch fully convolutional network for face detection. arXiv preprint arXiv:1707.06330 (2017).Google Scholar

7. Thabo Beeler, Bernd Bickel, Paul Beardsley, Bob Sumner, and Markus Gross. 2010. High-Quality Single-Shot Capture of Facial Geometry. ACM Trans. Graph. 29, 4, Article Article 40 (2010).Google ScholarDigital Library

8. Thabo Beeler, Fabian Hahn, Derek Bradley, Bernd Bickel, Paul Beardsley, Craig Gotsman, Robert W. Sumner, and Markus Gross. 2011. High-Quality Passive Facial Performance Capture Using Anchor Frames. ACM Trans. Graph. 30, 4, Article Article 75 (2011).Google ScholarDigital Library

9. Pascal Bérard, Derek Bradley, Markus Gross, and Thabo Beeler. 2016. Lightweight Eye Capture Using a Parametric Model. ACM Trans. Graph. 35, 4, Article Article 117 (2016).Google ScholarDigital Library

10. Pascal Bérard, Derek Bradley, Markus Gross, and Thabo Beeler. 2019. Practical Person-Specific Eye Rigging. Computer Graphics Forum (2019). Google ScholarCross Ref

11. Volker Blanz and Thomas Vetter. 1999. A Morphable Model for the Synthesis of 3D Faces. In Proceedings of the 26th Annual Conference on Computer Graphics and Interactive Techniques (SIGGRAPH ’99). ACM Press/Addison-Wesley Publishing Co., USA, 187–194. Google ScholarDigital Library

12. James Booth, Epameinondas Antonakos, Stylianos Ploumpis, George Trigeorgis, Yannis Panagakis, and Stefanos Zafeiriou. 2017. 3D Face Morphable Models “In-the-Wild”. In CVPR.Google Scholar

13. James Booth, Anastasios Roussos, Stefanos Zafeiriou, Allan Ponniah, and David Dunaway. 2016. A 3d morphable model learnt from 10,000 faces. In CVPR.Google Scholar

14. Sofien Bouaziz, Yangang Wang, and Mark Pauly. 2013. Online Modeling for Realtime Facial Animation. ACM Trans. Graph. 32, 4, Article Article 40 (2013).Google ScholarDigital Library

15. Derek Bradley, Wolfgang Heidrich, Tiberiu Popa, and Alla Sheffer. 2010. High Resolution Passive Facial Performance Capture. ACM Trans. Graph. 29, 4, Article Article 41 (2010), 10 pages.Google ScholarDigital Library

16. Chen Cao, Yanlin Weng, Shun Zhou, Yiying Tong, and Kun Zhou. 2014. FaceWarehouse: A 3D Facial Expression Database for Visual Computing. IEEE Transactions on Visualization and Computer Graphics 20, 3 (2014).Google Scholar

17. Chen Cao, Hongzhi Wu, Yanlin Weng, Tianjia Shao, and Kun Zhou. 2016. Real-Time Facial Animation with Image-Based Dynamic Avatars. ACM Trans. Graph. 35, 4, Article Article 126 (2016).Google ScholarDigital Library

18. E Carrigan, E Zell, C Guiard, and R McDonnell. 2020. Expression Packing: As-Few-As-Possible Training Expressions for Blendshape Transfer. In Computer Graphics Forum, Vol. 39. Wiley Online Library, 219–233.Google Scholar

19. Dan Casas, Andrew Feng, Oleg Alexander, Graham Fyffe, Paul Debevec, Ryosuke Ichikari, Hao Li, Kyle Olszewski, Evan Suma, and Ari Shapiro. 2016. Rapid Photorealistic Blendshape Modeling from RGB-D Sensors. In CASA.Google Scholar

20. Anpei Chen, Zhang Chen, Guli Zhang, Kenny Mitchell, and Jingyi Yu. 2019. PhotoRealistic Facial Details Synthesis From Single Image. In ICCV.Google Scholar

21. Zhao Chen, Vijay Badrinarayanan, Chen-Yu Lee, and Andrew Rabinovich. 2018. GradNorm: Gradient Normalization for Adaptive Loss Balancing in Deep Multitask Networks. In ICML.Google Scholar

22. Shiyang Cheng, Michael M. Bronstein, Yuxiang Zhou, Irene Kotsia, Maja Pantic, and Stefanos Zafeiriou. 2019. MeshGAN: Non-linear 3D Morphable Models of Faces. CoRR (2019). http://arxiv.org/abs/1903.10384Google Scholar

23. Paul Ekman and Wallace V. Friesen. 1978. Facial action coding system: a technique for the measurement of facial movement. In Consulting Psychologists Press.Google Scholar

24. G. Fyffe, P. Graham, B. Tunwattanapong, A. Ghosh, and P. Debevec. 2016. Near-Instant Capture of High-Resolution Facial Geometry and Reflectance. In Proceedings of the 37th Annual Conference of the European Association for Computer Graphics (EG ’16). Eurographics Association, Goslar, DEU, 353–363.Google Scholar

25. Graham Fyffe, Andrew Jones, Oleg Alexander, Ryosuke Ichikari, and Paul Debevec. 2014. Driving high-resolution facial scans with video performance capture. ACM Trans. Graph. 34, 1 (2014), 1–14.Google ScholarDigital Library

26. Graham Fyffe, Koki Nagano, Loc Huynh, Shunsuke Saito, Jay Busch, Andrew Jones, Hao Li, and Paul Debevec. 2017. Multi-View Stereo on Consistent Face Topology. In Computer Graphics Forum, Vol. 36. Wiley Online Library, 295–309.Google Scholar

27. Pablo Garrido, Michael Zollhöfer, Dan Casas, Levi Valgaerts, Kiran Varanasi, Patrick Pérez, and Christian Theobalt. 2016. Reconstruction of Personalized 3D Face Rigs from Monocular Video. ACM Trans. Graph. 35, 3, Article Article 28 (2016), 15 pages.Google ScholarDigital Library

28. Abhijeet Ghosh, Graham Fyffe, Borom Tunwattanapong, Jay Busch, Xueming Yu, and Paul Debevec. 2011. Multiview face capture using polarized spherical gradient illumination. ACM Trans. Graph. 30, 6, 129.Google ScholarDigital Library

29. Paulo Gotardo, Jérémy Riviere, Derek Bradley, Abhijeet Ghosh, and Thabo Beeler. 2018. Practical Dynamic Facial Appearance Modeling and Acquisition. ACM Trans. Graph. 37, 6, Article Article 232 (2018), 13 pages.Google Scholar

30. Pei-Lun Hsieh, Chongyang Ma, Jihun Yu, and Hao Li. 2015. Unconstrained realtime facial performance capture. CVPR (2015).Google Scholar

31. Liwen Hu, Shunsuke Saito, Lingyu Wei, Koki Nagano, Jaewoo Seo, Jens Fursund, Iman Sadeghi, Carrie Sun, Yen-Chun Chen, and Hao Li. 2017. Avatar Digitization from a Single Image for Real-Time Rendering. ACM Trans. Graph. 36, 6, Article Article 195 (2017).Google ScholarDigital Library

32. Haoda Huang, Jinxiang Chai, Xin Tong, and Hsiang-Tao Wu. 2011. Leveraging Motion Capture and 3D Scanning for High-Fidelity Facial Performance Acquisition. ACM Trans. Graph. 30, 4, Article Article 74 (2011).Google ScholarDigital Library

33. Loc Huynh, Weikai Chen, Shunsuke Saito, Jun Xing, Koki Nagano, Andrew Jones, Paul Debevec, and Hao Li. 2018. Mesoscopic Facial Geometry Inference Using Deep Neural Networks. In CVPR.Google Scholar

34. Alexandru Eugen Ichim, Sofien Bouaziz, and Mark Pauly. 2015. Dynamic 3D avatar creation from hand-held video input. ACM Trans. Graph. 34, 4 (2015), 1–14.Google ScholarDigital Library

35. Phillip Isola, Jun-Yan Zhu, Tinghui Zhou, and Alexei A. Efros. 2017. Image-to-Image Translation with Conditional Adversarial Networks. In CVPR.Google Scholar

36. Ira Kemelmacher-Shlizerman. 2013. Internet-based Morphable Model. ICCV (2013).Google Scholar

37. Andor Kollar. 2019. Realistic Human Eye. http://kollarandor.com/gallery/3d-human-eye/. Online; Accessed: 2019-7-30.Google Scholar

38. Samuli Laine, Tero Karras, Timo Aila, Antti Herva, Shunsuke Saito, Ronald Yu, Hao Li, and Jaakko Lehtinen. 2017. Production-level facial performance capture using deep convolutional neural networks. In Proceedings of the ACM SIGGRAPH/Eurographics Symposium on Computer Animation. 1–10.Google ScholarDigital Library

39. Christian Ledig, Lucas Theis, Ferenc Huszár, Jose Caballero, Andrew Cunningham, Alejandro Acosta, Andrew Aitken, Alykhan Tejani, Johannes Totz, Zehan Wang, et al. 2017. Photo-realistic single image super-resolution using a generative adversarial network. In CVPR.Google Scholar

40. Jessica Lee, Deva Ramanan, and Rohit Girdhar. 2019. MetaPix: Few-Shot Video Retargeting. arXiv:cs.CV/1910.04742Google Scholar

41. J. P. Lewis, Ken Anjyo, Taehyun Rhee, Mengjie Zhang, Fred Pighin, and Zhigang Deng. 2014. Practice and Theory of Blendshape Facial Models. In Eurographics 2014 – State of the Art Reports. The Eurographics Association.Google Scholar

42. Hao Li, Bart Adams, Leonidas J Guibas, and Mark Pauly. 2009. Robust single-view geometry and motion reconstruction. ACM Trans. Graph. 28, 5 (2009), 1–10.Google ScholarDigital Library

43. Hao Li, Robert W. Sumner, and Mark Pauly. 2008. Global Correspondence Optimization for Non-rigid Registration of Depth Scans. In Proceedings of the Symposium on Geometry Processing (SGP ’08). Eurographics Association, Aire-la-Ville, Switzerland, Switzerland, 1421–1430. http://dl.acm.org/citation.cfm?id=1731309.1731326Google ScholarDigital Library

44. Hao Li, Thibaut Weise, and Mark Pauly. 2010. Example-Based Facial Rigging. ACM Trans. Graph., Article Article 32 (2010).Google Scholar

45. Hao Li, Jihun Yu, Yuting Ye, and Chris Bregler. 2013. Realtime Facial Animation with On-the-Fly Correctives. ACM Trans. Graph. 32, 4, Article Article 42 (2013).Google ScholarDigital Library

46. Ruilong Li, Karl Bladin, Yajie Zhao, Chinmay Chinara, Owen Ingraham, Pengda Xiang, Xinglei Ren, Pratusha Prasad, Bipin Kishore, Jun Xing, et al. 2020. Learning Formation of Physically-Based Face Attributes. In CVPR.Google Scholar

47. Tianye Li, Timo Bolkart, Michael J. Black, Hao Li, and Javier Romero. 2017. Learning a Model of Facial Shape and Expression from 4D Scans. ACM Trans. Graph. 36, 6, Article Article 194 (2017).Google ScholarDigital Library

48. Or Litany, Alex Bronstein, Michael Bronstein, and Ameesh Makadia. 2018. Deformable Shape Completion with Graph Convolutional Autoencoders. In CVPR.Google Scholar

49. Ming-Yu Liu, Xun Huang, Arun Mallya, Tero Karras, Timo Aila, Jaakko Lehtinen, and Jan Kautz. 2019. Few-shot unsupervised image-to-image translation. In ICCV.Google Scholar

50. Ziwei Liu, Ping Luo, Xiaogang Wang, and Xiaoou Tang. 2015. Deep Learning Face Attributes in the Wild. In ICCV.Google Scholar

51. Stephen Lombardi, Jason Saragih, Tomas Simon, and Yaser Sheikh. 2018. Deep appearance models for face rendering. ACM Trans. Graph. 37, 4 (2018), 68.Google ScholarDigital Library

52. Wan-Chun Ma, Tim Hawkins, Pieter Peers, Charles-Felix Chabert, Malte Weiss, and Paul Debevec. 2007. Rapid Acquisition of Specular and Diffuse Normal Maps from Polarized Spherical Gradient Illumination. In Proceedings of the 18th Eurographics Conference on Rendering Techniques (EGSR’07). Eurographics Association, Goslar, DEU, 183–194.Google ScholarDigital Library

53. Wan-Chun Ma, Mathieu Lamarre, Etienne Danvoye, Chongyang Ma, Manny Ko, Javier von der Pahlen, and Cyrus A Wilson. 2016. Semantically-aware blendshape rigs from facial performance measurements. In SIGGRAPH ASIA 2016 Technical Briefs. ACM, 3.Google Scholar

54. Ron Kimmel Matan Sela, Elad Richardson. 2017. Unrestricted Facial Geometry Reconstruction Using Image-to-Image Translation. In ICCV.Google Scholar

55. Arnold Maya. 2019. Maya Arnold renderer. https://arnoldrenderer.com/. Online; Accessed: 2019-11-22.Google Scholar

56. Koki Nagano, Jaewoo Seo, Jun Xing, Lingyu Wei, Zimo Li, Shunsuke Saito, Aviral Agarwal, Jens Fursund, and Hao Li. 2018. PaGAN: Real-Time Avatars Using Dynamic Textures. ACM Trans. Graph. 37, 6, Article Article 258 (2018), 12 pages.Google ScholarDigital Library

57. Jun-yong Noh and Ulrich Neumann. 2001. Expression Cloning. In Proceedings of the 28th Annual Conference on Computer Graphics and Interactive Techniques (SIGGRAPH ’01).Google Scholar

58. Christopher Oat. 2007. Animated wrinkle maps. In ACM SIGGRAPH 2007 courses. 33–37.Google ScholarDigital Library

59. Kyle Olszewski, Joseph J. Lim, Shunsuke Saito, and Hao Li. 2016. High-Fidelity Facial and Speech Animation for VR HMDs. ACM Trans. Graph. 35, 6 (2016).Google ScholarDigital Library

60. Hayato Onizuka, Diego Thomas, Hideaki Uchiyama, and Rin-ichiro Taniguchi. 2019. Landmark-Guided Deformation Transfer of Template Facial Expressions for Automatic Generation of Avatar Blendshapes. In ICCVW.Google Scholar

61. Chandan Pawaskar, Wan-Chun Ma, Kieran Carnegie, John P Lewis, and Taehyun Rhee. 2013. Expression transfer: A system to build 3D blend shapes for facial animation. In 2013 28th International Conference on Image and Vision Computing New Zealand (IVCNZ 2013). IEEE, 154–159.Google ScholarCross Ref

62. Anurag Ranjan, Timo Bolkart, Soubhik Sanyal, and Michael J. Black. 2018. Generating 3D Faces Using Convolutional Mesh Autoencoders. In ECCV.Google Scholar

63. Christos Sagonas, Epameinondas Antonakos, Georgios Tzimiropoulos, Stefanos Zafeiriou, and Maja Pantic. 2016. 300 faces in-the-wild challenge: Database and results. Image and vision computing 47 (2016), 3–18.Google Scholar

64. Robert W. Sumner and Jovan Popovió. 2004. Deformation Transfer for Triangle Meshes. ACM Trans. Graph. 23, 3 (2004), 399–405.Google ScholarDigital Library

65. Ayush Tewari, Michael Zollhofer, Hyeongwoo Kim, Pablo Garrido, Florian Bernard, Patrick Perez, and Christian Theobalt. 2017. MoFA: Model-Based Deep Convolutional Face Autoencoder for Unsupervised Monocular Reconstruction. In ICCV.Google Scholar

66. Justus Thies, Michael Zollhöfer, Matthias Nieundefinedner, Levi Valgaerts, Marc Stamminger, and Christian Theobalt. 2015. Real-Time Expression Transfer for Facial Reenactment. ACM Trans. Graph. 34, 6 (2015).Google ScholarDigital Library

67. Justus Thies, Michael Zollhöfer, Marc Stamminger, Christian Theobalt, and Matthias Nießner. 2016. Face2Face: Real-Time Face Capture and Reenactment of RGB Videos. In CVPR.Google Scholar

68. Luan Tran, Feng Liu, and Xiaoming Liu. 2019. Towards High-fidelity Nonlinear 3D Face Morphable Model. In CVPR.Google Scholar

69. Luan Tran and Xiaoming Liu. 2018. Nonlinear 3D Face Morphable Mmodel. In CVPR.Google Scholar

70. Triplegangers. 2019. Triplegangers Face Models. https://triplegangers.com/. Online; Accessed: 2019-12-21.Google Scholar

71. Levi Valgaerts, Chenglei Wu, Andrés Bruhn, Hans-Peter Seidel, and Christian Theobalt. 2012. Lightweight Binocular Facial Performance Capture under Uncontrolled Lighting. ACM Trans. Graph. 31, 6 (2012).Google ScholarDigital Library

72. Zdravko Velinov, Marios Papas, Derek Bradley, Paulo Gotardo, Parsa Mirdehghan, Steve Marschner, Jan Novák, and Thabo Beeler. 2018. Appearance Capture and Modeling of Human Teeth. ACM Trans. Graph. 37, 6, Article Article 207 (Dec. 2018).Google ScholarDigital Library

73. Daniel Vlasic, Matthew Brand, Hanspeter Pfister, and Jovan Popovió. 2005. Face Transfer with Multilinear Models. ACM Trans. Graph. 24, 3 (2005), 426–433.Google ScholarDigital Library

74. Ting-Chun Wang, Ming-Yu Liu, Andrew Tao, Guilin Liu, Jan Kautz, and Bryan Catanzaro. 2019. Few-shot Video-to-Video Synthesis. In NeurIPS.Google Scholar

75. Ting-Chun Wang, Ming-Yu Liu, Jun-Yan Zhu, Guilin Liu, Andrew Tao, Jan Kautz, and Bryan Catanzaro. 2018a. Video-to-Video Synthesis. In NeurIPS.Google Scholar

76. Ting-Chun Wang, Ming-Yu Liu, Jun-Yan Zhu, Andrew Tao, Jan Kautz, and Bryan Catanzaro. 2018b. High-Resolution Image Synthesis and Semantic Manipulation with Conditional GANs. In CVPR.Google Scholar

77. Shih-En Wei, Jason Saragih, Tomas Simon, Adam W Harley, Stephen Lombardi, Michal Perdoch, Alexander Hypes, Dawei Wang, Hernan Badino, and Yaser Sheikh. 2019. VR facial animation via multiview image translation. ACM Trans. Graph. 38, 4 (2019), 1–16.Google ScholarDigital Library

78. Thibaut Weise, Hao Li, Luc Van Gool, and Mark Pauly. 2009. Face/Off: live facial puppetry. In SCA ’09.Google ScholarDigital Library

79. Chenglei Wu, Derek Bradley, Pablo Garrido, Michael Zollhöfer, Christian Theobalt, Markus Gross, and Thabo Beeler. 2016. Model-Based Teeth Reconstruction. ACM Trans. Graph. 35, 6, Article Article 220 (2016).Google ScholarDigital Library

80. Shugo Yamaguchi, Shunsuke Saito, Koki Nagano, Yajie Zhao, Weikai Chen, Kyle Olszewski, Shigeo Morishima, and Hao Li. 2018. High-fidelity facial reflectance and geometry inference from an unconstrained image. ACM Trans. Graph. 37, 4 (2018), 1–14.Google ScholarDigital Library

81. Haotian Yang, Hao Zhu, Yanru Wang, Mingkai Huang, Qiu Shen, Ruigang Yang, and Xun Cao. 2020. FaceScape: a Large-scale High Quality 3D Face Dataset and Detailed Riggable 3D Face Prediction. In CVPR.Google Scholar

82. Li Zhang, Noah Snavely, Brian Curless, and Steven M. Seitz. 2004. Spacetime Faces: High Resolution Capture for Modeling and Animation. ACM Trans. Graph. 23, 3 (2004).Google ScholarDigital Library

83. Yuxiang Zhou, Jiankang Deng, Irene Kotsia, and Stefanos Zafeiriou. 2019. Dense 3D Face Decoding Over 2500FPS: Joint Texture & Shape Convolutional Mesh Decoders. In CVPR.Google Scholar