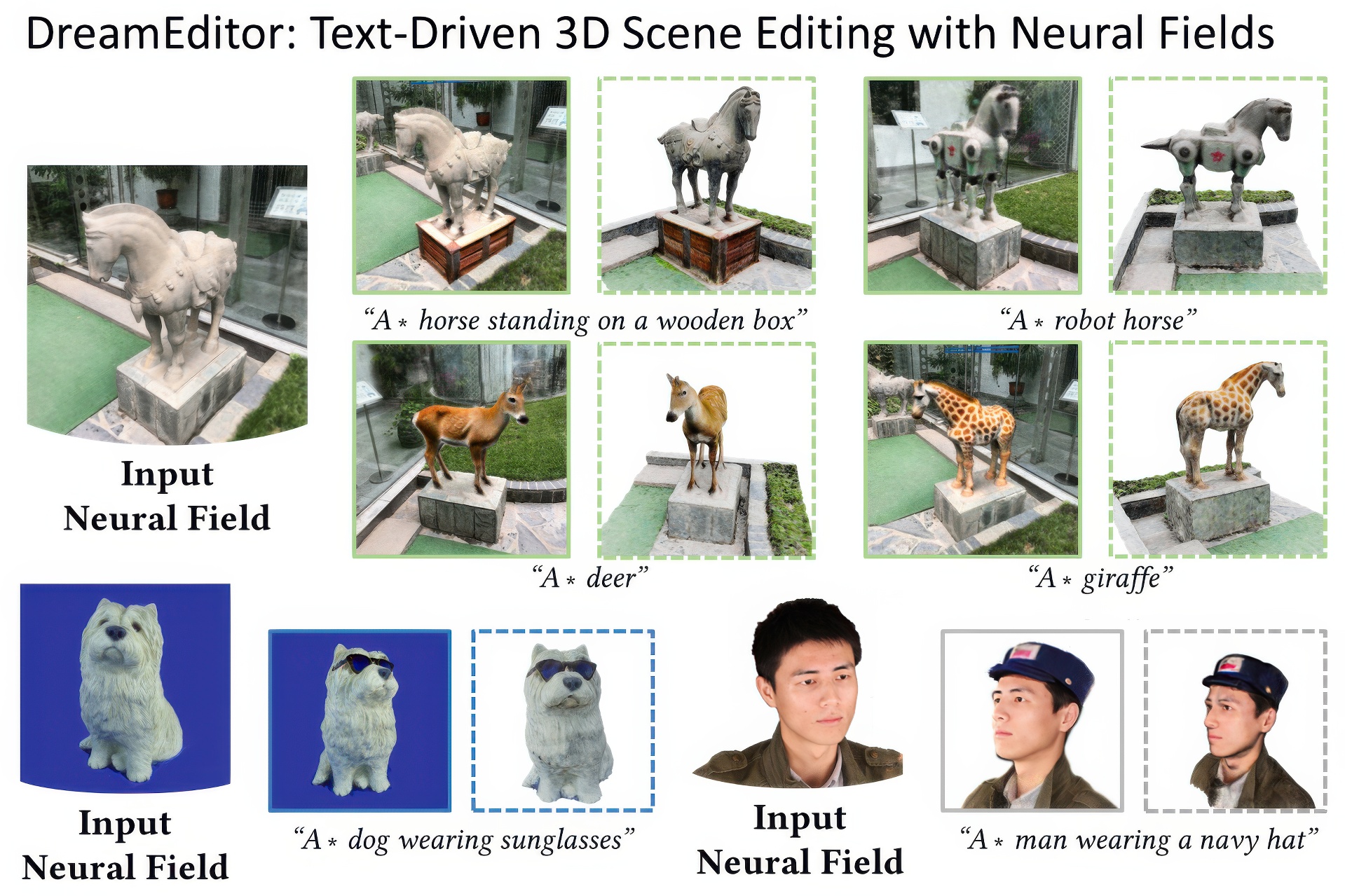

“DreamEditor: Text-Driven 3D Scene Editing with Neural Fields” by Zhuang, Wang, Liu and Li

Title:

- DreamEditor: Text-Driven 3D Scene Editing with Neural Fields

Session/Category Title:

- How To Deal With NERF?

Abstract:

Neural fields have achieved impressive advancements in view synthesis and scene reconstruction. However, editing these neural fields remains challenging due to the implicit encoding of geometry and texture information. In this paper, we propose DreamEditor, a novel framework that enables users to perform controlled editing of neural fields using text prompts. By representing scenes as mesh-based neural fields, DreamEditor allows localized editing within specific regions. DreamEditor utilizes the text encoder of a pretrained text-to-image diffusion model to automatically identify the regions to be edited based on the semantics of the text prompts. Subsequently, DreamEditor optimizes the edit region and aligns its geometry and texture with the text prompts through score distillation sampling. Extensive experiments have demonstrated that DreamEditor can accurately edit neural fields of real-world scenes according to the given text prompts while ensuring consistency in irrelevant areas. DreamEditor generates highly realistic textures and geometry, significantly surpassing previous works in both quantitative and qualitative evaluations.
