“Diffusing Colors: Image Colorization with Text Guided Diffusion” by Zabari, Azulay, Gorkor, Halperin and Fried

Conference:

Type(s):

Title:

- Diffusing Colors: Image Colorization with Text Guided Diffusion

Session/Category Title:

- Magic Diffusion Model

Presenter(s)/Author(s):

Abstract:

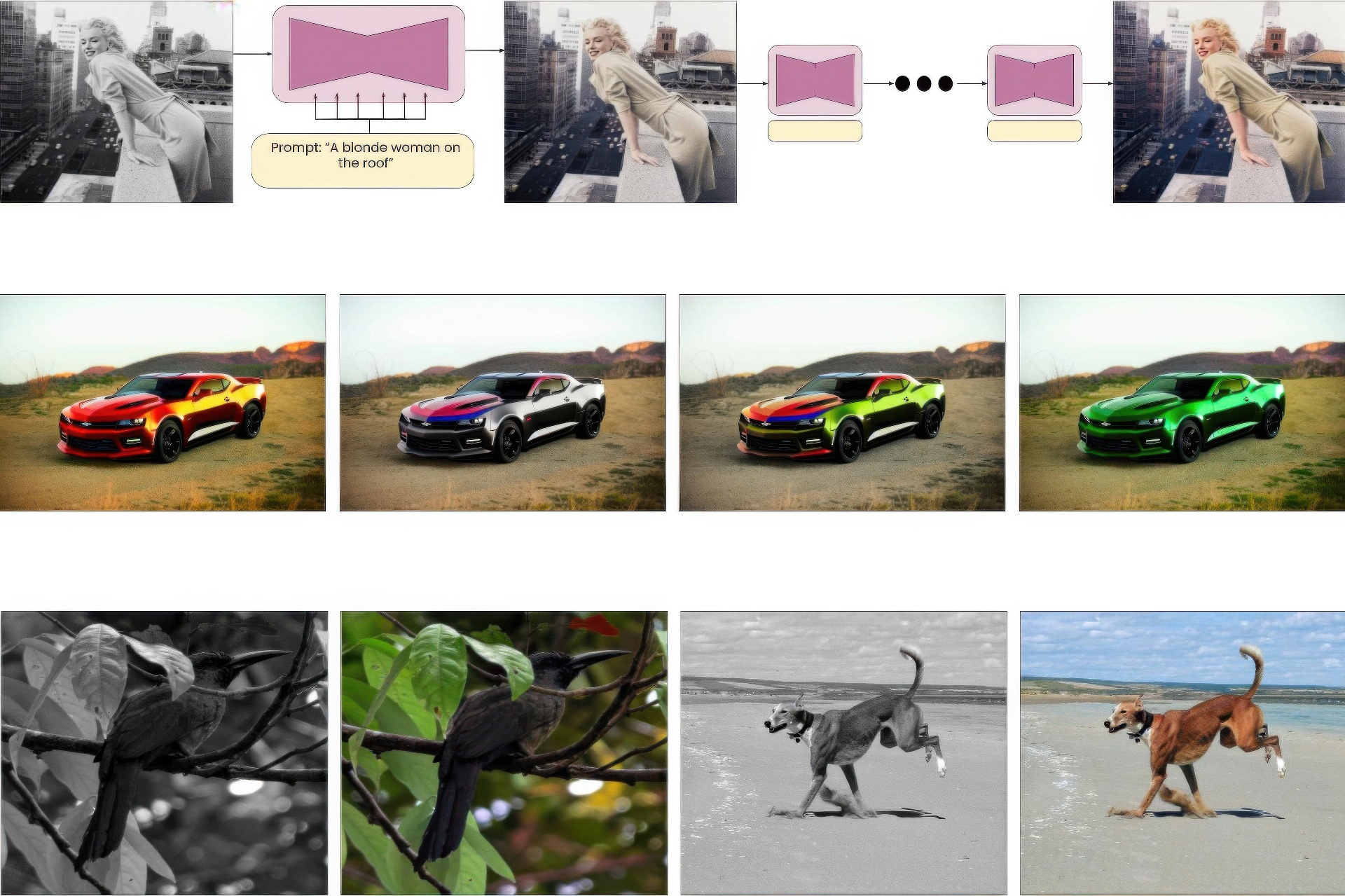

The colorization of grayscale images is a complex and subjective task with significant challenges. Despite recent progress in employing large-scale datasets with deep neural networks, difficulties with controllability and visual quality persist. To tackle these issues, we present a novel image colorization framework that utilizes image diffusion techniques with granular text prompts. This integration not only produces colorization outputs that are semantically appropriate but also greatly improves the level of control users have over the colorization process. Our method provides a balance between automation and control, outperforming existing techniques in terms of visual quality and semantic coherence. We leverage a pretrained generative Diffusion Model, and show that we can finetune it for the colorization task without losing its generative power or attention to text prompts. Moreover, we present a novel CLIP-based ranking model that evaluates color vividness, enabling automatic selection of the most suitable level of vividness based on the specific scene semantics. Our approach holds potential particularly for color enhancement and historical image colorization.

References:

[1]

Jason Antic. 2019. DeOldify: A open-source project for colorizing old images (and video). (2019).

[2]

Omri Avrahami, Ohad Fried, and Dani Lischinski. 2022a. Blended Latent Diffusion. arXiv preprint arXiv:2206.02779 (2022).

[3]

Omri Avrahami, Thomas Hayes, Oran Gafni, Sonal Gupta, Yaniv Taigman, Devi Parikh, Dani Lischinski, Ohad Fried, and Xi Yin. 2023. SpaText: Spatio-Textual Representation for Controllable Image Generation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). 18370–18380.

[4]

Omri Avrahami, Dani Lischinski, and Ohad Fried. 2022b. Blended Diffusion for Text-Driven Editing of Natural Images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). 18208–18218.

[5]

Arpit Bansal, Eitan Borgnia, Hong-Min Chu, Jie Li, Hamideh Kazemi, Furong Huang, Micah Goldblum, Jonas Geiping, and Tom Goldstein. 2022. Cold Diffusion: Inverting Arbitrary Image Transforms Without Noise. ArXiv abs/2208.09392 (2022).

[6]

Tom B. Brown, Benjamin Mann, Nick Ryder, Melanie Subbiah, Jared Kaplan, Prafulla Dhariwal, Arvind Neelakantan, Pranav Shyam, Girish Sastry, Amanda Askell, Sandhini Agarwal, Ariel Herbert-Voss, Gretchen Krueger, T. J. Henighan, Rewon Child, Aditya Ramesh, Daniel M. Ziegler, Jeff Wu, Clemens Winter, Christopher Hesse, Mark Chen, Eric Sigler, Mateusz Litwin, Scott Gray, Benjamin Chess, Jack Clark, Christopher Berner, Sam McCandlish, Alec Radford, Ilya Sutskever, and Dario Amodei. 2020. Language Models are Few-Shot Learners. ArXiv abs/2005.14165 (2020).

[7]

Holger Caesar, Jasper R. R. Uijlings, and Vittorio Ferrari. 2016. COCO-Stuff: Thing and Stuff Classes in Context. 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (2016), 1209–1218.

[8]

Zheng Chang, Shuchen Weng, Yu Li, Si Li, and Boxin Shi. 2022. L-CoDer: Language-Based Colorization with Color-Object Decoupling Transformer. In European Conference on Computer Vision.

[9]

Jia Deng, Wei Dong, Richard Socher, Li-Jia Li, Kai Li, and Li Fei-Fei. 2009. ImageNet: A large-scale hierarchical image database. In 2009 IEEE Conference on Computer Vision and Pattern Recognition. 248–255. https://doi.org/10.1109/CVPR.2009.5206848

[10]

Prafulla Dhariwal and Alex Nichol. 2021. Diffusion Models Beat GANs on Image Synthesis. ArXiv abs/2105.05233 (2021).

[11]

Rinon Gal, Or Patashnik, Haggai Maron, Gal Chechik, and Daniel Cohen-Or. 2021. StyleGAN-NADA: CLIP-Guided Domain Adaptation of Image Generators. ArXiv abs/2108.00946 (2021).

[12]

David Hasler and Sabine E. Suesstrunk. 2003. Measuring colorfulness in natural images. In Human Vision and Electronic Imaging VIII, Bernice E. Rogowitz and Thrasyvoulos N. Pappas (Eds.). Vol. 5007. International Society for Optics and Photonics, SPIE, 87 – 95. https://doi.org/10.1117/12.477378

[13]

Amir Hertz, Ron Mokady, Jay M. Tenenbaum, Kfir Aberman, Yael Pritch, and Daniel Cohen-Or. 2022. Prompt-to-Prompt Image Editing with Cross Attention Control. ArXiv abs/2208.01626 (2022).

[14]

Martin Heusel, Hubert Ramsauer, Thomas Unterthiner, Bernhard Nessler, and Sepp Hochreiter. 2017. GANs Trained by a Two Time-Scale Update Rule Converge to a Local Nash Equilibrium. In Proceedings of the 31st International Conference on Neural Information Processing Systems (Long Beach, California, USA) (NIPS’17). Curran Associates Inc., Red Hook, NY, USA, 6629–6640.

[15]

Jonathan Ho, William Chan, Chitwan Saharia, Jay Whang, Ruiqi Gao, Alexey A. Gritsenko, Diederik P. Kingma, Ben Poole, Mohammad Norouzi, David J. Fleet, and Tim Salimans. 2022a. Imagen Video: High Definition Video Generation with Diffusion Models. ArXiv abs/2210.02303 (2022).

[16]

Jonathan Ho, Ajay Jain, and P. Abbeel. 2020. Denoising Diffusion Probabilistic Models. ArXiv abs/2006.11239 (2020).

[17]

Jonathan Ho, Chitwan Saharia, William Chan, David J. Fleet, Mohammad Norouzi, and Tim Salimans. 2021. Cascaded Diffusion Models for High Fidelity Image Generation. J. Mach. Learn. Res. 23 (2021), 47:1–47:33.

[18]

Jonathan Ho and Tim Salimans. 2022. Classifier-Free Diffusion Guidance. arxiv:2207.12598 [cs.LG]

[19]

Jonathan Ho, Tim Salimans, Alexey Gritsenko, William Chan, Mohammad Norouzi, and David J. Fleet. 2022b. Video Diffusion Models. ArXiv abs/2204.03458 (2022).

[20]

Zhitong Huang, Nanxuan Zhao, and Jing Liao. 2022. Unicolor: A unified framework for multi-modal colorization with transformer. ACM Transactions on Graphics (TOG) 41, 6 (2022), 1–16.

[21]

Xiaozhong Ji, Boyuan Jiang, Donghao Luo, Guangpin Tao, Wenqing Chu, Zhifeng Xie, Chengjie Wang, and Ying Tai. 2022. ColorFormer: Image Colorization via Color Memory Assisted Hybrid-Attention Transformer. In European Conference on Computer Vision.

[22]

Bahjat Kawar, Shiran Zada, Oran Lang, Omer Tov, Hui-Tang Chang, Tali Dekel, Inbar Mosseri, and Michal Irani. 2022. Imagic: Text-Based Real Image Editing with Diffusion Models. ArXiv abs/2210.09276 (2022).

[23]

Geon-Yeong Kim, Kyoungkook Kang, Seong Hyun Kim, Hwayoon Lee, Sehoon Kim, Jonghyun Kim, Seung-Hwan Baek, and Sunghyun Cho. 2022. BigColor: Colorization using a Generative Color Prior for Natural Images. In European Conference on Computer Vision.

[24]

Zhifeng Kong, Wei Ping, Jiaji Huang, Kexin Zhao, and Bryan Catanzaro. 2020. DiffWave: A Versatile Diffusion Model for Audio Synthesis. ArXiv abs/2009.09761 (2020).

[25]

Manoj Kumar, Dirk Weissenborn, and Nal Kalchbrenner. 2021. Colorization Transformer. ArXiv abs/2102.04432 (2021).

[26]

Gustav Larsson, Michael Maire, and Gregory Shakhnarovich. 2016a. Learning Representations for Automatic Colorization. In European Conference on Computer Vision.

[27]

Gustav Larsson, Michael Maire, and Gregory Shakhnarovich. 2016b. Learning Representations for Automatic Colorization. In European Conference on Computer Vision.

[28]

Anat Levin, Dani Lischinski, and Yair Weiss. 2004. Colorization using optimization. ACM SIGGRAPH 2004 Papers (2004).

[29]

Hanyuan Liu, Jinbo Xing, Minshan Xie, Chengze Li, and Tien-Tsin Wong. 2023. Improved Diffusion-based Image Colorization via Piggybacked Models. ArXiv abs/2304.11105 (2023). https://api.semanticscholar.org/CorpusID:258291599

[30]

Varun Manjunatha, Mohit Iyyer, Jordan L. Boyd-Graber, and Larry S. Davis. 2018. Learning to Color from Language. ArXiv abs/1804.06026 (2018).

[31]

Vadim Popov, Ivan Vovk, Vladimir Gogoryan, Tasnima Sadekova, and Mikhail Kudinov. 2021. Grad-TTS: A Diffusion Probabilistic Model for Text-to-Speech. In International Conference on Machine Learning.

[32]

Alec Radford, Jong Wook Kim, Chris Hallacy, Aditya Ramesh, Gabriel Goh, Sandhini Agarwal, Girish Sastry, Amanda Askell, Pamela Mishkin, Jack Clark, 2021. Learning transferable visual models from natural language supervision. In International conference on machine learning. PMLR, 8748–8763.

[33]

Aditya Ramesh, Prafulla Dhariwal, Alex Nichol, Casey Chu, and Mark Chen. 2022. Hierarchical Text-Conditional Image Generation with CLIP Latents. ArXiv abs/2204.06125 (2022).

[34]

Aditya Ramesh, Mikhail Pavlov, Gabriel Goh, Scott Gray, Chelsea Voss, Alec Radford, Mark Chen, and Ilya Sutskever. 2021. Zero-Shot Text-to-Image Generation. ArXiv abs/2102.12092 (2021).

[35]

Robin Rombach, A. Blattmann, Dominik Lorenz, Patrick Esser, and Björn Ommer. 2021. High-Resolution Image Synthesis with Latent Diffusion Models. 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) (2021), 10674–10685.

[36]

Chitwan Saharia, William Chan, Huiwen Chang, Chris A. Lee, Jonathan Ho, Tim Salimans, David J. Fleet, and Mohammad Norouzi. 2022a. Palette: Image-to-Image Diffusion Models. arxiv:2111.05826 [cs.CV]

[37]

Chitwan Saharia, William Chan, Saurabh Saxena, Lala Li, Jay Whang, Emily L. Denton, Seyed Kamyar Seyed Ghasemipour, Burcu Karagol Ayan, Seyedeh Sara Mahdavi, Raphael Gontijo Lopes, Tim Salimans, Jonathan Ho, David J. Fleet, and Mohammad Norouzi. 2022b. Photorealistic Text-to-Image Diffusion Models with Deep Language Understanding. ArXiv abs/2205.11487 (2022).

[38]

Jheng-Wei Su, Hung kuo Chu, and Jia-Bin Huang. 2020. Instance-Aware Image Colorization. 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) (2020), 7965–7974.

[39]

Patricia Vitoria, Lara Raad, and Coloma Ballester. 2019. ChromaGAN: Adversarial Picture Colorization with Semantic Class Distribution. 2020 IEEE Winter Conference on Applications of Computer Vision (WACV) (2019), 2434–2443.

[40]

Xintao Wang, Yu Li, Honglun Zhang, and Ying Shan. 2021. Towards Real-World Blind Face Restoration with Generative Facial Prior. In The IEEE Conference on Computer Vision and Pattern Recognition (CVPR).

[41]

Yinhuai Wang, Jiwen Yu, and Jian Zhang. 2022. Zero-Shot Image Restoration Using Denoising Diffusion Null-Space Model. ArXiv abs/2212.00490 (2022).

[42]

Shuchen Weng, Hao Wu, Zheng Chang, Jiajun Tang, Si Li, and Boxin Shi. 2022. L-CoDe: Language-Based Colorization Using Color-Object Decoupled Conditions. In AAAI Conference on Artificial Intelligence.

[43]

Yanze Wu, Xintao Wang, Yu Li, Honglun Zhang, Xun Zhao, and Ying Shan. 2021. Towards vivid and diverse image colorization with generative color prior. In Proceedings of the IEEE/CVF international conference on computer vision. 14377–14386.

[44]

Menghan Xia, Wenbo Hu, Tien-Tsin Wong, and Jue Wang. 2022. Disentangled Image Colorization via Global Anchors. ACM Transactions on Graphics (TOG) 41 (2022), 1 – 13.

[45]

Lvmin Zhang and Maneesh Agrawala. 2023. Adding Conditional Control to Text-to-Image Diffusion Models. ArXiv abs/2302.05543 (2023).

[46]

Richard Zhang, Phillip Isola, and Alexei A. Efros. 2016. Colorful Image Colorization. In European Conference on Computer Vision.

[47]

Richard Zhang, Jun-Yan Zhu, Phillip Isola, Xinyang Geng, Angela S. Lin, Tianhe Yu, and Alexei A. Efros. 2017. Real-time user-guided image colorization with learned deep priors. ACM Transactions on Graphics (TOG) 36 (2017), 1 – 11.