“DeepRemaster: temporal source-reference attention networks for comprehensive video enhancement” by Iizuka and Simo-Serra

Conference:

Type(s):

Title:

- DeepRemaster: temporal source-reference attention networks for comprehensive video enhancement

Session/Category Title:

- Learning from Video

Presenter(s)/Author(s):

Moderator(s):

Abstract:

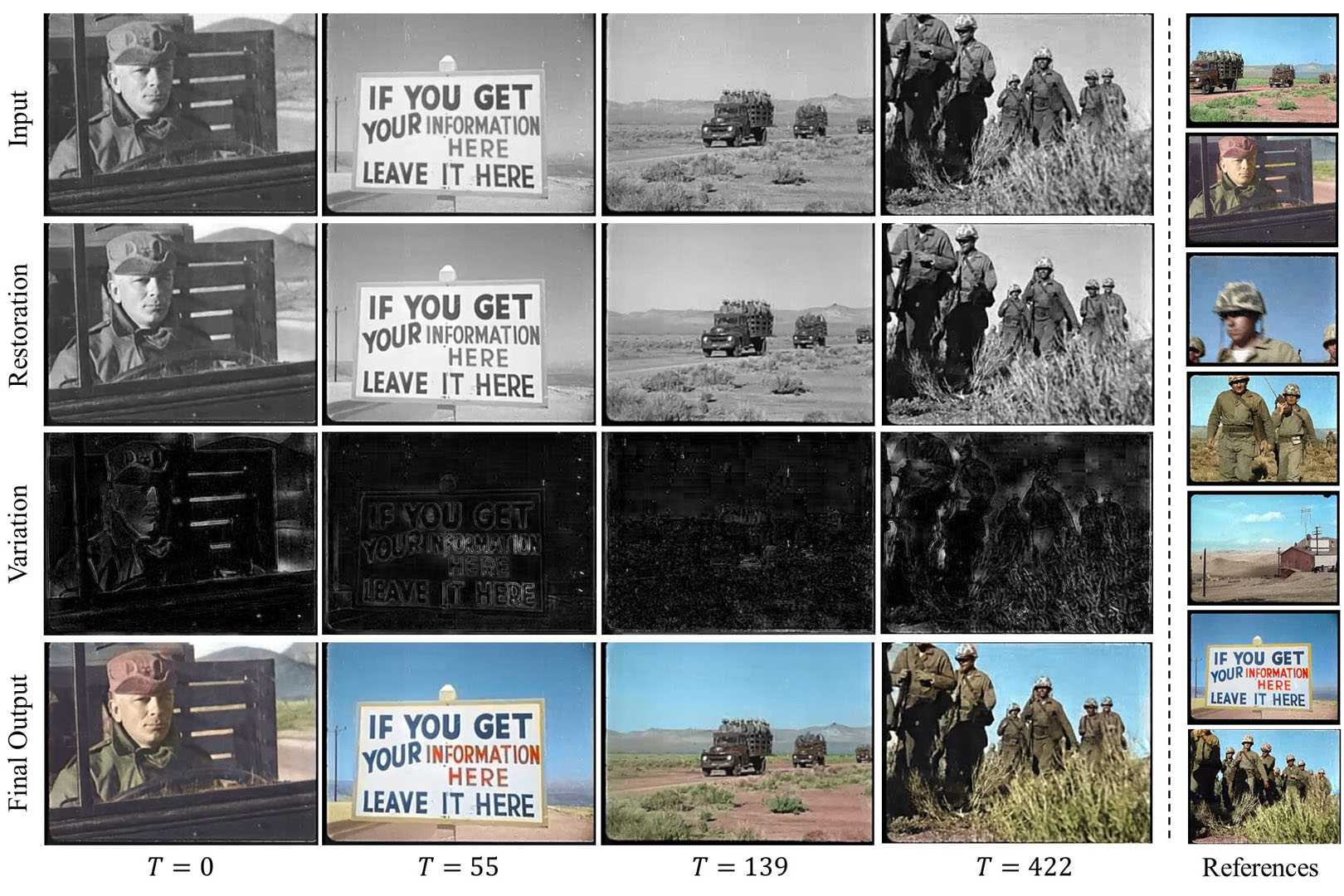

The remastering of vintage film comprises of a diversity of sub-tasks including super-resolution, noise removal, and contrast enhancement which aim to restore the deteriorated film medium to its original state. Additionally, due to the technical limitations of the time, most vintage film is either recorded in black and white, or has low quality colors, for which colorization becomes necessary. In this work, we propose a single framework to tackle the entire remastering task semi-interactively. Our work is based on temporal convolutional neural networks with attention mechanisms trained on videos with data-driven deterioration simulation. Our proposed source-reference attention allows the model to handle an arbitrary number of reference color images to colorize long videos without the need for segmentation while maintaining temporal consistency. Quantitative analysis shows that our framework outperforms existing approaches, and that, in contrast to existing approaches, the performance of our framework increases with longer videos and more reference color images.

References:

1. Sami Abu-El-Haija, Nisarg Kothari, Joonseok Lee, Apostol (Paul) Natsev, George Toderici, Balakrishnan Varadarajan, and Sudheendra Vijayanarasimhan. 2016. YouTube-8M: A Large-Scale Video Classification Benchmark. In arXiv:1609.08675. https://arxiv.org/pdf/1609.08675v1.pdfGoogle Scholar

2. Xiaobo An and Fabio Pellacini. 2008. AppProp: All-pairs Appearance-space Edit Propagation. ACM Transactions on Graphics (Proceedings of SIGGRAPH) 27, 3 (Aug. 2008), 40:1–40:9.Google ScholarDigital Library

3. Dzmitry Bahdanau, Kyunghyun Cho, and Yoshua Bengio. 2015. Neural machine translation by jointly learning to align and translate. (2015).Google Scholar

4. Steve Bako, Thijs Vogels, Brian McWilliams, Mark Meyer, Jan Novák, Alex Harvill, Pradeep Sen, Tony Derose, and Fabrice Rousselle. 2017. Kernel-predicting convolutional networks for denoising Monte Carlo renderings. ACM Transactions on Graphics (Proceedings of SIGGRAPH) 36, 4 (2017), 97–1.Google ScholarDigital Library

5. Nicolas Bonneel, James Tompkin, Kalyan Sunkavalli, Deqing Sun, Sylvain Paris, and Hanspeter Pfister. 2015. Blind Video Temporal Consistency. ACM Transactions on Graphics (Proceedings of SIGGRAPH Asia) 34, 6 (2015).Google Scholar

6. Denny Britz, Anna Goldie, Minh-Thang Luong, and Quoc Le. 2017. Massive Exploration of Neural Machine Translation Architectures. In Conference on Empirical Methods in Natural Language Processing.Google Scholar

7. Chakravarty R Alla Chaitanya, Anton S Kaplanyan, Christoph Schied, Marco Salvi, Aaron Lefohn, Derek Nowrouzezahrai, and Timo Aila. 2017. Interactive reconstruction of Monte Carlo image sequences using a recurrent denoising autoencoder. ACM Transactions on Graphics (Proceedings of SIGGRAPH) 36, 4 (2017), 98.Google Scholar

8. Jianpeng Cheng, Li Dong, and Mirella Lapata. 2016. Long short-termmemory-networks for machine reading. In Conference on Empirical Methods in Natural Language Processing.Google ScholarCross Ref

9. Alex Yong-Sang Chia, Shaojie Zhuo, Raj Kumar Gupta, Yu-Wing Tai, Siu-Yeung Cho, Ping Tan, and Stephen Lin. 2011. Semantic Colorization with Internet Images. ACM Transactions on Graphics (Proceedings of SIGGRAPH Asia) 30, 6 (2011), 156:1–156:8.Google Scholar

10. Djork-Arné Clevert, Thomas Unterthiner, and Sepp Hochreiter. 2015. Fast and accurate deep network learning by exponential linear units (elus). In International Conference on Learning Representations.Google Scholar

11. K. Dabov, A. Foi, V. Katkovnik, and K. Egiazarian. 2007. Image Denoising by Sparse 3-D Transform-Domain Collaborative Filtering. IEEE Transactions on Image Processing 16, 8 (2007), 2080–2095.Google ScholarDigital Library

12. A. Danielyan, V. Katkovnik, and K. Egiazarian. 2012. BM3D Frames and Variational Image Deblurring. IEEE Transactions on Image Processing 21, 4 (2012), 1715–1728.Google ScholarDigital Library

13. Yuchen Fan, Jiahui Yu, and Thomas S Huang. 2018. Wide-activated Deep Residual Networks based Restoration for BPG-compressed Images. In IEEE Conference on Computer Vision and Pattern Recognition Workshops.Google Scholar

14. Mingming He, Dongdong Chen, Jing Liao, Pedro V Sander, and Lu Yuan. 2018. Deep exemplar-based colorization. ACM Transactions on Graphics (Proceedings of SIGGRAPH) 37, 4 (2018), 47.Google ScholarDigital Library

15. Yi-Chin Huang, Yi-Shin Tung, Jun-Cheng Chen, Sung-Wen Wang, and Ja-Ling Wu. 2005. An Adaptive Edge Detection Based Colorization Algorithm and Its Applications. In ACMMM. 351–354.Google Scholar

16. Satoshi Iizuka, Edgar Simo-Serra, and Hiroshi Ishikawa. 2016. Let there be Color!: Joint End-to-end Learning of Global and Local Image Priors for Automatic Image Colorization with Simultaneous Classification. ACM Transactions on Graphics (Proceedings of SIGGRAPH) 35, 4 (2016).Google Scholar

17. Sergey Ioffe and Christian Szegedy. 2015. Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift. In International Conference on Machine Learning.Google ScholarDigital Library

18. Revital Irony, Daniel Cohen-Or, and Dani Lischinski. 2005. Colorization by Example. In Eurographics Conference on Rendering Techniques. 201–210.Google Scholar

19. T. H. Kim, M. S. M. Sajjadi, M. Hirsch, and B. Schölkopf. 2018. Spatio-temporal Transformer Network for Video Restoration. In European Conference on Computer Vision.Google Scholar

20. Wei-Sheng Lai, Jia-Bin Huang, Oliver Wang, Eli Shechtman, Ersin Yumer, and Ming-Hsuan Yang. 2018. Learning Blind Video Temporal Consistency. In European Conference on Computer Vision.Google Scholar

21. Gustav Larsson, Michael Maire, and Gregory Shakhnarovich. 2016. Learning representations for automatic colorization. In European Conference on Computer Vision.Google ScholarCross Ref

22. Stamatios Lefkimmiatis. 2018. Universal Denoising Networks: A Novel CNN Architecture for Image Denoising. In IEEE Conference on Computer Vision and Pattern Recognition.Google Scholar

23. Anat Levin, Dani Lischinski, and Yair Weiss. 2004. Colorization using Optimization. ACM Transactions on Graphics (Proceedings of SIGGRAPH) 23 (2004), 689–694.Google ScholarDigital Library

24. Sifei Liu, Guangyu Zhong, Shalini De Mello, Jinwei Gu, Varun Jampani, Ming-Hsuan Yang, and Jan Kautz. 2018. Switchable Temporal Propagation Network. In European Conference on Computer Vision.Google Scholar

25. Xiaopei Liu, Liang Wan, Yingge Qu, Tien-Tsin Wong, Stephen Lin, Chi-Sing Leung, and Pheng-Ann Heng. 2008. Intrinsic Colorization. ACM Transactions on Graphics (Proceedings of SIGGRAPH Asia) 27, 5 (December 2008), 152:1–152:9.Google ScholarDigital Library

26. Thang Luong, Hieu Pham, and Christopher D Manning. 2015. Effective Approaches to Attention-based Neural Machine Translation. In Conference on Empirical Methods in Natural Language Processing.Google ScholarCross Ref

27. M. Maggioni, G. Boracchi, A. Foi, and K. Egiazarian. 2012. Video Denoising, Deblocking, and Enhancement Through Separable 4-D Nonlocal Spatiotemporal Transforms. IEEE Transactions on Image Processing 21, 9 (2012), 3952–3966.Google ScholarDigital Library

28. M. Maggioni, E. Sánchez-Monge, and A. Foi. 2014. Joint Removal of Random and Fixed-Pattern Noise Through Spatiotemporal Video Filtering. IEEE Transactions on Image Processing 23, 10 (2014), 4282–4296.Google ScholarCross Ref

29. D. Martin, C. Fowlkes, D. Tal, and J. Malik. 2001. A Database of Human Segmented Natural Images and its Application to Evaluating Segmentation Algorithms and Measuring Ecological Statistics. In International Conference on Computer Vision.Google Scholar

30. Simone Meyer, Victor Cornillère, Abdelaziz Djelouah, Christopher Schroers, and Markus Gross. 2018. Deep Video Color Propagation. In British Machine Vision Conference.Google Scholar

31. Ankur P Parikh, Oscar Täckström, Dipanjan Das, and Jakob Uszkoreit. 2016. A decomposable attention model for natural language inference. In Conference on Empirical Methods in Natural Language Processing.Google ScholarCross Ref

32. Niki Parmar, Ashish Vaswani, Jakob Uszkoreit, Łukasz Kaiser, Noam Shazeer, and Alexander Ku. 2018. Image Transformer. In International Conference on Machine Learning.Google Scholar

33. François Pitié, Anil C. Kokaram, and Rozenn Dahyot. 2007. Automated Colour Grading Using Colour Distribution Transfer. Computer Vision and Image Understanding 107, 1–2 (July 2007), 123–137.Google ScholarDigital Library

34. Erik Reinhard, Michael Ashikhmin, Bruce Gooch, and Peter Shirley. 2001. Color Transfer between Images. IEEE Computer Graphics and Applications 21, 5 (sep 2001), 34–41.Google ScholarDigital Library

35. Patsorn Sangkloy, Jingwan Lu, Chen Fang, Fisher Yu, and James Hays. 2017. Scribbler: Controlling deep image synthesis with sketch and color. In IEEE Conference on Computer Vision and Pattern Recognition.Google ScholarCross Ref

36. Wenzhe Shi, Jose Caballero, Ferenc Huszár, Johannes Totz, Andrew P Aitken, Rob Bishop, Daniel Rueckert, and Zehan Wang. 2016. Real-time single image and video super-resolution using an efficient sub-pixel convolutional neural network. In IEEE Conference on Computer Vision and Pattern Recognition.Google ScholarCross Ref

37. Yu-Wing Tai, Jiaya Jia, and Chi-Keung Tang. 2005. Local Color Transfer via Probabilistic Segmentation by Expectation-Maximization. In IEEE Conference on Computer Vision and Pattern Recognition. 747–754.Google Scholar

38. Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N Gomez, Łukasz Kaiser, and Illia Polosukhin. 2017. Attention is all you need. In Conference on Neural Information Processing Systems.Google Scholar

39. Thijs Vogels, Fabrice Rousselle, Brian McWilliams, Gerhard Röthlin, Alex Harvill, David Adler, Mark Meyer, and Jan Novák. 2018. Denoising with kernel prediction and asymmetric loss functions. ACM Transactions on Graphics (Proceedings of SIGGRAPH) 37, 4 (2018), 124.Google ScholarDigital Library

40. Carl Vondrick, Abhinav Shrivastava, Alireza Fathi, Sergio Guadarrama, and Kevin Murphy. 2018. Tracking emerges by colorizing videos. In European Conference on Computer Vision.Google ScholarCross Ref

41. Xiaolong Wang, Ross Girshick, Abhinav Gupta, and Kaiming He. 2018. Non-local neural networks. In IEEE Conference on Computer Vision and Pattern Recognition.Google ScholarCross Ref

42. Tomihisa Welsh, Michael Ashikhmin, and Klaus Mueller. 2002. Transferring Color to Greyscale Images. ACM Transactions on Graphics (Proceedings of SIGGRAPH) 21, 3 (July 2002), 277–280.Google ScholarDigital Library

43. Fuzhang Wu, Weiming Dong, Yan Kong, Xing Mei, Jean-Claude Paul, and Xiaopeng Zhang. 2013. Content-Based Colour Transfer. 32, 1 (2013), 190–203.Google Scholar

44. Kelvin Xu, Jimmy Ba, Ryan Kiros, Kyunghyun Cho, Aaron Courville, Ruslan Salakhudinov, Rich Zemel, and Yoshua Bengio. 2015. Show, attend and tell: Neural image caption generation with visual attention. In International Conference on Machine Learning.Google ScholarDigital Library

45. Li Xu, Qiong Yan, and Jiaya Jia. 2013. A Sparse Control Model for Image and Video Editing. ACM Transactions on Graphics (Proceedings of SIGGRAPH Asia) 32, 6 (Nov. 2013), 197:1–197:10.Google ScholarDigital Library

46. Jiahui Yu, Yuchen Fan, Jianchao Yang, Ning Xu, Zhaowen Wang, Xinchao Wang, and Thomas S. Huang. 2018. Wide Activation for Efficient and Accurate Image Super-Resolution. CoRR abs/1808.08718 (2018). arXiv:1808.08718Google Scholar

47. Matthew D. Zeiler. 2012. ADADELTA: An Adaptive Learning Rate Method. CoRR abs/1212.5701 (2012).Google Scholar

48. Han Zhang, Ian Goodfellow, Dimitris Metaxas, and Augustus Odena. 2018a. Self-Attention Generative Adversarial Networks. arXiv preprint arXiv:1805.08318 (2018).Google Scholar

49. Kai Zhang, Wangmeng Zuo, Yunjin Chen, Deyu Meng, and Lei Zhang. 2017b. Beyond a gaussian denoiser: Residual learning of deep cnn for image denoising. IEEE Transactions on Image Processing 26, 7 (2017), 3142–3155.Google ScholarDigital Library

50. Kai Zhang, Wangmeng Zuo, and Lei Zhang. 2018b. FFDNet: Toward a Fast and Flexible Solution for CNN based Image Denoising. IEEE Transactions on Image Processing (2018).Google Scholar

51. Richard Zhang, Phillip Isola, and Alexei A Efros. 2016. Colorful image colorization. In European Conference on Computer Vision.Google ScholarCross Ref

52. Richard Zhang, Jun-Yan Zhu, Phillip Isola, Xinyang Geng, Angela S Lin, Tianhe Yu, and Alexei A Efros. 2017a. Real-Time User-Guided Image Colorization with Learned Deep Priors. ACM Transactions on Graphics (Proceedings of SIGGRAPH) 9, 4 (2017).Google Scholar